- Data Structure In the Era of Big Data

- https://dataconomy.com/2017/04/big-data-101-data-structures/

- http://web.stanford.edu/class/cs168/

- https://www.ics.uci.edu/~pattis/ICS-23/

- https://redislabs.com/redis-enterprise/data-structures/

- Efficient Large Scale Maximum Inner Product Search

- https://www.epaperpress.com/vbhash/index.html

- XSearch: Distributed Indexing and Search in Large-Scale File Systems

- Data Structures for Big Data

- https://cs.uwaterloo.ca/~imunro/cs840/CS840.html

- https://graphics.stanford.edu/courses/cs468-06-fall/

- CS369G: Algorithmic Techniques for Big Data

- http://www.jblumenstock.com/teaching/course=info251

A vector search engine (also known as a neural search engine or deep search engine) uses deep learning models to encode data sets into meaningful vector representations, where the distance between vectors represents the similarity between items.

- https://www.microsoft.com/en-us/ai/ai-lab-vector-search

- https://github.com/textkernel/vector-search-plugin

- https://github.com/pingcap/awesome-database-learning

- http://ekzhu.com/datasketch/index.html

- https://github.com/gakhov/pdsa

- https://pdsa.readthedocs.io/en/latest/

- https://iq.opengenus.org/probabilistic-data-structures/

- Probabilistic Data Structures and Algorithms for Big Data Applications

- PROBABILISTIC HASHING TECHNIQUES FOR BIG DATA

- COMP 480/580 Probabilistic Algorithms and Data Structures

- COMS 4995: Randomized Algorithms

To quote the Wikipedia article on hash functions:

A hash function is any function that can be used to map data of arbitrary size to fixed-size values. The values returned by a hash function are called hash values, hash codes, digests, or simply hashes. The values are used to index a fixed-size table called a hash table. Use of a hash function to index a hash table is called hashing or scatter storage addressing.

In MongoDB, hashed indexes use a hashing function to compute the hash of the value of the index field. The hashing function collapses embedded documents and computes the hash for the entire value, but it does not support multi-key (i.e. array) indexes.

Real-life data tends to get corrupted because machines (and humans) are never as reliable as we wish. One efficient way to make sure your data wasn't unintentionally modified is to generate some kind of hash. That hash should be unique, compact and efficient:

- unique: any kind of modification to the data shall generate a different hash

- compact: as few bits or bytes as possible to keep the overhead low

- efficient: use little computing resources, i.e. fast and low memory usage
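
As a minimal sketch of this integrity check, the snippet below stores a digest next to the data and compares it later. The choice of SHA-256 and CRC-32 from the Python standard library is an assumption made for illustration; the text above does not prescribe a particular function. Note that no fixed-size hash can be strictly unique, so "unique" in practice means that a collision is very unlikely.

```python
import hashlib
import zlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


def checksum(data: bytes) -> int:
    """Return the CRC-32 of data: very compact and fast, but only
    suitable for detecting accidental (not malicious) changes."""
    return zlib.crc32(data) & 0xFFFFFFFF


original = b"hello, big data"
corrupted = b"hello, big dat4"  # one byte changed

stored = digest(original)           # saved alongside the data
print(digest(original) == stored)   # unchanged data still matches
print(digest(corrupted) == stored)  # modified data no longer matches
```

CRC-32 trades collision resistance for compactness (4 bytes), while SHA-256 spends 32 bytes to make accidental collisions practically impossible.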

Hashing models can be broadly divided into two categories: quantization and projection. The projection models focus on learning a low-dimensional transformation of the input data in a way that encourages related data points to be closer together in the new space. In contrast, the quantization models seek to convert those projections into binary codes by using a thresholding mechanism. The projection branch can be further divided into data-independent, data-dependent (unsupervised) and data-dependent (supervised), depending on whether the projections are influenced by the distribution of the data or by available class labels.
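
The two stages can be sketched as follows, assuming the simplest data-independent case: a random Gaussian projection followed by thresholding at zero. The function names (`random_projection`, `project`, `quantize`, `hamming`) are hypothetical and chosen only for this illustration.

```python
import random


def random_projection(dim_in, n_bits, seed=0):
    """Projection stage (data-independent): one random Gaussian
    direction per output bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim_in)] for _ in range(n_bits)]


def project(W, x):
    """Apply the linear projection W to the vector x."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in W]


def quantize(z, threshold=0.0):
    """Quantization stage: threshold every projected value into one bit."""
    return [1 if v > threshold else 0 for v in z]


def hamming(a, b):
    """Number of positions in which two binary codes differ."""
    return sum(u != v for u, v in zip(a, b))


W = random_projection(dim_in=4, n_bits=16)
x = [1.0, 0.5, -0.2, 0.3]
code_x = quantize(project(W, x))
code_2x = quantize(project(W, [2 * v for v in x]))   # same direction as x
code_neg = quantize(project(W, [-v for v in x]))     # opposite direction

print(hamming(code_x, code_2x))   # codes agree: only the direction matters
print(hamming(code_x, code_neg))  # codes disagree on every bit
```

A data-dependent method would replace `random_projection` with a matrix learned from the data (and possibly from labels), leaving the quantization step unchanged.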

- Hash function

- https://github.com/caoyue10/DeepHash-Papers

- https://zhuanlan.zhihu.com/p/43569947

- https://www.tutorialspoint.com/dbms/dbms_hashing.htm

- Indexing based on Hashing

- https://docs.mongodb.com/manual/core/index-hashed/

- https://www.cs.cmu.edu/~adamchik/15-121/lectures/Hashing/hashing.html

- https://www2.cs.sfu.ca/CourseCentral/354/zaiane/material/notes/Chapter11/node15.html

- https://github.com/Pfzuo/Level-Hashing

- https://thehive.ai/insights/learning-hash-codes-via-hamming-distance-targets

- Various hashing methods for image retrieval and serves as the baselines

- http://papers.nips.cc/paper/5893-practical-and-optimal-lsh-for-angular-distance

- Hash functions: An empirical comparison

The basis of the FNV hash algorithm was taken from an idea sent as reviewer comments to the IEEE POSIX P1003.2 committee by Glenn Fowler and Phong Vo back in 1991. In a subsequent ballot round, Landon Curt Noll improved on their algorithm. Some people tried this hash and found that it worked rather well. In an email message to Landon, they named it the "Fowler/Noll/Vo" or FNV hash.

FNV hashes are designed to be fast while maintaining a low collision rate. The FNV speed allows one to quickly hash lots of data while maintaining a reasonable collision rate. The high dispersion of the FNV hashes makes them well suited for hashing nearly identical strings such as URLs, hostnames, filenames, text, IP addresses, etc.
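
For illustration, here is a small sketch of the 32-bit FNV-1a variant. Picking FNV-1a (XOR, then multiply) and the 32-bit width is an assumption for this example; the FNV family also includes FNV-1 and larger widths.

```python
FNV32_OFFSET_BASIS = 0x811C9DC5  # 2166136261
FNV32_PRIME = 0x01000193         # 16777619


def fnv1a_32(data: bytes) -> int:
    """32-bit FNV-1a: XOR each byte into the hash, then multiply by the prime."""
    h = FNV32_OFFSET_BASIS
    for byte in data:
        h ^= byte
        h = (h * FNV32_PRIME) & 0xFFFFFFFF  # keep the result in 32 bits
    return h


print(hex(fnv1a_32(b"a")))  # 0xe40c292c
# Nearly identical strings still get different hash values.
print(hex(fnv1a_32(b"http://example.com/a")))
print(hex(fnv1a_32(b"http://example.com/b")))
```

The whole loop is one XOR and one multiplication per byte, which is where the speed comes from.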

Locality-Sensitive Hashing (LSH) is a class of methods for the nearest neighbor search problem, which is defined as follows: given a dataset of points in a metric space (e.g., R^d with the Euclidean distance), our goal is to preprocess the dataset so that we can quickly answer nearest neighbor queries: given a previously unseen query point, we want to find one or several points in our dataset that are closest to the query point.
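
One way to make this concrete is the classic random-projection scheme for Euclidean distance, where each hash is `floor((a·x + b) / w)` with a Gaussian vector `a` and a random offset `b`. The sketch below is a toy version under that assumption; the class name `EuclideanLSH` and the default parameters (`n_hashes`, `n_tables`, `width`) are hypothetical and not tuned.

```python
import math
import random
from collections import defaultdict


class EuclideanLSH:
    def __init__(self, dim, n_hashes=4, n_tables=8, width=4.0, seed=0):
        rng = random.Random(seed)
        self.width = width
        self.points = []
        self.tables = []  # one (hash functions, buckets) pair per table
        for _ in range(n_tables):
            funcs = [([rng.gauss(0.0, 1.0) for _ in range(dim)],
                      rng.uniform(0.0, width))
                     for _ in range(n_hashes)]
            self.tables.append((funcs, defaultdict(list)))

    def _key(self, funcs, x):
        # Concatenate several hashes so that only close points share a bucket.
        return tuple(
            math.floor((sum(a_i * x_i for a_i, x_i in zip(a, x)) + b) / self.width)
            for a, b in funcs
        )

    def add(self, x):
        """Preprocessing: store x in one bucket of every table."""
        idx = len(self.points)
        self.points.append(x)
        for funcs, buckets in self.tables:
            buckets[self._key(funcs, x)].append(idx)

    def query(self, q, k=1):
        """Collect colliding points from all tables, then rank them exactly."""
        candidates = set()
        for funcs, buckets in self.tables:
            candidates.update(buckets.get(self._key(funcs, q), ()))
        ranked = sorted(candidates, key=lambda i: math.dist(q, self.points[i]))
        return ranked[:k]


points = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [9.0, -3.0], [-4.0, 7.5]]
index = EuclideanLSH(dim=2)
for p in points:
    index.add(p)

# An indexed point always collides with itself, so it is returned first.
print(index.query([9.0, -3.0], k=1))
```

Only the candidates that share a bucket with the query are compared exactly, which is what makes the search sub-linear on large datasets; the price is that a true nearest neighbor can occasionally be missed.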

- http://web.mit.edu/andoni/www/LSH/index.html

- http://yongyuan.name/blog/vector-ann-search.html

- https://github.com/arbabenko/GNOIMI

- https://github.com/willard-yuan/hashing-baseline-for-image-retrieval

- http://yongyuan.name/habir/

- 4 Pictures that Explain LSH - Locality Sensitive Hashing Tutorial

- https://eng.uber.com/lsh/

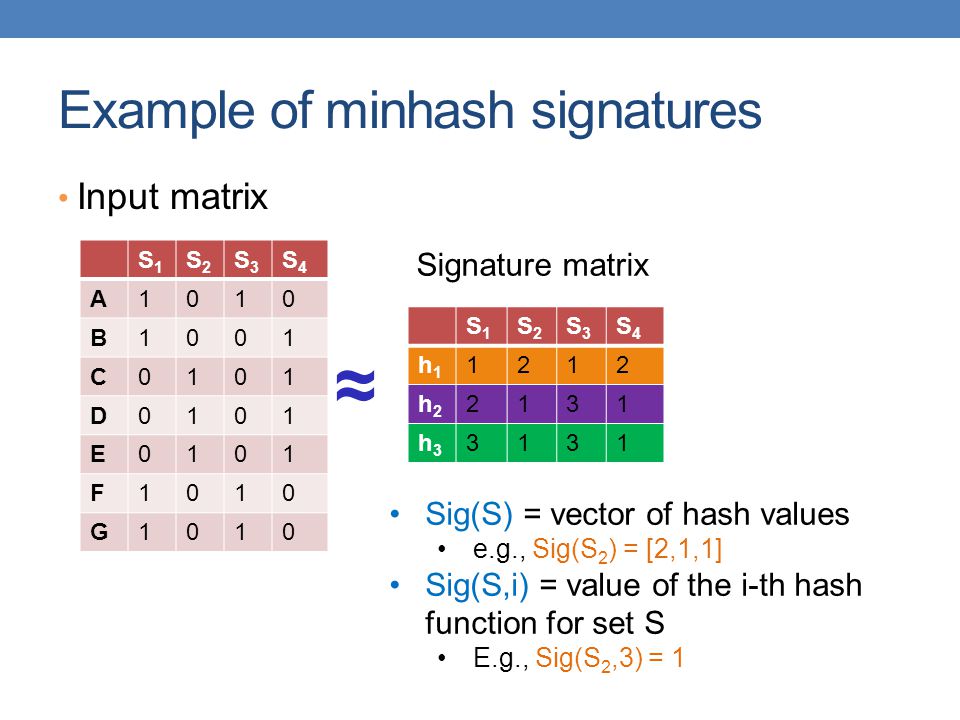

- MinHash Tutorial with Python Code

- MinHash Sketches: A Brief Survey

- https://skeptric.com/minhash-lsh/

- Asymmetric Minwise Hashing for Indexing Binary Inner Products and Set Containment

- GPU-based minwise hashing

- Ranking Preserving Hashing for Fast Similarity Search

- Unsupervised Rank-Preserving Hashing for Large-Scale Image Retrieval

- http://math.ucsd.edu/~dmeyer/research/publications/rankhash/rankhash.pdf

- Order Preserving Hashing for Approximate Nearest Neighbor Search

- https://songdj.github.io/

Cuckoo Hashing is a technique for resolving collisions in hash tables that produces a dictionary with constant-time worst-case lookup and deletion operations as well as amortized constant-time insertion operations.
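
A minimal sketch of the idea, assuming the common two-table variant: every key has exactly one candidate slot per table, so lookups and deletions inspect at most two slots, while insertions may evict ("kick") existing entries and occasionally trigger a rebuild. The class name, the `max_kicks` limit and the grow-and-reseed rebuild policy are assumptions made for this illustration.

```python
class CuckooHashTable:
    def __init__(self, capacity=8, max_kicks=32):
        self.capacity = capacity
        self.max_kicks = max_kicks
        self.seed = 0
        self.tables = [[None] * capacity, [None] * capacity]
        self.size = 0

    def _slot(self, which, key):
        # Two hash functions, derived by salting Python's built-in hash.
        return hash((self.seed, which, key)) % self.capacity

    def get(self, key, default=None):
        for which in (0, 1):  # at most two probes
            entry = self.tables[which][self._slot(which, key)]
            if entry is not None and entry[0] == key:
                return entry[1]
        return default

    def delete(self, key):
        for which in (0, 1):  # at most two probes
            i = self._slot(which, key)
            entry = self.tables[which][i]
            if entry is not None and entry[0] == key:
                self.tables[which][i] = None
                self.size -= 1
                return True
        return False

    def _try_insert(self, entry):
        """Place entry, evicting occupants; return a homeless entry or None."""
        which = 0
        for _ in range(self.max_kicks):
            i = self._slot(which, entry[0])
            entry, self.tables[which][i] = self.tables[which][i], entry
            if entry is None:
                return None
            which = 1 - which  # the evicted entry moves to its other table
        return entry

    def _rehash(self):
        """Rebuild with doubled capacity and fresh hash functions."""
        entries = [e for table in self.tables for e in table if e is not None]
        while True:
            self.capacity *= 2
            self.seed += 1
            self.tables = [[None] * self.capacity, [None] * self.capacity]
            if all(self._try_insert(e) is None for e in entries):
                return

    def put(self, key, value):
        for which in (0, 1):  # overwrite an existing key in place
            i = self._slot(which, key)
            entry = self.tables[which][i]
            if entry is not None and entry[0] == key:
                self.tables[which][i] = (key, value)
                return
        entry = (key, value)
        while True:
            entry = self._try_insert(entry)
            if entry is None:
                break
            self._rehash()  # too many kicks: rebuild, then place the leftover
        self.size += 1


table = CuckooHashTable()
for n in range(1000):
    table.put(n, n * n)
print(table.get(12), table.get(-1))  # 144 None
```

The occasional rebuild is why insertion is only amortized constant time, while `get` and `delete` never touch more than two slots.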

- An Overview of Cuckoo Hashing

- Some Open Questions Related to Cuckoo Hashing

- Practical Survey on Hash Tables

- Elastic Cuckoo Page Tables: Rethinking Virtual Memory Translation for Parallelism

- MinCounter: An Efficient Cuckoo Hashing Scheme for Cloud Storage Systems

- Bloom Filters, Cuckoo Hashing, Cuckoo Filters, Adaptive Cuckoo Filters and Learned Bloom Filters

- https://courses.cs.washington.edu/courses/cse452/18sp/ConsistentHashing.pdf

- https://nlogn.in/consistent-hashing-system-design/

- https://www.ic.unicamp.br/~celio/peer2peer/structured-theory/consistent-hashing.pdf

- https://www.cs.utah.edu/~jeffp/teaching/cs7931-S15/cs7931/3-rp.pdf

- Random Projection and the Assembly Hypothesis

- Random Projections and Sampling Algorithms for Clustering of High-Dimensional Polygonal Curves

- https://www.dennisrohde.work/uploads/poster_neurips19.pdf

- https://www.dennisrohde.work/

- Brain computation by assemblies of neurons

- https://github.com/wilseypa/rphash

- https://www.cs.rice.edu/~bc20/

- https://www.cs.rice.edu/~as143/

- Locality Sensitive Sampling for Extreme-Scale Optimization and Deep Learning

- http://mlwiki.org/index.php/Bit_Sampling_LSH

- Mutual Information Estimation using LSH Sampling

The fruit fly Drosophila's olfactory circuit has inspired a new locality sensitive hashing (LSH) algorithm, FlyHash. In contrast with classical LSH algorithms that produce low dimensional hash codes, FlyHash produces sparse high-dimensional hash codes and has also been shown to have superior empirical performance compared to classical LSH algorithms in similarity search. However, FlyHash uses random projections and cannot learn from data. Building on inspiration from FlyHash and the ubiquity of sparse expansive representations in neurobiology, our work proposes a novel hashing algorithm BioHash that produces sparse high dimensional hash codes in a data-driven manner. We show that BioHash outperforms previously published benchmarks for various hashing methods. Since our learning algorithm is based on a local and biologically plausible synaptic plasticity rule, our work provides evidence for the proposal that LSH might be a computational reason for the abundance of sparse expansive motifs in a variety of biological systems. We also propose a convolutional variant BioConvHash that further improves performance. From the perspective of computer science, BioHash and BioConvHash are fast, scalable and yield compressed binary representations that are useful for similarity search.
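
To make the contrast with classical LSH concrete, the following is a simplified sketch of a FlyHash-style code: a sparse random projection expands the input to a higher dimension, and a winner-take-all step keeps only the `k` most active units. This is an assumed, binarized toy variant; the function names and parameters are hypothetical, and BioHash itself (which learns the projection from data) is not shown.

```python
import random


def make_flyhash(dim_in, dim_out, samples_per_unit, seed=0):
    """Sparse random projection: each output unit is wired to a small
    random subset of the input coordinates."""
    rng = random.Random(seed)
    return [rng.sample(range(dim_in), samples_per_unit) for _ in range(dim_out)]


def flyhash(connections, x, k):
    """Expand x to len(connections) activations, then keep the top k
    (winner-take-all) as a sparse binary code."""
    activations = [sum(x[i] for i in idx) for idx in connections]
    winners = sorted(range(len(activations)),
                     key=lambda j: activations[j], reverse=True)[:k]
    code = [0] * len(activations)
    for j in winners:
        code[j] = 1
    return code


# 10 input dimensions expanded to 40, with only 4 active bits.
connections = make_flyhash(dim_in=10, dim_out=40, samples_per_unit=3)
x = [0.9, 0.1, 0.4, 0.0, 0.7, 0.2, 0.3, 0.8, 0.5, 0.6]
code = flyhash(connections, x, k=4)
print(len(code), sum(code))  # 40 4
```

The output is longer than the input but almost entirely zeros, which is the "sparse expansive" representation the abstract refers to.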

- Bio-Inspired Hashing for Unsupervised Similarity Search

- https://mitibmwatsonailab.mit.edu/research/blog/bio-inspired-hashing-for-unsupervised-similarity-search/

- https://deepai.org/publication/bio-inspired-hashing-for-unsupervised-similarity-search

- https://github.com/josebetomex/BioHash

- http://www.people.vcu.edu/~gasmerom/MAT131/repnearest.html

- https://spaces.ac.cn/archives/8159

- https://github.com/dataplayer12/Fly-LSH

- https://science.sciencemag.org/content/358/6364/793/tab-pdf

- https://arxiv.org/abs/1812.01844

Bloom filters, counting Bloom filters, and multi-hashing tables

- https://www.geeksforgeeks.org/bloom-filters-introduction-and-python-implementation/

- http://www.cs.jhu.edu/~fabian/courses/CS600.624/slides/bloomslides.pdf

- https://www2021.thewebconf.org/papers/consistent-sampling-through-extremal-process/

- Cache-, Hash- and Space-Efficient Bloom Filters

- Fluid Co-processing: GPU Bloom-filters for CPU Joins

- https://diegomestre2.github.io/

- Xor Filters: Faster and Smaller Than Bloom Filters

- https://www.cs.princeton.edu/~chazelle/pubs/soda-rev04.pdf

- https://webee.technion.ac.il/~ayellet/Ps/nelson.pdf

- https://www.cnblogs.com/linguanh/p/10460421.html

- https://www.runoob.com/redis/redis-hyperloglog.html

- http://homepage.cs.uiowa.edu/~ghosh/

- http://ticki.github.io/blog/skip-lists-done-right/

- https://lotabout.me/2018/skip-list/

- Skip Lists: A Probabilistic Alternative to Balanced Trees

- https://florian.github.io/count-min-sketch/

- Count Min Sketch: The Art and Science of Estimating Stuff

- http://dimacs.rutgers.edu/~graham/pubs/papers/cmencyc.pdf

- https://github.com/barrust/count-min-sketch

- http://hkorte.github.io/slides/cmsketch/

- Data Sketching

- What is Data Sketching, and Why Should I Care?

- http://www.cse.ust.hk/faculty/arya/publications.html

- https://sunju.org/research/subspace-search/

- http://www.cs.memphis.edu/~nkumar/

- NNS Benchmark: Evaluating Approximate Nearest Neighbor Search Algorithms in High Dimensional Euclidean Space

- Datasets for approximate nearest neighbor search

- http://mzwang.top/2021/03/12/proximity-graph-monotonicity/

- http://math.sfsu.edu/beck/teach/870/brendan.pdf

- Practical Graph Mining With R

- https://graphworkflow.com/decoding/gestalt/proximity/

- http://mzwang.top/about/

- http://web.mit.edu/8.334/www/grades/projects/projects17/KevinZhou.pdf

- Approximate nearest neighbor algorithm based on navigable small world graphs

- Navigable Small-World Networks

- https://github.com/js1010/cuhnsw

- https://github.com/nmslib/hnswlib

- https://www.libhunt.com/l/cuda/t/hnsw

- https://erikbern.com/2015/10/01/nearest-neighbors-and-vector-models-part-2-how-to-search-in-high-dimensional-spaces.html

- fast library for ANN search and KNN graph construction

- Building KNN Graph for Billion High Dimensional Vectors Efficiently

- https://github.com/aaalgo/kgraph

- https://www.msra.cn/zh-cn/news/features/approximate-nearest-neighbor-search

- https://static.googleusercontent.com/media/research.google.com/zh-CN//pubs/archive/37599.pdf

- Three Success Stories About Compact Data Structures

- Image Similarity Search with Compact Data Structures

- 02951 Compact Data Structures

- Computation over Compressed Structured Data

- http://www2.compute.dtu.dk/~phbi/

- http://www2.compute.dtu.dk/~inge/

- https://github.com/fclaude/libcds2

- https://diegocaro.github.io/thesis/index.html

- http://www.birdsproject.eu/course-compact-data-structures-during-udcs-international-summer-school-2018/

In computer science, a succinct data structure is a data structure which uses an amount of space that is "close" to the information-theoretic lower bound, but (unlike other compressed representations) still allows for efficient query operations.
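
The flavor of such structures can be sketched with a bit vector that answers `rank` queries (how many 1 bits precede position `i`) using only a small table of per-block counts on top of the raw bits. This is an illustrative toy: the class name `RankBitVector` and the block size are assumptions, and Python objects are of course far from the information-theoretic bound.

```python
class RankBitVector:
    BLOCK = 64  # bits per block

    def __init__(self, bits):
        self.n = len(bits)
        self.words = []         # the raw bits, packed 64 per integer
        self.block_rank = [0]   # number of 1 bits before each block
        for start in range(0, self.n, self.BLOCK):
            word = 0
            for offset, bit in enumerate(bits[start:start + self.BLOCK]):
                if bit:
                    word |= 1 << offset
            self.words.append(word)
            self.block_rank.append(self.block_rank[-1] + bin(word).count("1"))

    def access(self, i):
        """Return the bit stored at position i."""
        return (self.words[i // self.BLOCK] >> (i % self.BLOCK)) & 1

    def rank1(self, i):
        """Return the number of 1 bits in positions [0, i)."""
        block, offset = divmod(i, self.BLOCK)
        if offset == 0:
            return self.block_rank[block]
        mask = (1 << offset) - 1  # keep only the bits below `offset`
        return self.block_rank[block] + bin(self.words[block] & mask).count("1")


bits = [1, 0, 1, 1, 0, 0, 1, 0] * 20  # 160 bits
bv = RankBitVector(bits)
print(bv.rank1(8), bv.rank1(160))  # 4 80
```

Each query reads one precomputed counter and one word, so the data never has to be decompressed or scanned to be queried.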

- https://www.cs.helsinki.fi/group/suds/

- https://www.cs.helsinki.fi/group/algodan/

- http://simongog.github.io/

- http://algo2.iti.kit.edu/gog/homepage/index.html

- https://arxiv.org/abs/1904.02809

- Succinct Data Structures-Exploring succinct trees in theory and practice

- Presentation "COBS: A Compact Bit-Sliced Signature Index" at SPIRE 2019 (Best Paper Award)

The Succinct Data Structure Library (SDSL) contains many succinct data structures from the following categories:

- Bit-vectors supporting Rank and Select

- Integer Vectors

- Wavelet Trees

- Compressed Suffix Arrays (CSA)

- Balanced Parentheses Representations

- Longest Common Prefix (LCP) Arrays

- Compressed Suffix Trees (CST)

- Range Minimum/Maximum Query (RMQ) Structures

- https://github.com/simongog/sdsl-lite

- https://github.com/simongog/sdsl-lite/wiki/Literature

- https://github.com/simongog/

- https://www2.eecs.berkeley.edu/Pubs/TechRpts/2009/EECS-2009-101.pdf

- https://arxiv.org/abs/1908.00672

By using hash codes to construct an index, we can achieve constant or sub-linear search time complexity.

Hash functions are learned from a given training dataset.
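
The index side of this can be sketched independently of how the hash function is obtained. Below, binary codes are represented as Python integers and stored in a dictionary; a lookup probes the exact bucket and, optionally, all buckets within a small Hamming radius. The class name `HashCodeIndex` and the hand-written codes are assumptions for illustration; in learning to hash, the codes would come from the learned function.

```python
from collections import defaultdict
from itertools import combinations


class HashCodeIndex:
    def __init__(self, n_bits):
        self.n_bits = n_bits
        self.buckets = defaultdict(list)  # binary code -> items

    def add(self, code, item):
        self.buckets[code].append(item)

    def lookup(self, code, radius=0):
        """Return the items whose code is within `radius` bit flips of `code`."""
        results = []
        for r in range(radius + 1):
            for positions in combinations(range(self.n_bits), r):
                probe = code
                for p in positions:
                    probe ^= 1 << p  # flip bit p
                results.extend(self.buckets.get(probe, ()))
        return results


index = HashCodeIndex(n_bits=4)
index.add(0b1010, "a")
index.add(0b1011, "b")
index.add(0b0101, "c")

print(index.lookup(0b1010))            # ['a']
print(index.lookup(0b1010, radius=1))  # ['a', 'b']
```

With `radius=0` the lookup is a single dictionary access, independent of the number of stored items; larger radii trade more probes for better recall.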

- https://cs.nju.edu.cn/lwj/slides/L2H.pdf

- Repository of Must Read Papers on Learning to Hash

- Learning to Hash: Paper, Code and Dataset

- Learning to Hash with Binary Reconstructive Embeddings

- http://zpascal.net/cvpr2015/Lai_Simultaneous_Feature_Learning_2015_CVPR_paper.pdf

- https://github.com/twistedcubic/learn-to-hash

- https://cs.nju.edu.cn/lwj/slides/hash2.pdf

- Learning to hash for large scale image retrieval

- http://stormluke.me/learning-to-hash-intro/

- Learning to Hash for Source Separation

- https://github.com/sunwookimiub/BLSH