diff --git a/book/_config.yml b/book/_config.yml

index d12c700..94ea7f4 100644

--- a/book/_config.yml

+++ b/book/_config.yml

@@ -30,6 +30,7 @@ parse:

- amsmath

- dollarmath

- html_image

+ - substitution

sphinx:

config:

html_show_copyright: false

diff --git a/tutorials/W0D2_PythonWorkshop2/W0D2_Tutorial1.ipynb b/tutorials/W0D2_PythonWorkshop2/W0D2_Tutorial1.ipynb

index f15b7ab..25fce25 100644

--- a/tutorials/W0D2_PythonWorkshop2/W0D2_Tutorial1.ipynb

+++ b/tutorials/W0D2_PythonWorkshop2/W0D2_Tutorial1.ipynb

@@ -47,9 +47,10 @@

"source": [

"---\n",

"## Tutorial objectives\n",

+ "\n",

"We learned basic Python and NumPy concepts in the previous tutorial. These new and efficient coding techniques can be applied repeatedly in tutorials from the NMA course, and elsewhere.\n",

"\n",

- "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!\n"

+ "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!"

]

},

{

@@ -296,7 +297,7 @@

"\n",

"

\n",

"\n",

- "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins is given by:\n",

+ "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron, it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins, it is given by:\n",

"\n",

"

\n",

"\\begin{equation}\n",

@@ -609,7 +610,7 @@

"execution": {}

},

"source": [

- "A spike takes place whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded and $V(t)$ resets to $V_{reset}$ value. This is summarized in the *reset condition*:\n",

+ "A spike occurs whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded, and $V(t)$ resets to $V_{reset}$. This is summarized in the *reset condition*:\n",

"\n",

"\\begin{equation}\n",

"V(t) = V_{reset}\\quad \\text{ if } V(t)\\geq V_{th}\n",

@@ -771,7 +772,7 @@

"for step, t in enumerate(t_range):\n",

"\n",

" # Skip first iteration\n",

- " if step==0:\n",

+ " if step == 0:\n",

" continue\n",

"\n",

" # Compute v_n\n",

@@ -852,7 +853,7 @@

"for step, t in enumerate(t_range):\n",

"\n",

" # Skip first iteration\n",

- " if step==0:\n",

+ " if step == 0:\n",

" continue\n",

"\n",

" # Compute v_n\n",

@@ -1004,7 +1005,7 @@

"execution": {}

},

"source": [

- "Numpy arrays can be indexed by boolean arrays to select a subset of elements (also works with lists of booleans).\n",

+ "Boolean arrays can index NumPy arrays to select a subset of elements (also works with lists of booleans).\n",

"\n",

"The boolean array itself can be initiated by a condition, as shown in the example below.\n",

"\n",

@@ -1189,7 +1190,7 @@

},

"outputs": [],

"source": [

- "# to_remove solutions\n",

+ "# to_remove solution\n",

"\n",

"# Set random number generator\n",

"np.random.seed(2020)\n",

@@ -1549,9 +1550,8 @@

},

"source": [

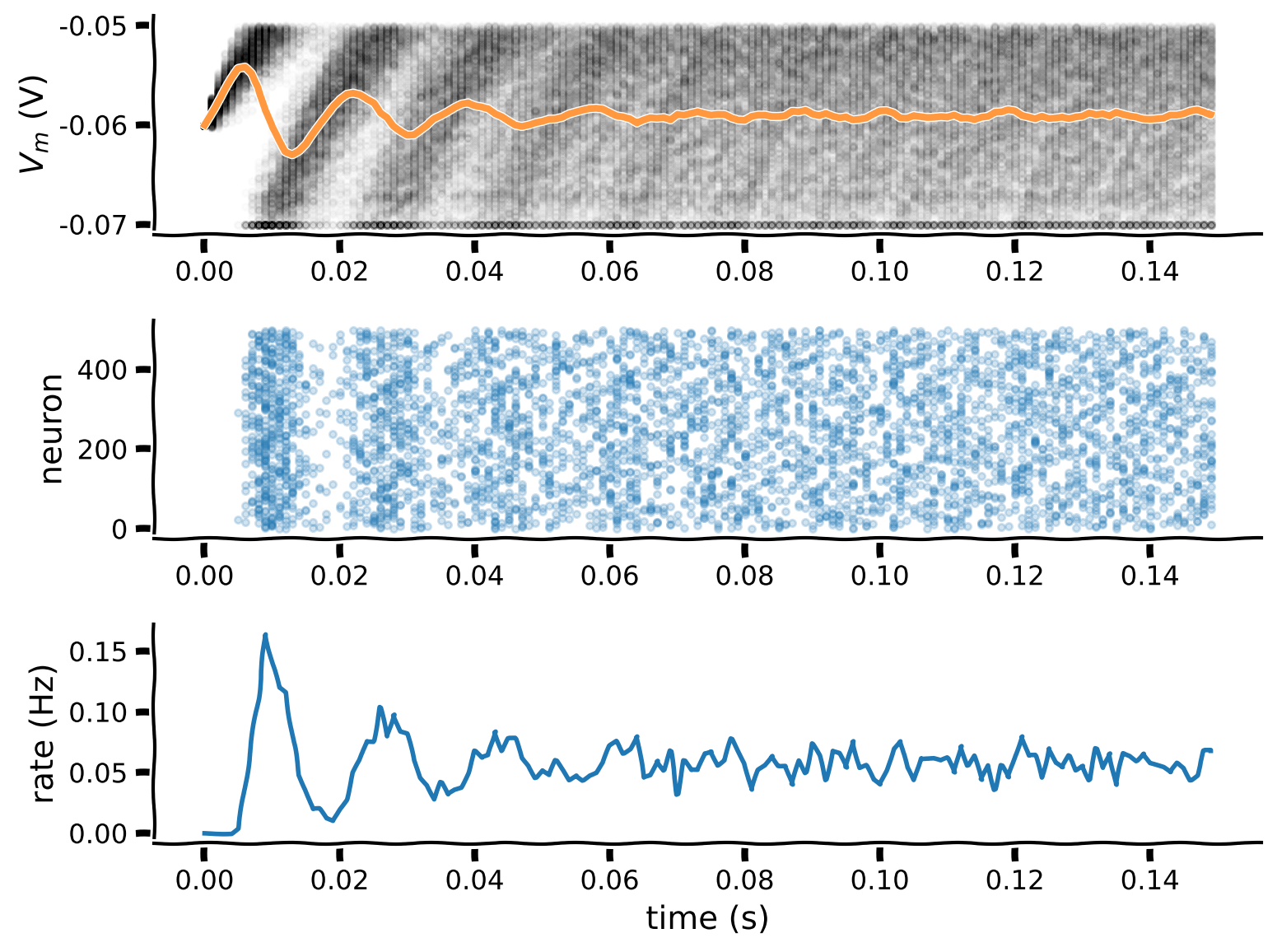

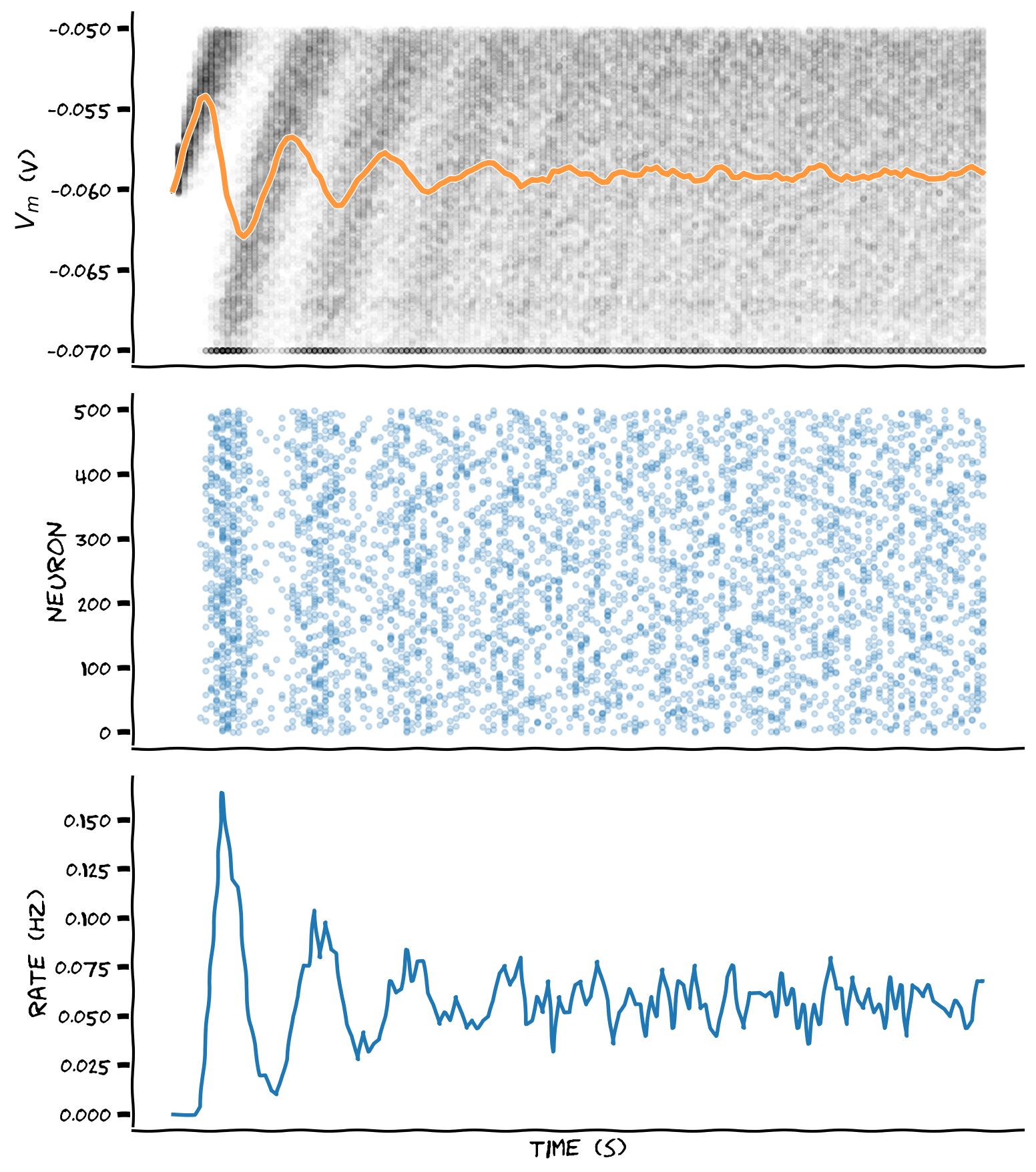

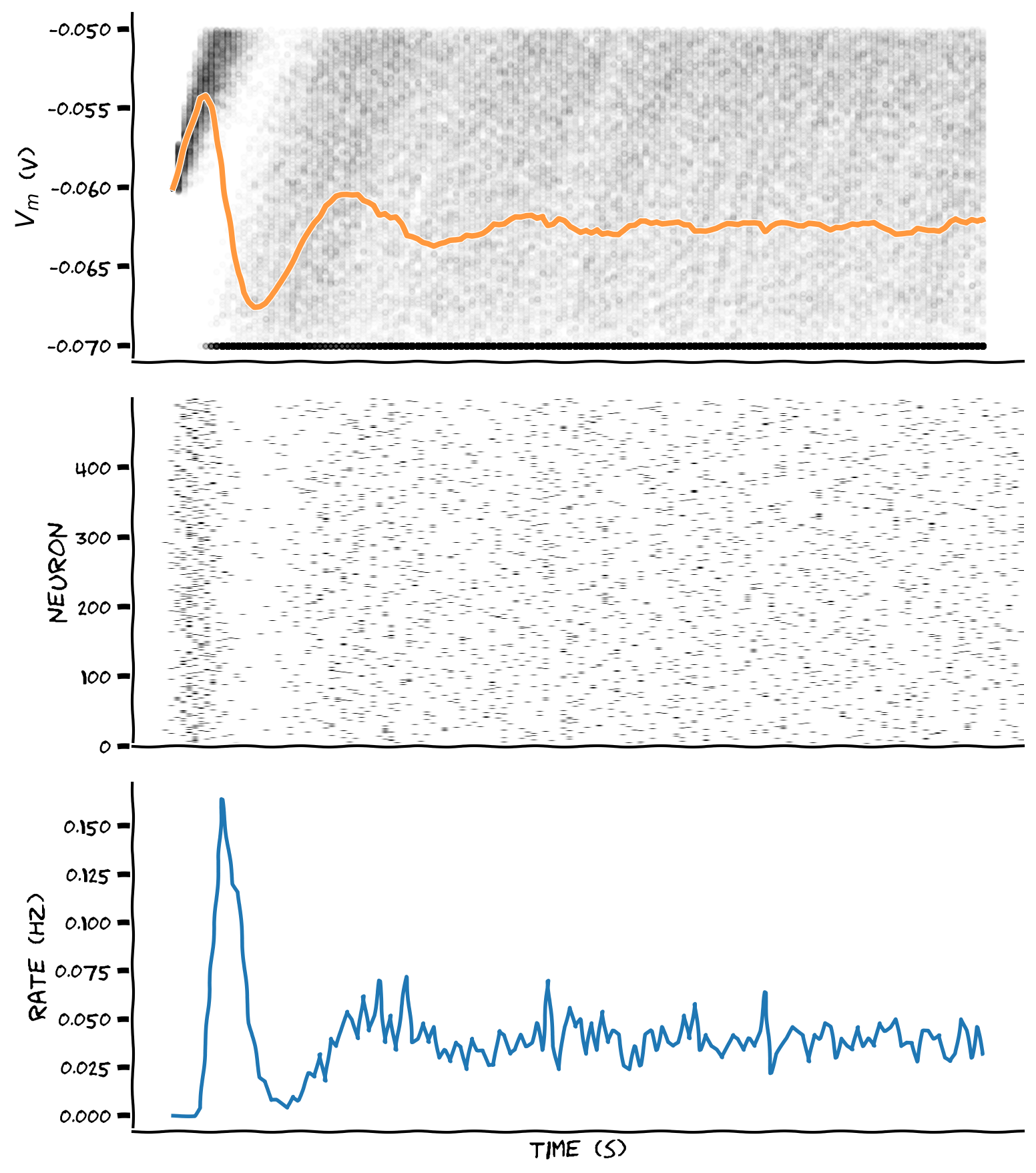

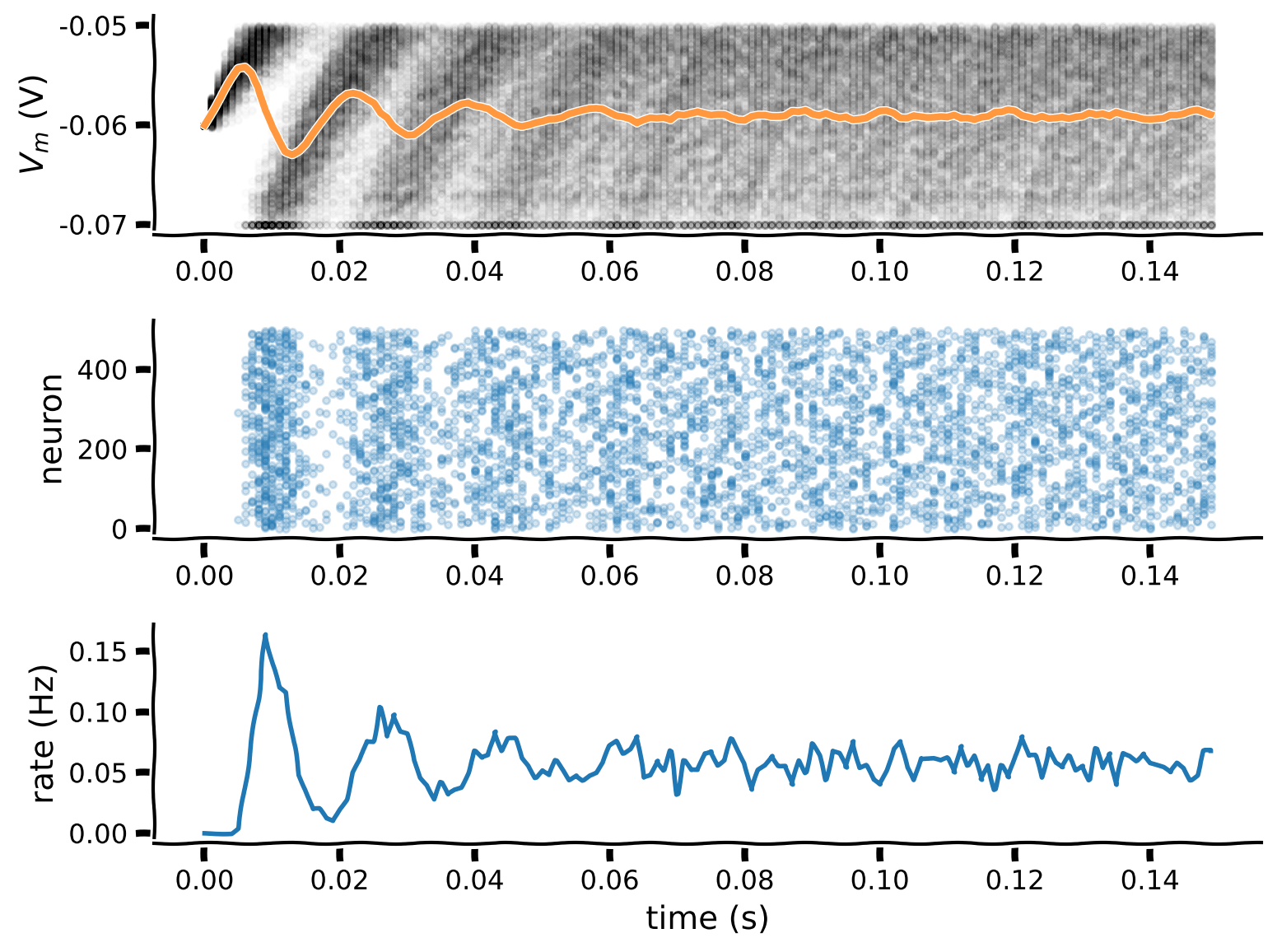

"## Coding Exercise 5: Investigating refactory periods\n",

- "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$.\n",

"\n",

- "\n"

+ "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$."

]

},

{

@@ -1697,13 +1697,13 @@

},

"source": [

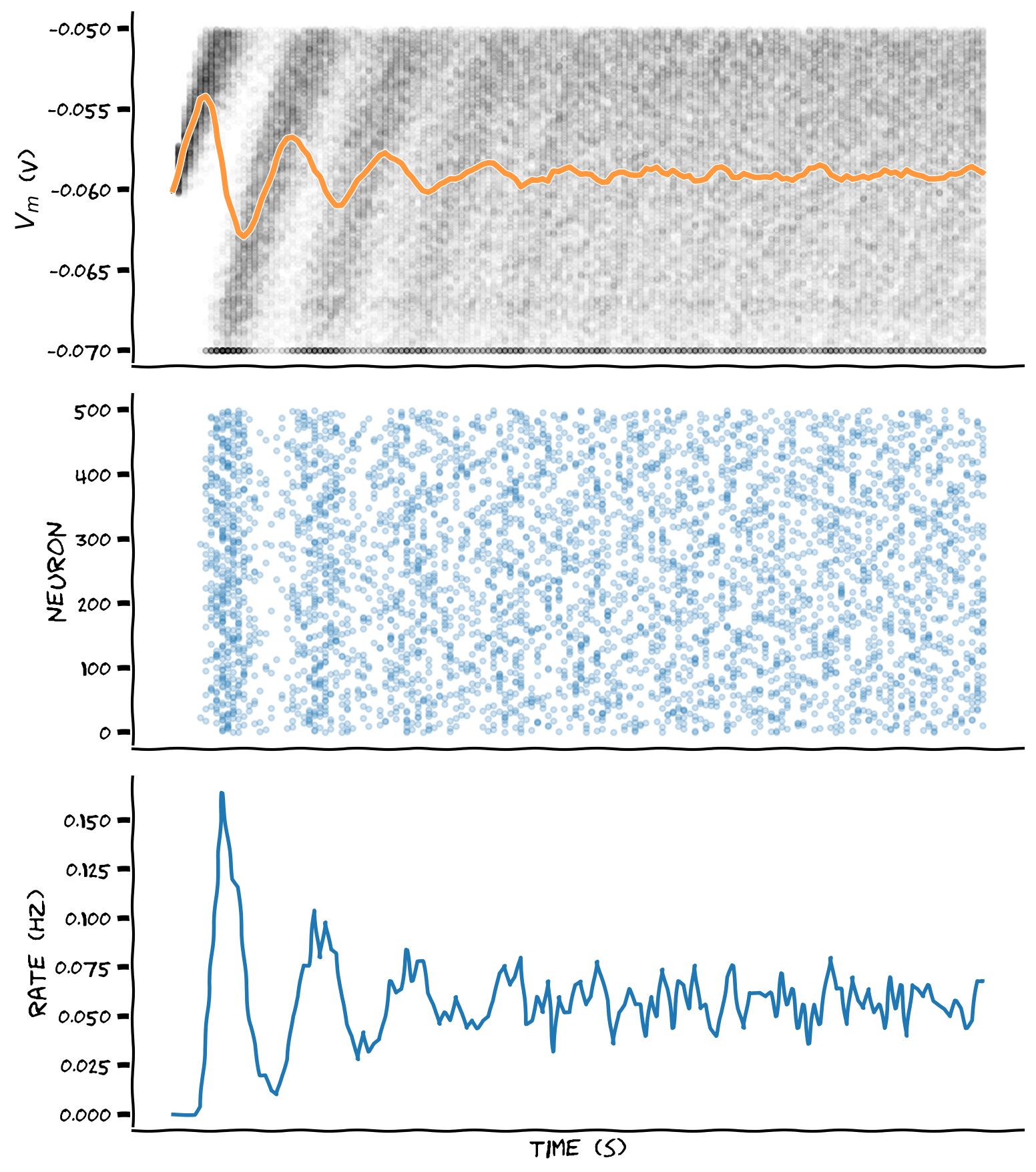

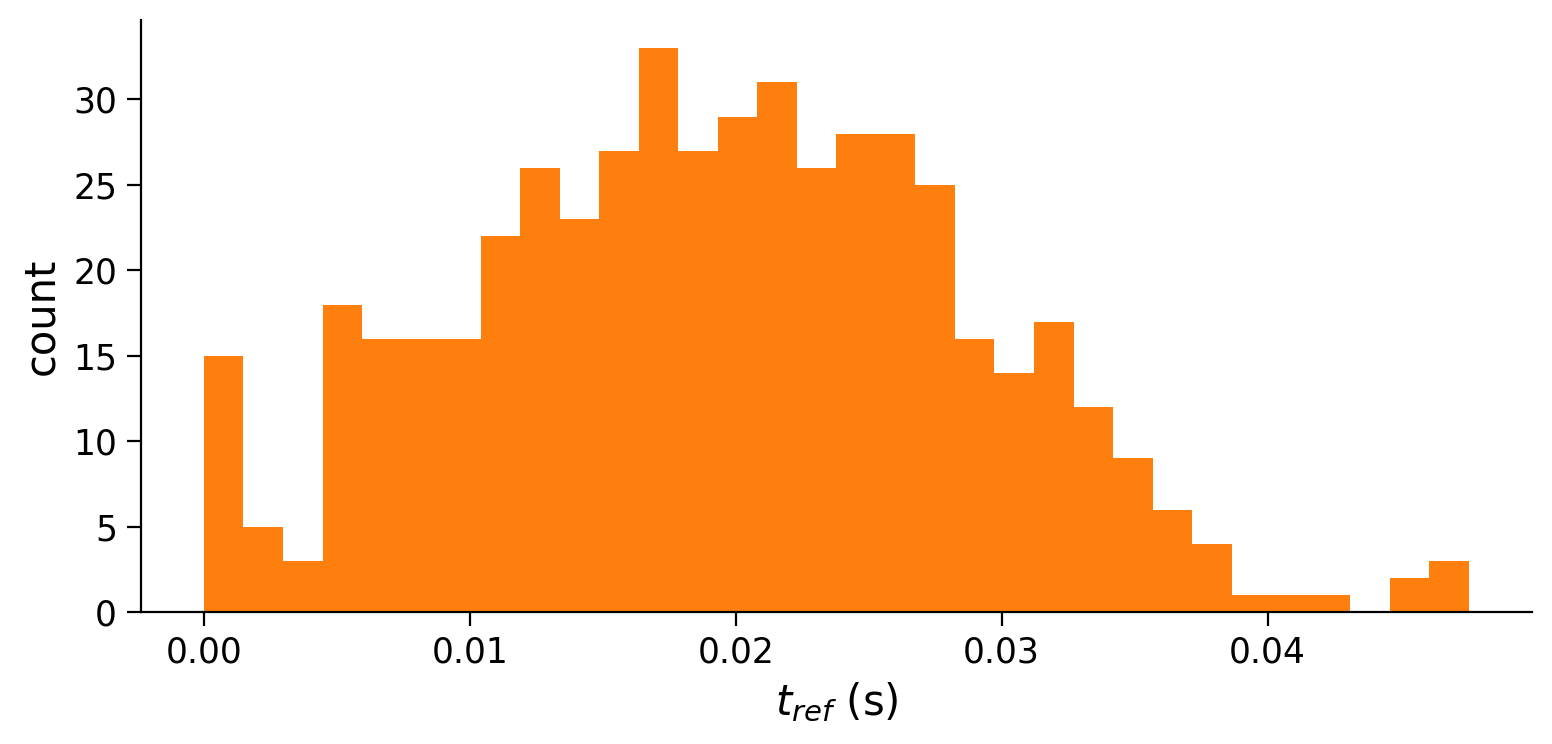

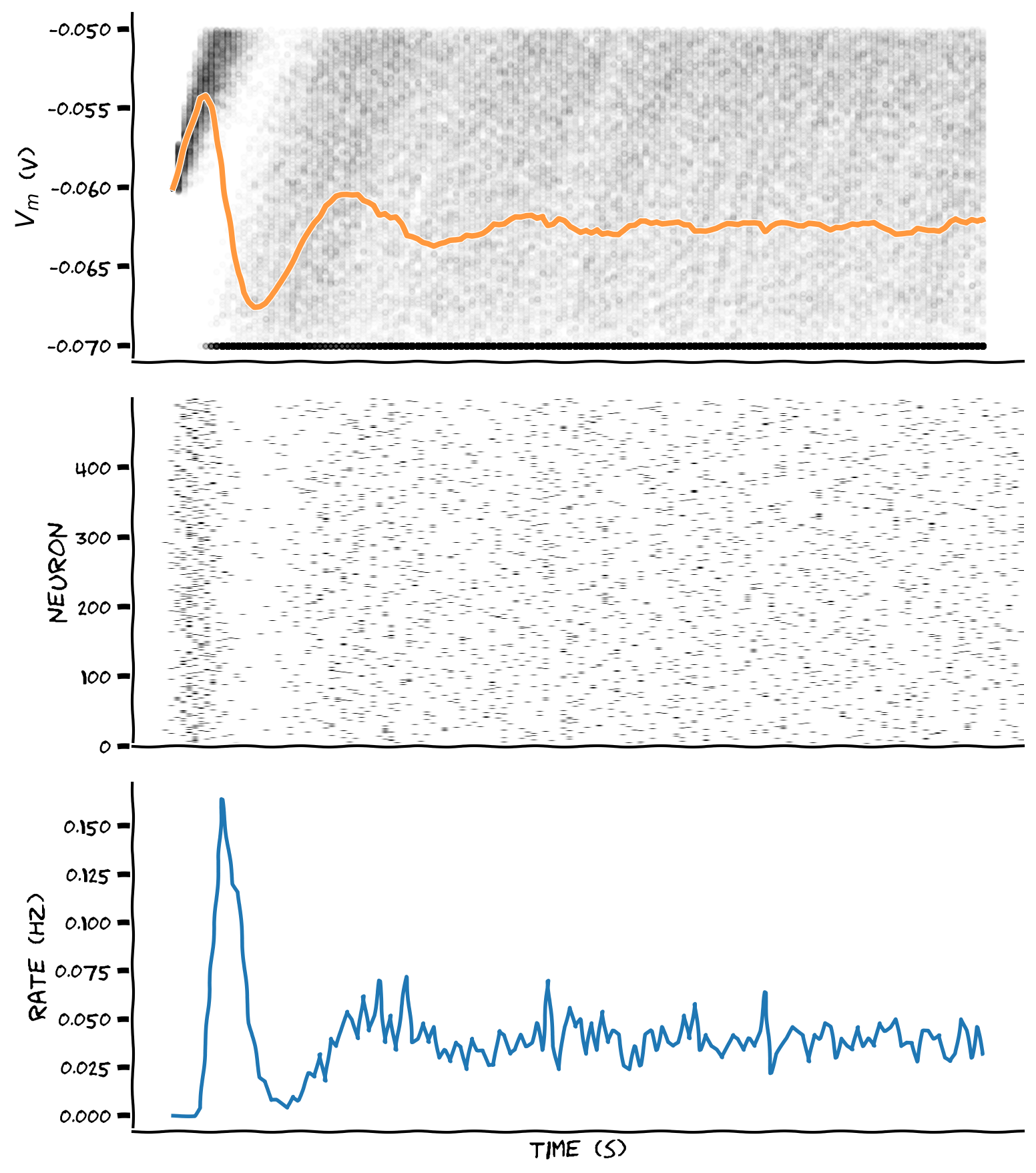

"## Interactive Demo 1: Random refractory period\n",

+ "\n",

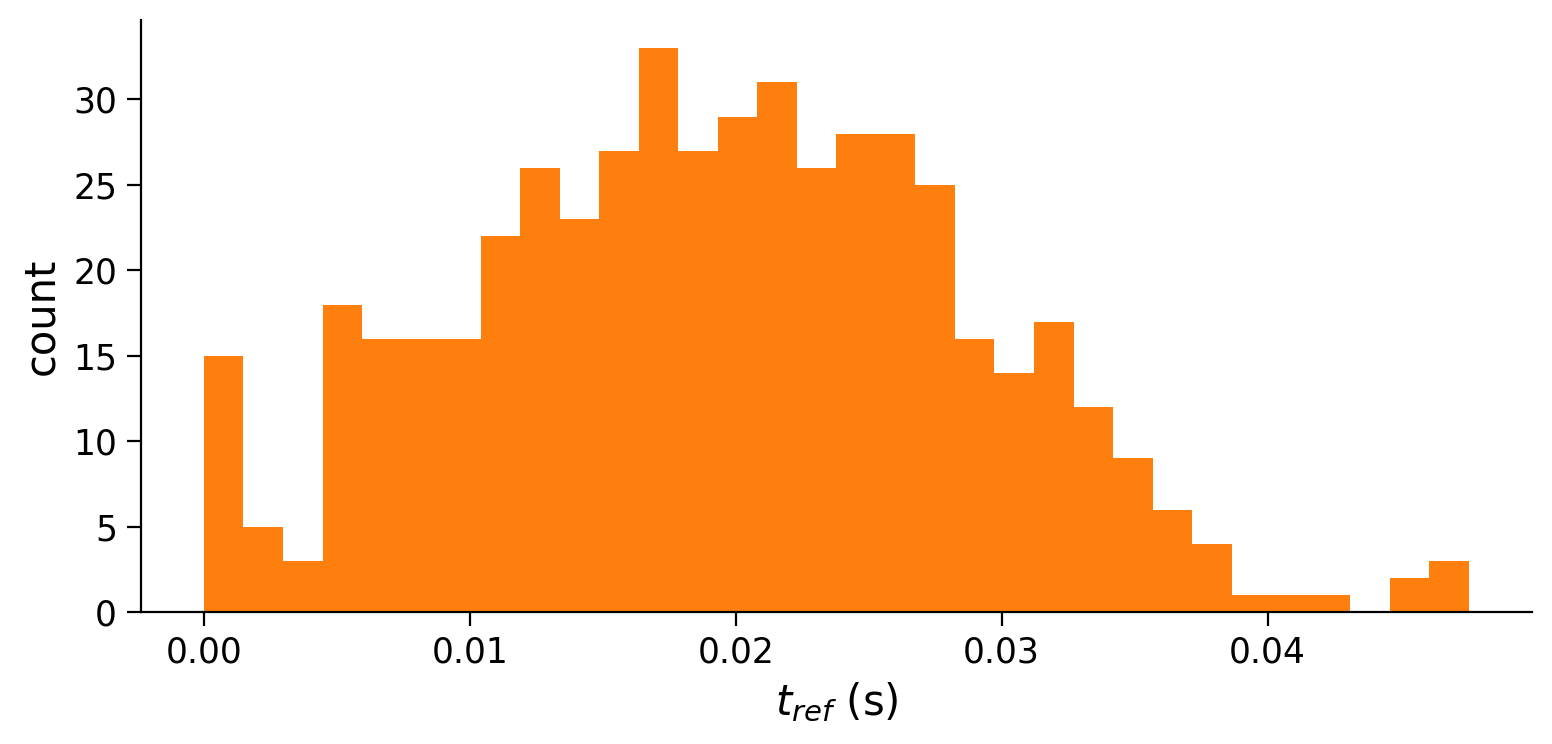

"In the following interactive demo, we will investigate the effect of random refractory periods. We will use random refactory periods $t_{ref}$ with\n",

"$t_{ref} = \\mu + \\sigma\\,\\mathcal{N}$, where $\\mathcal{N}$ is the [normal distribution](https://en.wikipedia.org/wiki/Normal_distribution), $\\mu=0.01$ and $\\sigma=0.007$.\n",

"\n",

- "Refractory period samples `t_ref` of size `n` is initialized with `np.random.normal`. We clip negative values to `0` with boolean indexes. (Why?) You can double click the cell to see the hidden code.\n",

+ "Refractory period samples `t_ref` of size `n` are initialized with `np.random.normal`. We clip negative values to `0` with boolean indexing. (Why?) You can double-click the cell to see the hidden code.\n",

"\n",

- "You can play with the parameters mu and sigma and visualize the resulting simulation.\n",

- "What is the effect of different $\\sigma$ values?\n"

+ "You can play with the parameters mu and sigma and visualize the resulting simulation. What is the effect of different $\\sigma$ values?"

]

},

{

@@ -1934,6 +1934,7 @@

},

"source": [

"## Coding Exercise 6: Rewriting code with functions\n",

+ "\n",

"We will now re-organize parts of the code from the previous exercise with functions. You need to complete the function `spike_clamp()` to update $V(t)$ and deal with spiking and refractoriness"

]

},

@@ -1953,7 +1954,7 @@

" v (numpy array of floats)\n",

" membrane potential at previous time step of shape (neurons)\n",

"\n",

- " v (numpy array of floats)\n",

+ " i (numpy array of floats)\n",

" synaptic input at current time step of shape (neurons)\n",

"\n",

" dt (float)\n",

@@ -1967,6 +1968,7 @@

"\n",

" return v\n",

"\n",

+ "\n",

"def spike_clamp(v, delta_spike):\n",

" \"\"\"\n",

" Resets membrane potential of neurons if v>= vth\n",

@@ -2051,6 +2053,8 @@

},

"outputs": [],

"source": [

+ "# to_remove solution\n",

+ "\n",

"def ode_step(v, i, dt):\n",

" \"\"\"\n",

" Evolves membrane potential by one step of discrete time integration\n",

@@ -2059,7 +2063,7 @@

" v (numpy array of floats)\n",

" membrane potential at previous time step of shape (neurons)\n",

"\n",

- " v (numpy array of floats)\n",

+ " i (numpy array of floats)\n",

" synaptic input at current time step of shape (neurons)\n",

"\n",

" dt (float)\n",

@@ -2073,7 +2077,7 @@

"\n",

" return v\n",

"\n",

- "# to_remove solution\n",

+ "\n",

"def spike_clamp(v, delta_spike):\n",

" \"\"\"\n",

" Resets membrane potential of neurons if v>= vth\n",

@@ -2244,7 +2248,7 @@

"execution": {}

},

"source": [

- "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes in that they receive inputs and provide expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

+ "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes, receiving inputs and providing expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

"\n",

"See additional details here: [A Beginner's Python Tutorial/Classes](https://en.wikibooks.org/wiki/A_Beginner%27s_Python_Tutorial/Classes)\n",

"\n",

diff --git a/tutorials/W0D2_PythonWorkshop2/instructor/W0D2_Tutorial1.ipynb b/tutorials/W0D2_PythonWorkshop2/instructor/W0D2_Tutorial1.ipynb

index 59f621d..db23b72 100644

--- a/tutorials/W0D2_PythonWorkshop2/instructor/W0D2_Tutorial1.ipynb

+++ b/tutorials/W0D2_PythonWorkshop2/instructor/W0D2_Tutorial1.ipynb

@@ -47,9 +47,10 @@

"source": [

"---\n",

"## Tutorial objectives\n",

+ "\n",

"We learned basic Python and NumPy concepts in the previous tutorial. These new and efficient coding techniques can be applied repeatedly in tutorials from the NMA course, and elsewhere.\n",

"\n",

- "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!\n"

+ "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!"

]

},

{

@@ -296,7 +297,7 @@

"\n",

"

\n",

"\n",

- "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins is given by:\n",

+ "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron, it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins, it is given by:\n",

"\n",

"

\n",

"\\begin{equation}\n",

@@ -611,7 +612,7 @@

"execution": {}

},

"source": [

- "A spike takes place whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded and $V(t)$ resets to $V_{reset}$ value. This is summarized in the *reset condition*:\n",

+ "A spike occurs whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded, and $V(t)$ resets to $V_{reset}$. This is summarized in the *reset condition*:\n",

"\n",

"\\begin{equation}\n",

"V(t) = V_{reset}\\quad \\text{ if } V(t)\\geq V_{th}\n",

@@ -773,7 +774,7 @@

"for step, t in enumerate(t_range):\n",

"\n",

" # Skip first iteration\n",

- " if step==0:\n",

+ " if step == 0:\n",

" continue\n",

"\n",

" # Compute v_n\n",

@@ -856,7 +857,7 @@

"for step, t in enumerate(t_range):\n",

"\n",

" # Skip first iteration\n",

- " if step==0:\n",

+ " if step == 0:\n",

" continue\n",

"\n",

" # Compute v_n\n",

@@ -1008,7 +1009,7 @@

"execution": {}

},

"source": [

- "Numpy arrays can be indexed by boolean arrays to select a subset of elements (also works with lists of booleans).\n",

+ "Boolean arrays can index NumPy arrays to select a subset of elements (also works with lists of booleans).\n",

"\n",

"The boolean array itself can be initiated by a condition, as shown in the example below.\n",

"\n",

@@ -1195,7 +1196,7 @@

},

"outputs": [],

"source": [

- "# to_remove solutions\n",

+ "# to_remove solution\n",

"\n",

"# Set random number generator\n",

"np.random.seed(2020)\n",

@@ -1557,9 +1558,8 @@

},

"source": [

"## Coding Exercise 5: Investigating refactory periods\n",

- "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$.\n",

"\n",

- "\n"

+ "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$."

]

},

{

@@ -1707,13 +1707,13 @@

},

"source": [

"## Interactive Demo 1: Random refractory period\n",

+ "\n",

"In the following interactive demo, we will investigate the effect of random refractory periods. We will use random refactory periods $t_{ref}$ with\n",

"$t_{ref} = \\mu + \\sigma\\,\\mathcal{N}$, where $\\mathcal{N}$ is the [normal distribution](https://en.wikipedia.org/wiki/Normal_distribution), $\\mu=0.01$ and $\\sigma=0.007$.\n",

"\n",

- "Refractory period samples `t_ref` of size `n` is initialized with `np.random.normal`. We clip negative values to `0` with boolean indexes. (Why?) You can double click the cell to see the hidden code.\n",

+ "Refractory period samples `t_ref` of size `n` are initialized with `np.random.normal`. We clip negative values to `0` with boolean indexing. (Why?) You can double-click the cell to see the hidden code.\n",

"\n",

- "You can play with the parameters mu and sigma and visualize the resulting simulation.\n",

- "What is the effect of different $\\sigma$ values?\n"

+ "You can play with the parameters mu and sigma and visualize the resulting simulation. What is the effect of different $\\sigma$ values?"

]

},

{

@@ -1944,17 +1944,18 @@

},

"source": [

"## Coding Exercise 6: Rewriting code with functions\n",

+ "\n",

"We will now re-organize parts of the code from the previous exercise with functions. You need to complete the function `spike_clamp()` to update $V(t)$ and deal with spiking and refractoriness"

]

},

{

- "cell_type": "code",

- "execution_count": null,

+ "cell_type": "markdown",

"metadata": {

+ "colab_type": "text",

"execution": {}

},

- "outputs": [],

"source": [

+ "```python\n",

"def ode_step(v, i, dt):\n",

" \"\"\"\n",

" Evolves membrane potential by one step of discrete time integration\n",

@@ -1963,7 +1964,7 @@

" v (numpy array of floats)\n",

" membrane potential at previous time step of shape (neurons)\n",

"\n",

- " v (numpy array of floats)\n",

+ " i (numpy array of floats)\n",

" synaptic input at current time step of shape (neurons)\n",

"\n",

" dt (float)\n",

@@ -1977,6 +1978,7 @@

"\n",

" return v\n",

"\n",

+ "\n",

"def spike_clamp(v, delta_spike):\n",

" \"\"\"\n",

" Resets membrane potential of neurons if v>= vth\n",

@@ -2050,7 +2052,9 @@

" last_spike[spiked] = t\n",

"\n",

"# Plot multiple realizations of Vm, spikes and mean spike rate\n",

- "plot_all(t_range, v_n, raster)"

+ "plot_all(t_range, v_n, raster)\n",

+ "\n",

+ "```"

]

},

{

@@ -2061,6 +2065,8 @@

},

"outputs": [],

"source": [

+ "# to_remove solution\n",

+ "\n",

"def ode_step(v, i, dt):\n",

" \"\"\"\n",

" Evolves membrane potential by one step of discrete time integration\n",

@@ -2069,7 +2075,7 @@

" v (numpy array of floats)\n",

" membrane potential at previous time step of shape (neurons)\n",

"\n",

- " v (numpy array of floats)\n",

+ " i (numpy array of floats)\n",

" synaptic input at current time step of shape (neurons)\n",

"\n",

" dt (float)\n",

@@ -2083,7 +2089,7 @@

"\n",

" return v\n",

"\n",

- "# to_remove solution\n",

+ "\n",

"def spike_clamp(v, delta_spike):\n",

" \"\"\"\n",

" Resets membrane potential of neurons if v>= vth\n",

@@ -2254,7 +2260,7 @@

"execution": {}

},

"source": [

- "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes in that they receive inputs and provide expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

+ "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes, receiving inputs and providing expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

"\n",

"See additional details here: [A Beginner's Python Tutorial/Classes](https://en.wikibooks.org/wiki/A_Beginner%27s_Python_Tutorial/Classes)\n",

"\n",

diff --git a/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_9aaee1d8.py b/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_950b2963.py

similarity index 99%

rename from tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_9aaee1d8.py

rename to tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_950b2963.py

index dcce5f8..25a9424 100644

--- a/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_9aaee1d8.py

+++ b/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_950b2963.py

@@ -17,7 +17,7 @@

for step, t in enumerate(t_range):

# Skip first iteration

- if step==0:

+ if step == 0:

continue

# Compute v_n

diff --git a/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_bf9f75ab.py b/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_bf9f75ab.py

new file mode 100644

index 0000000..1174616

--- /dev/null

+++ b/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_bf9f75ab.py

@@ -0,0 +1,94 @@

+

+def ode_step(v, i, dt):

+ """

+ Evolves membrane potential by one step of discrete time integration

+

+ Args:

+ v (numpy array of floats)

+ membrane potential at previous time step of shape (neurons)

+

+ i (numpy array of floats)

+ synaptic input at current time step of shape (neurons)

+

+ dt (float)

+ time step increment

+

+ Returns:

+ v (numpy array of floats)

+ membrane potential at current time step of shape (neurons)

+ """

+ v = v + dt/tau * (el - v + r*i)

+

+ return v

+

+

+def spike_clamp(v, delta_spike):

+ """

+ Resets membrane potential of neurons if v>= vth

+ and clamps to vr if interval of time since last spike < t_ref

+

+ Args:

+ v (numpy array of floats)

+ membrane potential of shape (neurons)

+

+ delta_spike (numpy array of floats)

+ interval of time since last spike of shape (neurons)

+

+ Returns:

+ v (numpy array of floats)

+ membrane potential of shape (neurons)

+ spiked (numpy array of booleans)

+ boolean array of neurons that spiked of shape (neurons)

+ """

+

+ # Boolean array spiked indexes neurons with v>=vth

+ spiked = (v >= vth)

+ v[spiked] = vr

+

+ # Boolean array clamped indexes refractory neurons

+ clamped = (t_ref > delta_spike)

+ v[clamped] = vr

+

+ return v, spiked

+

+

+# Set random number generator

+np.random.seed(2020)

+

+# Initialize step_end, t_range, n, v_n and i

+t_range = np.arange(0, t_max, dt)

+step_end = len(t_range)

+n = 500

+v_n = el * np.ones([n, step_end])

+i = i_mean * (1 + 0.1 * (t_max / dt)**(0.5) * (2 * np.random.random([n, step_end]) - 1))

+

+# Initialize binary numpy array for raster plot

+raster = np.zeros([n,step_end])

+

+# Initialize t_ref and last_spike

+mu = 0.01

+sigma = 0.007

+t_ref = mu + sigma*np.random.normal(size=n)

+t_ref[t_ref<0] = 0

+last_spike = -t_ref * np.ones([n])

+

+# Loop over time steps

+for step, t in enumerate(t_range):

+

+ # Skip first iteration

+ if step == 0:

+ continue

+

+ # Compute v_n

+ v_n[:,step] = ode_step(v_n[:,step-1], i[:,step], dt)

+

+ # Reset membrane potential and clamp

+ v_n[:,step], spiked = spike_clamp(v_n[:,step], t - last_spike)

+

+ # Update raster and last_spike

+ raster[spiked,step] = 1.

+ last_spike[spiked] = t

+

+# Plot multiple realizations of Vm, spikes and mean spike rate

+with plt.xkcd():

+ plot_all(t_range, v_n, raster)

\ No newline at end of file

diff --git a/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_5061d76b.py b/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_c368c1ef.py

similarity index 100%

rename from tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_5061d76b.py

rename to tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_c368c1ef.py

diff --git a/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_0.png b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_0.png

new file mode 100644

index 0000000..b2dce30

Binary files /dev/null and b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_0.png differ

diff --git a/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_1.png b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_1.png

new file mode 100644

index 0000000..f816f55

Binary files /dev/null and b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_bf9f75ab_1.png differ

diff --git a/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_5061d76b_0.png b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_c368c1ef_0.png

similarity index 100%

rename from tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_5061d76b_0.png

rename to tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_c368c1ef_0.png

diff --git a/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_5061d76b_1.png b/tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_c368c1ef_1.png

similarity index 100%

rename from tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_5061d76b_1.png

rename to tutorials/W0D2_PythonWorkshop2/static/W0D2_Tutorial1_Solution_c368c1ef_1.png

diff --git a/tutorials/W0D2_PythonWorkshop2/student/W0D2_Tutorial1.ipynb b/tutorials/W0D2_PythonWorkshop2/student/W0D2_Tutorial1.ipynb

index 7447ec4..009a421 100644

--- a/tutorials/W0D2_PythonWorkshop2/student/W0D2_Tutorial1.ipynb

+++ b/tutorials/W0D2_PythonWorkshop2/student/W0D2_Tutorial1.ipynb

@@ -47,9 +47,10 @@

"source": [

"---\n",

"## Tutorial objectives\n",

+ "\n",

"We learned basic Python and NumPy concepts in the previous tutorial. These new and efficient coding techniques can be applied repeatedly in tutorials from the NMA course, and elsewhere.\n",

"\n",

- "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!\n"

+ "In this tutorial, we'll introduce spikes in our LIF neuron and evaluate the refractory period's effect in spiking dynamics!"

]

},

{

@@ -296,7 +297,7 @@

"\n",

"

\n",

"\n",

- "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins is given by:\n",

+ "Another important statistic is the sample [histogram](https://en.wikipedia.org/wiki/Histogram). For our LIF neuron, it provides an approximate representation of the distribution of membrane potential $V_m(t)$ at time $t=t_k\\in[0,t_{max}]$. For $N$ realizations $V\\left(t_k\\right)$ and $J$ bins, it is given by:\n",

"\n",

"

\n",

"\\begin{equation}\n",

@@ -573,7 +574,7 @@

"execution": {}

},

"source": [

- "A spike takes place whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded and $V(t)$ resets to $V_{reset}$ value. This is summarized in the *reset condition*:\n",

+ "A spike occurs whenever $V(t)$ crosses $V_{th}$. In that case, a spike is recorded, and $V(t)$ resets to $V_{reset}$. This is summarized in the *reset condition*:\n",

"\n",

"\\begin{equation}\n",

"V(t) = V_{reset}\\quad \\text{ if } V(t)\\geq V_{th}\n",

@@ -735,7 +736,7 @@

"for step, t in enumerate(t_range):\n",

"\n",

" # Skip first iteration\n",

- " if step==0:\n",

+ " if step == 0:\n",

" continue\n",

"\n",

" # Compute v_n\n",

@@ -795,7 +796,7 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/NeuromatchAcademy/precourse/tree/main/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_9aaee1d8.py)\n",

+ "[*Click for solution*](https://github.com/NeuromatchAcademy/precourse/tree/main/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_950b2963.py)\n",

"\n"

]

},

@@ -897,7 +898,7 @@

"execution": {}

},

"source": [

- "Numpy arrays can be indexed by boolean arrays to select a subset of elements (also works with lists of booleans).\n",

+ "Boolean arrays can index NumPy arrays to select a subset of elements (also works with lists of booleans).\n",

"\n",

"The boolean array itself can be initiated by a condition, as shown in the example below.\n",

"\n",

@@ -1081,13 +1082,13 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/NeuromatchAcademy/precourse/tree/main/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_5061d76b.py)\n",

+ "[*Click for solution*](https://github.com/NeuromatchAcademy/precourse/tree/main/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_c368c1ef.py)\n",

"\n",

"*Example output:*\n",

"\n",

- " \n",

+ "

\n",

+ " \n",

"\n",

- "

\n",

"\n",

- " \n",

+ "

\n",

+ " \n",

"\n"

]

},

@@ -1374,9 +1375,8 @@

},

"source": [

"## Coding Exercise 5: Investigating refactory periods\n",

- "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$.\n",

"\n",

- "\n"

+ "Investigate the effect of (absolute) refractory period $t_{ref}$ on the evolution of output rate $\\lambda(t)$. Add refractory period $t_{ref}=10$ ms after each spike, during which $V(t)$ is clamped to $V_{reset}$."

]

},

{

@@ -1477,13 +1477,13 @@

},

"source": [

"## Interactive Demo 1: Random refractory period\n",

+ "\n",

"In the following interactive demo, we will investigate the effect of random refractory periods. We will use random refactory periods $t_{ref}$ with\n",

"$t_{ref} = \\mu + \\sigma\\,\\mathcal{N}$, where $\\mathcal{N}$ is the [normal distribution](https://en.wikipedia.org/wiki/Normal_distribution), $\\mu=0.01$ and $\\sigma=0.007$.\n",

"\n",

- "Refractory period samples `t_ref` of size `n` is initialized with `np.random.normal`. We clip negative values to `0` with boolean indexes. (Why?) You can double click the cell to see the hidden code.\n",

+ "Refractory period samples `t_ref` of size `n` are initialized with `np.random.normal`. We clip negative values to `0` with boolean indexing. (Why?) You can double-click the cell to see the hidden code.\n",

"\n",

- "You can play with the parameters mu and sigma and visualize the resulting simulation.\n",

- "What is the effect of different $\\sigma$ values?\n"

+ "You can play with the parameters mu and sigma and visualize the resulting simulation. What is the effect of different $\\sigma$ values?"

]

},

{

@@ -1714,6 +1714,7 @@

},

"source": [

"## Coding Exercise 6: Rewriting code with functions\n",

+ "\n",

"We will now re-organize parts of the code from the previous exercise with functions. You need to complete the function `spike_clamp()` to update $V(t)$ and deal with spiking and refractoriness"

]

},

@@ -1733,7 +1734,7 @@

" v (numpy array of floats)\n",

" membrane potential at previous time step of shape (neurons)\n",

"\n",

- " v (numpy array of floats)\n",

+ " i (numpy array of floats)\n",

" synaptic input at current time step of shape (neurons)\n",

"\n",

" dt (float)\n",

@@ -1747,6 +1748,7 @@

"\n",

" return v\n",

"\n",

+ "\n",

"def spike_clamp(v, delta_spike):\n",

" \"\"\"\n",

" Resets membrane potential of neurons if v>= vth\n",

@@ -1824,106 +1826,20 @@

]

},

{

- "cell_type": "code",

- "execution_count": null,

+ "cell_type": "markdown",

"metadata": {

+ "colab_type": "text",

"execution": {}

},

- "outputs": [],

"source": [

- "def ode_step(v, i, dt):\n",

- " \"\"\"\n",

- " Evolves membrane potential by one step of discrete time integration\n",

- "\n",

- " Args:\n",

- " v (numpy array of floats)\n",

- " membrane potential at previous time step of shape (neurons)\n",

- "\n",

- " v (numpy array of floats)\n",

- " synaptic input at current time step of shape (neurons)\n",

- "\n",

- " dt (float)\n",

- " time step increment\n",

- "\n",

- " Returns:\n",

- " v (numpy array of floats)\n",

- " membrane potential at current time step of shape (neurons)\n",

- " \"\"\"\n",

- " v = v + dt/tau * (el - v + r*i)\n",

- "\n",

- " return v\n",

- "\n",

- "# to_remove solution\n",

- "def spike_clamp(v, delta_spike):\n",

- " \"\"\"\n",

- " Resets membrane potential of neurons if v>= vth\n",

- " and clamps to vr if interval of time since last spike < t_ref\n",

- "\n",

- " Args:\n",

- " v (numpy array of floats)\n",

- " membrane potential of shape (neurons)\n",

- "\n",

- " delta_spike (numpy array of floats)\n",

- " interval of time since last spike of shape (neurons)\n",

- "\n",

- " Returns:\n",

- " v (numpy array of floats)\n",

- " membrane potential of shape (neurons)\n",

- " spiked (numpy array of floats)\n",

- " boolean array of neurons that spiked of shape (neurons)\n",

- " \"\"\"\n",

+ "[*Click for solution*](https://github.com/NeuromatchAcademy/precourse/tree/main/tutorials/W0D2_PythonWorkshop2/solutions/W0D2_Tutorial1_Solution_bf9f75ab.py)\n",

"\n",

- " # Boolean array spiked indexes neurons with v>=vth\n",

- " spiked = (v >= vth)\n",

- " v[spiked] = vr\n",

- "\n",

- " # Boolean array clamped indexes refractory neurons\n",

- " clamped = (t_ref > delta_spike)\n",

- " v[clamped] = vr\n",

- "\n",

- " return v, spiked\n",

- "\n",

- "\n",

- "# Set random number generator\n",

- "np.random.seed(2020)\n",

- "\n",

- "# Initialize step_end, t_range, n, v_n and i\n",

- "t_range = np.arange(0, t_max, dt)\n",

- "step_end = len(t_range)\n",

- "n = 500\n",

- "v_n = el * np.ones([n, step_end])\n",

- "i = i_mean * (1 + 0.1 * (t_max / dt)**(0.5) * (2 * np.random.random([n, step_end]) - 1))\n",

- "\n",

- "# Initialize binary numpy array for raster plot\n",

- "raster = np.zeros([n,step_end])\n",

- "\n",

- "# Initialize t_ref and last_spike\n",

- "mu = 0.01\n",

- "sigma = 0.007\n",

- "t_ref = mu + sigma*np.random.normal(size=n)\n",

- "t_ref[t_ref<0] = 0\n",

- "last_spike = -t_ref * np.ones([n])\n",

- "\n",

- "# Loop over time steps\n",

- "for step, t in enumerate(t_range):\n",

- "\n",

- " # Skip first iteration\n",

- " if step==0:\n",

- " continue\n",

- "\n",

- " # Compute v_n\n",

- " v_n[:,step] = ode_step(v_n[:,step-1], i[:,step], dt)\n",

- "\n",

- " # Reset membrane potential and clamp\n",

- " v_n[:,step], spiked = spike_clamp(v_n[:,step], t - last_spike)\n",

+ "*Example output:*\n",

"\n",

- " # Update raster and last_spike\n",

- " raster[spiked,step] = 1.\n",

- " last_spike[spiked] = t\n",

+ "

\n",

"\n"

]

},


- "t_range = np.arange(0, t_max, dt)\n",

- "step_end = len(t_range)\n",

- "n = 500\n",

- "v_n = el * np.ones([n, step_end])\n",

- "i = i_mean * (1 + 0.1 * (t_max / dt)**(0.5) * (2 * np.random.random([n, step_end]) - 1))\n",

- "\n",

- "# Initialize binary numpy array for raster plot\n",

- "raster = np.zeros([n,step_end])\n",

- "\n",

- "# Initialize t_ref and last_spike\n",

- "mu = 0.01\n",

- "sigma = 0.007\n",

- "t_ref = mu + sigma*np.random.normal(size=n)\n",

- "t_ref[t_ref<0] = 0\n",

- "last_spike = -t_ref * np.ones([n])\n",

- "\n",

- "# Loop over time steps\n",

- "for step, t in enumerate(t_range):\n",

- "\n",

- " # Skip first iteration\n",

- " if step==0:\n",

- " continue\n",

- "\n",

- " # Compute v_n\n",

- " v_n[:,step] = ode_step(v_n[:,step-1], i[:,step], dt)\n",

- "\n",

- " # Reset membrane potential and clamp\n",

- " v_n[:,step], spiked = spike_clamp(v_n[:,step], t - last_spike)\n",

+ "*Example output:*\n",

"\n",

- " # Update raster and last_spike\n",

- " raster[spiked,step] = 1.\n",

- " last_spike[spiked] = t\n",

+ " \n",

"\n",

- "# Plot multiple realizations of Vm, spikes and mean spike rate\n",

- "with plt.xkcd():\n",

- " plot_all(t_range, v_n, raster)"

+ "\n",

"\n",

- "# Plot multiple realizations of Vm, spikes and mean spike rate\n",

- "with plt.xkcd():\n",

- " plot_all(t_range, v_n, raster)"

+ " \n",

+ "\n"

]

},

{

@@ -2024,7 +1940,7 @@

"execution": {}

},

"source": [

- "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes in that they receive inputs and provide expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

+ "Using classes helps with code reuse and reliability. Well-designed classes are like black boxes, receiving inputs and providing expected outputs. The details of how the class processes inputs and produces outputs are unimportant.\n",

"\n",

"See additional details here: [A Beginner's Python Tutorial/Classes](https://en.wikibooks.org/wiki/A_Beginner%27s_Python_Tutorial/Classes)\n",

"\n",

diff --git a/tutorials/W0D4_Calculus/W0D4_Tutorial1.ipynb b/tutorials/W0D4_Calculus/W0D4_Tutorial1.ipynb

index 5389114..fbd037d 100644

--- a/tutorials/W0D4_Calculus/W0D4_Tutorial1.ipynb

+++ b/tutorials/W0D4_Calculus/W0D4_Tutorial1.ipynb

@@ -27,7 +27,7 @@

"\n",

"**Content reviewers:** Aderogba Bayo, Tessy Tom, Matt McCann\n",

"\n",

- "**Production editors:** Matthew McCann, Ella Batty"

+ "**Production editors:** Matthew McCann, Spiros Chavlis, Ella Batty"

]

},

{

@@ -50,14 +50,12 @@

"\n",

"*Estimated timing of tutorial: 80 minutes*\n",

"\n",

- "In this tutorial, we will cover aspects of calculus that will be frequently used in the main NMA course. We assume that you have some familiarty with calculus, but may be a bit rusty or may not have done much practice. Specifically the objectives of this tutorial are\n",

+ "In this tutorial, we will cover aspects of calculus that will be frequently used in the main NMA course. We assume that you have some familiarity with calculus but may be a bit rusty or may not have done much practice. Specifically, the objectives of this tutorial are\n",

"\n",

- "* Get an intuitive understanding of derivative and integration operations\n",

- "* Learn to calculate the derivatives of 1- and 2-dimensional functions/signals numerically\n",

- "* Familiarize with the concept of neuron transfer function in 1- and 2-dimensions.\n",

- "* Familiarize with the idea of numerical integration using Riemann sum\n",

- "\n",

- "\n"

+ "* Get an intuitive understanding of derivative and integration operations\n",

+ "* Learn to calculate the derivatives of 1- and 2-dimensional functions/signals numerically\n",

+ "* Familiarize yourself with the concept of the neuron transfer function in 1 and 2 dimensions\n",

+ "* Familiarize yourself with the idea of numerical integration using the Riemann sum"

]

},

{

@@ -494,13 +492,13 @@

"source": [

"### Interactive Demo 1: Geometrical understanding\n",

"\n",

- "In the interactive demo below, you can pick different functions to examine in the drop down menu. You can then choose to show the derivative function and/or the integral function.\n",

+ "In the interactive demo below, you can pick different functions to examine in the drop-down menu. You can then choose to show the derivative function and/or the integral function.\n",

"\n",

"For the integral, we have chosen the unknown constant $C$ such that the integral function at the left x-axis limit is $0$, as $f(t = -10) = 0$. So the integral will reflect the area under the curve starting from that position.\n",

"\n",

"For each function:\n",

"\n",

- "* Examine just the function first. Discuss and predict what the derivative and integral will look like. Remember that derivative = slope of function, integral = area under curve from $t = -10$ to that t.\n",

+ "* Examine just the function first. Discuss and predict what the derivative and integral will look like. Remember that _derivative = slope of the function_, _integral = area under the curve from $t = -10$ to that $t$_.\n",

"* Check the derivative - does it match your expectations?\n",

"* Check the integral - does it match your expectations?"

]

@@ -550,9 +548,9 @@

"execution": {}

},

"source": [

- "In the demo above you may have noticed that the derivative and integral of the exponential function is same as the exponential function itself.\n",

+ "In the demo above, you may have noticed that the derivative and integral of the exponential function are the same as the exponential function itself.\n",

"\n",

- "Some functions like the exponential function, when differentiated or integrated, equal a scalar times the same function. This is a similar idea to eigenvectors of a matrix being those that, when multipled by the matrix, equal a scalar times themselves, as you saw yesterday!\n",

+ "When differentiated or integrated, some functions, like the exponential function, equal a scalar times the same function. This is a similar idea to eigenvectors of a matrix being those that, when multiplied by the matrix, equal a scalar times themselves, as you saw yesterday!\n",

"\n",

"When\n",

"\n",

@@ -679,15 +677,11 @@

"execution": {}

},

"source": [

- "## Section 2.1: Analytical Differentiation\n",

- "\n",

- "*Estimated timing to here from start of tutorial: 20 min*\n",

+ "When we find the derivative analytically, we obtain the exact formula for the derivative function.\n",

"\n",

- "When we find the derivative analytically, we are finding the exact formula for the derivative function.\n",

+ "To do this, instead of having to do some fancy math every time, we can often consult [an online resource](https://en.wikipedia.org/wiki/Differentiation_rules) for a list of common derivatives, in this case, our trusty friend Wikipedia.\n",

"\n",

- "To do this, instead of having to do some fancy math every time, we can often consult [an online resource](https://en.wikipedia.org/wiki/Differentiation_rules) for a list of common derivatives, in this case our trusty friend Wikipedia.\n",

- "\n",

- "If I told you to find the derivative of $f(t) = t^3$, you could consult that site and find in Section 2.1, that if $f(t) = t^n$, then $\\frac{d(f(t))}{dt} = nt^{n-1}$. So you would be able to tell me that the derivative of $f(t) = t^3$ is $\\frac{d(f(t))}{dt} = 3t^{2}$.\n",

+ "If I told you to find the derivative of $f(t) = t^3$, you could consult that site and find in Section 2.1 that if $f(t) = t^n$, then $\\frac{d(f(t))}{dt} = nt^{n-1}$. So you would be able to tell me that the derivative of $f(t) = t^3$ is $\\frac{d(f(t))}{dt} = 3t^{2}$.\n",

"\n",

"This list of common derivatives often contains only very simple functions. Luckily, as we'll see in the next two sections, we can often break the derivative of a complex function down into the derivatives of more simple components."

]

@@ -717,7 +711,7 @@

"source": [

"#### Coding Exercise 2.1.1: Derivative of the postsynaptic potential alpha function\n",

"\n",

- "Let's use the product rule to get the derivative of the post-synaptic potential alpha function. As we saw in Video 3, the shape of the postsynaptic potential is given by the so called alpha function:\n",

+ "Let's use the product rule to get the derivative of the postsynaptic potential alpha function. As we saw in Video 3, the shape of the postsynaptic potential is given by the so-called alpha function:\n",

"\n",

"\\begin{equation}\n",

"f(t) = t \\cdot \\text{exp}\\left( -\\frac{t}{\\tau} \\right)\n",

@@ -725,7 +719,7 @@

"\n",

"Here $f(t)$ is a product of $t$ and $\\text{exp} \\left(-\\frac{t}{\\tau} \\right)$. So we can have $u(t) = t$ and $v(t) = \\text{exp} \\left( -\\frac{t}{\\tau} \\right)$ and use the product rule!\n",

"\n",

- "We have defined $u(t)$ and $v(t)$ in the code below, in terms of the variable $t$ which is an array of time steps from 0 to 10. Define $\\frac{du}{dt}$ and $\\frac{dv}{dt}$, the compute the full derivative of the alpha function using the product rule. You can always consult wikipedia to figure out $\\frac{du}{dt}$ and $\\frac{dv}{dt}$!"

+ "We have defined $u(t)$ and $v(t)$ in the code below for the variable $t$, an array of time steps from 0 to 10. Define $\\frac{du}{dt}$ and $\\frac{dv}{dt}$, then compute the full derivative of the alpha function using the product rule. You can always consult Wikipedia to figure out $\\frac{du}{dt}$ and $\\frac{dv}{dt}$!"

]

},

{

@@ -818,7 +812,7 @@

"source": [

"### Section 2.1.2: Chain Rule\n",

"\n",

- "Many times we encounter situations in which the variable $a$ is changing with time ($t$) and affecting another variable $r$. How can we estimate the derivative of $r$ with respect to $a$ i.e. $\\frac{dr}{da} = ?$\n",

+ "We often encounter situations in which the variable $a$ changes with time ($t$) and affects another variable $r$. How can we estimate the derivative of $r$ with respect to $a$, i.e., $\\frac{dr}{da} = ?$\n",

"\n",

"To calculate $\\frac{dr}{da}$ we use the [Chain Rule](https://en.wikipedia.org/wiki/Chain_rule).\n",

"\n",

@@ -826,9 +820,9 @@

"\\frac{dr}{da} = \\frac{dr}{dt}\\cdot\\frac{dt}{da}\n",

"\\end{equation}\n",

"\n",

- "That is, we calculate the derivative of both variables with respect to t and divide that derivative of $r$ by that derivative of $a$.\n",

+ "That is, we calculate the derivatives of $r$ and $a$ with respect to $t$ and divide the derivative of $r$ by the derivative of $a$, since $\\frac{dt}{da} = 1 / \\frac{da}{dt}$.\n",

"\n",

- "We can also use this formula to simplify taking derivatives of complex functions! We can make an arbitrary function t so that we can compute more simple derivatives and multiply, as we will see in this exercise."

+ "We can also use this formula to simplify taking derivatives of complex functions! We can introduce an intermediate function $t$ so that we compute simpler derivatives and multiply them, as we will see in this exercise."

]

},

{

@@ -845,9 +839,9 @@

"r(a) = e^{a^4 + 1}\n",

"\\end{equation}\n",

"\n",

- "What is $\\frac{dr}{da}$? This is a more complex function so we can't simply consult a table of common derivatives. Can you use the chain rule to help?\n",

+ "What is $\\frac{dr}{da}$? This is a more complex function, so we can't simply consult a table of common derivatives. Can you use the chain rule to help?\n",

"\n",

- "**Hint:** we didn't define t but you could set t equal to the function in the exponent."

+ "**Hint:** We didn't define $t$, but you could set $t$ equal to the function in the exponent."

]

},

{

@@ -875,7 +869,7 @@

"dr/da = dr/dt * dt/da\n",

" = e^t(4a^3)\n",

" = 4a^3e^{a^4 + 1}\n",

- "\"\"\""

+ "\"\"\";"

]

},

{
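The chain-rule result in the hunk above, $\frac{dr}{da} = 4a^3e^{a^4 + 1}$, can be checked numerically. This is a minimal sketch; the function names, the test points, and the central-difference check are illustrative choices, not part of the tutorial.

```python
import math

def r(a):
    """r(a) = exp(a**4 + 1), the function from the exercise."""
    return math.exp(a**4 + 1)

def dr_da_chain(a):
    """Chain rule with t = a**4 + 1: dr/dt = exp(t) and dt/da = 4 * a**3."""
    t = a**4 + 1
    return math.exp(t) * 4 * a**3

def dr_da_numeric(a, h=1e-6):
    """Central finite difference, used only as an independent check."""
    return (r(a + h) - r(a - h)) / (2 * h)

# Compare the analytical and numerical derivatives at a few points
for a in (0.5, 1.0, 1.2):
    print(a, dr_da_chain(a), dr_da_numeric(a))
```

The two columns should agree to several significant digits, which suggests the substitution $t = a^4 + 1$ was applied correctly.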

@@ -901,7 +895,7 @@

"\n",

"There is a useful Python library for getting the analytical derivatives of functions: SymPy. We actually used this in Interactive Demo 1, under the hood.\n",

"\n",

- "See the following cell for an example of setting up a sympy function and finding the derivative."

+ "See the following cell for an example of setting up a SymPy function and finding the derivative."

]

},

{

@@ -955,9 +949,9 @@

"source": [

"### Interactive Demo 2.2: Numerical Differentiation of the Sine Function\n",

"\n",

- "Below, we find the numerical derivative of the sine function for different values of $h$, and and compare the result the analytical solution.\n",

+ "Below, we find the numerical derivative of the sine function for different values of $h$ and compare the result to the analytical solution.\n",

"\n",

- "- What values of h result in more accurate numerical derivatives?"

+ "* What values of $h$ result in more accurate numerical derivatives?"

]

},

{
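A small sketch of the comparison the demo above asks for. It assumes a forward-difference estimate, $(f(t+h) - f(t))/h$, which may differ from the scheme used in the tutorial's hidden code; the evaluation point `t0` and the list of step sizes are illustrative.

```python
import math

def forward_difference(f, t, h):
    """Approximate df/dt at t as (f(t + h) - f(t)) / h."""
    return (f(t + h) - f(t)) / h

t0 = 1.0                      # illustrative evaluation point
exact = math.cos(t0)          # analytical derivative of sin(t)

# Record the absolute error of the estimate for each step size
errors = {}
for h in (1.0, 0.1, 0.01, 0.001):
    approx = forward_difference(math.sin, t0, h)
    errors[h] = abs(approx - exact)
    print(f"h = {h:<6} estimate = {approx:.6f}  error = {errors[h]:.2e}")
```

Comparing the printed errors against the step sizes gives a direct answer to the question about which values of $h$ are more accurate.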

@@ -1041,7 +1035,7 @@

"\n",

"*Estimated timing to here from start of tutorial: 34 min*\n",

"\n",

- "When we inject a constant current (DC) in a neuron, its firing rate changes as a function of strength of the injected current. This is called the **input-output transfer function** or just the *transfer function* or *I/O Curve* of the neuron. For most neurons this can be approximated by a sigmoid function e.g.,:\n",

+ "When we inject a constant current (DC) into a neuron, its firing rate changes as a function of the strength of the injected current. This is called the **input-output transfer function** or just the *transfer function* or *I/O Curve* of the neuron. For most neurons, this can be approximated by a sigmoid function, e.g.:\n",

"\n",

"\\begin{equation}\n",

"rate(I) = \\frac{1}{1+\\text{exp}(-a \\cdot (I-\\theta))} - \\frac{1}{\\text{exp}(a \\cdot \\theta)} + \\eta\n",

@@ -1051,7 +1045,7 @@

"\n",

"*You will visit this equation in a different context in Week 3*\n",

"\n",

- "The slope of a neurons input-output transfer function, i.e., $\\frac{d(r(I))}{dI}$, is called the **gain** of the neuron, as it tells how the neuron output will change if the input is changed. In other words, the slope of the transfer function tells us in which range of inputs the neuron output is most sensitive to changes in its input."

+ "The slope of a neuron's input-output transfer function, i.e., $\\frac{d(r(I))}{dI}$, is called the **gain** of the neuron, as it tells us how the neuron's output will change if the input is changed. In other words, the slope of the transfer function tells us in which range of inputs the neuron output is most sensitive to changes in its input."

]

},

{
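The gain described above can be estimated with a few lines of code. This sketch uses the transfer function exactly as written in the text, with $\eta = 0$; the parameter values, the current grid, and the forward-difference step are assumptions made for illustration.

```python
import math

def rate(current, a=1.2, theta=5.0, eta=0.0):
    """Sigmoid transfer function from the text; eta is set to 0 here."""
    return (1 / (1 + math.exp(-a * (current - theta)))
            - 1 / math.exp(a * theta) + eta)

def gain(current, h=0.01, **params):
    """Forward-difference estimate of d(rate)/dI with step h."""
    return (rate(current + h, **params) - rate(current, **params)) / h

a, theta = 1.2, 5.0                                  # illustrative parameters
currents = [k * 0.01 for k in range(1001)]           # I from 0 to 10
gains = [gain(i, a=a, theta=theta) for i in currents]

# Locate the input current at which the estimated gain is largest
peak_gain = max(gains)
peak_current = currents[gains.index(peak_gain)]
print(f"peak gain {peak_gain:.4f} at I = {peak_current:.2f}")
```

Rerunning with different `a` and `theta` and comparing the printed peak with your prediction is one way to explore how each parameter shapes the gain.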

@@ -1062,9 +1056,9 @@

"source": [

"### Interactive Demo 2.3: Calculating the Transfer Function and Gain of a Neuron\n",

"\n",

- "In the following demo, you can estimate the gain of the following neuron transfer function using numerical differentiaton. We will use our timestep as h. See the cell below for a function that computes the rate via the fomula above and then the gain using numerical differentiation. In the following cell, you can play with the parameters $a$ and $\\theta$ to change the shape of the transfer functon (and see the resulting gain function). You can also set $I_{mean}$ to see how the slope is computed for that value of I. In the left plot, the red vertical lines are the two values of the current being used to compute the slope, while the blue lines point to the corresponding ouput firing rates.\n",

+ "In the following demo, you can estimate the gain of the following neuron transfer function using numerical differentiation. We will use our timestep as $h$. See the cell below for a function that computes the rate via the formula above and then the gain using numerical differentiation. In the following cell, you can play with the parameters $a$ and $\\theta$ to change the shape of the transfer function (and see the resulting gain function). You can also set $I_{mean}$ to see how the slope is computed for that value of $I$. In the left plot, the red vertical lines are the two values of the current being used to calculate the slope, while the blue lines point to the corresponding output firing rates.\n",

"\n",

- "Change the parameters of the neuron transfer function (i.e., $a$ and $\\theta$) and see if you can predict the value of $I$ for which the neuron has maximal slope and which parameter determines the peak value of the gain.\n",

+ "Change the parameters of the neuron transfer function (i.e., $a$ and $\\theta$) and see if you can predict the value of $I$ for which the neuron has a maximal slope and which parameter determines the peak value of the gain.\n",

"\n",

"1. Ensure you understand how the right plot relates to the left!\n",

"2. How does $\\theta$ affect the transfer function and gain?\n",

@@ -1324,13 +1318,13 @@

"source": [

"### Interactive Demo 3: Visualize partial derivatives\n",

"\n",

- "In the demo below, you can input any function of x and y and then visualize both the function and partial derivatives.\n",

+ "In the demo below, you can input any function of $x$ and $y$ and then visualize both the function and partial derivatives.\n",

"\n",

- "We visualized the 2-dimensional function as a surface plot in which the values of the function are rendered as color. Yellow represents a high value and blue represents a low value. The height of the surface also shows the numerical value of the function. A more complete description of 2D surface plots and why we need them is located in Bonus Section 1.1. The first plot is that of our function. And the two bottom plots are the derivative surfaces with respect to $x$ and $y$ variables.\n",

+ "We visualized the 2-dimensional function as a surface plot in which the function values are rendered as color. Yellow represents a high value, and blue represents a low value. The height of the surface also shows the numerical value of the function. A complete description of 2D surface plots and why we need them can be found in Bonus Section 1.1. The first plot is that of our function. And the two bottom plots are the derivative surfaces with respect to $x$ and $y$ variables.\n",

"\n",

"1. Ensure you understand how the plots relate to each other - if not, review the above material\n",

- "2. Can you come up with a function where the partial derivative with respect to x will be a linear plane and the derivative with respect to y will be more curvy?\n",

- "3. What happens to the partial derivatives if there are no terms involving multiplying $x$ and $y$ together?"

+ "2. Can you come up with a function where the partial derivative with respect to $x$ will be a linear plane, and the derivative with respect to $y$ will be more curved?\n",

+ "3. What happens to the partial derivatives if no terms involve multiplying $x$ and $y$ together?"

]

},

{

@@ -1619,7 +1613,7 @@

},

"source": [

"There are other methods of numerical integration, such as\n",

- "**[Lebesgue integral](https://en.wikipedia.org/wiki/Lebesgue_integral)** and **Runge Kutta**. In the Lebesgue integral, we divide the area under the curve into horizontal stripes. That is, instead of the independent variable, the range of the function $f(t)$ is divided into small intervals. In any case, the Riemann sum is the basis of Euler's method of integration for solving ordinary differential equations - something you will do in a later tutorial today."

+ "**[Lebesgue integral](https://en.wikipedia.org/wiki/Lebesgue_integral)** and **Runge-Kutta**. In the Lebesgue integral, we divide the area under the curve into horizontal stripes. That is, instead of the independent variable, the range of the function $f(t)$ is divided into small intervals. In any case, the Riemann sum is the basis of Euler's integration method for solving ordinary differential equations - something you will do in a later tutorial today."

]

},

{
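For reference, a Riemann sum can be sketched in a few lines. The integrand $t^2$ on $[0, 1]$ is an illustrative choice, not one from the tutorial, and the left-endpoint rule is assumed.

```python
def riemann_sum(f, t_start, t_end, h):
    """Left Riemann sum of f on [t_start, t_end] with rectangles of width h."""
    n_steps = round((t_end - t_start) / h)
    return sum(f(t_start + k * h) * h for k in range(n_steps))

def f(t):
    return t**2                      # illustrative integrand

exact = 1 / 3                        # integral of t**2 from 0 to 1

# Record the absolute error of the estimate for each rectangle width
errors = {}
for h in (0.1, 0.01, 0.001):
    estimate = riemann_sum(f, 0.0, 1.0, h)
    errors[h] = abs(estimate - exact)
    print(f"h = {h:<6} estimate = {estimate:.6f}  error = {errors[h]:.2e}")
```

Each tenfold reduction in `h` uses ten times as many rectangles, which illustrates the tradeoff between accuracy and computation.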

@@ -1653,7 +1647,8 @@

},

"source": [

"### Coding Exercise 4.2: Calculating Charge Transfer with Excitatory Input\n",

- "An incoming spike elicits a change in the post-synaptic membrane potential (PSP) which can be captured by the following function:\n",

+ "\n",

+ "An incoming spike elicits a change in the post-synaptic membrane potential (PSP), which can be captured by the following function:\n",

"\n",

"\\begin{equation}\n",

"PSP(t) = J \\cdot t \\cdot \\text{exp}\\left(-\\frac{t-t_{sp}}{\\tau_{s}}\\right)\n",

@@ -1661,7 +1656,7 @@

"\n",

"where $J$ is the synaptic amplitude, $t_{sp}$ is the spike time and $\\tau_s$ is the synaptic time constant.\n",

"\n",

- "Estimate the total charge transfered to the postsynaptic neuron during an PSP with amplitude $J=1.0$, $\\tau_s = 1.0$ and $t_{sp} = 1$ (that is the spike occured at $1$ ms). The total charge will be the integral of the PSP function."

+ "Estimate the total charge transferred to the postsynaptic neuron during a PSP with amplitude $J=1.0$, $\\tau_s = 1.0$ and $t_{sp} = 1$ (that is, the spike occurred at $1$ ms). The total charge will be the integral of the PSP function."

]

},

{

@@ -1905,9 +1900,7 @@

"execution": {}

},

"source": [

- "Notice how the differentiation operation amplifies the fast changes which were contributed by noise. By contrast, the integration operation supresses the fast changing noise. If we perform the same operation of averaging the adjancent samples on the orange trace, we will further smooth the signal. Such sums and subtractions form the basis of digital filters.\n",

- "\n",

- "\n"

+ "Notice how the differentiation operation amplifies the fast changes which were contributed by noise. By contrast, the integration operation suppresses the fast-changing noise. We will further smooth the signal if we perform the same operation of averaging the adjacent samples on the orange trace. Such sums and subtractions form the basis of digital filters."

]

},

{
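The effect described above can be reproduced on a synthetic signal. This is a sketch under stated assumptions: the sine wave, noise level, time step, and seed are all illustrative, and smoothing is implemented as the average of adjacent samples, as mentioned in the text.

```python
import math
import random
import statistics

random.seed(0)                       # fixed seed so the sketch is repeatable
h = 0.01                             # time step (illustrative)
noise_sd = 0.05                      # noise level (illustrative)
t = [k * h for k in range(1000)]
clean = [math.sin(2 * math.pi * tk) for tk in t]
noisy = [c + random.gauss(0, noise_sd) for c in clean]

# Differentiation: difference of adjacent samples divided by h
deriv = [(noisy[k + 1] - noisy[k]) / h for k in range(len(noisy) - 1)]
deriv_clean = [(clean[k + 1] - clean[k]) / h for k in range(len(clean) - 1)]

# Smoothing: average of adjacent samples
smooth = [(noisy[k + 1] + noisy[k]) / 2 for k in range(len(noisy) - 1)]
smooth_clean = [(clean[k + 1] + clean[k]) / 2 for k in range(len(clean) - 1)]

# Spread of the noise that survives each operation
noise_in = statistics.pstdev([n - c for n, c in zip(noisy, clean)])
noise_deriv = statistics.pstdev([d - c for d, c in zip(deriv, deriv_clean)])
noise_smooth = statistics.pstdev([s - c for s, c in zip(smooth, smooth_clean)])
print(noise_in, noise_deriv, noise_smooth)
```

The noise left after differencing should be much larger than the input noise, while the noise left after averaging should be smaller.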

@@ -1921,13 +1914,13 @@

"\n",

"*Estimated timing of tutorial: 80 minutes*\n",

"\n",

- "* Geometrically, integration is the area under the curve and differentiation is the slope of the function\n",

+ "* Geometrically, integration is the area under the curve, and differentiation is the slope of the function\n",

"* The concepts of slope and area can be easily extended to higher dimensions. We saw this when we took the derivative of a 2-dimensional transfer function of a neuron\n",

- "* Numerical estimates of both derivatives and integrals require us to choose a time step $h$. The smaller the $h$, the better the estimate, but for small values of $h$, more computations are needed. So there is always some tradeoff.\n",

- "* Partial derivatives are just the estimate of the slope along one of the many dimensions of the function. We can combine the slopes in different directions using vector sum to find the direction of the slope.\n",

- "* Because the derivative of a function is zero at the local peak or trough, derivatives are used to solve optimization problems.\n",

- "* When thinking of signal, integration operation is equivalent to smoothening the signals (i.e., remove fast changes)\n",

- "* Differentiation operations remove slow changes and enhance high frequency content of a signal"

+ "* Numerical estimates of both derivatives and integrals require us to choose a time step $h$. The smaller the $h$, the better the estimate, but more computations are needed for small values of $h$. So there is always some tradeoff\n",

+ "* Partial derivatives are just the estimate of the slope along one of the many dimensions of the function. We can combine the slopes in different directions using vector sum to find the direction of the slope\n",

+ "* Because the derivative of a function is zero at the local peak or trough, derivatives are used to solve optimization problems\n",

+ "* When thinking of signals, the integration operation is equivalent to smoothing the signal (i.e., removing fast changes)\n",

+ "* Differentiation operations remove slow changes and enhance the high-frequency content of a signal"

]

},

{

@@ -1950,11 +1943,11 @@

"source": [

"## Bonus Section 1.1: Understanding 2D plots\n",

"\n",

- "Let's take the example of a neuron driven by excitatory and inhibitory inputs. Because this is for illustrative purposes, we will not go in the details of the numerical range of the input and output variables.\n",

+ "Let's take the example of a neuron driven by excitatory and inhibitory inputs. Because this is for illustrative purposes, we will not go into the details of the numerical range of the input and output variables.\n",

"\n",

- "In the function below, we assume that the firing rate of a neuron increases motonotically with an increase in excitation and decreases monotonically with an increase in inhibition. The inhibition is modelled as a subtraction. Like for the 1-dimensional transfer function, here we assume that we can approximate the transfer function as a sigmoid function.\n",

+ "In the function below, we assume that the firing rate of a neuron increases monotonically with an increase in excitation and decreases monotonically with an increase in inhibition. The inhibition is modeled as a subtraction. As for the 1-dimensional transfer function, we assume that we can approximate the transfer function as a sigmoid function.\n",

"\n",

- "To evaluate the partial derivatives we can use the same numerical differentiation as before but now we apply it to each row and column separately."

+ "We can use the same numerical differentiation as before to evaluate the partial derivatives, but now we apply it to each row and column separately."

]

},

{
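Applying the numerical derivative to each row and column separately can be sketched as follows. The function `f`, the grid, and the step `h` are illustrative assumptions; the tutorial's own transfer function is not reproduced here.

```python
def f(x, y):
    return x**2 + x * y              # illustrative function

h = 0.1
xs = [k * h for k in range(5)]       # grid along x (columns)
ys = [k * h for k in range(5)]       # grid along y (rows)
grid = [[f(x, y) for x in xs] for y in ys]

# Differentiate along each row: neighbouring columns differ in x only
df_dx = [[(row[j + 1] - row[j]) / h for j in range(len(xs) - 1)]
         for row in grid]

# Differentiate along each column: neighbouring rows differ in y only
df_dy = [[(grid[i + 1][j] - grid[i][j]) / h for j in range(len(xs))]
         for i in range(len(ys) - 1)]

print(df_dx[1][1], df_dy[0][2])
```

Because `f` is linear in `y`, the estimate of the partial derivative with respect to `y` matches the exact value `x`, while the estimate with respect to `x` carries an error proportional to `h`.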

@@ -2039,15 +2032,15 @@

"execution": {}

},

"source": [

- "In the **Top-Left** plot, we see how the neuron output rate increases as a function of excitatory input (e.g., the blue trace). However, as we increase inhibition, expectedly the neuron output decreases and the curve is shifted downwards. This constant shift in the curve suggests that the effect of inhibition is subtractive, and the amount of subtraction does not depend on the neuron output.\n",

+ "The **Top-Left** plot shows how the neuron output rate increases as a function of excitatory input (e.g., the blue trace). However, as we increase inhibition, the neuron output decreases, as expected, and the curve is shifted downwards. This constant shift in the curve suggests that the effect of inhibition is subtractive, and the amount of subtraction does not depend on the neuron output.\n",

"\n",

"We can alternatively see how the neuron output changes with respect to inhibition and study how excitation affects that. This is visualized in the **Top-Right** plot.\n",

"\n",

"This type of plotting is very intuitive, but it becomes very tedious to visualize when there are larger numbers of lines to be plotted. A nice solution to this visualization problem is to render the data as color, as surfaces, or both.\n",

"\n",

- "This is what we have done in the plot on the bottom. The colormap on the right shows the output of the neuron as a function of inhibitory input and excitatory input. The output rate is shown both as height along the z-axis and as the color. Blue means low firing rate and yellow means high firing rate (see the color bar).\n",

+ "This is what we have done in the plot at the bottom. The color map on the right shows the neuron's output as a function of inhibitory and excitatory input. The output rate is shown both as height along the z-axis and as the color. Blue means a low firing rate, and yellow represents a high firing rate (see the color bar).\n",

"\n",

- "In the above plot, the output rate of the neuron goes below zero. This is of course not physiological as neurons cannot have negative firing rates. In models, we either choose the operating point such that the output does not go below zero, or else we clamp the neuron output to zero if it goes below zero. You will learn about it more in Week 2."

+ "In the above plot, the output rate of the neuron goes below zero. This is, of course, not physiological, as neurons cannot have negative firing rates. In models, we either choose the operating point so that the output does not go below zero, or we clamp the neuron output to zero if it goes below zero. You will learn about it more in Week 2."

]

},

{

@@ -2142,8 +2135,7 @@

"execution": {}

},

"source": [

- "Is this what you expected? Change the parameters in the function to generate the 2-d transfer function of the neuron for different excitatory and inhibitory $a$ and $\\theta$ and test your intuitions Can you relate this shape of the partial derivative surface to the gain of the 1-d transfer-function of a neuron (Section 2)?\n",

- "\n"

+ "Is this what you expected? Change the parameters in the function to generate the 2-D transfer function of the neuron for different excitatory and inhibitory $a$ and $\\theta$ and test your intuitions. Can you relate this shape of the partial derivative surface to the gain of the 1-D transfer function of a neuron (Section 2)?"

]

},

{

diff --git a/tutorials/W0D4_Calculus/W0D4_Tutorial2.ipynb b/tutorials/W0D4_Calculus/W0D4_Tutorial2.ipynb

index 852bd30..a86e9dc 100644

--- a/tutorials/W0D4_Calculus/W0D4_Tutorial2.ipynb

+++ b/tutorials/W0D4_Calculus/W0D4_Tutorial2.ipynb

@@ -2,7 +2,7 @@

"cells": [

{

"cell_type": "markdown",

- "id": "74ca2e85",

+ "id": "bc470a90",

"metadata": {

"colab_type": "text",

"execution": {},

@@ -54,18 +54,18 @@

"\n",

"*Estimated timing of tutorial: 45 minutes*\n",

"\n",

- "A great deal of neuroscience can be modelled using differential equations, from gating channels to single neurons to a network of neurons to blood flow, to behaviour. A simple way to think about differential equations is they are equations that describe how something changes.\n",

+ "A great deal of neuroscience can be modeled using differential equations, from gating channels to single neurons to a network of neurons to blood flow to behavior. A simple way to think about differential equations is that they are equations that describe how something changes.\n",

"\n",

- "The most famous of these in neuroscience is the Nobel Prize winning Hodgkin Huxley equation, which describes a neuron by modelling the gating of each axon. But we will not start there; we will start a few steps back.\n",

+ "The most famous of these in neuroscience is the Nobel Prize-winning Hodgkin-Huxley equation, which describes a neuron by modeling the gating of its ion channels. But we will not start there; we will start a few steps back.\n",

"\n",

- "Differential Equations are mathematical equations that describe how something like population or a neuron changes over time. The reason why differential equations are so useful is they can generalise a process such that one equation can be used to describe many different outcomes.\n",

- "The general form of a first order differential equation is:\n",

+ "Differential equations are mathematical equations that describe how something like a population or a neuron changes over time. They are so useful because they can generalize a process such that one equation can be used to describe many different outcomes.\n",

+ "The general form of a first-order differential equation is:\n",

"\n",

"\\begin{equation}\n",

"\\frac{d}{dt}y(t) = f\\left( t,y(t) \\right)\n",

"\\end{equation}\n",

"\n",

- "which can be read as \"the change in a process $y$ over time $t$ is a function $f$ of time $t$ and itself $y$\". This might initially seem like a paradox as you are using a process $y$ you want to know about to describe itself, a bit like the MC Escher drawing of two hands painting [each other](https://en.wikipedia.org/wiki/Drawing_Hands). But that is the beauty of mathematics - this can be solved some of time, and when it cannot be solved exactly we can use numerical methods to estimate the answer (as we will see in the next tutorial).\n",

+ "which can be read as \"the change in a process $y$ over time $t$ is a function $f$ of time $t$ and itself $y$\". This might initially seem like a paradox, as you are using a process $y$ you want to know about to describe itself, a bit like the M. C. Escher drawing of two hands drawing [each other](https://en.wikipedia.org/wiki/Drawing_Hands). But that is the beauty of mathematics: such an equation can sometimes be solved exactly, and when it cannot, we can use numerical methods to estimate the answer (as we will see in the next tutorial).\n",

"\n",

"In this tutorial, we will see how __differential equations are motivated by observations of physical responses__. We will break down the population differential equation, then the integrate and fire model, which leads nicely into raster plots and frequency-current curves to rate models.\n",

"\n",

@@ -73,7 +73,7 @@

"- Get an intuitive understanding of a linear population differential equation (humans, not neurons)\n",

"- Visualize the relationship between the change in population and the population\n",

"- Breakdown the Leaky Integrate and Fire (LIF) differential equation\n",

- "- Code the exact solution of an LIF for a constant input\n",

+ "- Code the exact solution of a LIF for a constant input\n",

"- Visualize and listen to the response of the LIF for different inputs"

]

},

@@ -556,6 +556,7 @@

},

"source": [

"### Think! 1.1: Interpretating the behavior of a linear population equation\n",

+ "\n",

"Using the plot below of change of population $\\frac{d}{dt} p(t) $ as a function of population $p(t)$ with birth-rate $\\alpha=0.3$, discuss the following questions:\n",

"\n",

"1. Why is the population differential equation known as a linear differential equation?\n",

@@ -638,13 +639,14 @@

},

"source": [

"### Section 1.1.1: Initial condition\n",

- "The linear population differential equation is known as an initial value differential equation because we need an initial population value to solve it. Here we will set our initial population at time $0$ to $1$:\n",

+ "\n",

+ "The linear population differential equation is an initial value differential equation because we need an initial population value to solve it. Here we will set our initial population at time $0$ to $1$:\n",

"\n",

"\\begin{equation}\n",

"p(0) = 1\n",

"\\end{equation}\n",

"\n",

- "Different initial conditions will lead to different answers, but they will not change the differential equation. This is one of the strengths of a differential equation."

+ "Different initial conditions will lead to different answers but will not change the differential equation. This is one of the strengths of a differential equation."

]

},

{

@@ -655,7 +657,8 @@

},

"source": [

"### Section 1.1.2: Exact Solution\n",

- "To calculate the exact solution of a differential equation, we must integrate both sides. Instead of numerical integration (as you delved into in the last tutorial), we will first try to solve the differential equations using analytical integration. As with derivatives, we can find analytical integrals of simple equations by consulting [a list](https://en.wikipedia.org/wiki/Lists_of_integrals). We can then get integrals for more complex equations using some mathematical tricks - the harder the equation the more obscure the trick.\n",

+ "\n",

+ "To calculate the exact solution of a differential equation, we must integrate both sides. Instead of numerical integration (as you delved into in the last tutorial), we will first try to solve the differential equations using analytical integration. As with derivatives, we can find analytical integrals of simple equations by consulting [a list](https://en.wikipedia.org/wiki/Lists_of_integrals). We can then get integrals for more complex equations using some mathematical tricks - the harder the equation, the more obscure the trick.\n",

"\n",

"The linear population equation\n",

"\\begin{equation}\n",

@@ -674,9 +677,9 @@

"\\text{\"Population\"} = \\text{\"grows/declines exponentially as a function of time and birth rate\"}.\n",

"\\end{equation}\n",

"\n",

- "Most differential equations do not have a known exact solution, so in the next tutorial on numerical methods we will show how the solution can be estimated.\n",

+ "Most differential equations do not have a known exact solution, so we will show how the solution can be estimated in the next tutorial on numerical methods.\n",

"\n",

- "A small aside: a good deal of progress in mathematics was due to mathematicians writing taunting letters to each other saying they had a trick that could solve something better than everyone else. So do not worry too much about the tricks."

+ "A small aside: a good deal of progress in mathematics was due to mathematicians writing taunting letters to each other, saying they had a trick to solve something better than everyone else. So do not worry too much about the tricks."

]

},

{

@@ -742,7 +745,7 @@

"\n",

"*Estimated timing to here from start of tutorial: 12 min*\n",

"\n",

- "One of the goals when designing a differential equation is to make it generalisable. Which means that the differential equation will give reasonable solutions for different countries with different birth rates $\\alpha$.\n"

+ "One of the goals when designing a differential equation is to make it generalizable. This means that the differential equation will give reasonable solutions for different countries with different birth rates $\\alpha$."

]

},

{

@@ -754,7 +757,7 @@

"source": [

"### Interactive Demo 1.2: Parameter Change\n",

"\n",

- "Play with the widget to see the relationship between $\\alpha$ and the population differential equation as a function of population (left-hand side), and the population solution as a function of time (right-hand side). Pay close attention to the transition point from positive to negative.\n",

+ "Play with the widget to see the relationship between $\\alpha$ and the population differential equation as a function of population (left-hand side) and the population solution as a function of time (right-hand side). Pay close attention to the transition point from positive to negative.\n",

"\n",

"How do changing parameters of the population equation affect the outcome?\n",

"\n",

@@ -823,17 +826,15 @@

"execution": {}

},

"source": [

- "The population differential equation is an over-simplification and has some very obvious limitations:\n",

- "1. Population growth is not exponential as there are limited number of resources so the population will level out at some point.\n",

- "2. It does not include any external factors on the populations like weather, predators and preys.\n",

- "\n",

- "These kind of limitations can be addressed by extending the model.\n",

- "\n",

+ "The population differential equation is an oversimplification and has some pronounced limitations:\n",

+ "1. Population growth cannot stay exponential because resources are limited, so the population will level out at some point.\n",

+ "2. It does not include any external factors acting on the population, like weather, predators, and prey.\n",

"\n",

- "While it might not seem that the population equation has direct relevance to neuroscience, a similar equation is used to describe the accumulation of evidence for decision making. This is known as the Drift Diffusion Model and you will see in more detail in the Linear System day in Neuromatch (W2D2).\n",