
Auto Segmentation error in 'brats_segmentation_v0.3.3' model #239

Comments

|

Hi, the error is |

I don't see a problem at line 63 of decoder.py, where the error was reported. Is it correct to modify the files as follows? In train.json and in metadata.json:

|

Hi @ulphypro, I suspect "line 63" here refers to the JSON file that produced the error, not to |
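The line and column in a `JSONDecodeError` refer to the JSON text being parsed, not to a line of `decoder.py`. A minimal sketch of how to print the offending line follows. It assumes a hypothetical helper name, `find_json_error`, and an inline example string rather than the actual bundle config:

```python
import json


def find_json_error(text):
    """Return (lineno, colno, offending_line) for invalid JSON, or None if it parses.

    `find_json_error` is a hypothetical helper name, not part of MONAI or MONAI Label.
    """
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # err.lineno and err.colno are 1-based positions within `text`
        lines = text.splitlines()
        return err.lineno, err.colno, lines[err.lineno - 1]
    return None


# Illustrative fragment only; it mimics the mistake discussed in this thread.
broken = '{\n  "keys": [\n    "image",\n    "label",\n    "ensure_channel_first": true\n  ]\n}'
print(find_json_error(broken))
```

For a file on disk, the same helper can be given the file's contents (for example the text of the edited train.json), so the reported line number can be matched against the config in an editor.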

|

Thank you, @yiheng-wang-nv. I edited three files in total: inference.json, train.json and metadata.json, all included in 'brats_mri_segmentation_v0.3.3'. I can't find a problem in the edited code, so I don't know why this error occurs: '[2022-11-22 15:37:19,967] [5628] [MainThread] [ERROR] (uvicorn.error:56) - Application startup failed. Exiting.' The edited inference.json, train.json and metadata.json are as follows.

|

Edited inference.json code included in brats_mri_segmentation_v0.3.3 |

|

Edited train.json code included in brats_mri_segmentation_v0.3.3 { |

|

Edited train.json code included in brats_mri_segmentation_v0.3.3 { |

|

@yiheng-wang-nv I edited only 6 lines in total, in train.json and metadata.json.

|

This part is wrong: "ensure_channel_first" should not be inside the "keys" list; it is a separate argument.
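A minimal sketch of the corrected layout, assuming the transform entry otherwise looks like the fragment posted in the issue description. The JSON is embedded in a Python string only so that it can be parsed and checked:

```python
import json

# Corrected transform entry: "ensure_channel_first" is a sibling of "keys",
# not an element of the "keys" list.
fixed_entry = """
{
    "_target_": "LoadImaged",
    "keys": ["image", "label"],
    "ensure_channel_first": true
}
"""

entry = json.loads(fixed_entry)  # parses without raising JSONDecodeError
print(entry["keys"])
print(entry["ensure_channel_first"])
```

Placing `"ensure_channel_first": true` inside the square brackets makes the parser see a `:` where it expects `,` or `]`, which matches the "Expecting ',' delimiter" message in the reported traceback.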

|

Someone uploaded a demo video showing how to edit the JSON files: Project-MONAI/MONAILabel#1051. That person put "ensure_channel_first" inside "keys". Where should I put "ensure_channel_first" instead?

|

|

Thank you for helping me, @yiheng-wang-nv. I edited the inference.json and train.json code as you suggested.

[2022-11-22 16:24:42,505] [12688] [MainThread] [ERROR] (uvicorn.error:119) - Traceback (most recent call last): [2022-11-22 16:24:42,506] [12688] [MainThread] [ERROR] (uvicorn.error:56) - Application startup failed. Exiting. |

|

Dear @yiheng-wang-nv |

|

This part of the inference config is wrong: the comma after "image" will raise the error. Also, since only one key named "image" is used, there is no need to put it in a list. or |
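A short sketch of the point about the comma, assuming the mistake was a trailing comma left inside the list (the actual edited file was not posted in this thread). The helper name `parses` is hypothetical:

```python
import json


def parses(text):
    """Return True if `text` is valid JSON, False otherwise (hypothetical helper)."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


# Standard JSON does not allow a trailing comma before the closing bracket.
with_trailing_comma = '{"_target_": "LoadImaged", "keys": ["image",]}'
# Either a plain string or a one-element list is valid JSON.
single_key = '{"_target_": "LoadImaged", "keys": "image"}'
one_element_list = '{"_target_": "LoadImaged", "keys": ["image"]}'

print(parses(with_trailing_comma), parses(single_key), parses(one_element_list))
```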

I'm sorry, but the same error still occurs after I edited the JSON files: [2022-11-22 17:52:02,288] [956] [MainThread] [ERROR] (uvicorn.error:119) - Traceback (most recent call last): [2022-11-22 17:52:02,288] [956] [MainThread] [ERROR] (uvicorn.error:56) - Application startup failed. Exiting.

|

Hi, we've tried to explain what's happening with this issue in this reply: Project-MONAI/MONAILabel#1051 (comment). I'll try to explain it again here:

The issue is not only with the code, but it is also with the way the dataset was created. Each nifti file should only have one modality, not 4. Hope this helps, |

|

Hi @ulphypro, I modified the inference.json into: and ran the bundle inference command, and no error occurred. Since you did not post the actual JSON file here, I can't investigate further. If possible, please push your changes to your forked repo and attach the link, thanks!

|

Dear @yiheng-wang-nv @diazandr3s, the errors associated with inference.json and train.json no longer occur, although the tumor segment is still not shown in green. Thank you for helping me. bandicam.2022-11-25.10-00-04-016.mp4

Dear @diazandr3s @yiheng-wang-nv, as @diazandr3s mentioned in Project-MONAI/MONAILabel#1051 (comment) and here, I downloaded a single dataset (BraTS2021, which includes flair, t1, t1ce and t2) and ran it in 3D Slicer again. The process was as follows.

(in 3D-Slicer)

However, an error occurs, as shown in the figure below, when I open the dataset by clicking the 'Next Sample' button. An error also appears in Windows PowerShell, as shown below. Traceback (most recent call last): Can you tell me how to solve this error?

|

I downloaded the BraTS2021 dataset as you suggested. Should I run apps/radiology with the BraTS2021 dataset? After starting the MONAI Label server with the command 'monailabel start_server --app apps/radiology --studies datasets/Task01_BrainTumour/imagesTr --conf models segmentation' in Windows PowerShell, I can't use it from 3D Slicer, because it doesn't support brain tumor segmentation. I am also the person who posted Project-MONAI/model-zoo#239.

|

Again, the BraTS dataset has 4 modalities (four image tensors) per patient/case. If you used the Task01 dataset from the Medical Segmentation Decathlon, you're using all four modalities in a single NIfTI file. This does NOT work as-is with Slicer: Project-MONAI/MONAILabel#1051 (comment) This is what I recommend: Project-MONAI/MONAILabel#1051 (comment) Hope that helps,
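One way to picture the difference between a four-modality volume and single-modality volumes is the array-level sketch below. It is not the procedure from the linked comment. It assumes the modalities are stored in the last axis and in the order given by `MODALITY_NAMES`, which should be checked against the dataset.json shipped with the data. The function name `split_modalities` is hypothetical:

```python
import numpy as np

# Assumed channel order for the Decathlon Task01_BrainTumour volumes; check the
# dataset.json shipped with the data before relying on it.
MODALITY_NAMES = ("flair", "t1", "t1ce", "t2")


def split_modalities(volume, names=MODALITY_NAMES):
    """Split a (H, W, D, C) array into a dict of C single-modality (H, W, D) arrays."""
    if volume.ndim != 4 or volume.shape[-1] != len(names):
        raise ValueError(
            f"expected a 4D array with {len(names)} channels last, got shape {volume.shape}"
        )
    return {name: volume[..., i] for i, name in enumerate(names)}


# Random stand-in for one multi-modality case (not real data).
case = np.random.rand(8, 8, 4, 4).astype(np.float32)
single = split_modalities(case)
print({name: arr.shape for name, arr in single.items()})
```

With nibabel installed, the input array would typically come from `nib.load(path).get_fdata()`, and each 3D array could be written back with `nib.Nifti1Image(arr, affine)` and `nib.save`, reusing the affine of the original image.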

|

Hi @ulphypro, has the error been resolved? Should we keep this ticket open?

|

Hi, I'm always getting an error on the 9th epoch of the training. Why is this the case? Val 9/50 221/250 , dice_tc: 0.7409106 , dice_wt: 0.837617 , dice_et: 0.78997755 , time 4.82s

|

Hi @EdenSehatAI, from the error message, it seems this file is missing.

Describe the bug

[2022-11-22 10:24:37,281] [19036] [MainThread] [ERROR] (uvicorn.error:119) - Traceback (most recent call last):

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\starlette\routing.py", line 635, in lifespan

async with self.lifespan_context(app):

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\starlette\routing.py", line 530, in __aenter__

await self.router.startup()

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\starlette\routing.py", line 612, in startup

await handler()

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monailabel\app.py", line 106, in startup_event

instance = app_instance()

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monailabel\interfaces\utils\app.py", line 51, in app_instance

app = c(app_dir=app_dir, studies=studies, conf=conf)

File "C:\Users\AA\apps\monaibundle\main.py", line 90, in __init__

super().__init__(

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monailabel\interfaces\app.py", line 96, in __init__

self.trainers = self.init_trainers() if settings.MONAI_LABEL_TASKS_TRAIN else {}

File "C:\Users\AA\apps\monaibundle\main.py", line 116, in init_trainers

t = BundleTrainTask(b, self.conf)

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monailabel\tasks\train\bundle.py", line 83, in __init__

self.bundle_config.read_config(self.bundle_config_path)

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monai\bundle\config_parser.py", line 300, in read_config

content.update(self.load_config_files(f, **kwargs))

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monai\bundle\config_parser.py", line 403, in load_config_files

for k, v in (cls.load_config_file(i, **kwargs)).items():

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\site-packages\monai\bundle\config_parser.py", line 382, in load_config_file

return json.load(f, **kwargs)

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\json\__init__.py", line 293, in load

return loads(fp.read(),

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\json\__init__.py", line 346, in loads

return _default_decoder.decode(s)

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\json\decoder.py", line 337, in decode

obj, end = self.raw_decode(s, idx=_w(s, 0).end())

File "C:\Users\AA\AppData\Local\Programs\Python\Python39\lib\json\decoder.py", line 353, in raw_decode

obj, end = self.scan_once(s, idx)

json.decoder.JSONDecodeError: Expecting ',' delimiter: line 63 column 43 (char 1947)

[2022-11-22 10:24:37,282] [19036] [MainThread] [ERROR] (uvicorn.error:56) - Application startup failed. Exiting.

To Reproduce

Steps to reproduce the behavior:

Changed the "in_channels" value from 4 to 1 and added "ensure_channel_first": true in inference.json:

"_target_": "SegResNet",

"blocks_down": [

1,

2,

2,

4

],

"blocks_up": [

1,

1,

1

],

"init_filters": 16,

"in_channels": 1,

"out_channels": 3,

"dropout_prob": 0.2

},

"network": "$@network_def.to(@device)",

"preprocessing": {

"_target_": "Compose",

"transforms": [

{

"_target_": "LoadImaged",

"keys": "image",

"ensure_channel_first": true

},

Changed the "in_channels" value from 4 to 1 and added "ensure_channel_first": true in train.json:

"_target_": "SegResNet",

"blocks_down": [

1,

2,

2,

4

],

"blocks_up": [

1,

1,

1

],

"init_filters": 16,

"in_channels": 1,

"out_channels": 3,

"dropout_prob": 0.2

},

.

.

.

"train": {

"preprocessing_transforms": [

{

"_target_": "LoadImaged",

"keys": [

"image",

"label",

"ensure_channel_first": true

]

},

Changed the "num_channels" value under "inputs" from 4 to 1 in metadata.json:

"intended_use": "This is an example, not to be used for diagnostic purposes",

"references": [

"Myronenko, Andriy. '3D MRI brain tumor segmentation using autoencoder regularization.' International MICCAI Brainlesion Workshop. Springer, Cham, 2018. https://arxiv.org/abs/1810.11654"

],

"network_data_format": {

"inputs": {

"image": {

"type": "image",

"format": "magnitude",

"modality": "MR",

"num_channels": 1,

"spatial_shape": [

"8*n",

"8*n",

"8*n"

],

Expected behavior

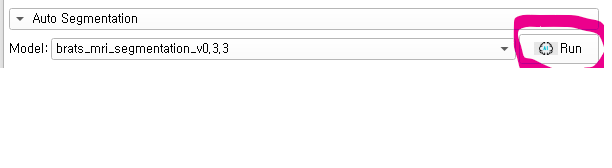

I expected a segmentation result when pressing the 'Run' button under the Auto Segmentation option, after editing inference.json, train.json and metadata.json.

Screenshots

However, the brain tumor is not segmented, as the following figure shows.

Environment

Printing MONAI config...

MONAI version: 1.0.1

Numpy version: 1.23.4

Pytorch version: 1.12.1+cu113

MONAI flags: HAS_EXT = False, USE_COMPILED = False, USE_META_DICT = False

MONAI rev id: 8271a193229fe4437026185e218d5b06f7c8ce69

MONAI file: C:\Users\TRL 3D\AppData\Local\Programs\Python\Python39\lib\site-packages\monai\__init__.py

Optional dependencies:

Pytorch Ignite version: 0.4.10

Nibabel version: 4.0.2

scikit-image version: 0.19.3

Pillow version: 9.3.0

Tensorboard version: 2.10.1

gdown version: 4.5.3

TorchVision version: 0.13.1+cu113

tqdm version: 4.64.1

lmdb version: 1.3.0

psutil version: 5.9.4

pandas version: 1.5.1

einops version: 0.6.0

transformers version: 4.24.0

mlflow version: 2.0.1

pynrrd version: 0.4.3

For details about installing the optional dependencies, please visit:

https://docs.monai.io/en/latest/installation.html#installing-the-recommended-dependencies

================================

Printing system config...

System: Windows

Win32 version: ('10', '10.0.22000', 'SP0', 'Multiprocessor Free')

Win32 edition: Core

Platform: Windows-10-10.0.22000-SP0

Processor: AMD64 Family 25 Model 80 Stepping 0, AuthenticAMD

Machine: AMD64

Python version: 3.9.13

Process name: python.exe

Command: ['C:\Users\TRL 3D\AppData\Local\Programs\Python\Python39\python.exe', '-c', 'import monai; monai.config.print_debug_info()']

Open files: [popenfile(path='C:\Program Files\WindowsApps\Microsoft.LanguageExperiencePackko-KR_22000.29.134.0_neutral__8wekyb3d8bbwe\Windows\System32\ko-KR\39386f74d1967f5c37a5b4171f81c8f3\kernel32.dll.mui', fd=-1), popenfile(path='C:\Program Files\WindowsApps\Microsoft.LanguageExperiencePackko-KR_22000.29.134.0_neutral__8wekyb3d8bbwe\Windows\System32\ko-KR\fe441ef3ed396a241e46f9f354057863\tzres.dll.mui', fd=-1), popenfile(path='C:\Program Files\WindowsApps\Microsoft.LanguageExperiencePackko-KR_22000.29.134.0_neutral__8wekyb3d8bbwe\Windows\System32\ko-KR\a7c1941e6709c10ab525083b61805316\KernelBase.dll.mui', fd=-1)]

Num physical CPUs: 8

Num logical CPUs: 16

Num usable CPUs: 16

CPU usage (%): [10.3, 9.4, 6.9, 3.8, 4.4, 0.6, 1.9, 2.2, 6.6, 15.2, 7.8, 3.2, 2.2, 0.9, 6.0, 41.1]

CPU freq. (MHz): 3301

Load avg. in last 1, 5, 15 mins (%): [0.0, 0.0, 0.0]

Disk usage (%): 60.3

Avg. sensor temp. (Celsius): UNKNOWN for given OS

Total physical memory (GB): 15.4

Available memory (GB): 7.1

Used memory (GB): 8.3

================================

Printing GPU config...

Num GPUs: 1

Has CUDA: True

CUDA version: 11.3

cuDNN enabled: True

cuDNN version: 8302

Current device: 0

Library compiled for CUDA architectures: ['sm_37', 'sm_50', 'sm_60', 'sm_61', 'sm_70', 'sm_75', 'sm_80', 'sm_86', 'compute_37']

GPU 0 Name: NVIDIA GeForce RTX 3070 Laptop GPU

GPU 0 Is integrated: False

GPU 0 Is multi GPU board: False

GPU 0 Multi processor count: 40

GPU 0 Total memory (GB): 8.0

GPU 0 CUDA capability (maj.min): 8.6
