'

-api_key = encoder.request_api_key(username, email)

-

-# Index in 1 line of code

-items = ['dogs', 'toilet', 'paper', 'enjoy walking']

-encoder.add_documents(user, api_key, items)

-

-# Search in 1 line of code and get the most similar results.

-encoder.search('basin')

-```

-

-Add metadata to your search (information about your vectors)

-

-```

-# Add the number of letters of each word

-metadata = [7, 6, 5, 12]

-encoder.add_documents(user, api_key, items=items, metadata=metadata)

-```

-

-#### Using a document-orientated-approach instead:

-

-```

-from vectorhub.encoders.text import Transformer2Vec

-encoder = Transformer2Vec('bert-base-uncased')

-

-from vectorai import ViClient

-vi_client = ViClient(username, api_key)

-docs = vi_client.create_sample_documents(10)

-vi_client.insert_documents('collection_name_here', docs, models={'color': encoder.encode})

-

-# Now we can search through our collection

-vi_client.search('collection_name_here', field='color_vector_', vector=encoder.encode('purple'))

-```

-

----

-

-### Easily access information with your model!

-

-```

-# If you want to additional information about the model, you can access the information below:

-text_encoder.definition.repo

-text_encoder.definition.description

-# If you want all the information in a dictionary, you can call:

-text_encoder.definition.create_dict() # returns a dictionary with model id, description, paper, etc.

-```

-

----

-

-### Turn Off Error-Catching

-

-By default, if encoding errors, it returns a vector filled with 1e-7 so that if you are encoding and then inserting then it errors out.

-However, if you want to turn off automatic error-catching in VectorHub, simply run:

-

-```

-import vectorhub

-vectorhub.options.set_option('catch_vector_errors', False)

-```

-

-If you want to turn it back on again, run:

-```

-vectorhub.options.set_option('catch_vector_errors', True)

-```

-

----

-

-### Instantiate our auto_encoder class as such and use any of the models!

-

-```

-from vectorhub.auto_encoder import AutoEncoder

-encoder = AutoEncoder.from_model('text/bert')

-encoder.encode("Hello vectorhub!")

-[0.47, 0.83, 0.148, ...]

-```

-

-You can choose from our list of models:

-```

-['text/albert', 'text/bert', 'text/labse', 'text/use', 'text/use-multi', 'text/use-lite', 'text/legal-bert', 'audio/fairseq', 'audio/speech-embedding', 'audio/trill', 'audio/trill-distilled', 'audio/vggish', 'audio/yamnet', 'audio/wav2vec', 'image/bit', 'image/bit-medium', 'image/inception', 'image/inception-v2', 'image/inception-v3', 'image/inception-resnet', 'image/mobilenet', 'image/mobilenet-v2', 'image/resnet', 'image/resnet-v2', 'qa/use-multi-qa', 'qa/use-qa', 'qa/dpr', 'qa/lareqa-qa']

-```

-## What are Vectors?

-Common Terminologys when operating with Vectors:

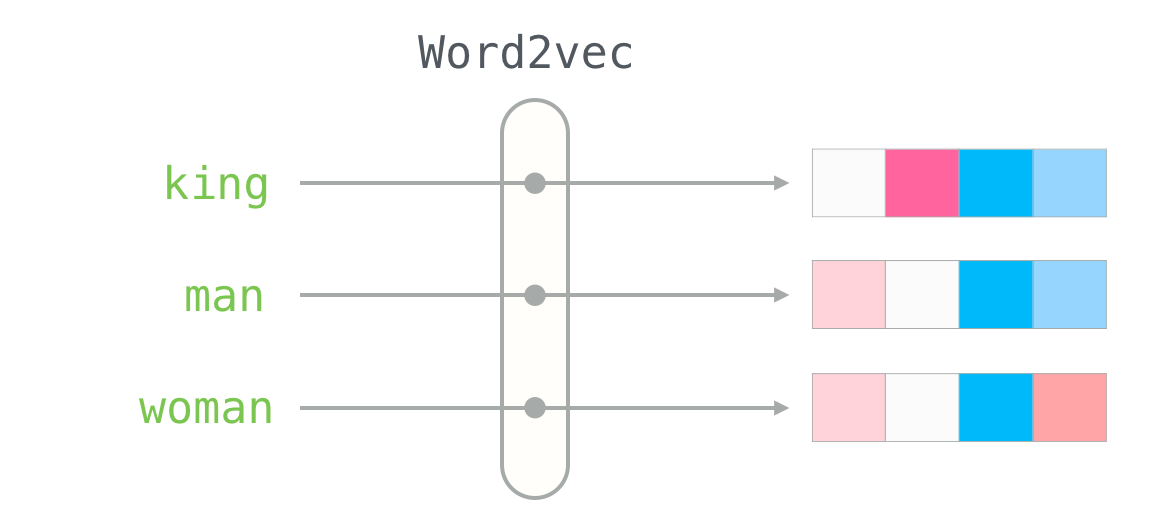

-- Vectors (aka. Embeddings, Encodings, Neural Representation) ~ It is a list of numbers to represent a piece of data.

- E.g. the vector for the word "king" using a Word2Vec model is [0.47, 0.83, 0.148, ...]

-- ____2Vec (aka. Models, Encoders, Embedders) ~ Turns data into vectors e.g. Word2Vec turns words into vector

-

-

-

-

-

-### How can I use vectors?

-

-Vectors have a broad range of applications. The most common use case is to perform semantic vector search and analysing the topics/clusters using vector analytics.

-

-If you are interested in these applications, take a look at [Vector AI](https://github.com/vector-ai/vectorai).

-

-### How can I obtain vectors?

-- Taking the outputs of layers from deep learning models

-- Data cleaning, such as one hot encoding labels

-- Converting graph representations to vectors

-

-### How To Upload Your 2Vec Model

-

-[Read here if you would like to contribute your model!](https://vector-ai.github.io/vectorhub/how_to_add_a_model.html)

-

-## Philosophy

-

-The goal of VectorHub is to provide a flexible yet comprehensive framework that allows people to easily be able to turn their data into vectors in whatever form the data can be in. While our focus is largely on simplicity, customisation should always be an option and the level of abstraction is always up model-uploader as long as the reason is justified. For example - with text, we chose to keep the encoding at the text level as opposed to the token level because selection of text should not be applied at the token level so practitioners are aware of what texts go into the actual vectors (i.e. instead of ignoring a '[next][SEP][wo][##rd]', we are choosing to ignore 'next word' explicitly. We think this will allow practitioners to focus better on what should matter when it comes to encoding.

-

-Similarly, when we are turning data into vectors, we convert to native Python objects. The decision for this is to attempt to remove as many dependencies as possible once the vectors are created - specifically those of deep learning frameworks such as Tensorflow/PyTorch. This is to allow other frameworks to be built on top of it.

-

-## Team

-

-This library is maintained by the Relevance AI - your go-to solution for data science tooling with tvectors. If you are interested in using our API for vector search, visit https://relevance.ai or if you are interested in using API, check out https://relevance.ai its free for public research and open source.

-

-### Credit:

-

-This library wouldn't exist if it weren't for the following libraries and the incredible machine learning community that releases their state-of-the-art models:

+This repository was first set up by RelevanceAI - a platform to build and deploy AI apps and agents.

-1. https://github.com/huggingface/transformers

-2. https://github.com/tensorflow/hub

-3. https://github.com/pytorch/pytorch

-4. Word2Vec image - Alammar, Jay (2018). The Illustrated Transformer [Blog post]. Retrieved from https://jalammar.github.io/illustrated-transformer/

-5. https://github.com/UKPLab/sentence-transformers

+VectorHub is a free and open-source educational platform to learn how to build information retrieval and feature engineering powered by vector embeddings. VectorHub is community-led and maintained by Superlinked - an open-source compute framework , focused on turning complex data into vector embeddings.

diff --git a/assets/monthly_downloads.svg b/assets/monthly_downloads.svg

deleted file mode 100644

index 335c456f..00000000

--- a/assets/monthly_downloads.svg

+++ /dev/null

@@ -1 +0,0 @@

-Monthly Downloads Monthly Downloads 10965 10965 Total Downloads Total Downloads 98552 98552 Weekly Downloads Weekly Downloads 2661 2661

-

-Created by FontForge 20120731 at Mon Oct 24 17:37:40 2016

- By ,,,

-Copyright Dave Gandy 2016. All rights reserved.

-

-

-

-

-

diff --git a/docs/_static/css/fonts/fontawesome-webfont.ttf b/docs/_static/css/fonts/fontawesome-webfont.ttf

deleted file mode 100644

index 35acda2f..00000000

Binary files a/docs/_static/css/fonts/fontawesome-webfont.ttf and /dev/null differ

diff --git a/docs/_static/css/fonts/fontawesome-webfont.woff b/docs/_static/css/fonts/fontawesome-webfont.woff

deleted file mode 100644

index 400014a4..00000000

Binary files a/docs/_static/css/fonts/fontawesome-webfont.woff and /dev/null differ

diff --git a/docs/_static/css/fonts/fontawesome-webfont.woff2 b/docs/_static/css/fonts/fontawesome-webfont.woff2

deleted file mode 100644

index 4d13fc60..00000000

Binary files a/docs/_static/css/fonts/fontawesome-webfont.woff2 and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-bold-italic.woff b/docs/_static/css/fonts/lato-bold-italic.woff

deleted file mode 100644

index 88ad05b9..00000000

Binary files a/docs/_static/css/fonts/lato-bold-italic.woff and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-bold-italic.woff2 b/docs/_static/css/fonts/lato-bold-italic.woff2

deleted file mode 100644

index c4e3d804..00000000

Binary files a/docs/_static/css/fonts/lato-bold-italic.woff2 and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-bold.woff b/docs/_static/css/fonts/lato-bold.woff

deleted file mode 100644

index c6dff51f..00000000

Binary files a/docs/_static/css/fonts/lato-bold.woff and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-bold.woff2 b/docs/_static/css/fonts/lato-bold.woff2

deleted file mode 100644

index bb195043..00000000

Binary files a/docs/_static/css/fonts/lato-bold.woff2 and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-normal-italic.woff b/docs/_static/css/fonts/lato-normal-italic.woff

deleted file mode 100644

index 76114bc0..00000000

Binary files a/docs/_static/css/fonts/lato-normal-italic.woff and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-normal-italic.woff2 b/docs/_static/css/fonts/lato-normal-italic.woff2

deleted file mode 100644

index 3404f37e..00000000

Binary files a/docs/_static/css/fonts/lato-normal-italic.woff2 and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-normal.woff b/docs/_static/css/fonts/lato-normal.woff

deleted file mode 100644

index ae1307ff..00000000

Binary files a/docs/_static/css/fonts/lato-normal.woff and /dev/null differ

diff --git a/docs/_static/css/fonts/lato-normal.woff2 b/docs/_static/css/fonts/lato-normal.woff2

deleted file mode 100644

index 3bf98433..00000000

Binary files a/docs/_static/css/fonts/lato-normal.woff2 and /dev/null differ

diff --git a/docs/_static/css/theme.css b/docs/_static/css/theme.css

deleted file mode 100644

index 8cd4f101..00000000

--- a/docs/_static/css/theme.css

+++ /dev/null

@@ -1,4 +0,0 @@

-html{box-sizing:border-box}*,:after,:before{box-sizing:inherit}article,aside,details,figcaption,figure,footer,header,hgroup,nav,section{display:block}audio,canvas,video{display:inline-block;*display:inline;*zoom:1}[hidden],audio:not([controls]){display:none}*{-webkit-box-sizing:border-box;-moz-box-sizing:border-box;box-sizing:border-box}html{font-size:100%;-webkit-text-size-adjust:100%;-ms-text-size-adjust:100%}body{margin:0}a:active,a:hover{outline:0}abbr[title]{border-bottom:1px dotted}b,strong{font-weight:700}blockquote{margin:0}dfn{font-style:italic}ins{background:#ff9;text-decoration:none}ins,mark{color:#000}mark{background:#ff0;font-style:italic;font-weight:700}.rst-content code,.rst-content tt,code,kbd,pre,samp{font-family:monospace,serif;_font-family:courier new,monospace;font-size:1em}pre{white-space:pre}q{quotes:none}q:after,q:before{content:"";content:none}small{font-size:85%}sub,sup{font-size:75%;line-height:0;position:relative;vertical-align:baseline}sup{top:-.5em}sub{bottom:-.25em}dl,ol,ul{margin:0;padding:0;list-style:none;list-style-image:none}li{list-style:none}dd{margin:0}img{border:0;-ms-interpolation-mode:bicubic;vertical-align:middle;max-width:100%}svg:not(:root){overflow:hidden}figure,form{margin:0}label{cursor:pointer}button,input,select,textarea{font-size:100%;margin:0;vertical-align:baseline;*vertical-align:middle}button,input{line-height:normal}button,input[type=button],input[type=reset],input[type=submit]{cursor:pointer;-webkit-appearance:button;*overflow:visible}button[disabled],input[disabled]{cursor:default}input[type=search]{-webkit-appearance:textfield;-moz-box-sizing:content-box;-webkit-box-sizing:content-box;box-sizing:content-box}textarea{resize:vertical}table{border-collapse:collapse;border-spacing:0}td{vertical-align:top}.chromeframe{margin:.2em 0;background:#ccc;color:#000;padding:.2em 0}.ir{display:block;border:0;text-indent:-999em;overflow:hidden;background-color:transparent;background-repeat:no-repeat;text-align:left;direction:ltr;*line-height:0}.ir br{display:none}.hidden{display:none!important;visibility:hidden}.visuallyhidden{border:0;clip:rect(0 0 0 0);height:1px;margin:-1px;overflow:hidden;padding:0;position:absolute;width:1px}.visuallyhidden.focusable:active,.visuallyhidden.focusable:focus{clip:auto;height:auto;margin:0;overflow:visible;position:static;width:auto}.invisible{visibility:hidden}.relative{position:relative}big,small{font-size:100%}@media print{body,html,section{background:none!important}*{box-shadow:none!important;text-shadow:none!important;filter:none!important;-ms-filter:none!important}a,a:visited{text-decoration:underline}.ir a:after,a[href^="#"]:after,a[href^="javascript:"]:after{content:""}blockquote,pre{page-break-inside:avoid}thead{display:table-header-group}img,tr{page-break-inside:avoid}img{max-width:100%!important}@page{margin:.5cm}.rst-content .toctree-wrapper>p.caption,h2,h3,p{orphans:3;widows:3}.rst-content .toctree-wrapper>p.caption,h2,h3{page-break-after:avoid}}.btn,.fa:before,.icon:before,.rst-content .admonition,.rst-content .admonition-title:before,.rst-content .admonition-todo,.rst-content .attention,.rst-content .caution,.rst-content .code-block-caption .headerlink:before,.rst-content .danger,.rst-content .error,.rst-content .hint,.rst-content .important,.rst-content .note,.rst-content .seealso,.rst-content .tip,.rst-content .warning,.rst-content code.download span:first-child:before,.rst-content dl dt .headerlink:before,.rst-content h1 .headerlink:before,.rst-content h2 .headerlink:before,.rst-content h3 .headerlink:before,.rst-content h4 .headerlink:before,.rst-content h5 .headerlink:before,.rst-content h6 .headerlink:before,.rst-content p.caption .headerlink:before,.rst-content table>caption .headerlink:before,.rst-content tt.download span:first-child:before,.wy-alert,.wy-dropdown .caret:before,.wy-inline-validate.wy-inline-validate-danger .wy-input-context:before,.wy-inline-validate.wy-inline-validate-info .wy-input-context:before,.wy-inline-validate.wy-inline-validate-success .wy-input-context:before,.wy-inline-validate.wy-inline-validate-warning .wy-input-context:before,.wy-menu-vertical li.current>a,.wy-menu-vertical li.current>a span.toctree-expand:before,.wy-menu-vertical li.on a,.wy-menu-vertical li.on a span.toctree-expand:before,.wy-menu-vertical li span.toctree-expand:before,.wy-nav-top a,.wy-side-nav-search .wy-dropdown>a,.wy-side-nav-search>a,input[type=color],input[type=date],input[type=datetime-local],input[type=datetime],input[type=email],input[type=month],input[type=number],input[type=password],input[type=search],input[type=tel],input[type=text],input[type=time],input[type=url],input[type=week],select,textarea{-webkit-font-smoothing:antialiased}.clearfix{*zoom:1}.clearfix:after,.clearfix:before{display:table;content:""}.clearfix:after{clear:both}/*!

- * Font Awesome 4.7.0 by @davegandy - http://fontawesome.io - @fontawesome

- * License - http://fontawesome.io/license (Font: SIL OFL 1.1, CSS: MIT License)