Azure Cosmos DB is a globally distributed database system that allows you to read and write data from the local replicas of your database and it transparently replicates the data to all the regions associated with your Cosmos account.

Azure Cosmos DB is a fully managed NoSQL database designed to provide low latency, elastic scalability of throughput, well-defined semantics for data consistency, and high availability.

You can configure your databases to be globally distributed and available in any of the Azure regions. To lower the latency, place the data close to where your users are. Choosing the required regions depends on the global reach of your application and where your users are located.

With Azure Cosmos DB, you can add or remove the regions associated with your account at any time. Your application doesn't need to be paused or redeployed to add or remove a region.

With its novel multi-master replication protocol, every region supports both writes and reads. The multi-master capability also enables:

- Unlimited elastic write and read scalability.

- 99.999% read and write availability all around the world.

- Guaranteed reads and writes served in less than 10 milliseconds at the 99th percentile.

Your application can perform near real-time reads and writes against all the regions you chose for your database. Azure Cosmos DB internally handles the data replication between regions with consistency level guarantees of the level you selected.

Running a database in multiple regions worldwide increases the availability of a database. If one region is unavailable, other regions automatically handle application requests. Azure Cosmos DB offers 99.999% read and write availability for multi-region databases.

The Azure Cosmos DB account is the fundamental unit of global distribution and high availability. Your Azure Cosmos DB account contains a unique Domain Name System (DNS) name and you can manage an account by using the Azure portal or the Azure CLI, or by using different language-specific SDKs. For globally distributing your data and throughput across multiple Azure regions, you can add and remove Azure regions to your account at any time.

An Azure Cosmos DB container is the fundamental unit of scalability. You can virtually have an unlimited provisioned throughput (RU/s) and storage on a container. Azure Cosmos DB transparently partitions your container using the logical partition key that you specify in order to elastically scale your provisioned throughput and storage.

Currently, you can create a maximum of 50 Azure Cosmos DB accounts under an Azure subscription (can be increased via support request). After you create an account under your Azure subscription, you can manage the data in your account by creating databases, containers, and items.

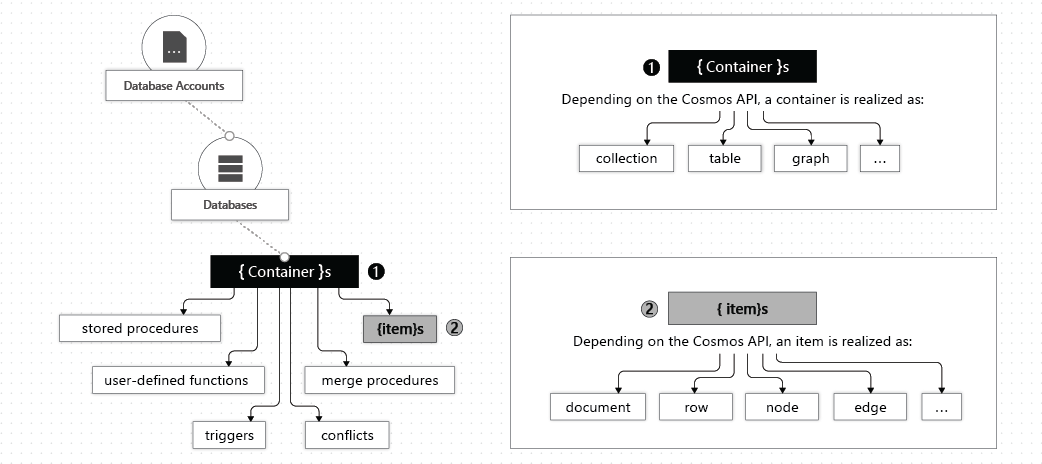

The following image shows the hierarchy of different entities in an Azure Cosmos DB account:

You can create one or multiple Azure Cosmos DB databases under your account. A database is analogous to a namespace. A database is the unit of management for a set of Azure Cosmos DB containers.

An Azure Cosmos DB container is where data is stored. Unlike most relational databases, which scale up with larger sizes of virtual machines, Azure Cosmos DB scales out.

Data is stored on one or more servers called partitions. To increase partitions, you increase throughput, or they grow automatically as storage increases. This relationship provides a virtually unlimited amount of throughput and storage for a container.

When you create a container, you need to supply a partition key. The partition key is a property that you select from your items to help Azure Cosmos DB distribute the data efficiently across partitions. Azure Cosmos DB uses the value of this property to route data to the appropriate partition to be written, updated, or deleted. You can also use the partition key in the WHERE clause in queries for efficient data retrieval.

The underlying storage mechanism for data in Azure Cosmos DB is called a physical partition. Physical partitions can have a throughput amount up to 10,000 Request Units per second, and they can store up to 50 GB of data. Azure Cosmos DB abstracts this partitioning concept with a logical partition, which can store up to 20 GB of data.

When you create a container, you configure throughput in one of the following modes:

- Dedicated throughput: The throughput on a container is exclusively reserved for that container. There are two types of dedicated throughput: standard and autoscale.

- Shared throughput: Throughput is specified at the database level and then shared with up to 25 containers within the database. Sharing of throughput excludes containers that are configured with their own dedicated throughput.

Depending on which API you use, individual data entities can be represented in various ways:

| Azure Cosmos DB entity | API for NoSQL | API for Cassandra | API for MongoDB | API for Gremlin | API for Table |

|---|---|---|---|---|---|

| Azure Cosmos DB item | Item | Row | Document | Node or edge | Item |

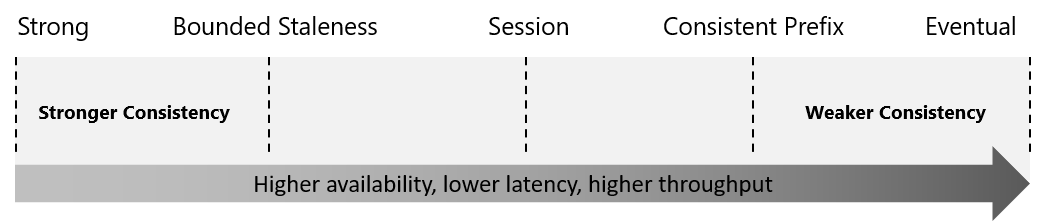

Azure Cosmos DB approaches data consistency as a spectrum of choices instead of two extremes. Strong consistency and eventual consistency are at the ends of the spectrum, but there are many consistency choices along the spectrum. Developers can use these options to make precise choices and granular tradeoffs with respect to high availability and performance.

Azure Cosmos DB offers five well-defined levels. From strongest to weakest, the levels are:

- Strong

- Bounded staleness

- Session

- Consistent prefix

- Eventual

Each level provides availability and performance tradeoffs. The following image shows the different consistency levels as a spectrum.

The consistency levels are region-agnostic and are guaranteed for all operations, regardless of:

- The region where the reads and writes are served

- The number of regions associated with your Azure Cosmos DB account

- Whether your account is configured with a single or multiple write regions.

Read consistency applies to a single read operation scoped within a partition-key range or a logical partition.

Each of the consistency models can be used for specific real-world scenarios. Each provides precise availability and performance tradeoffs backed by comprehensive SLAs. The following simple considerations help you make the right choice in many common scenarios.

You can configure the default consistency level on your Azure Cosmos DB account at any time. The default consistency level configured on your account applies to all Azure Cosmos DB databases and containers under that account. All reads and queries issued against a container or a database use the specified consistency level by default.

Read consistency applies to a single read operation scoped within a logical partition. The read operation can be issued by a remote client or a stored procedure.

Azure Cosmos DB guarantees that 100 percent of read requests meet the consistency guarantee for the consistency level chosen. The precise definitions of the five consistency levels in Azure Cosmos DB using the TLA+ specification language are provided in the azure-cosmos-tla GitHub repo.

Strong consistency offers a linearizability guarantee. Linearizability refers to serving requests concurrently. The reads are guaranteed to return the most recent committed version of an item. A client never sees an uncommitted or partial write. Users are always guaranteed to read the latest committed write.

In bounded staleness consistency, the lag of data between any two regions is always less than a specified amount. The amount can be "K" versions (that is, "updates") of an item or by "T" time intervals, whichever is reached first. In other words, when you choose bounded staleness, the maximum "staleness" of the data in any region can be configured in two ways:

- The number of versions (K) of the item

- The time interval (T) reads might lag behind the writes

Bounded Staleness is beneficial primarily to single-region write accounts with two or more regions. If the data lag in a region (determined per physical partition) exceeds the configured staleness value, writes for that partition are throttled until staleness is back within the configured upper bound.

For a single-region account, Bounded Staleness provides the same write consistency guarantees as Session and Eventual Consistency. With Bounded Staleness, data is replicated to a local majority (three replicas in a four replica set) in the single region.

In session consistency, within a single client session, reads are guaranteed to honor the read-your-writes, and write-follows-reads guarantees. This guarantee assumes a single “writer” session or sharing the session token for multiple writers.

Like all consistency levels weaker than Strong, writes are replicated to a minimum of three replicas (in a four replica set) in the local region, with asynchronous replication to all other regions.

In consistent prefix, updates made as single document writes see eventual consistency. Updates made as a batch within a transaction, are returned consistent to the transaction in which they were committed. Write operations within a transaction of multiple documents are always visible together.

Assume two write operations are performed on documents Doc1 and Doc2, within transactions T1 and T2. When client does a read in any replica, the user sees either “Doc1 v1 and Doc2 v1” or “ Doc1 v2 and Doc2 v2”, but never “Doc1 v1 and Doc2 v2” or “Doc1 v2 and Doc2 v1” for the same read or query operation.

In eventual consistency, there's no ordering guarantee for reads. In the absence of any further writes, the replicas eventually converge.

Eventual consistency is the weakest form of consistency because a client might read the values that are older than the ones it read before. Eventual consistency is ideal where the application doesn't require any ordering guarantees. Examples include count of Retweets, Likes, or nonthreaded comments.

Azure Cosmos DB offers multiple database APIs, which include NoSQL, MongoDB, PostgreSQL, Cassandra, Gremlin, and Table. By using these APIs, you can model real world data using documents, key-value, graph, and column-family data models. These APIs allow your applications to treat Azure Cosmos DB as if it were various other databases technologies, without the overhead of management, and scaling approaches. Azure Cosmos DB helps you to use the ecosystems, tools, and skills you already have for data modeling and querying with its various APIs.

All the APIs offer automatic scaling of storage and throughput, flexibility, and performance guarantees. There's no one best API, and you may choose any one of the APIs to build your application

API for NoSQL is native to Azure Cosmos DB.

API for MongoDB, PostgreSQL, Cassandra, Gremlin, and Table implement the wire protocol of open-source database engines. These APIs are best suited if the following conditions are true:

- If you have existing MongoDB, PostgreSQL Cassandra, or Gremlin applications

- If you don't want to rewrite your entire data access layer

- If you want to use the open-source developer ecosystem, client-drivers, expertise, and resources for your database

The Azure Cosmos DB API for NoSQL stores data in document format. It offers the best end-to-end experience as we have full control over the interface, service, and the SDK client libraries. Any new feature that is rolled out to Azure Cosmos DB is first available on API for NoSQL accounts. NoSQL accounts provide support for querying items using the Structured Query Language (SQL) syntax.

The Azure Cosmos DB API for MongoDB stores data in a document structure, via BSON format. It's compatible with MongoDB wire protocol; however, it doesn't use any native MongoDB related code. The API for MongoDB is a great choice if you want to use the broader MongoDB ecosystem and skills, without compromising on using Azure Cosmos DB features.

Azure Cosmos DB for PostgreSQL is a managed service for running PostgreSQL at any scale, with the Citus open source superpower of distributed tables. It stores data either on a single node, or distributed in a multi-node configuration.

The Azure Cosmos DB API for Cassandra stores data in column-oriented schema. Apache Cassandra offers a highly distributed, horizontally scaling approach to storing large volumes of data while offering a flexible approach to a column-oriented schema. API for Cassandra in Azure Cosmos DB aligns with this philosophy to approaching distributed NoSQL databases. This API for Cassandra is wire protocol compatible with native Apache Cassandra.

The Azure Cosmos DB API for Gremlin allows users to make graph queries and stores data as edges and vertices.

Use the API for Gremlin for scenarios:

- Involving dynamic data

- Involving data with complex relations

- Involving data that is too complex to be modeled with relational databases

- If you want to use the existing Gremlin ecosystem and skills

The Azure Cosmos DB API for Table stores data in key/value format. If you're currently using Azure Table storage, you might see some limitations in latency, scaling, throughput, global distribution, index management, low query performance. API for Table overcomes these limitations and the recommendation is to migrate your app if you want to use the benefits of Azure Cosmos DB. API for Table only supports OLTP scenarios.

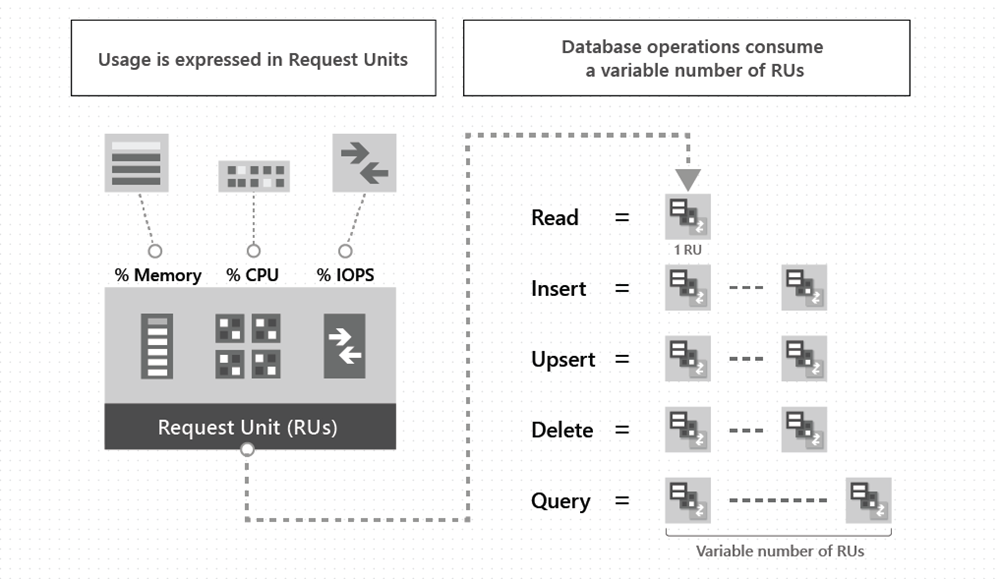

With Azure Cosmos DB, you pay for the throughput you provision and the storage you consume on an hourly basis. Throughput must be provisioned to ensure that sufficient system resources are available for your Azure Cosmos database always.

The cost of all database operations is normalized in Azure Cosmos DB and expressed by request units (or RUs, for short). A request unit represents the system resources such as CPU, IOPS, and memory that are required to perform the database operations supported by Azure Cosmos DB.

The cost to do a point read, which is fetching a single item by its ID and partition key value, for a 1-KB item is 1RU. All other database operations are similarly assigned a cost using RUs. No matter which API you use to interact with your Azure Cosmos container, costs are measured by RUs. Whether the database operation is a write, point read, or query, costs are measured in RUs.

The following image shows the high-level idea of RUs:

The type of Azure Cosmos DB account you're using determines the way consumed RUs get charged. There are three modes in which you can create an account:

- Provisioned throughput mode: In this mode, you provision the number of RUs for your application on a per-second basis in increments of 100 RUs per second. To scale the provisioned throughput for your application, you can increase or decrease the number of RUs at any time in increments or decrements of 100 RUs. You can make your changes either programmatically or by using the Azure portal. You can provision throughput at container and database granularity level.

- Serverless mode: In this mode, you don't have to provision any throughput when creating resources in your Azure Cosmos DB account. At the end of your billing period, you get billed for the number of request units that have been consumed by your database operations.

- Autoscale mode: In this mode, you can automatically and instantly scale the throughput (RU/s) of your database or container based on its usage. This scaling operation doesn't affect the availability, latency, throughput, or performance of the workload. This mode is well suited for mission-critical workloads that have variable or unpredictable traffic patterns, and require SLAs on high performance and scale.

This unit focuses on Azure Cosmos DB .NET SDK v3 for API for NoSQL. (Microsoft.Azure.Cosmos NuGet package.) If you're familiar with the previous version of the .NET SDK, you might be familiar with the terms collection and document.

The azure-cosmos-dotnet-v3 GitHub repository includes the latest .NET sample solutions. You use these solutions to perform CRUD (create, read, update, and delete) and other common operations on Azure Cosmos DB resources.

Because Azure Cosmos DB supports multiple API models, version 3 of the .NET SDK uses the generic terms container and item. A container can be a collection, graph, or table. An item can be a document, edge/vertex, or row, and is the content inside a container.

Following are examples showing some of the key operations you should be familiar with. For more examples, please visit the GitHub link shown earlier. The examples below all use the async version of the methods.

Creates a new CosmosClient with a connection string. CosmosClient is thread-safe. The recommendation is to maintain a single instance of CosmosClient per lifetime of the application that enables efficient connection management and performance.

CosmosClient client = new CosmosClient(endpoint, key);The CosmosClient.CreateDatabaseAsync method throws an exception if a database with the same name already exists.

// New instance of Database class referencing the server-side database

Database database1 = await client.CreateDatabaseAsync(

id: "adventureworks-1"

);The CosmosClient.CreateDatabaseIfNotExistsAsync checks if a database exists, and if it doesn't, creates it. Only the database id is used to verify if there's an existing database.

// New instance of Database class referencing the server-side database

Database database2 = await client.CreateDatabaseIfNotExistsAsync(

id: "adventureworks-2"

);Reads a database from the Azure Cosmos DB service as an asynchronous operation.

DatabaseResponse readResponse = await database.ReadAsync();Delete a Database as an asynchronous operation.

await database.DeleteAsync();The Database.CreateContainerIfNotExistsAsync method checks if a container exists, and if it doesn't, it creates it. Only the container id is used to verify if there's an existing container.

// Set throughput to the minimum value of 400 RU/s

ContainerResponse simpleContainer = await database.CreateContainerIfNotExistsAsync(

id: containerId,

partitionKeyPath: partitionKey,

throughput: 400);Container container = database.GetContainer(containerId);

ContainerProperties containerProperties = await container.ReadContainerAsync();Delete a Container as an asynchronous operation.

await database.GetContainer(containerId).DeleteContainerAsync();Use the Container.CreateItemAsync method to create an item. The method requires a JSON serializable object that must contain an id property, and a partitionKey.

ItemResponse<SalesOrder> response = await container.CreateItemAsync(salesOrder, new PartitionKey(salesOrder.AccountNumber));Use the Container.ReadItemAsync method to read an item. The method requires type to serialize the item to along with an id property, and a partitionKey.

string id = "[id]";

string accountNumber = "[partition-key]";

ItemResponse<SalesOrder> response = await container.ReadItemAsync(id, new PartitionKey(accountNumber));The Container.GetItemQueryIterator method creates a query for items under a container in an Azure Cosmos database using a SQL statement with parameterized values. It returns a FeedIterator.

QueryDefinition query = new QueryDefinition(

"select * from sales s where s.AccountNumber = @AccountInput ")

.WithParameter("@AccountInput", "Account1");

FeedIterator<SalesOrder> resultSet = container.GetItemQueryIterator<SalesOrder>(

query,

requestOptions: new QueryRequestOptions()

{

PartitionKey = new PartitionKey("Account1"),

MaxItemCount = 1

});- The azure-cosmos-dotnet-v3 GitHub repository includes the latest .NET sample solutions to perform CRUD and other common operations on Azure Cosmos DB resources.

- Visit this article Azure Cosmos DB.NET V3 SDK (Microsoft.Azure.Cosmos) examples for the SQL API for direct links to specific examples in the GitHub repository.

Azure Cosmos DB provides language-integrated, transactional execution of JavaScript that lets you write stored procedures, triggers, and user-defined functions (UDFs). To call a stored procedure, trigger, or user-defined function, you need to register it. For more information, see How to work with stored procedures, triggers, user-defined functions in Azure Cosmos DB.

ℹ️ This unit focuses on stored procedures, the following unit covers triggers and user-defined functions.

Stored procedures can create, update, read, query, and delete items inside an Azure Cosmos container. Stored procedures are registered per collection, and can operate on any document or an attachment present in that collection.

Here's a simple stored procedure that returns a "Hello World" response.

var helloWorldStoredProc = {

id: "helloWorld",

serverScript: function () {

var context = getContext();

var response = context.getResponse();

response.setBody("Hello, World");

},

};The context object provides access to all operations that can be performed in Azure Cosmos DB, and access to the request and response objects. In this case, you use the response object to set the body of the response to be sent back to the client.

When you create an item by using a stored procedure, the item is inserted into the Azure Cosmos DB container and an ID for the newly created item is returned. Creating an item is an asynchronous operation and depends on the JavaScript callback functions. The callback function has two parameters: one for the error object in case the operation fails, and another for a return value, in this case, the created object. Inside the callback, you can either handle the exception or throw an error. If a callback isn't provided and there's an error, the Azure Cosmos DB runtime throws an error.

The stored procedure also includes a parameter to set the description as a boolean value. When the parameter is set to true and the description is missing, the stored procedure throws an exception. Otherwise, the rest of the stored procedure continues to run.

This stored procedure takes as input documentToCreate, the body of a document to be created in the current collection. All such operations are asynchronous and depend on JavaScript function callbacks.

var createDocumentStoredProc = {

id: "createMyDocument",

body: function createMyDocument(documentToCreate) {

var context = getContext();

var collection = context.getCollection();

var accepted = collection.createDocument(

collection.getSelfLink(),

documentToCreate,

function (err, documentCreated) {

if (err) throw new Error("Error" + err.message);

context.getResponse().setBody(documentCreated.id);

}

);

if (!accepted) return;

},

};When defining a stored procedure in the Azure portal, input parameters are always sent as a string to the stored procedure. Even if you pass an array of strings as an input, the array is converted to string and sent to the stored procedure. To work around this, you can define a function within your stored procedure to parse the string as an array. The following code shows how to parse a string input parameter as an array:

function sample(arr) {

if (typeof arr === "string") arr = JSON.parse(arr);

arr.forEach(function (a) {

// do something here

console.log(a);

});

}All Azure Cosmos DB operations must complete within a limited amount of time. Stored procedures have a limited amount of time to run on the server. All collection functions return a Boolean value that represents whether that operation completes or not.

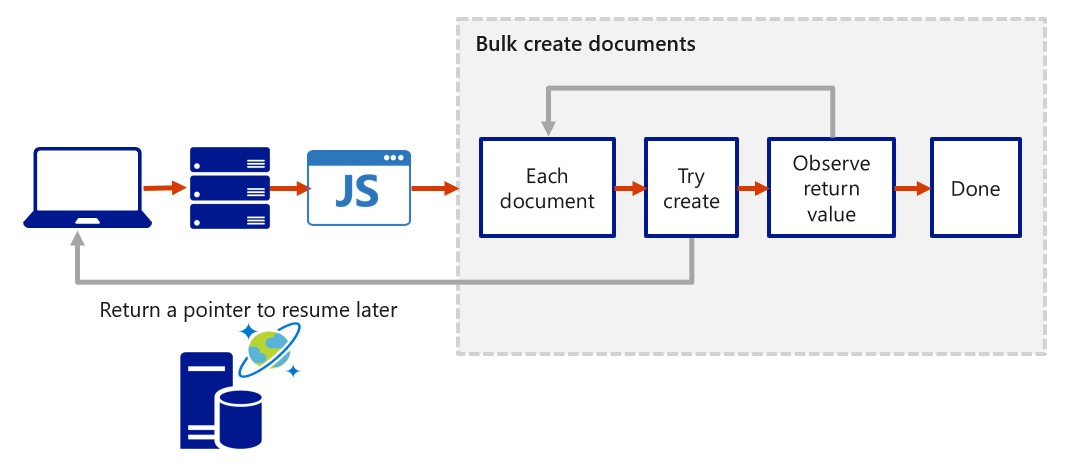

You can implement transactions on items within a container by using a stored procedure. JavaScript functions can implement a continuation-based model to batch or resume execution. The continuation value can be any value of your choice and your applications can then use this value to resume a transaction from a new starting point. The diagram below depicts how the transaction continuation model can be used to repeat a server-side function until the function finishes its entire processing workload.

Azure Cosmos DB supports pretriggers and post-triggers. Pretriggers are executed before modifying a database item and post-triggers are executed after modifying a database item. Triggers aren't automatically executed. They must be specified for each database operation where you want them to execute. After you define a trigger, you should register it by using the Azure Cosmos DB SDKs.

For examples of how to register and call a trigger, see pretriggers and post-triggers.

The following example shows how a pretrigger is used to validate the properties of an Azure Cosmos item that is being created. It adds a timestamp property to a newly added item if it doesn't contain one.

function validateToDoItemTimestamp() {

var context = getContext();

var request = context.getRequest();

// item to be created in the current operation

var itemToCreate = request.getBody();

// validate properties

if (!("timestamp" in itemToCreate)) {

var ts = new Date();

itemToCreate["timestamp"] = ts.getTime();

}

// update the item that will be created

request.setBody(itemToCreate);

}Pretriggers can't have any input parameters. The request object in the trigger is used to manipulate the request message associated with the operation. In the previous example, the pretrigger is run when creating an Azure Cosmos item and the request message body contains the item to be created in JSON format.

When triggers are registered, you can specify the operations that it can run with. This trigger should be created with a TriggerOperation value of TriggerOperation.Create, using the trigger in a replace operation isn't permitted.

For examples of how to register and call a pretrigger, visit the pretriggers article.

The following example shows a post-trigger. This trigger queries for the metadata item and updates it with details about the newly created item.

function updateMetadata() {

var context = getContext();

var container = context.getCollection();

var response = context.getResponse();

// item that was created

var createdItem = response.getBody();

// query for metadata document

var filterQuery = 'SELECT * FROM root r WHERE r.id = "_metadata"';

var accept = container.queryDocuments(

container.getSelfLink(),

filterQuery,

updateMetadataCallback

);

if (!accept) throw "Unable to update metadata, abort";

function updateMetadataCallback(err, items, responseOptions) {

if (err) throw new Error("Error" + err.message);

if (items.length != 1) throw "Unable to find metadata document";

var metadataItem = items[0];

// update metadata

metadataItem.createdItems += 1;

metadataItem.createdNames += " " + createdItem.id;

var accept = container.replaceDocument(

metadataItem._self,

metadataItem,

function (err, itemReplaced) {

if (err) throw "Unable to update metadata, abort";

}

);

if (!accept) throw "Unable to update metadata, abort";

return;

}

}One thing that is important to note is the transactional execution of triggers in Azure Cosmos DB. The post-trigger runs as part of the same transaction for the underlying item itself. An exception during the post-trigger execution fails the whole transaction. Anything committed is rolled back and an exception returned.

The following sample creates a UDF to calculate income tax for various income brackets. This user-defined function would then be used inside a query. For the purposes of this example assume there's a container called "Incomes" with properties as follows:

{

"name": "User One",

"country": "USA",

"income": 70000

}The following code sample is a function definition to calculate income tax for various income brackets:

function tax(income) {

if (income == undefined) throw "no input";

if (income < 1000) return income * 0.1;

else if (income < 10000) return income * 0.2;

else return income * 0.4;

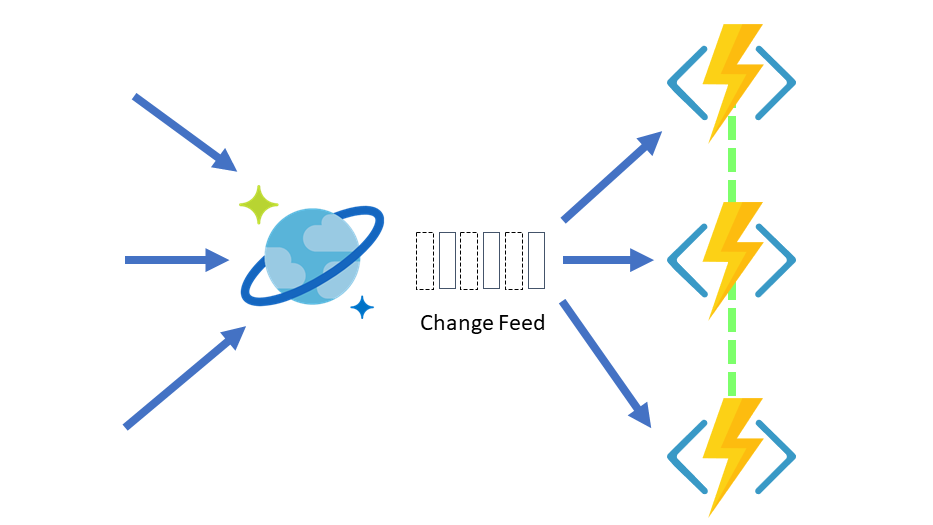

}Change feed in Azure Cosmos DB is a persistent record of changes to a container in the order they occur. Change feed support in Azure Cosmos DB works by listening to an Azure Cosmos DB container for any changes. It then outputs the sorted list of documents that were changed in the order in which they were modified. The persisted changes can be processed asynchronously and incrementally, and the output can be distributed across one or more consumers for parallel processing.

Today, you see all inserts and updates in the change feed. You can't filter the change feed for a specific type of operation. Currently change feed doesn't log delete operations. As a workaround, you can add a soft marker on the items that are being deleted. For example, you can add an attribute in the item called "deleted," set its value to "true," and then set a time-to-live (TTL) value on the item. Setting the TTL ensures that the item is automatically deleted.

You can work with the Azure Cosmos DB change feed using either a push model or a pull model. With a push model, the change feed processor pushes work to a client that has business logic for processing this work. However, the complexity in checking for work and storing state for the last processed work is handled within the change feed processor.

With a pull model, the client has to pull the work from the server. In this case, the client has business logic for processing work and also stores state for the last processed work. The client handles load balancing across multiple clients processing work in parallel, and handling errors.

ℹ️ It is recommended to use the push model because you won't need to worry about polling the change feed for future changes, storing state for the last processed change, and other benefits.

Most scenarios that use the Azure Cosmos DB change feed use one of the push model options. However, there are some scenarios where you might want the extra low level control of the pull model. The extra low-level control includes:

- Reading changes from a particular partition key

- Controlling the pace at which your client receives changes for processing

- Doing a one-time read of the existing data in the change feed (for example, to do a data migration)

There are two ways you can read from the change feed with a push model: Azure Functions Azure Cosmos DB triggers, and the change feed processor library. Azure Functions uses the change feed processor behind the scenes, so these are both similar ways to read the change feed. Think of Azure Functions as simply a hosting platform for the change feed processor, not an entirely different way of reading the change feed. Azure Functions uses the change feed processor behind the scenes. It automatically parallelizes change processing across your container's partitions.

You can create small reactive Azure Functions that are automatically triggered on each new event in your Azure Cosmos DB container's change feed. With the Azure Functions trigger for Azure Cosmos DB, you can use the Change Feed Processor's scaling and reliable event detection functionality without the need to maintain any worker infrastructure.

The change feed processor is part of the Azure Cosmos DB .NET V3 and Java V4 SDKs. It simplifies the process of reading the change feed and distributes the event processing across multiple consumers effectively.

There are four main components of implementing the change feed processor:

-

The monitored container: The monitored container has the data from which the change feed is generated. Any inserts and updates to the monitored container are reflected in the change feed of the container.

-

The lease container: The lease container acts as a state storage and coordinates processing the change feed across multiple workers. The lease container can be stored in the same account as the monitored container or in a separate account.

-

The compute instance: A compute instance hosts the change feed processor to listen for changes. Depending on the platform, it might represented by a VM, a kubernetes pod, an Azure App Service instance, an actual physical machine. It has a unique identifier referenced as the instance name throughout this article.

-

The delegate: The delegate is the code that defines what you, the developer, want to do with each batch of changes that the change feed processor reads.

When implementing the change feed processor the point of entry is always the monitored container, from a Container instance you call GetChangeFeedProcessorBuilder:

/// <summary>

/// Start the Change Feed Processor to listen for changes and process them with the HandleChangesAsync implementation.

/// </summary>

private static async Task<ChangeFeedProcessor> StartChangeFeedProcessorAsync(

CosmosClient cosmosClient,

IConfiguration configuration)

{

string databaseName = configuration["SourceDatabaseName"];

string sourceContainerName = configuration["SourceContainerName"];

string leaseContainerName = configuration["LeasesContainerName"];

Container leaseContainer = cosmosClient.GetContainer(databaseName, leaseContainerName);

ChangeFeedProcessor changeFeedProcessor = cosmosClient.GetContainer(databaseName, sourceContainerName)

.GetChangeFeedProcessorBuilder<ToDoItem>(processorName: "changeFeedSample", onChangesDelegate: HandleChangesAsync)

.WithInstanceName("consoleHost")

.WithLeaseContainer(leaseContainer)

.Build();

Console.WriteLine("Starting Change Feed Processor...");

await changeFeedProcessor.StartAsync();

Console.WriteLine("Change Feed Processor started.");

return changeFeedProcessor;

}Where the first parameter is a distinct name that describes the goal of this processor and the second name is the delegate implementation that handles changes. Following is an example of a delegate:

/// <summary>

/// The delegate receives batches of changes as they are generated in the change feed and can process them.

/// </summary>

static async Task HandleChangesAsync(

ChangeFeedProcessorContext context,

IReadOnlyCollection<ToDoItem> changes,

CancellationToken cancellationToken)

{

Console.WriteLine($"Started handling changes for lease {context.LeaseToken}...");

Console.WriteLine($"Change Feed request consumed {context.Headers.RequestCharge} RU.");

// SessionToken if needed to enforce Session consistency on another client instance

Console.WriteLine($"SessionToken ${context.Headers.Session}");

// We may want to track any operation's Diagnostics that took longer than some threshold

if (context.Diagnostics.GetClientElapsedTime() > TimeSpan.FromSeconds(1))

{

Console.WriteLine($"Change Feed request took longer than expected. Diagnostics:" + context.Diagnostics.ToString());

}

foreach (ToDoItem item in changes)

{

Console.WriteLine($"Detected operation for item with id {item.id}, created at {item.creationTime}.");

// Simulate some asynchronous operation

await Task.Delay(10);

}

Console.WriteLine("Finished handling changes.");

}Afterwards, you define the compute instance name or unique identifier with WithInstanceName, this should be unique and different in each compute instance you're deploying, and finally, which is the container to maintain the lease state with WithLeaseContainer.

Calling Build gives you the processor instance that you can start by calling StartAsync.

The normal life cycle of a host instance is:

- Read the change feed.

- If there are no changes, sleep for a predefined amount of time (customizable with

WithPollIntervalin theBuilder) and go to #1. - If there are changes, send them to the delegate.

- When the delegate finishes processing the changes successfully, update the lease store with the latest processed point in time and go to #1.