|

| 1 | +{ |

| 2 | + "cells": [ |

| 3 | + { |

| 4 | + "cell_type": "markdown", |

| 5 | + "metadata": {}, |

| 6 | + "source": [ |

| 7 | + "# Using Opik with AWS Bedrock\n", |

| 8 | + "\n", |

| 9 | + "Opik integrates with AWS Bedrock to provide a simple way to log traces for all Bedrock LLM calls. This works for all supported models, including when you use the streaming API.\n" |

| 10 | + ] |

| 11 | + }, |

| 12 | + { |

| 13 | + "cell_type": "markdown", |

| 14 | + "metadata": {}, |

| 15 | + "source": [ |

| 16 | + "## Creating an account on Comet.com\n", |

| 17 | + "\n", |

| 18 | + "[Comet](https://www.comet.com/site?from=llm&utm_source=opik&utm_medium=colab&utm_content=bedrock&utm_campaign=opik) provides a hosted version of the Opik platform. [Simply create an account](https://www.comet.com/signup?from=llm&utm_source=opik&utm_medium=colab&utm_content=bedrock&utm_campaign=opik) and grab your API key.\n", |

| 19 | + "\n", |

| 20 | + "> You can also run the Opik platform locally, see the [installation guide](https://www.comet.com/docs/opik/self-host/overview/?from=llm&utm_source=opik&utm_medium=colab&utm_content=bedrock&utm_campaign=opik) for more information." |

| 21 | + ] |

| 22 | + }, |

| 23 | + { |

| 24 | + "cell_type": "code", |

| 25 | + "execution_count": null, |

| 26 | + "metadata": {}, |

| 27 | + "outputs": [], |

| 28 | + "source": [ |

| 29 | + "%pip install --upgrade opik boto3" |

| 30 | + ] |

| 31 | + }, |

| 32 | + { |

| 33 | + "cell_type": "code", |

| 34 | + "execution_count": null, |

| 35 | + "metadata": {}, |

| 36 | + "outputs": [], |

| 37 | + "source": [ |

| 38 | + "import opik\n", |

| 39 | + "\n", |

| 40 | + "opik.configure(use_local=False)" |

| 41 | + ] |

| 42 | + }, |

| 43 | + { |

| 44 | + "cell_type": "markdown", |

| 45 | + "metadata": {}, |

| 46 | + "source": [ |

| 47 | + "## Preparing our environment\n", |

| 48 | + "\n", |

| 49 | + "First, we will set up our Bedrock client. Uncomment the following lines to pass AWS credentials manually, or [check out other ways of passing credentials to Boto3](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html). You will also need to request access to the model in the AWS console before you can generate text. Here we use the Llama 3.2 model; you can request access to it on [this page for the us-east-1](https://us-east-1.console.aws.amazon.com/bedrock/home?region=us-east-1#/providers?model=meta.llama3-2-3b-instruct-v1:0) region." |

| 50 | + ] |

| 51 | + }, |

| 52 | + { |

| 53 | + "cell_type": "code", |

| 54 | + "execution_count": null, |

| 55 | + "metadata": {}, |

| 56 | + "outputs": [], |

| 57 | + "source": [ |

| 58 | + "import boto3\n", |

| 59 | + "\n", |

| 60 | + "REGION = \"us-east-1\"\n", |

| 61 | + "\n", |

| 62 | + "MODEL_ID = \"us.meta.llama3-2-3b-instruct-v1:0\"\n", |

| 63 | + "\n", |

| 64 | + "bedrock = boto3.client(\n", |

| 65 | + "    service_name=\"bedrock-runtime\",\n", |

| 66 | + "    region_name=REGION,\n", |

| 67 | + "    # aws_access_key_id=ACCESS_KEY,\n", |

| 68 | + "    # aws_secret_access_key=SECRET_KEY,\n", |

| 69 | + "    # aws_session_token=SESSION_TOKEN,\n", |

| 70 | + ")" |

| 71 | + ] |

| 72 | + }, |

| 73 | + { |

| 74 | + "cell_type": "markdown", |

| 75 | + "metadata": {}, |

| 76 | + "source": [ |

| 77 | + "## Logging traces\n", |

| 78 | + "\n", |

| 79 | + "In order to log traces to Opik, we need to wrap our Bedrock calls with the `track_bedrock` function:" |

| 80 | + ] |

| 81 | + }, |

| 82 | + { |

| 83 | + "cell_type": "code", |

| 84 | + "execution_count": null, |

| 85 | + "metadata": {}, |

| 86 | + "outputs": [], |

| 87 | + "source": [ |

| 88 | + "import os\n", |

| 89 | + "\n", |

| 90 | + "from opik.integrations.bedrock import track_bedrock\n", |

| 91 | + "\n", |

| 92 | + "bedrock_client = track_bedrock(bedrock, project_name=\"bedrock-integration-demo\")" |

| 93 | + ] |

| 94 | + }, |

| 95 | + { |

| 96 | + "cell_type": "code", |

| 97 | + "execution_count": null, |

| 98 | + "metadata": {}, |

| 99 | + "outputs": [], |

| 100 | + "source": [ |

| 101 | + "PROMPT = \"Why is it important to use an LLM monitoring tool like Comet's Opik, which lets you log traces and spans, when working with LLMs hosted on AWS Bedrock?\"\n", |

| 102 | + "\n", |

| 103 | + "response = bedrock_client.converse(\n", |

| 104 | + "    modelId=MODEL_ID,\n", |

| 105 | + "    messages=[{\"role\": \"user\", \"content\": [{\"text\": PROMPT}]}],\n", |

| 106 | + "    inferenceConfig={\"temperature\": 0.5, \"maxTokens\": 512, \"topP\": 0.9},\n", |

| 107 | + ")\n", |

| 108 | + "print(\"Response\", response[\"output\"][\"message\"][\"content\"][0][\"text\"])" |

| 109 | + ] |

| 110 | + }, |

| 111 | + { |

| 112 | + "cell_type": "markdown", |

| 113 | + "metadata": {}, |

| 114 | + "source": [ |

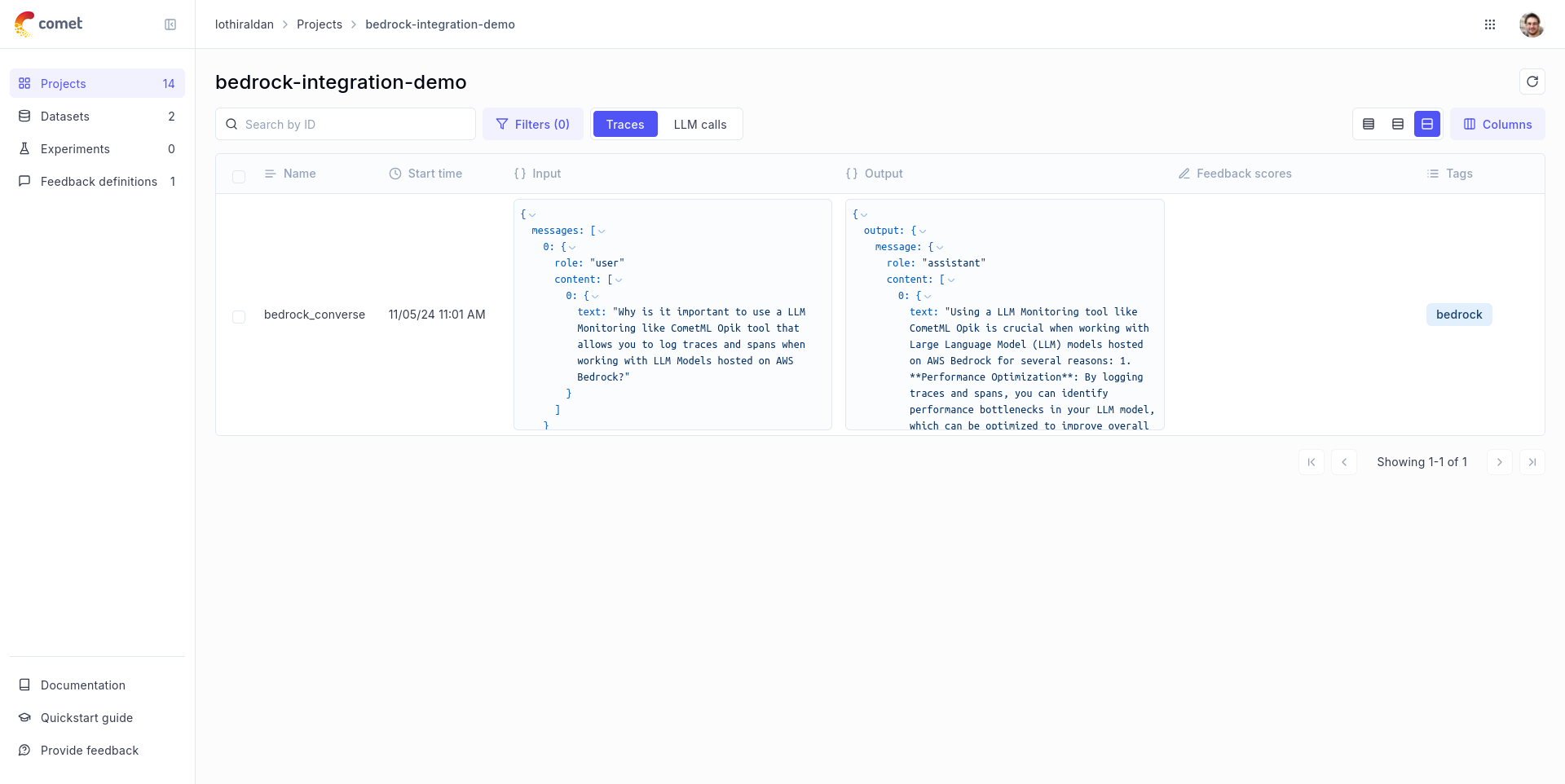

| 115 | + "The prompt and response messages are automatically logged to Opik and can be viewed in the UI.\n", |

| 116 | + "\n", |

| 117 | + "" |

| 118 | + ] |

| 119 | + }, |

| 120 | + { |

| 121 | + "cell_type": "markdown", |

| 122 | + "metadata": {}, |

| 123 | + "source": [ |

| 124 | + "## Logging traces with streaming" |

| 125 | + ] |

| 126 | + }, |

| 127 | + { |

| 128 | + "cell_type": "code", |

| 129 | + "execution_count": null, |

| 130 | + "metadata": {}, |

| 131 | + "outputs": [], |

| 132 | + "source": [ |

| 133 | + "def stream_conversation(\n", |

| 134 | + "    bedrock_client,\n", |

| 135 | + "    model_id,\n", |

| 136 | + "    messages,\n", |

| 137 | + "    system_prompts,\n", |

| 138 | + "    inference_config,\n", |

| 139 | + "):\n", |

| 140 | + "    \"\"\"\n", |

| 141 | + "    Sends messages to a model and streams the response.\n", |

| 142 | + "    Args:\n", |

| 143 | + "        bedrock_client: The Boto3 Bedrock runtime client.\n", |

| 144 | + "        model_id (str): The model ID to use.\n", |

| 145 | + "        messages (JSON) : The messages to send.\n", |

| 146 | + "        system_prompts (JSON) : The system prompts to send.\n", |

| 147 | + "        inference_config (JSON) : The inference configuration to use.\n", |

| 149 | + "\n", |

| 150 | + "    Returns:\n", |

| 151 | + "        Nothing.\n", |

| 152 | + "\n", |

| 153 | + "    \"\"\"\n", |

| 154 | + "\n", |

| 155 | + "    response = bedrock_client.converse_stream(\n", |

| 156 | + "        modelId=model_id,\n", |

| 157 | + "        messages=messages,\n", |

| 158 | + "        system=system_prompts,\n", |

| 159 | + "        inferenceConfig=inference_config,\n", |

| 160 | + "    )\n", |

| 161 | + "\n", |

| 162 | + "    stream = response.get(\"stream\")\n", |

| 163 | + "    if stream:\n", |

| 164 | + "        for event in stream:\n", |

| 165 | + "            if \"messageStart\" in event:\n", |

| 166 | + "                print(f\"\\nRole: {event['messageStart']['role']}\")\n", |

| 167 | + "\n", |

| 168 | + "            if \"contentBlockDelta\" in event:\n", |

| 169 | + "                print(event[\"contentBlockDelta\"][\"delta\"][\"text\"], end=\"\")\n", |

| 170 | + "\n", |

| 171 | + "            if \"messageStop\" in event:\n", |

| 172 | + "                print(f\"\\nStop reason: {event['messageStop']['stopReason']}\")\n", |

| 173 | + "\n", |

| 174 | + "            if \"metadata\" in event:\n", |

| 175 | + "                metadata = event[\"metadata\"]\n", |

| 176 | + "                if \"usage\" in metadata:\n", |

| 177 | + "                    print(\"\\nToken usage\")\n", |

| 178 | + "                    print(f\"Input tokens: {metadata['usage']['inputTokens']}\")\n", |

| 179 | + "                    print(f\"Output tokens: {metadata['usage']['outputTokens']}\")\n", |

| 180 | + "                    print(f\"Total tokens: {metadata['usage']['totalTokens']}\")\n", |

| 181 | + "                if \"metrics\" in event[\"metadata\"]:\n", |

| 182 | + "                    print(f\"Latency: {metadata['metrics']['latencyMs']} milliseconds\")\n", |

| 183 | + "\n", |

| 184 | + "\n", |

| 185 | + "system_prompt = \"\"\"You are an app that creates playlists for a radio station\n", |

| 186 | + " that plays rock and pop music. Only return song names and the artist.\"\"\"\n", |

| 187 | + "\n", |

| 188 | + "# Message to send to the model.\n", |

| 189 | + "input_text = \"Create a list of 3 pop songs.\"\n", |

| 190 | + "\n", |

| 191 | + "\n", |

| 192 | + "message = {\"role\": \"user\", \"content\": [{\"text\": input_text}]}\n", |

| 193 | + "messages = [message]\n", |

| 194 | + "\n", |

| 195 | + "# System prompts.\n", |

| 196 | + "system_prompts = [{\"text\": system_prompt}]\n", |

| 197 | + "\n", |

| 198 | + "# inference parameters to use.\n", |

| 199 | + "temperature = 0.5\n", |

| 200 | + "top_p = 0.9\n", |

| 201 | + "# Base inference parameters.\n", |

| 202 | + "inference_config = {\"temperature\": temperature, \"topP\": top_p}\n", |

| 203 | + "\n", |

| 204 | + "\n", |

| 205 | + "stream_conversation(\n", |

| 206 | + " bedrock_client,\n", |

| 207 | + " MODEL_ID,\n", |

| 208 | + " messages,\n", |

| 209 | + " system_prompts,\n", |

| 210 | + " inference_config,\n", |

| 211 | + ")" |

| 212 | + ] |

| 213 | + }, |

| 214 | + { |

| 215 | + "cell_type": "markdown", |

| 216 | + "metadata": {}, |

| 217 | + "source": [ |

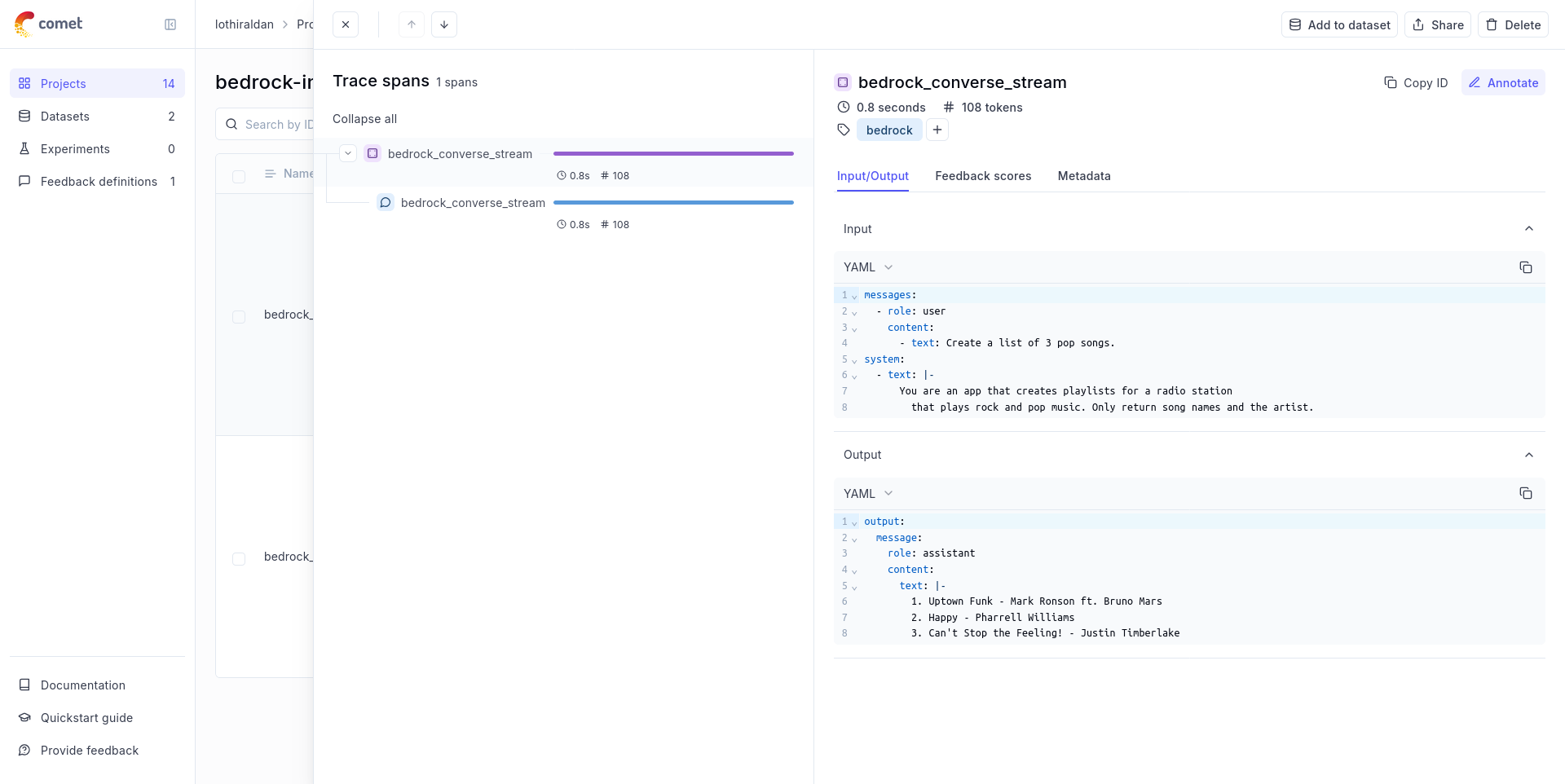

| 218 | + "" |

| 219 | + ] |

| 220 | + }, |

| 221 | + { |

| 222 | + "cell_type": "markdown", |

| 223 | + "metadata": {}, |

| 224 | + "source": [ |

| 225 | + "## Using it with the `track` decorator\n", |

| 226 | + "\n", |

| 227 | + "If you have multiple steps in your LLM pipeline, you can use the `track` decorator to log the traces for each step. If Bedrock is called within one of these steps, the LLM call will be associated with that corresponding step:" |

| 228 | + ] |

| 229 | + }, |

| 230 | + { |

| 231 | + "cell_type": "code", |

| 232 | + "execution_count": null, |

| 233 | + "metadata": {}, |

| 234 | + "outputs": [], |

| 235 | + "source": [ |

| 236 | + "from opik import track\n", |

| 237 | + "from opik.integrations.bedrock import track_bedrock\n", |

| 238 | + "\n", |

| 239 | + "bedrock = boto3.client(\n", |

| 240 | + "    service_name=\"bedrock-runtime\",\n", |

| 241 | + "    region_name=REGION,\n", |

| 242 | + "    # aws_access_key_id=ACCESS_KEY,\n", |

| 243 | + "    # aws_secret_access_key=SECRET_KEY,\n", |

| 244 | + "    # aws_session_token=SESSION_TOKEN,\n", |

| 245 | + ")\n", |

| 246 | + "\n", |

| 247 | + "os.environ[\"OPIK_PROJECT_NAME\"] = \"bedrock-integration-demo\"\n", |

| 248 | + "bedrock_client = track_bedrock(bedrock)\n", |

| 249 | + "\n", |

| 250 | + "\n", |

| 251 | + "@track\n", |

| 252 | + "def generate_story(prompt):\n", |

| 253 | + "    res = bedrock_client.converse(\n", |

| 254 | + "        modelId=MODEL_ID, messages=[{\"role\": \"user\", \"content\": [{\"text\": prompt}]}]\n", |

| 255 | + "    )\n", |

| 256 | + "    return res[\"output\"][\"message\"][\"content\"][0][\"text\"]\n", |

| 257 | + "\n", |

| 258 | + "\n", |

| 259 | + "@track\n", |

| 260 | + "def generate_topic():\n", |

| 261 | + "    prompt = \"Generate a topic for a story about Opik.\"\n", |

| 262 | + "    res = bedrock_client.converse(\n", |

| 263 | + "        modelId=MODEL_ID, messages=[{\"role\": \"user\", \"content\": [{\"text\": prompt}]}]\n", |

| 264 | + "    )\n", |

| 265 | + "    return res[\"output\"][\"message\"][\"content\"][0][\"text\"]\n", |

| 266 | + "\n", |

| 267 | + "\n", |

| 268 | + "@track\n", |

| 269 | + "def generate_opik_story():\n", |

| 270 | + "    topic = generate_topic()\n", |

| 271 | + "    story = generate_story(topic)\n", |

| 272 | + "    return story\n", |

| 273 | + "\n", |

| 274 | + "\n", |

| 275 | + "generate_opik_story()" |

| 276 | + ] |

| 277 | + }, |

| 278 | + { |

| 279 | + "cell_type": "markdown", |

| 280 | + "metadata": {}, |

| 281 | + "source": [ |

| 282 | + "The trace can now be viewed in the UI:\n", |

| 283 | + "\n", |

| 284 | + "" |

| 285 | + ] |

| 286 | + } |

| 287 | + ], |

| 288 | + "metadata": { |

| 289 | + "kernelspec": { |

| 290 | + "display_name": "Python 3 (ipykernel)", |

| 291 | + "language": "python", |

| 292 | + "name": "python3" |

| 293 | + }, |

| 294 | + "language_info": { |

| 295 | + "codemirror_mode": { |

| 296 | + "name": "ipython", |

| 297 | + "version": 3 |

| 298 | + }, |

| 299 | + "file_extension": ".py", |

| 300 | + "mimetype": "text/x-python", |

| 301 | + "name": "python", |

| 302 | + "nbconvert_exporter": "python", |

| 303 | + "pygments_lexer": "ipython3", |

| 304 | + "version": "3.10.12" |

| 305 | + } |

| 306 | + }, |

| 307 | + "nbformat": 4, |

| 308 | + "nbformat_minor": 4 |

| 309 | +} |
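
The streaming cell in this notebook prints each text delta as it arrives. As a side note, here is a minimal sketch of the same event handling that collects the text into one string instead of printing it. `collect_stream_text` and `fake_events` are hypothetical names introduced for illustration, and the events are hand-written stand-ins shaped like the ones the notebook reads, not real Bedrock output, so the snippet runs without AWS credentials.

```python
from typing import Any, Dict, Iterable, Tuple


def collect_stream_text(
    events: Iterable[Dict[str, Any]],
) -> Tuple[str, Dict[str, int]]:
    """Join text deltas from Converse-stream-style events.

    Returns the concatenated text and the last usage dict seen
    (empty if no metadata event carried usage information).
    """
    chunks = []
    usage: Dict[str, int] = {}
    for event in events:
        # Skip deltas without text instead of raising KeyError.
        delta = event.get("contentBlockDelta", {}).get("delta", {})
        if "text" in delta:
            chunks.append(delta["text"])
        if "metadata" in event:
            usage = event["metadata"].get("usage", {})
    return "".join(chunks), usage


# Hand-written stand-in events (assumption: same keys the notebook reads).
fake_events = [
    {"messageStart": {"role": "assistant"}},
    {"contentBlockDelta": {"delta": {"text": "Hello, "}}},
    {"contentBlockDelta": {"delta": {"text": "world"}}},
    {"messageStop": {"stopReason": "end_turn"}},
    {"metadata": {"usage": {"inputTokens": 5, "outputTokens": 2, "totalTokens": 7}}},
]

text, usage = collect_stream_text(fake_events)
print(text)
print(usage["totalTokens"])
```

With a real client, the iterable would presumably be `response.get("stream")` from `converse_stream`, as in the notebook's `stream_conversation` function.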
