In Node.js, binary data is handled with the `Buffer` class. `Buffer` is a global, so there's no need to `require` any core module to use the Node core Buffer API.

```javascript
const buffer = Buffer.alloc(10)
```

Signature: `Buffer.alloc(size[, fill[, encoding]])`
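Here is a quick sketch of the optional `fill` and `encoding` parameters. The sizes and fill values are arbitrary examples. A string `fill` is decoded with `encoding`, and the resulting bytes repeat until the buffer is full.

```javascript
// Zero-filled (the default)
const zeros = Buffer.alloc(4);
// Every byte set to 0xff
const ones = Buffer.alloc(4, 0xff);
// 'aGk=' is base64 for 'hi'; the decoded bytes repeat to fill all 6 bytes
const pattern = Buffer.alloc(6, 'aGk=', 'base64');

console.log(zeros);              // <Buffer 00 00 00 00>
console.log(ones);               // <Buffer ff ff ff ff>
console.log(pattern.toString()); // hihihi
```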

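By default, `Buffer.alloc` produces a zero-filled buffer. There is also an unsafe but faster way, `Buffer.allocUnsafe`. It skips the zero-filling, so the new buffer may contain old data from memory and must be overwritten before it is read: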

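```javascript
const buffer = Buffer.allocUnsafe(10)
```

`Buffer.from(x)` creates a buffer from existing data. `x` can be a string (with an optional encoding), an array of bytes, an `ArrayBuffer`, another `Buffer`, or an object that converts to one of these through `valueOf()` or `Symbol.toPrimitive`.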
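The following sketch shows the common `Buffer.from` inputs side by side, plus the usual way to make an `allocUnsafe` buffer safe. The byte values are illustrative. Note the difference in memory semantics: a buffer created from an `ArrayBuffer` shares that memory, while one created from another `Buffer` is a copy.

```javascript
// allocUnsafe skips zero-filling, so overwrite the memory before reading it
const scratch = Buffer.allocUnsafe(4).fill(0);

const fromString = Buffer.from('hi');        // string, UTF-8 by default
const fromHex = Buffer.from('6869', 'hex');  // same bytes, given as hex
const fromArray = Buffer.from([0x68, 0x69]); // bytes as numbers

// From an ArrayBuffer: the Buffer is a view that SHARES the memory
const ab = new ArrayBuffer(2);
const view = Buffer.from(ab);
new Uint8Array(ab)[0] = 0x68; // a write through another view...
// ...is visible through the Buffer too: view[0] is now 0x68

// From another Buffer: the bytes are COPIED
const copy = Buffer.from(fromString);
copy[0] = 0x48; // 'H'; fromString itself is unchanged

console.log(scratch, fromHex.equals(fromString), copy.toString(), fromString.toString());
```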

```javascript
buffer.toString()
```

Signature: `buf.toString([encoding[, start[, end]]])`

`encoding` can be `utf8` (the default), `hex`, `base64`, `utf16le`, `latin1`, or one of a few legacy encodings such as `ascii`.
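A short illustration of `encoding`, `start` and `end` on a sample string. `end` is exclusive, and the same encoding names work in the other direction with `Buffer.from(string, encoding)`:

```javascript
const buf = Buffer.from('hello');

console.log(buf.toString());             // hello (utf8 is the default)
console.log(buf.toString('hex'));        // 68656c6c6f
console.log(buf.toString('base64'));     // aGVsbG8=
console.log(buf.toString('utf8', 1, 3)); // el (bytes 1 and 2)

// Decoding back from base64
console.log(Buffer.from('aGVsbG8=', 'base64').toString()); // hello
```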

```javascript
const buffer = Buffer.from('👀')
const json = JSON.stringify(buffer)
const parsed = JSON.parse(json)

console.log(parsed) // prints { type: 'Buffer', data: [ 240, 159, 145, 128 ] }
console.log(Buffer.from(parsed.data)) // prints <Buffer f0 9f 91 80>
```

So `Buffer` instances are represented in JSON by an object that has a `type` property with the string value `'Buffer'` and a `data` property with an array of numbers, one per byte in the buffer.

When deserializing, `JSON.parse` only turns that JSON representation into a plain JavaScript object. To turn it back into a `Buffer`, the `data` array must be passed to `Buffer.from`.
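When buffers are nested inside larger objects, the conversion can be automated with the `reviver` argument of `JSON.parse`. This is a sketch. `bufferReviver` is a hypothetical helper, and it assumes that any object shaped like `{ type: 'Buffer', data: [...] }` should become a `Buffer`.

```javascript
// Hypothetical reviver: turns { type: 'Buffer', data: [...] } back into a Buffer.
// Any object that merely looks like this will also be converted.
function bufferReviver(key, value) {
  if (value && value.type === 'Buffer' && Array.isArray(value.data)) {
    return Buffer.from(value.data);
  }
  return value;
}

const message = { id: 1, payload: Buffer.from('👀') };
const json = JSON.stringify(message); // Buffer's toJSON() produces { type, data }
const restored = JSON.parse(json, bufferReviver);

console.log(Buffer.isBuffer(restored.payload)); // true
console.log(restored.payload.toString());       // 👀
```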

- `Readable`: A source of input. You receive data from a `Readable` stream.
- `Writable`: A destination. You stream data to a `Writable` stream. Sometimes referred to as a "sink", because it is the end destination for streaming data.
- `Duplex`: A stream that implements both the `Readable` and `Writable` interfaces. `Duplex` streams can receive data as well as produce data. An example is a TCP socket, where data flows in both directions.
- `Transform`: A type of `Duplex` stream where the data passing through the stream is altered, so the output differs from the input in some way. You can write data to a `Transform` stream and read it back after it has been transformed.
- `PassThrough`: A special type of `Transform` stream that does not alter the data passing through it. According to the Node.js documentation, it is primarily meant for examples and testing, though it can also be useful as a building block for new kinds of streams.
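The relationships in the list above can be checked with `instanceof`. This is a minimal sketch using a `PassThrough`, the most derived type. In Node.js, `Duplex` inherits from `Readable`, and `Writable`'s `instanceof` check also accepts `Duplex` streams.

```javascript
const EventEmitter = require('events');
const { Readable, Writable, Duplex, Transform, PassThrough } = require('stream');

const pass = new PassThrough();

console.log(pass instanceof Transform);    // true: PassThrough is a Transform
console.log(pass instanceof Duplex);       // true: Transform is a Duplex
console.log(pass instanceof Readable);     // true: Duplex inherits from Readable...
console.log(pass instanceof Writable);     // true: ...and is accepted as a Writable
console.log(pass instanceof EventEmitter); // true: every stream is an EventEmitter
```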

- All streams inherit from `EventEmitter`. Working with stream events directly is useful when you want finer-grained control over how a stream is consumed.

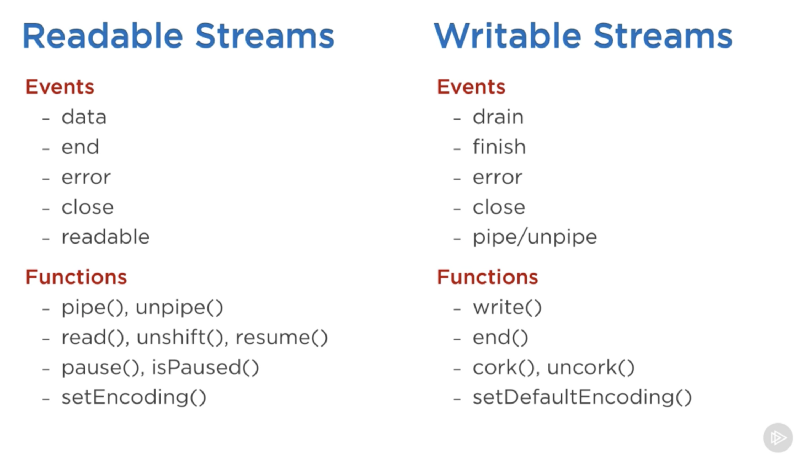

Readable stream events:

- `data`: Emitted when the stream outputs a chunk of data. The chunk is passed to the handler.
- `readable`: Emitted when there is data ready to be read from the stream.
- `end`: Emitted when no more data is available for consumption.
- `error`: An error has occurred within the stream, and an error object is passed to the handler. Unhandled stream errors can crash your program.
- `close`: Emitted when the stream and any of its underlying resources (a file descriptor, for example) have been closed. It indicates that no more events will be emitted and no further computation will occur.
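A small sketch that records the readable events in the order they fire, using a `Readable.from` stream over two sample strings. On current Node.js versions streams are auto-destroyed after `end`, which is why `close` follows it.

```javascript
const { Readable } = require('stream');

const events = [];
const readable = Readable.from(['a', 'b']);

readable.on('data', (chunk) => events.push(`data:${chunk}`));
readable.on('end', () => events.push('end')); // no more data
readable.on('close', () => {                  // underlying resources released
  events.push('close');
  console.log(events);
});
// Handling 'error' keeps a stream failure from crashing the process
readable.on('error', (err) => console.error('stream failed:', err));
```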

Writable stream events:

- `drain`: Emitted after a `write()` call has returned `false`, once the internal buffer has emptied and it is safe to write more data.
- `finish`: Emitted after `end()` has been called and all data has been flushed to the underlying system.
- `error`: An error occurred while writing data, and an error object is passed to the handler. Unhandled stream errors can crash your program.
- `close`: Emitted when the stream and any of its underlying resources (a file descriptor, for example) have been closed. It indicates that no more events will be emitted and no further computation will occur.
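The `drain` event is what makes backpressure work. When `write()` returns `false`, the producer should stop writing and wait for `drain`. The sketch below assumes a deliberately slow sink with a tiny `highWaterMark` (2 bytes, an arbitrary demo value). `writeAll` is a hypothetical helper, not a Node.js API.

```javascript
const { Writable } = require('stream');

const written = [];
let drainCount = 0;

// Slow sink: each write completes asynchronously
const sink = new Writable({
  highWaterMark: 2,
  write(chunk, encoding, callback) {
    written.push(chunk.toString());
    setImmediate(callback); // simulate asynchronous I/O
  }
});

sink.on('drain', () => { drainCount++; });
sink.on('finish', () => console.log('finish:', written, 'drain events:', drainCount));
sink.on('error', (err) => console.error('write failed:', err));

// Hypothetical helper: writes every chunk, pausing whenever write() returns false
function writeAll(stream, chunks, done) {
  let i = 0;
  function writeNext() {
    while (i < chunks.length) {
      if (!stream.write(chunks[i++])) {
        stream.once('drain', writeNext); // buffer full: resume after 'drain'
        return;
      }
    }
    done();
  }
  writeNext();
}

writeAll(sink, ['ab', 'cd', 'ef'], () => sink.end());
```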

- To connect streams together and start the flow of data, we use the `pipe` method available on readable streams. `readable.pipe(destination)` returns the destination, so calls can be chained, but `pipe` does not forward errors from one stream to the next.
- The `stream.pipeline` function takes any number of streams as arguments, and a callback function as its last argument. If an error occurs anywhere in the pipeline, the pipeline ends and the callback is invoked with the error that occurred. The callback is also invoked when the pipeline ends successfully, which gives us a way to know when the pipeline has completed.
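This is a small sketch of the error path described above. It assumes a hypothetical source that fails on its first read. `pipeline` passes the error to the callback and, per the Node.js documentation, destroys the other streams in the chain.

```javascript
const { Readable, Writable, pipeline } = require('stream');

// Hypothetical failing source: it errors as soon as it is read
const source = new Readable({
  read() {
    this.destroy(new Error('source failed'));
  }
});

const sink = new Writable({
  write(chunk, encoding, callback) { callback(); }
});

let pipelineError = null;

pipeline(source, sink, (err) => {
  pipelineError = err; // falsy when the pipeline completes successfully
  if (err) {
    console.error('Pipeline failed:', err.message);
  } else {
    console.log('Pipeline succeeded');
  }
});
```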

All streams created by Node.js APIs operate exclusively on strings and Buffer (or Uint8Array) objects. It is possible, however, for stream implementations to work with other types of JavaScript values. Stream instances are switched into object mode using the objectMode option when the stream is created.
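As an illustration of `objectMode`, this sketch passes plain objects through a `Transform` and into a `Writable`, both created with `objectMode: true`. `Readable.from` already uses object mode by default. The user records are made-up sample data.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

const users = [{ name: 'ada', age: 36 }, { name: 'alan', age: 41 }]; // sample data
const results = [];

// Each chunk is a whole JavaScript object rather than a string or Buffer
const toSummary = new Transform({
  objectMode: true,
  transform(user, encoding, callback) {
    callback(null, { name: user.name.toUpperCase(), adult: user.age >= 18 });
  }
});

const collect = new Writable({
  objectMode: true,
  write(obj, encoding, callback) {
    results.push(obj);
    callback();
  }
});

pipeline(Readable.from(users), toSummary, collect, (err) => {
  if (err) console.error('Pipeline failed:', err);
  else console.log(results);
});
```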

Both Writable and Readable streams will store data in an internal buffer that can be retrieved using writable.writableBuffer or readable.readableBuffer, respectively.

Data is buffered in Readable streams when the implementation calls `stream.push(chunk)`.

Data is buffered in Writable streams when the writable.write(chunk) method is called repeatedly.
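Both kinds of buffering can be observed through `readable.readableLength` and `writable.writableLength`, which report how much data currently sits in each internal buffer (in bytes, outside object mode). In this sketch the readable has a no-op `read()`, so data only arrives through `push()`. The writable never calls its callback, which simulates a stalled sink. Its `highWaterMark` of 4 bytes is an arbitrary demo value.

```javascript
const { Readable, Writable } = require('stream');

// Readable side: pushed chunks wait in the internal buffer until consumed
const readable = new Readable({ read() {} });
readable.push('abc');
readable.push('de');
console.log(readable.readableLength); // 5: nobody is reading yet

// Writable side: the first write is never completed, so later writes queue up
const writable = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) { /* intentionally never calls callback */ }
});
const first = writable.write('ab');  // true: 2 bytes buffered, below highWaterMark
const second = writable.write('cd'); // false: 4 bytes buffered, limit reached
console.log(first, second, writable.writableLength); // true false 4
```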

```javascript
const { Writable } = require('stream');

const data = [];

const writable = new Writable({
  decodeStrings: false, // pass strings to write() as-is instead of converting them to Buffers (ignored in objectMode)
  objectMode: true, // accept any kind of JavaScript value as a chunk
  write(chunk, enc, next) {
    if (chunk.toString().indexOf('a') >= 0) {
      next(new Error('chunk is invalid')); // reject chunks containing a lowercase 'a'
    } else {
      data.push(chunk);
      next();
    }
  }
});

writable.on('finish', () => { console.log('finished writing', data) });
writable.on('error', (err) => { console.error('write failed:', err.message) });

writable.write('A\n');
writable.write(1);
writable.write('C\n');
writable.end('nothing more to write');
```

```javascript
const { Readable } = require('stream');

const data = ['some', 'data', 'to', 'read'];

const readable = new Readable({
  encoding: 'utf8', // decode binary chunks to strings (the chunks pushed here are already strings)
  objectMode: true, // push any JavaScript value, not only strings and Buffers
  read(size) {
    if (data.length === 0) this.push(null); // pushing null ends the stream
    else this.push(data.shift());
  }
});

readable.on('data', (chunk) => { console.log('got data', chunk) });
readable.on('end', () => { console.log('finished reading') });
```

A simpler option is `stream.Readable.from(iterable[, options])`, which creates a readable stream from an iterable or async iterable (in object mode by default):

```javascript
const { Readable } = require('stream');

const readable = Readable.from(['some', 'data', 'to', 'read']);

readable.on('data', (chunk) => { console.log('got data', chunk) });
readable.on('end', () => { console.log('finished reading') });
```

```javascript
const { Duplex } = require('stream');

const myDuplex = new Duplex({
  read(size) {
    // Readable side: produce data with this.push(chunk); this.push(null) ends it
  },
  write(chunk, encoding, callback) {
    // Writable side: consume the chunk, then signal completion (or callback(err))
    callback();
  }
});
```

```javascript
const { Transform, pipeline } = require("stream");

// Upper-cases everything that flows through it
const upperCaseTransform = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  }
});

pipeline(process.stdin, upperCaseTransform, process.stdout, err => {
  if (err) {
    console.log("Pipeline encountered an error:", err);
  } else {
    console.log("Pipeline ended");
  }
});
```

`stream.finished(stream[, options], callback)` is a function to get notified when a stream is no longer readable or writable, or has experienced an error or a premature close event.

```javascript
const fs = require('fs');
const { finished } = require('stream');

const rs = fs.createReadStream('archive.tar');

finished(rs, (err) => {
  if (err) {
    console.error('Stream failed.', err);
  } else {
    console.log('Stream is done reading.');
  }
});

rs.resume(); // Drain the stream (consume the data without a 'data' handler).
```

`stream.pipeline()` is a module method for piping between streams and generators. It forwards errors, properly cleans up, and provides a callback when the pipeline is complete.

```javascript
const { PassThrough, pipeline } = require("stream");

const pass = new PassThrough();

pipeline(process.stdin, pass, process.stdout, err => {
  if (err) {
    console.log("Pipeline encountered an error:", err);
  } else {
    console.log("Pipeline ended");
  }
});

// Or, instead of pipeline() (use one or the other, not both).
// Unlike pipeline(), pipe() does not forward errors or clean up on failure:
// process.stdin.pipe(pass).pipe(process.stdout)
```
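Since `pipeline` also accepts generators, an async generator function can replace a hand-written `Transform` as a middle stage. It receives the previous stage as an async iterable and yields the transformed chunks. This is a sketch assuming a recent Node.js version with generator support in `pipeline`. The input words are sample data.

```javascript
const { Readable, Writable, pipeline } = require('stream');

const output = [];

pipeline(
  Readable.from(['some', 'data', 'to', 'read']),
  // Transform step written as an async generator
  async function* (source) {
    for await (const chunk of source) {
      yield chunk.toUpperCase();
    }
  },
  new Writable({
    objectMode: true,
    write(chunk, encoding, callback) {
      output.push(chunk);
      callback();
    }
  }),
  (err) => {
    if (err) console.error('Pipeline failed:', err);
    else console.log(output);
  }
);
```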