This script lets Google Search Console (GSC) users extract every report from the Index Coverage and Sitemap Coverage sections of the platform. I wrote a blog post about why I built this script.

The script uses the ECMAScript modules import/export syntax, so check that your Node.js version is above 20 before running it.
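If you prefer to check the version from code, a minimal sketch could look like the one below. The helper name `hasMinimumMajor` is hypothetical and not part of the script, and the threshold is passed as a parameter because treating version 20 itself as acceptable is an assumption.

```javascript
// Hypothetical helper: returns true when `version` (e.g. "20.11.1")
// has a major number of at least `minimumMajor`.
function hasMinimumMajor(version, minimumMajor) {
  const major = Number.parseInt(version.split('.')[0], 10);
  return Number.isInteger(major) && major >= minimumMajor;
}

// process.versions.node holds the running Node.js version without the "v" prefix.
// Assumption: 20 is used as the minimum major version.
if (!hasMinimumMajor(process.versions.node, 20)) {
  console.warn(`Node.js ${process.versions.node} detected, which may be too old for this script.`);
}
```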

# Check Node version

node -v

After downloading or cloning the repo, install the modules the script needs.

npm install

After that, you can run the script with the npm start command from your terminal.

npm start

A prompt in your terminal will ask for your Google Account email address and password. These are used to log in automatically through the headless browser.

If you have 2-step Verification enabled, the script shows a warning message and waits 30 seconds to give you time to verify access through one of your devices.

Once you are verified and logged in, the script will extract the list of all GSC properties in your account. At this point you choose which properties you would like to extract data from.

Select one or multiple properties using the spacebar. Move up and down using the arrow keys.

When this is done, you will see the processing messages in your terminal while the script runs.

The script will create an "index-results_${date}.xlsx" Excel file. When you choose more than 1 property, the file contains 1 summary tab with the number of indexed URLs per property. It also contains up to 4 tabs per property:

- A summary of the index coverage extraction of the property.

- The individual URLs extracted from the Coverage section.

- A summary of the index coverage extraction from the Sitemap section.

- The individual URLs extracted from the Sitemap section.

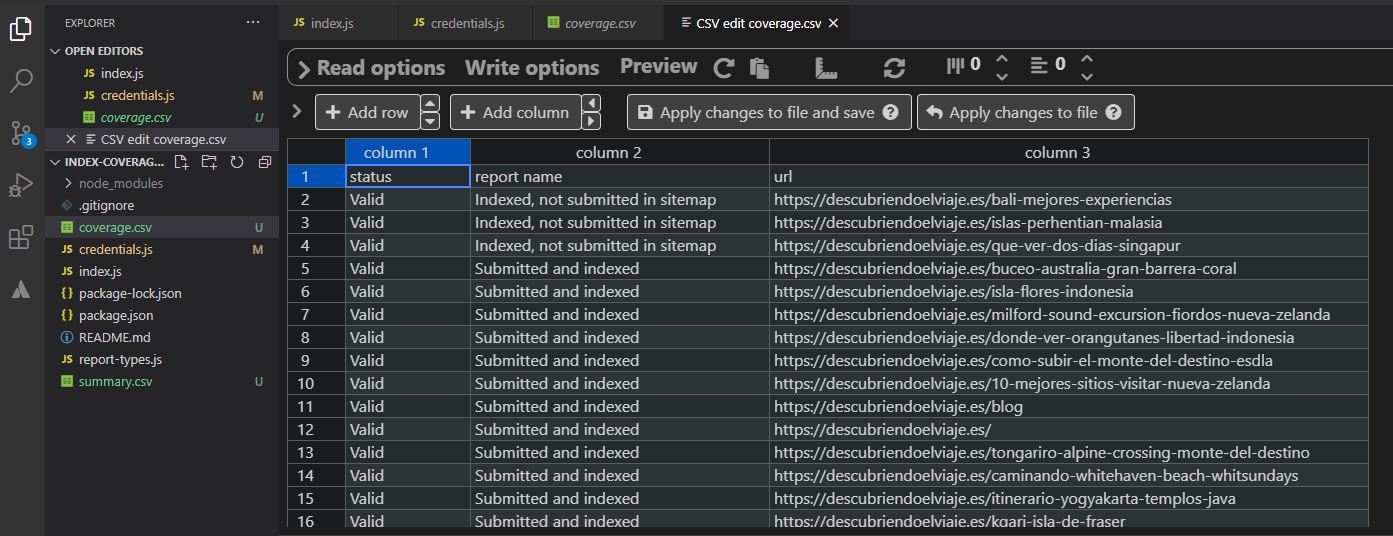

The "sitename_COV" tab and the "coverage.csv" file will contain all the URLs that have been extracted from each individual coverage report. If you have requested a Domain Property, the tab in Excel and the CSV will be preceded by DOM.

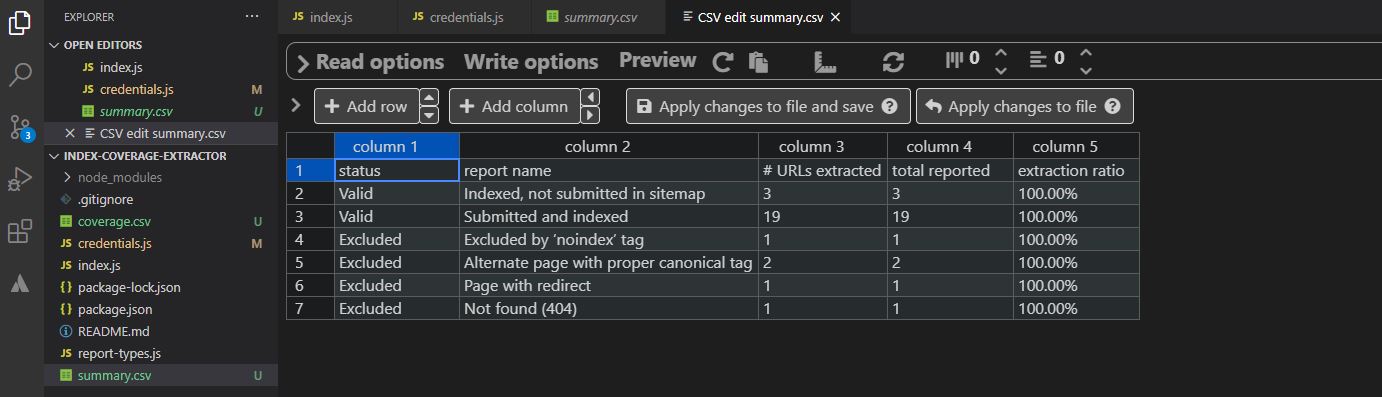

The "sitename_SUM" tab and the "summary.csv" file will contain the number of URLs extracted per report, the total number that GSC reports in the user interface (either the same or higher) and an "extraction ratio": the number of URLs extracted divided by the total number of URLs reported by GSC.
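As an illustration of the extraction ratio, here is a minimal sketch. The function name `extractionRatio` and the sample numbers are hypothetical, and returning 0 for an empty report is an assumption rather than the script's confirmed behaviour.

```javascript
// Hypothetical helper: URLs extracted divided by URLs reported by GSC.
function extractionRatio(extracted, reported) {
  // Assumption: an empty report yields 0 instead of dividing by zero.
  if (reported === 0) return 0;
  return extracted / reported;
}

// Sample numbers for illustration only.
const ratio = extractionRatio(1000, 2500);
console.log(`${(ratio * 100).toFixed(1)}%`); // 40.0%
```

A ratio of 1 means every URL that GSC reports was extracted; anything lower means GSC reports more URLs than the script could extract.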

The "sitename_MAPS" tab and the "sitemap.csv" file will contain all the URLs that have been extracted from each individual sitemap coverage report.

The "sitename_SUM_MAPS" tab and the "sum-sitemap.csv" file will contain a summary of the top-level coverage numbers per sitemap reported by GSC.

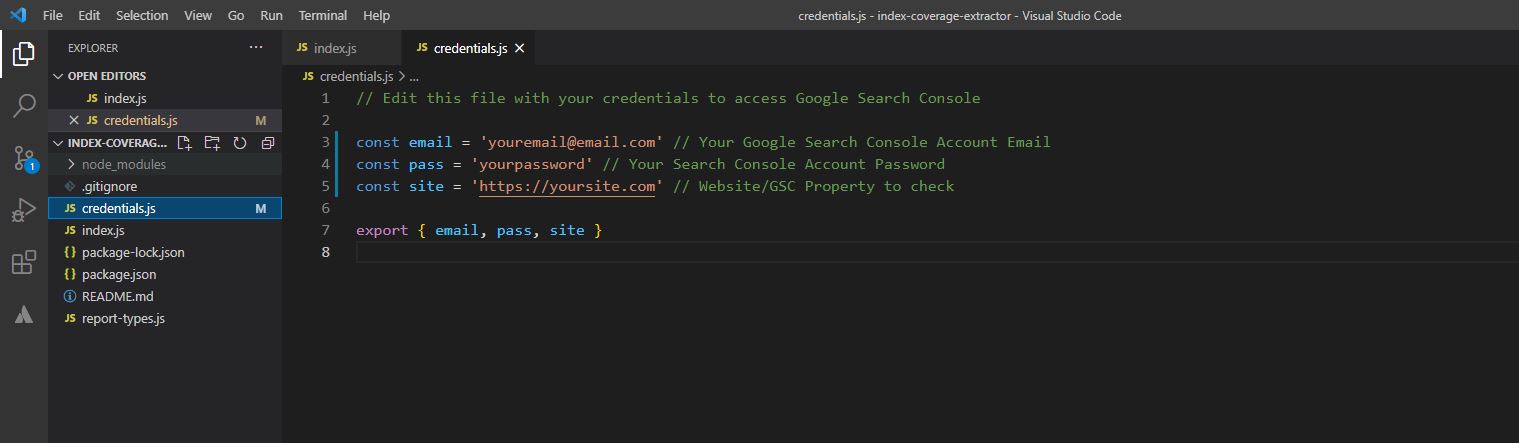

You can fill in the credentials.js file with your email and password so you don't have to type them in the terminal each time the script runs.

Verified GSC properties can be added in the credentials.js file. You can add them as a single string for only 1 property OR as an array for multiple properties.

Remember that if you want to extract data from a Domain Property you should add sc-domain: in front of the domain (sc-domain:yourdomain.com).
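Because the setting can be either a string or an array, code that reads it has to handle both shapes. The sketch below shows one way to do that and to spot Domain Properties by their sc-domain: prefix. The helper name `normaliseSites` and the returned object shape are hypothetical, not taken from the script.

```javascript
// Hypothetical helper: accept a single property string or an array of them
// and always return an array of descriptors.
function normaliseSites(site) {
  const list = Array.isArray(site) ? site : [site];
  return list.map((property) => ({
    property,
    // Domain Properties are written with the sc-domain: prefix.
    isDomainProperty: property.startsWith('sc-domain:'),
  }));
}

console.log(normaliseSites('https://yoursite.com/'));
console.log(normaliseSites(['https://yoursite.com/', 'sc-domain:yourdomain.com']));
```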

// Single property

const site = 'https://yoursite.com/';

// OR Multiple properties

const site = ['https://yoursite.com/', 'sc-domain:yourdomain.com'];

After your first login with the tool, a cookies.json file is created so you don't have to go through the login process every time. If you use multiple accounts, remember to delete this file.

In some cases you might want to watch the browser automation happening in real time. For that, you can change the headless variable.

// Change to false to see the automation

const headless = false;

Since each GSC property can contain many sitemaps, and extracting them takes more time, you can choose whether to extract sitemap coverage data or not.

// Change to false to prevent the script from extracting sitemap coverage data

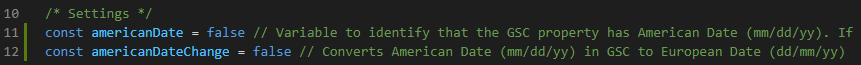

const sitemapExtract = false;The script extracts the "Latest updated" dates that GSC provides. Hence the date can be in two different formats: American date (mm/dd/yyyy) and European date (dd/mm/yyyy). Therefore there is an option to set which date format you would like the script to output the dates:

The default setting assumes your property shows the dates in European date format (dd/mm/yyyy). If your GSC property shows the dates in American date format, set americanDate = true. If your property is in American date format but you'd like the output in European date format, also set americanDateChange = true.
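To make the conversion concrete, here is a minimal sketch of turning an American date into a European one. The helper `swapDayAndMonth` and the sample date are hypothetical, and the way the two settings are combined is an assumed reading of the description above, not the script's confirmed logic.

```javascript
// Hypothetical helper: swap the first two parts of a slash-separated date,
// so "mm/dd/yyyy" becomes "dd/mm/yyyy".
function swapDayAndMonth(dateString) {
  const [first, second, year] = dateString.split('/');
  return [second, first, year].join('/');
}

// Assumed reading of the two settings.
const americanDate = true; // the property shows mm/dd/yyyy
const americanDateChange = true; // convert the output to dd/mm/yyyy

const fromGsc = '12/31/2023'; // sample value for illustration
const output = americanDate && americanDateChange ? swapDayAndMonth(fromGsc) : fromGsc;
console.log(output); // 31/12/2023
```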

A big difference in this version is that it only extracts the reports that are available, instead of looping through all the coverage reports GSC offers (the old report-types.js). This keeps the number of requests to Google Search Console to the minimum required.

- All Indexed URLs

- Indexed, though blocked by robots.txt

- Page indexed without content

- Excluded by ‘noindex’ tag

- Blocked by page removal tool

- Blocked by robots.txt

- Blocked due to unauthorized request (401)

- Crawled - currently not indexed

- Discovered - currently not indexed

- Alternate page with proper canonical tag

- Duplicate without user-selected canonical

- Duplicate, Google chose different canonical than user

- Not found (404)

- Page with redirect

- Soft 404

- Duplicate, submitted URL not selected as canonical

- Blocked due to access forbidden (403)

- Blocked due to other 4xx issue

- Server error (5xx)

- Redirect error

- Submitted URL blocked by robots.txt

- Submitted URL marked ‘noindex’

- Submitted URL seems to be a Soft 404

- Submitted URL has crawl issue

- Submitted URL not found (404)

- Submitted URL returned 403

- Submitted URL returns unauthorized request (401)

- Submitted URL blocked due to other 4xx issue
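The idea of requesting only the reports a property actually has can be sketched as a simple filter over report names like the ones listed here. Everything in the example is hypothetical: the helper `reportsToExtract`, the shortened list of names and the way the available reports are obtained are assumptions for illustration, not the script's implementation.

```javascript
// Hypothetical data: a few of the report names from the list.
const knownReports = ['All Indexed URLs', 'Not found (404)', 'Soft 404', 'Server error (5xx)'];

// Hypothetical helper: keep only the reports that the property actually shows.
function reportsToExtract(known, available) {
  const availableSet = new Set(available);
  return known.filter((name) => availableSet.has(name));
}

// Assumption: these names were read from the property's coverage page.
const availableReports = ['All Indexed URLs', 'Soft 404'];
console.log(reportsToExtract(knownReports, availableReports));
// [ 'All Indexed URLs', 'Soft 404' ]
```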