diff --git a/projects/pose_anything/README.md b/projects/pose_anything/README.md

new file mode 100644

index 0000000000..c00b4a914e

--- /dev/null

+++ b/projects/pose_anything/README.md

@@ -0,0 +1,82 @@

+# Pose Anything: A Graph-Based Approach for Category-Agnostic Pose Estimation

+

+## [Paper](https://arxiv.org/pdf/2311.17891.pdf) | [Project Page](https://orhir.github.io/pose-anything/) | [Official Repo](https://github.com/orhir/PoseAnything)

+

+By [Or Hirschorn](https://scholar.google.co.il/citations?user=GgFuT_QAAAAJ&hl=iw&oi=ao)

+and [Shai Avidan](https://scholar.google.co.il/citations?hl=iw&user=hpItE1QAAAAJ)

+

+

+

+## Introduction

+

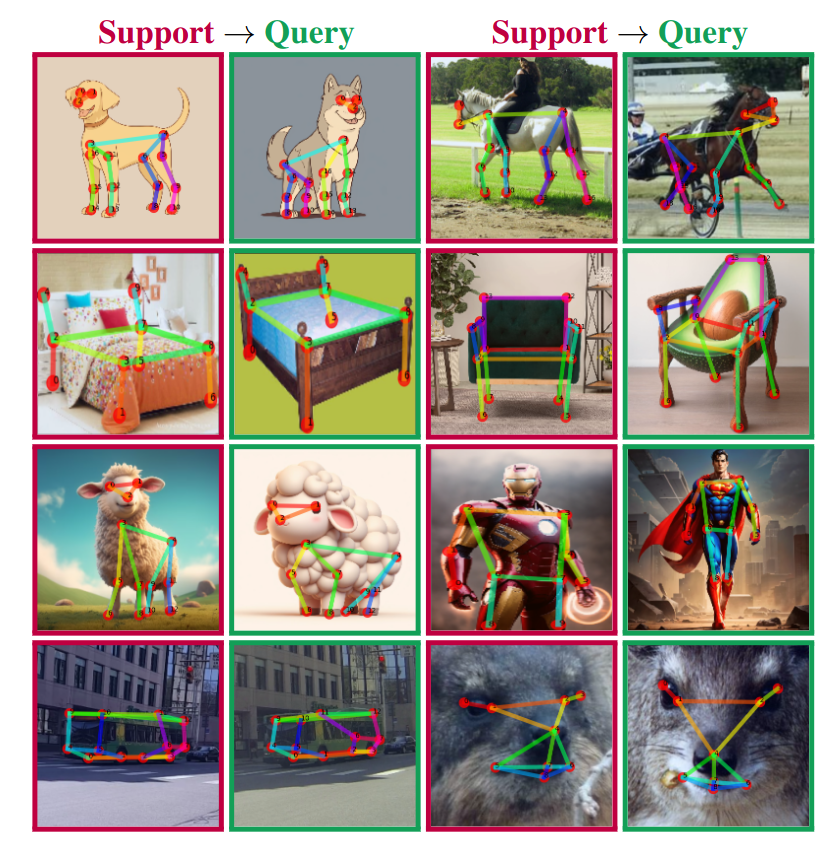

+We present a novel approach to CAPE that leverages the inherent geometrical

+relations between keypoints through a newly designed Graph Transformer Decoder.

+By capturing and incorporating this crucial structural information, our method

+enhances the accuracy of keypoint localization, marking a significant departure

+from conventional CAPE techniques that treat keypoints as isolated entities.

+

+## Citation

+

+If you find this useful, please cite this work as follows:

+

+```bibtex

+@misc{hirschorn2023pose,

+ title={Pose Anything: A Graph-Based Approach for Category-Agnostic Pose Estimation},

+ author={Or Hirschorn and Shai Avidan},

+ year={2023},

+ eprint={2311.17891},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+## Getting Started

+

+📣 Pose Anything is available on OpenXLab now. [\[Try it online\]](https://openxlab.org.cn/apps/detail/orhir/Pose-Anything)

+

+### Install Dependencies

+

+We recommend using a virtual environment for running our code.

+After installing MMPose, you can install the rest of the dependencies by

+running:

+

+```

+pip install timm

+```

+

+### Pretrained Weights

+

+The full list of pretrained models can be found in

+the [Official Repo](https://github.com/orhir/PoseAnything).

+

+## Demo on Custom Images

+

+***A bigger and more accurate version of the model - COMING SOON!***

+

+Download

+the [pretrained model](https://drive.google.com/file/d/1RT1Q8AMEa1kj6k9ZqrtWIKyuR4Jn4Pqc/view?usp=drive_link)

+and run:

+

+```

+python demo.py --support [path_to_support_image] --query [path_to_query_image] --config configs/demo_b.py --checkpoint [path_to_pretrained_ckpt]

+```

+

+***Note:*** The demo code supports any config with suitable checkpoint file.

+More pre-trained models can be found in the official repo.

+

+## Training and Testing on MP-100 Dataset

+

+**We currently only support demo on custom images through the MMPose repo.**

+

+**For training and testing on the MP-100 dataset, please refer to

+the [Official Repo](https://github.com/orhir/PoseAnything).**

+

+## Acknowledgement

+

+Our code is based on code from:

+

+- [CapeFormer](https://github.com/flyinglynx/CapeFormer)

+

+## License

+

+This project is released under the Apache 2.0 license.

diff --git a/projects/pose_anything/configs/demo.py b/projects/pose_anything/configs/demo.py

new file mode 100644

index 0000000000..70a468a8f4

--- /dev/null

+++ b/projects/pose_anything/configs/demo.py

@@ -0,0 +1,207 @@

+log_level = 'INFO'

+load_from = None

+resume_from = None

+dist_params = dict(backend='nccl')

+workflow = [('train', 1)]

+checkpoint_config = dict(interval=20)

+evaluation = dict(

+ interval=25,

+ metric=['PCK', 'NME', 'AUC', 'EPE'],

+ key_indicator='PCK',

+ gpu_collect=True,

+ res_folder='')

+optimizer = dict(

+ type='Adam',

+ lr=1e-5,

+)

+

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(

+ policy='step',

+ warmup='linear',

+ warmup_iters=1000,

+ warmup_ratio=0.001,

+ step=[160, 180])

+total_epochs = 200

+log_config = dict(

+ interval=50,

+ hooks=[dict(type='TextLoggerHook'),

+ dict(type='TensorboardLoggerHook')])

+

+channel_cfg = dict(

+ num_output_channels=1,

+ dataset_joints=1,

+ dataset_channel=[

+ [

+ 0,

+ ],

+ ],

+ inference_channel=[

+ 0,

+ ],

+ max_kpt_num=100)

+

+# model settings

+model = dict(

+ type='TransformerPoseTwoStage',

+ pretrained='swinv2_large',

+ encoder_config=dict(

+ type='SwinTransformerV2',

+ embed_dim=192,

+ depths=[2, 2, 18, 2],

+ num_heads=[6, 12, 24, 48],

+ window_size=16,

+ pretrained_window_sizes=[12, 12, 12, 6],

+ drop_path_rate=0.2,

+ img_size=256,

+ ),

+ keypoint_head=dict(

+ type='TwoStageHead',

+ in_channels=1536,

+ transformer=dict(

+ type='TwoStageSupportRefineTransformer',

+ d_model=384,

+ nhead=8,

+ num_encoder_layers=3,

+ num_decoder_layers=3,

+ dim_feedforward=1536,

+ dropout=0.1,

+ similarity_proj_dim=384,

+ dynamic_proj_dim=192,

+ activation='relu',

+ normalize_before=False,

+ return_intermediate_dec=True),

+ share_kpt_branch=False,

+ num_decoder_layer=3,

+ with_heatmap_loss=True,

+ support_pos_embed=False,

+ heatmap_loss_weight=2.0,

+ skeleton_loss_weight=0.02,

+ num_samples=0,

+ support_embedding_type='fixed',

+ num_support=100,

+ support_order_dropout=-1,

+ positional_encoding=dict(

+ type='SinePositionalEncoding', num_feats=192, normalize=True)),

+ # training and testing settings

+ train_cfg=dict(),

+ test_cfg=dict(

+ flip_test=False,

+ post_process='default',

+ shift_heatmap=True,

+ modulate_kernel=11))

+

+data_cfg = dict(

+ image_size=[256, 256],

+ heatmap_size=[64, 64],

+ num_output_channels=channel_cfg['num_output_channels'],

+ num_joints=channel_cfg['dataset_joints'],

+ dataset_channel=channel_cfg['dataset_channel'],

+ inference_channel=channel_cfg['inference_channel'])

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='TopDownGetRandomScaleRotation', rot_factor=15,

+ scale_factor=0.15),

+ dict(type='TopDownAffineFewShot'),

+ dict(type='ToTensor'),

+ dict(

+ type='NormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(type='TopDownGenerateTargetFewShot', sigma=1),

+ dict(

+ type='Collect',

+ keys=['img', 'target', 'target_weight'],

+ meta_keys=[

+ 'image_file',

+ 'joints_3d',

+ 'joints_3d_visible',

+ 'center',

+ 'scale',

+ 'rotation',

+ 'bbox_score',

+ 'flip_pairs',

+ 'category_id',

+ 'skeleton',

+ ]),

+]

+

+valid_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='TopDownAffineFewShot'),

+ dict(type='ToTensor'),

+ dict(

+ type='NormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(type='TopDownGenerateTargetFewShot', sigma=1),

+ dict(

+ type='Collect',

+ keys=['img', 'target', 'target_weight'],

+ meta_keys=[

+ 'image_file',

+ 'joints_3d',

+ 'joints_3d_visible',

+ 'center',

+ 'scale',

+ 'rotation',

+ 'bbox_score',

+ 'flip_pairs',

+ 'category_id',

+ 'skeleton',

+ ]),

+]

+

+test_pipeline = valid_pipeline

+

+data_root = 'data/mp100'

+data = dict(

+ samples_per_gpu=8,

+ workers_per_gpu=8,

+ train=dict(

+ type='TransformerPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_all.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ pipeline=train_pipeline),

+ val=dict(

+ type='TransformerPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_split1_val.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ num_queries=15,

+ num_episodes=100,

+ pipeline=valid_pipeline),

+ test=dict(

+ type='TestPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_split1_test.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ num_queries=15,

+ num_episodes=200,

+ pck_threshold_list=[0.05, 0.10, 0.15, 0.2, 0.25],

+ pipeline=test_pipeline),

+)

+vis_backends = [

+ dict(type='LocalVisBackend'),

+ dict(type='TensorboardVisBackend'),

+]

+visualizer = dict(

+ type='PoseLocalVisualizer', vis_backends=vis_backends, name='visualizer')

+

+shuffle_cfg = dict(interval=1)

diff --git a/projects/pose_anything/configs/demo_b.py b/projects/pose_anything/configs/demo_b.py

new file mode 100644

index 0000000000..2b7d8b30ff

--- /dev/null

+++ b/projects/pose_anything/configs/demo_b.py

@@ -0,0 +1,205 @@

+custom_imports = dict(imports=['models'])

+

+log_level = 'INFO'

+load_from = None

+resume_from = None

+dist_params = dict(backend='nccl')

+workflow = [('train', 1)]

+checkpoint_config = dict(interval=20)

+evaluation = dict(

+ interval=25,

+ metric=['PCK', 'NME', 'AUC', 'EPE'],

+ key_indicator='PCK',

+ gpu_collect=True,

+ res_folder='')

+optimizer = dict(

+ type='Adam',

+ lr=1e-5,

+)

+

+optimizer_config = dict(grad_clip=None)

+# learning policy

+lr_config = dict(

+ policy='step',

+ warmup='linear',

+ warmup_iters=1000,

+ warmup_ratio=0.001,

+ step=[160, 180])

+total_epochs = 200

+log_config = dict(

+ interval=50,

+ hooks=[dict(type='TextLoggerHook'),

+ dict(type='TensorboardLoggerHook')])

+

+channel_cfg = dict(

+ num_output_channels=1,

+ dataset_joints=1,

+ dataset_channel=[

+ [

+ 0,

+ ],

+ ],

+ inference_channel=[

+ 0,

+ ],

+ max_kpt_num=100)

+

+# model settings

+model = dict(

+ type='PoseAnythingModel',

+ pretrained='swinv2_base',

+ encoder_config=dict(

+ type='SwinTransformerV2',

+ embed_dim=128,

+ depths=[2, 2, 18, 2],

+ num_heads=[4, 8, 16, 32],

+ window_size=14,

+ pretrained_window_sizes=[12, 12, 12, 6],

+ drop_path_rate=0.1,

+ img_size=224,

+ ),

+ keypoint_head=dict(

+ type='PoseHead',

+ in_channels=1024,

+ transformer=dict(

+ type='EncoderDecoder',

+ d_model=256,

+ nhead=8,

+ num_encoder_layers=3,

+ num_decoder_layers=3,

+ graph_decoder='pre',

+ dim_feedforward=1024,

+ dropout=0.1,

+ similarity_proj_dim=256,

+ dynamic_proj_dim=128,

+ activation='relu',

+ normalize_before=False,

+ return_intermediate_dec=True),

+ share_kpt_branch=False,

+ num_decoder_layer=3,

+ with_heatmap_loss=True,

+ heatmap_loss_weight=2.0,

+ support_order_dropout=-1,

+ positional_encoding=dict(

+ type='SinePositionalEncoding', num_feats=128, normalize=True)),

+ # training and testing settings

+ train_cfg=dict(),

+ test_cfg=dict(

+ flip_test=False,

+ post_process='default',

+ shift_heatmap=True,

+ modulate_kernel=11))

+

+data_cfg = dict(

+ image_size=[224, 224],

+ heatmap_size=[64, 64],

+ num_output_channels=channel_cfg['num_output_channels'],

+ num_joints=channel_cfg['dataset_joints'],

+ dataset_channel=channel_cfg['dataset_channel'],

+ inference_channel=channel_cfg['inference_channel'])

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='TopDownGetRandomScaleRotation', rot_factor=15,

+ scale_factor=0.15),

+ dict(type='TopDownAffineFewShot'),

+ dict(type='ToTensor'),

+ dict(

+ type='NormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(type='TopDownGenerateTargetFewShot', sigma=1),

+ dict(

+ type='Collect',

+ keys=['img', 'target', 'target_weight'],

+ meta_keys=[

+ 'image_file',

+ 'joints_3d',

+ 'joints_3d_visible',

+ 'center',

+ 'scale',

+ 'rotation',

+ 'bbox_score',

+ 'flip_pairs',

+ 'category_id',

+ 'skeleton',

+ ]),

+]

+

+valid_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='TopDownAffineFewShot'),

+ dict(type='ToTensor'),

+ dict(

+ type='NormalizeTensor',

+ mean=[0.485, 0.456, 0.406],

+ std=[0.229, 0.224, 0.225]),

+ dict(type='TopDownGenerateTargetFewShot', sigma=1),

+ dict(

+ type='Collect',

+ keys=['img', 'target', 'target_weight'],

+ meta_keys=[

+ 'image_file',

+ 'joints_3d',

+ 'joints_3d_visible',

+ 'center',

+ 'scale',

+ 'rotation',

+ 'bbox_score',

+ 'flip_pairs',

+ 'category_id',

+ 'skeleton',

+ ]),

+]

+

+test_pipeline = valid_pipeline

+

+data_root = 'data/mp100'

+data = dict(

+ samples_per_gpu=8,

+ workers_per_gpu=8,

+ train=dict(

+ type='TransformerPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_split1_train.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ pipeline=train_pipeline),

+ val=dict(

+ type='TransformerPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_split1_val.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ num_queries=15,

+ num_episodes=100,

+ pipeline=valid_pipeline),

+ test=dict(

+ type='TestPoseDataset',

+ ann_file=f'{data_root}/annotations/mp100_split1_test.json',

+ img_prefix=f'{data_root}/images/',

+ # img_prefix=f'{data_root}',

+ data_cfg=data_cfg,

+ valid_class_ids=None,

+ max_kpt_num=channel_cfg['max_kpt_num'],

+ num_shots=1,

+ num_queries=15,

+ num_episodes=200,

+ pck_threshold_list=[0.05, 0.10, 0.15, 0.2, 0.25],

+ pipeline=test_pipeline),

+)

+vis_backends = [

+ dict(type='LocalVisBackend'),

+ dict(type='TensorboardVisBackend'),

+]

+visualizer = dict(

+ type='PoseLocalVisualizer', vis_backends=vis_backends, name='visualizer')

+

+shuffle_cfg = dict(interval=1)

diff --git a/projects/pose_anything/datasets/__init__.py b/projects/pose_anything/datasets/__init__.py

new file mode 100644

index 0000000000..86bb3ce651

--- /dev/null

+++ b/projects/pose_anything/datasets/__init__.py

@@ -0,0 +1 @@

+from .pipelines import * # noqa

diff --git a/projects/pose_anything/datasets/builder.py b/projects/pose_anything/datasets/builder.py

new file mode 100644

index 0000000000..9f44687143

--- /dev/null

+++ b/projects/pose_anything/datasets/builder.py

@@ -0,0 +1,56 @@

+from mmengine.dataset import RepeatDataset

+from mmengine.registry import build_from_cfg

+from torch.utils.data.dataset import ConcatDataset

+

+from mmpose.datasets.builder import DATASETS

+

+

+def _concat_cfg(cfg):

+ replace = ['ann_file', 'img_prefix']

+ channels = ['num_joints', 'dataset_channel']

+ concat_cfg = []

+ for i in range(len(cfg['type'])):

+ cfg_tmp = cfg.deepcopy()

+ cfg_tmp['type'] = cfg['type'][i]

+ for item in replace:

+ assert item in cfg_tmp

+ assert len(cfg['type']) == len(cfg[item]), (cfg[item])

+ cfg_tmp[item] = cfg[item][i]

+ for item in channels:

+ assert item in cfg_tmp['data_cfg']

+ assert len(cfg['type']) == len(cfg['data_cfg'][item])

+ cfg_tmp['data_cfg'][item] = cfg['data_cfg'][item][i]

+ concat_cfg.append(cfg_tmp)

+ return concat_cfg

+

+

+def _check_vaild(cfg):

+ replace = ['num_joints', 'dataset_channel']

+ if isinstance(cfg['data_cfg'][replace[0]], (list, tuple)):

+ for item in replace:

+ cfg['data_cfg'][item] = cfg['data_cfg'][item][0]

+ return cfg

+

+

+def build_dataset(cfg, default_args=None):

+ """Build a dataset from config dict.

+

+ Args:

+ cfg (dict): Config dict. It should at least contain the key "type".

+ default_args (dict, optional): Default initialization arguments.

+ Default: None.

+

+ Returns:

+ Dataset: The constructed dataset.

+ """

+ if isinstance(cfg['type'],

+ (list, tuple)): # In training, type=TransformerPoseDataset

+ dataset = ConcatDataset(

+ [build_dataset(c, default_args) for c in _concat_cfg(cfg)])

+ elif cfg['type'] == 'RepeatDataset':

+ dataset = RepeatDataset(

+ build_dataset(cfg['dataset'], default_args), cfg['times'])

+ else:

+ cfg = _check_vaild(cfg)

+ dataset = build_from_cfg(cfg, DATASETS, default_args)

+ return dataset

diff --git a/projects/pose_anything/datasets/datasets/__init__.py b/projects/pose_anything/datasets/datasets/__init__.py

new file mode 100644

index 0000000000..124dcc3b15

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/__init__.py

@@ -0,0 +1,7 @@

+from .mp100 import (FewShotBaseDataset, FewShotKeypointDataset,

+ TransformerBaseDataset, TransformerPoseDataset)

+

+__all__ = [

+ 'FewShotBaseDataset', 'FewShotKeypointDataset', 'TransformerBaseDataset',

+ 'TransformerPoseDataset'

+]

diff --git a/projects/pose_anything/datasets/datasets/mp100/__init__.py b/projects/pose_anything/datasets/datasets/mp100/__init__.py

new file mode 100644

index 0000000000..229353517b

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/__init__.py

@@ -0,0 +1,11 @@

+from .fewshot_base_dataset import FewShotBaseDataset

+from .fewshot_dataset import FewShotKeypointDataset

+from .test_base_dataset import TestBaseDataset

+from .test_dataset import TestPoseDataset

+from .transformer_base_dataset import TransformerBaseDataset

+from .transformer_dataset import TransformerPoseDataset

+

+__all__ = [

+ 'FewShotKeypointDataset', 'FewShotBaseDataset', 'TransformerPoseDataset',

+ 'TransformerBaseDataset', 'TestBaseDataset', 'TestPoseDataset'

+]

diff --git a/projects/pose_anything/datasets/datasets/mp100/fewshot_base_dataset.py b/projects/pose_anything/datasets/datasets/mp100/fewshot_base_dataset.py

new file mode 100644

index 0000000000..2746a0a35c

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/fewshot_base_dataset.py

@@ -0,0 +1,235 @@

+import copy

+from abc import ABCMeta, abstractmethod

+

+import json_tricks as json

+import numpy as np

+from mmcv.parallel import DataContainer as DC

+from torch.utils.data import Dataset

+

+from mmpose.core.evaluation.top_down_eval import keypoint_pck_accuracy

+from mmpose.datasets import DATASETS

+from mmpose.datasets.pipelines import Compose

+

+

+@DATASETS.register_module()

+class FewShotBaseDataset(Dataset, metaclass=ABCMeta):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ test_mode=False):

+ self.image_info = {}

+ self.ann_info = {}

+

+ self.annotations_path = ann_file

+ if not img_prefix.endswith('/'):

+ img_prefix = img_prefix + '/'

+ self.img_prefix = img_prefix

+ self.pipeline = pipeline

+ self.test_mode = test_mode

+

+ self.ann_info['image_size'] = np.array(data_cfg['image_size'])

+ self.ann_info['heatmap_size'] = np.array(data_cfg['heatmap_size'])

+ self.ann_info['num_joints'] = data_cfg['num_joints']

+

+ self.ann_info['flip_pairs'] = None

+

+ self.ann_info['inference_channel'] = data_cfg['inference_channel']

+ self.ann_info['num_output_channels'] = data_cfg['num_output_channels']

+ self.ann_info['dataset_channel'] = data_cfg['dataset_channel']

+

+ self.db = []

+ self.num_shots = 1

+ self.paired_samples = []

+ self.pipeline = Compose(self.pipeline)

+

+ @abstractmethod

+ def _get_db(self):

+ """Load dataset."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def _select_kpt(self, obj, kpt_id):

+ """Select kpt."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def evaluate(self, cfg, preds, output_dir, *args, **kwargs):

+ """Evaluate keypoint results."""

+ raise NotImplementedError

+

+ @staticmethod

+ def _write_keypoint_results(keypoints, res_file):

+ """Write results into a json file."""

+

+ with open(res_file, 'w') as f:

+ json.dump(keypoints, f, sort_keys=True, indent=4)

+

+ def _report_metric(self,

+ res_file,

+ metrics,

+ pck_thr=0.2,

+ pckh_thr=0.7,

+ auc_nor=30):

+ """Keypoint evaluation.

+

+ Args:

+ res_file (str): Json file stored prediction results.

+ metrics (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'PCKh', 'AUC', 'EPE'.

+ pck_thr (float): PCK threshold, default as 0.2.

+ pckh_thr (float): PCKh threshold, default as 0.7.

+ auc_nor (float): AUC normalization factor, default as 30 pixel.

+

+ Returns:

+ List: Evaluation results for evaluation metric.

+ """

+ info_str = []

+

+ with open(res_file, 'r') as fin:

+ preds = json.load(fin)

+ assert len(preds) == len(self.paired_samples)

+

+ outputs = []

+ gts = []

+ masks = []

+ threshold_bbox = []

+ threshold_head_box = []

+

+ for pred, pair in zip(preds, self.paired_samples):

+ item = self.db[pair[-1]]

+ outputs.append(np.array(pred['keypoints'])[:, :-1])

+ gts.append(np.array(item['joints_3d'])[:, :-1])

+

+ mask_query = ((np.array(item['joints_3d_visible'])[:, 0]) > 0)

+ mask_sample = ((np.array(

+ self.db[pair[0]]['joints_3d_visible'])[:, 0]) > 0)

+ for id_s in pair[:-1]:

+ mask_sample = np.bitwise_and(

+ mask_sample,

+ ((np.array(self.db[id_s]['joints_3d_visible'])[:, 0]) > 0))

+ masks.append(np.bitwise_and(mask_query, mask_sample))

+

+ if 'PCK' in metrics:

+ bbox = np.array(item['bbox'])

+ bbox_thr = np.max(bbox[2:])

+ threshold_bbox.append(np.array([bbox_thr, bbox_thr]))

+ if 'PCKh' in metrics:

+ head_box_thr = item['head_size']

+ threshold_head_box.append(

+ np.array([head_box_thr, head_box_thr]))

+

+ if 'PCK' in metrics:

+ pck_avg = []

+ for (output, gt, mask, thr_bbox) in zip(outputs, gts, masks,

+ threshold_bbox):

+ _, pck, _ = keypoint_pck_accuracy(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0), pck_thr,

+ np.expand_dims(thr_bbox, 0))

+ pck_avg.append(pck)

+ info_str.append(('PCK', np.mean(pck_avg)))

+

+ return info_str

+

+ def _merge_obj(self, Xs_list, Xq, idx):

+ """merge Xs_list and Xq.

+

+ :param Xs_list: N-shot samples X

+ :param Xq: query X

+ :param idx: id of paired_samples

+ :return: Xall

+ """

+ Xall = dict()

+ Xall['img_s'] = [Xs['img'] for Xs in Xs_list]

+ Xall['target_s'] = [Xs['target'] for Xs in Xs_list]

+ Xall['target_weight_s'] = [Xs['target_weight'] for Xs in Xs_list]

+ xs_img_metas = [Xs['img_metas'].data for Xs in Xs_list]

+

+ Xall['img_q'] = Xq['img']

+ Xall['target_q'] = Xq['target']

+ Xall['target_weight_q'] = Xq['target_weight']

+ xq_img_metas = Xq['img_metas'].data

+

+ img_metas = dict()

+ for key in xq_img_metas.keys():

+ img_metas['sample_' + key] = [

+ xs_img_meta[key] for xs_img_meta in xs_img_metas

+ ]

+ img_metas['query_' + key] = xq_img_metas[key]

+ img_metas['bbox_id'] = idx

+

+ Xall['img_metas'] = DC(img_metas, cpu_only=True)

+

+ return Xall

+

+ def __len__(self):

+ """Get the size of the dataset."""

+ return len(self.paired_samples)

+

+ def __getitem__(self, idx):

+ """Get the sample given index."""

+

+ pair_ids = self.paired_samples[idx]

+ assert len(pair_ids) == self.num_shots + 1

+ sample_id_list = pair_ids[:self.num_shots]

+ query_id = pair_ids[-1]

+

+ sample_obj_list = []

+ for sample_id in sample_id_list:

+ sample_obj = copy.deepcopy(self.db[sample_id])

+ sample_obj['ann_info'] = copy.deepcopy(self.ann_info)

+ sample_obj_list.append(sample_obj)

+

+ query_obj = copy.deepcopy(self.db[query_id])

+ query_obj['ann_info'] = copy.deepcopy(self.ann_info)

+

+ if not self.test_mode:

+ # randomly select "one" keypoint

+ sample_valid = (sample_obj_list[0]['joints_3d_visible'][:, 0] > 0)

+ for sample_obj in sample_obj_list:

+ sample_valid = sample_valid & (

+ sample_obj['joints_3d_visible'][:, 0] > 0)

+ query_valid = (query_obj['joints_3d_visible'][:, 0] > 0)

+

+ valid_s = np.where(sample_valid)[0]

+ valid_q = np.where(query_valid)[0]

+ valid_sq = np.where(sample_valid & query_valid)[0]

+ if len(valid_sq) > 0:

+ kpt_id = np.random.choice(valid_sq)

+ elif len(valid_s) > 0:

+ kpt_id = np.random.choice(valid_s)

+ elif len(valid_q) > 0:

+ kpt_id = np.random.choice(valid_q)

+ else:

+ kpt_id = np.random.choice(np.array(range(len(query_valid))))

+

+ for i in range(self.num_shots):

+ sample_obj_list[i] = self._select_kpt(sample_obj_list[i],

+ kpt_id)

+ query_obj = self._select_kpt(query_obj, kpt_id)

+

+ # when test, all keypoints will be preserved.

+

+ Xs_list = []

+ for sample_obj in sample_obj_list:

+ Xs = self.pipeline(sample_obj)

+ Xs_list.append(Xs)

+ Xq = self.pipeline(query_obj)

+

+ Xall = self._merge_obj(Xs_list, Xq, idx)

+ Xall['skeleton'] = self.db[query_id]['skeleton']

+

+ return Xall

+

+ def _sort_and_unique_bboxes(self, kpts, key='bbox_id'):

+ """sort kpts and remove the repeated ones."""

+ kpts = sorted(kpts, key=lambda x: x[key])

+ num = len(kpts)

+ for i in range(num - 1, 0, -1):

+ if kpts[i][key] == kpts[i - 1][key]:

+ del kpts[i]

+

+ return kpts

diff --git a/projects/pose_anything/datasets/datasets/mp100/fewshot_dataset.py b/projects/pose_anything/datasets/datasets/mp100/fewshot_dataset.py

new file mode 100644

index 0000000000..bd8287966c

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/fewshot_dataset.py

@@ -0,0 +1,334 @@

+import os

+import random

+from collections import OrderedDict

+

+import numpy as np

+from xtcocotools.coco import COCO

+

+from mmpose.datasets import DATASETS

+from .fewshot_base_dataset import FewShotBaseDataset

+

+

+@DATASETS.register_module()

+class FewShotKeypointDataset(FewShotBaseDataset):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ valid_class_ids,

+ num_shots=1,

+ num_queries=100,

+ num_episodes=1,

+ test_mode=False):

+ super().__init__(

+ ann_file, img_prefix, data_cfg, pipeline, test_mode=test_mode)

+

+ self.ann_info['flip_pairs'] = []

+

+ self.ann_info['upper_body_ids'] = []

+ self.ann_info['lower_body_ids'] = []

+

+ self.ann_info['use_different_joint_weights'] = False

+ self.ann_info['joint_weights'] = np.array([

+ 1.,

+ ], dtype=np.float32).reshape((self.ann_info['num_joints'], 1))

+

+ self.coco = COCO(ann_file)

+

+ self.id2name, self.name2id = self._get_mapping_id_name(self.coco.imgs)

+ self.img_ids = self.coco.getImgIds()

+ self.classes = [

+ cat['name'] for cat in self.coco.loadCats(self.coco.getCatIds())

+ ]

+

+ self.num_classes = len(self.classes)

+ self._class_to_ind = dict(zip(self.classes, self.coco.getCatIds()))

+ self._ind_to_class = dict(zip(self.coco.getCatIds(), self.classes))

+

+ if valid_class_ids is not None:

+ self.valid_class_ids = valid_class_ids

+ else:

+ self.valid_class_ids = self.coco.getCatIds()

+ self.valid_classes = [

+ self._ind_to_class[ind] for ind in self.valid_class_ids

+ ]

+

+ self.cats = self.coco.cats

+

+ # Also update self.cat2obj

+ self.db = self._get_db()

+

+ self.num_shots = num_shots

+

+ if not test_mode:

+ # Update every training epoch

+ self.random_paired_samples()

+ else:

+ self.num_queries = num_queries

+ self.num_episodes = num_episodes

+ self.make_paired_samples()

+

+ def random_paired_samples(self):

+ num_datas = [

+ len(self.cat2obj[self._class_to_ind[cls]])

+ for cls in self.valid_classes

+ ]

+

+ # balance the dataset

+ max_num_data = max(num_datas)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for i in range(max_num_data):

+ shot = random.sample(self.cat2obj[cls], self.num_shots + 1)

+ all_samples.append(shot)

+

+ self.paired_samples = np.array(all_samples)

+ np.random.shuffle(self.paired_samples)

+

+ def make_paired_samples(self):

+ random.seed(1)

+ np.random.seed(0)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for _ in range(self.num_episodes):

+ shots = random.sample(self.cat2obj[cls],

+ self.num_shots + self.num_queries)

+ sample_ids = shots[:self.num_shots]

+ query_ids = shots[self.num_shots:]

+ for query_id in query_ids:

+ all_samples.append(sample_ids + [query_id])

+

+ self.paired_samples = np.array(all_samples)

+

+ def _select_kpt(self, obj, kpt_id):

+ obj['joints_3d'] = obj['joints_3d'][kpt_id:kpt_id + 1]

+ obj['joints_3d_visible'] = obj['joints_3d_visible'][kpt_id:kpt_id + 1]

+ obj['kpt_id'] = kpt_id

+

+ return obj

+

+ @staticmethod

+ def _get_mapping_id_name(imgs):

+ """

+ Args:

+ imgs (dict): dict of image info.

+

+ Returns:

+ tuple: Image name & id mapping dicts.

+

+ - id2name (dict): Mapping image id to name.

+ - name2id (dict): Mapping image name to id.

+ """

+ id2name = {}

+ name2id = {}

+ for image_id, image in imgs.items():

+ file_name = image['file_name']

+ id2name[image_id] = file_name

+ name2id[file_name] = image_id

+

+ return id2name, name2id

+

+ def _get_db(self):

+ """Ground truth bbox and keypoints."""

+ self.obj_id = 0

+

+ self.cat2obj = {}

+ for i in self.coco.getCatIds():

+ self.cat2obj.update({i: []})

+

+ gt_db = []

+ for img_id in self.img_ids:

+ gt_db.extend(self._load_coco_keypoint_annotation_kernel(img_id))

+ return gt_db

+

+ def _load_coco_keypoint_annotation_kernel(self, img_id):

+ """load annotation from COCOAPI.

+

+ Note:

+ bbox:[x1, y1, w, h]

+ Args:

+ img_id: coco image id

+ Returns:

+ dict: db entry

+ """

+ img_ann = self.coco.loadImgs(img_id)[0]

+ width = img_ann['width']

+ height = img_ann['height']

+

+ ann_ids = self.coco.getAnnIds(imgIds=img_id, iscrowd=False)

+ objs = self.coco.loadAnns(ann_ids)

+

+ # sanitize bboxes

+ valid_objs = []

+ for obj in objs:

+ if 'bbox' not in obj:

+ continue

+ x, y, w, h = obj['bbox']

+ x1 = max(0, x)

+ y1 = max(0, y)

+ x2 = min(width - 1, x1 + max(0, w - 1))

+ y2 = min(height - 1, y1 + max(0, h - 1))

+ if ('area' not in obj or obj['area'] > 0) and x2 > x1 and y2 > y1:

+ obj['clean_bbox'] = [x1, y1, x2 - x1, y2 - y1]

+ valid_objs.append(obj)

+ objs = valid_objs

+

+ bbox_id = 0

+ rec = []

+ for obj in objs:

+ if 'keypoints' not in obj:

+ continue

+ if max(obj['keypoints']) == 0:

+ continue

+ if 'num_keypoints' in obj and obj['num_keypoints'] == 0:

+ continue

+

+ category_id = obj['category_id']

+ # the number of keypoint for this specific category

+ cat_kpt_num = int(len(obj['keypoints']) / 3)

+

+ joints_3d = np.zeros((cat_kpt_num, 3), dtype=np.float32)

+ joints_3d_visible = np.zeros((cat_kpt_num, 3), dtype=np.float32)

+

+ keypoints = np.array(obj['keypoints']).reshape(-1, 3)

+ joints_3d[:, :2] = keypoints[:, :2]

+ joints_3d_visible[:, :2] = np.minimum(1, keypoints[:, 2:3])

+

+ center, scale = self._xywh2cs(*obj['clean_bbox'][:4])

+

+ image_file = os.path.join(self.img_prefix, self.id2name[img_id])

+

+ self.cat2obj[category_id].append(self.obj_id)

+

+ rec.append({

+ 'image_file':

+ image_file,

+ 'center':

+ center,

+ 'scale':

+ scale,

+ 'rotation':

+ 0,

+ 'bbox':

+ obj['clean_bbox'][:4],

+ 'bbox_score':

+ 1,

+ 'joints_3d':

+ joints_3d,

+ 'joints_3d_visible':

+ joints_3d_visible,

+ 'category_id':

+ category_id,

+ 'cat_kpt_num':

+ cat_kpt_num,

+ 'bbox_id':

+ self.obj_id,

+ 'skeleton':

+ self.coco.cats[obj['category_id']]['skeleton'],

+ })

+ bbox_id = bbox_id + 1

+ self.obj_id += 1

+

+ return rec

+

+ def _xywh2cs(self, x, y, w, h):

+ """This encodes bbox(x,y,w,w) into (center, scale)

+

+ Args:

+ x, y, w, h

+

+ Returns:

+ tuple: A tuple containing center and scale.

+

+ - center (np.ndarray[float32](2,)): center of the bbox (x, y).

+ - scale (np.ndarray[float32](2,)): scale of the bbox w & h.

+ """

+ aspect_ratio = self.ann_info['image_size'][0] / self.ann_info[

+ 'image_size'][1]

+ center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)

+ #

+ # if (not self.test_mode) and np.random.rand() < 0.3:

+ # center += 0.4 * (np.random.rand(2) - 0.5) * [w, h]

+

+ if w > aspect_ratio * h:

+ h = w * 1.0 / aspect_ratio

+ elif w < aspect_ratio * h:

+ w = h * aspect_ratio

+

+ # pixel std is 200.0

+ scale = np.array([w / 200.0, h / 200.0], dtype=np.float32)

+ # padding to include proper amount of context

+ scale = scale * 1.25

+

+ return center, scale

+

+ def evaluate(self, outputs, res_folder, metric='PCK', **kwargs):

+ """Evaluate interhand2d keypoint results. The pose prediction results

+ will be saved in `${res_folder}/result_keypoints.json`.

+

+ Note:

+ batch_size: N

+ num_keypoints: K

+ heatmap height: H

+ heatmap width: W

+

+ Args:

+ outputs (list(preds, boxes, image_path, output_heatmap))

+ :preds (np.ndarray[N,K,3]): The first two dimensions are

+ coordinates, score is the third dimension of the array.

+ :boxes (np.ndarray[N,6]): [center[0], center[1], scale[0]

+ , scale[1],area, score]

+ :image_paths (list[str]): For example, ['C', 'a', 'p', 't',

+ 'u', 'r', 'e', '1', '2', '/', '0', '3', '9', '0', '_',

+ 'd', 'h', '_', 't', 'o', 'u', 'c', 'h', 'R', 'O', 'M',

+ '/', 'c', 'a', 'm', '4', '1', '0', '2', '0', '9', '/',

+ 'i', 'm', 'a', 'g', 'e', '6', '2', '4', '3', '4', '.',

+ 'j', 'p', 'g']

+ :output_heatmap (np.ndarray[N, K, H, W]): model outputs.

+

+ res_folder (str): Path of directory to save the results.

+ metric (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'AUC', 'EPE'.

+

+ Returns:

+ dict: Evaluation results for evaluation metric.

+ """

+ metrics = metric if isinstance(metric, list) else [metric]

+ allowed_metrics = ['PCK', 'AUC', 'EPE']

+ for metric in metrics:

+ if metric not in allowed_metrics:

+ raise KeyError(f'metric {metric} is not supported')

+

+ res_file = os.path.join(res_folder, 'result_keypoints.json')

+

+ kpts = []

+ for output in outputs:

+ preds = output['preds']

+ boxes = output['boxes']

+ image_paths = output['image_paths']

+ bbox_ids = output['bbox_ids']

+

+ batch_size = len(image_paths)

+ for i in range(batch_size):

+ image_id = self.name2id[image_paths[i][len(self.img_prefix):]]

+

+ kpts.append({

+ 'keypoints': preds[i].tolist(),

+ 'center': boxes[i][0:2].tolist(),

+ 'scale': boxes[i][2:4].tolist(),

+ 'area': float(boxes[i][4]),

+ 'score': float(boxes[i][5]),

+ 'image_id': image_id,

+ 'bbox_id': bbox_ids[i]

+ })

+ kpts = self._sort_and_unique_bboxes(kpts)

+

+ self._write_keypoint_results(kpts, res_file)

+ info_str = self._report_metric(res_file, metrics)

+ name_value = OrderedDict(info_str)

+

+ return name_value

diff --git a/projects/pose_anything/datasets/datasets/mp100/test_base_dataset.py b/projects/pose_anything/datasets/datasets/mp100/test_base_dataset.py

new file mode 100644

index 0000000000..8fffc84239

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/test_base_dataset.py

@@ -0,0 +1,248 @@

+import copy

+from abc import ABCMeta, abstractmethod

+

+import json_tricks as json

+import numpy as np

+from mmcv.parallel import DataContainer as DC

+from torch.utils.data import Dataset

+

+from mmpose.core.evaluation.top_down_eval import (keypoint_auc, keypoint_epe,

+ keypoint_nme,

+ keypoint_pck_accuracy)

+from mmpose.datasets import DATASETS

+from mmpose.datasets.pipelines import Compose

+

+

+@DATASETS.register_module()

+class TestBaseDataset(Dataset, metaclass=ABCMeta):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ test_mode=True,

+ PCK_threshold_list=[0.05, 0.1, 0.15, 0.2, 0.25]):

+ self.image_info = {}

+ self.ann_info = {}

+

+ self.annotations_path = ann_file

+ if not img_prefix.endswith('/'):

+ img_prefix = img_prefix + '/'

+ self.img_prefix = img_prefix

+ self.pipeline = pipeline

+ self.test_mode = test_mode

+ self.PCK_threshold_list = PCK_threshold_list

+

+ self.ann_info['image_size'] = np.array(data_cfg['image_size'])

+ self.ann_info['heatmap_size'] = np.array(data_cfg['heatmap_size'])

+ self.ann_info['num_joints'] = data_cfg['num_joints']

+

+ self.ann_info['flip_pairs'] = None

+

+ self.ann_info['inference_channel'] = data_cfg['inference_channel']

+ self.ann_info['num_output_channels'] = data_cfg['num_output_channels']

+ self.ann_info['dataset_channel'] = data_cfg['dataset_channel']

+

+ self.db = []

+ self.num_shots = 1

+ self.paired_samples = []

+ self.pipeline = Compose(self.pipeline)

+

+ @abstractmethod

+ def _get_db(self):

+ """Load dataset."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def _select_kpt(self, obj, kpt_id):

+ """Select kpt."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def evaluate(self, cfg, preds, output_dir, *args, **kwargs):

+ """Evaluate keypoint results."""

+ raise NotImplementedError

+

+ @staticmethod

+ def _write_keypoint_results(keypoints, res_file):

+ """Write results into a json file."""

+

+ with open(res_file, 'w') as f:

+ json.dump(keypoints, f, sort_keys=True, indent=4)

+

+ def _report_metric(self, res_file, metrics):

+ """Keypoint evaluation.

+

+ Args:

+ res_file (str): Json file stored prediction results.

+ metrics (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'PCKh', 'AUC', 'EPE'.

+ pck_thr (float): PCK threshold, default as 0.2.

+ pckh_thr (float): PCKh threshold, default as 0.7.

+ auc_nor (float): AUC normalization factor, default as 30 pixel.

+

+ Returns:

+ List: Evaluation results for evaluation metric.

+ """

+ info_str = []

+

+ with open(res_file, 'r') as fin:

+ preds = json.load(fin)

+ assert len(preds) == len(self.paired_samples)

+

+ outputs = []

+ gts = []

+ masks = []

+ threshold_bbox = []

+ threshold_head_box = []

+

+ for pred, pair in zip(preds, self.paired_samples):

+ item = self.db[pair[-1]]

+ outputs.append(np.array(pred['keypoints'])[:, :-1])

+ gts.append(np.array(item['joints_3d'])[:, :-1])

+

+ mask_query = ((np.array(item['joints_3d_visible'])[:, 0]) > 0)

+ mask_sample = ((np.array(

+ self.db[pair[0]]['joints_3d_visible'])[:, 0]) > 0)

+ for id_s in pair[:-1]:

+ mask_sample = np.bitwise_and(

+ mask_sample,

+ ((np.array(self.db[id_s]['joints_3d_visible'])[:, 0]) > 0))

+ masks.append(np.bitwise_and(mask_query, mask_sample))

+

+ if 'PCK' in metrics or 'NME' in metrics or 'AUC' in metrics:

+ bbox = np.array(item['bbox'])

+ bbox_thr = np.max(bbox[2:])

+ threshold_bbox.append(np.array([bbox_thr, bbox_thr]))

+ if 'PCKh' in metrics:

+ head_box_thr = item['head_size']

+ threshold_head_box.append(

+ np.array([head_box_thr, head_box_thr]))

+

+ if 'PCK' in metrics:

+ pck_results = dict()

+ for pck_thr in self.PCK_threshold_list:

+ pck_results[pck_thr] = []

+

+ for (output, gt, mask, thr_bbox) in zip(outputs, gts, masks,

+ threshold_bbox):

+ for pck_thr in self.PCK_threshold_list:

+ _, pck, _ = keypoint_pck_accuracy(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0), pck_thr,

+ np.expand_dims(thr_bbox, 0))

+ pck_results[pck_thr].append(pck)

+

+ mPCK = 0

+ for pck_thr in self.PCK_threshold_list:

+ info_str.append(

+ ['PCK@' + str(pck_thr),

+ np.mean(pck_results[pck_thr])])

+ mPCK += np.mean(pck_results[pck_thr])

+ info_str.append(['mPCK', mPCK / len(self.PCK_threshold_list)])

+

+ if 'NME' in metrics:

+ nme_results = []

+ for (output, gt, mask, thr_bbox) in zip(outputs, gts, masks,

+ threshold_bbox):

+ nme = keypoint_nme(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0), np.expand_dims(thr_bbox, 0))

+ nme_results.append(nme)

+ info_str.append(['NME', np.mean(nme_results)])

+

+ if 'AUC' in metrics:

+ auc_results = []

+ for (output, gt, mask, thr_bbox) in zip(outputs, gts, masks,

+ threshold_bbox):

+ auc = keypoint_auc(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0), thr_bbox[0])

+ auc_results.append(auc)

+ info_str.append(['AUC', np.mean(auc_results)])

+

+ if 'EPE' in metrics:

+ epe_results = []

+ for (output, gt, mask) in zip(outputs, gts, masks):

+ epe = keypoint_epe(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0))

+ epe_results.append(epe)

+ info_str.append(['EPE', np.mean(epe_results)])

+ return info_str

+

+ def _merge_obj(self, Xs_list, Xq, idx):

+ """merge Xs_list and Xq.

+

+ :param Xs_list: N-shot samples X

+ :param Xq: query X

+ :param idx: id of paired_samples

+ :return: Xall

+ """

+ Xall = dict()

+ Xall['img_s'] = [Xs['img'] for Xs in Xs_list]

+ Xall['target_s'] = [Xs['target'] for Xs in Xs_list]

+ Xall['target_weight_s'] = [Xs['target_weight'] for Xs in Xs_list]

+ xs_img_metas = [Xs['img_metas'].data for Xs in Xs_list]

+

+ Xall['img_q'] = Xq['img']

+ Xall['target_q'] = Xq['target']

+ Xall['target_weight_q'] = Xq['target_weight']

+ xq_img_metas = Xq['img_metas'].data

+

+ img_metas = dict()

+ for key in xq_img_metas.keys():

+ img_metas['sample_' + key] = [

+ xs_img_meta[key] for xs_img_meta in xs_img_metas

+ ]

+ img_metas['query_' + key] = xq_img_metas[key]

+ img_metas['bbox_id'] = idx

+

+ Xall['img_metas'] = DC(img_metas, cpu_only=True)

+

+ return Xall

+

+ def __len__(self):

+ """Get the size of the dataset."""

+ return len(self.paired_samples)

+

+ def __getitem__(self, idx):

+ """Get the sample given index."""

+

+ pair_ids = self.paired_samples[idx] # [supported id * shots, query id]

+ assert len(pair_ids) == self.num_shots + 1

+ sample_id_list = pair_ids[:self.num_shots]

+ query_id = pair_ids[-1]

+

+ sample_obj_list = []

+ for sample_id in sample_id_list:

+ sample_obj = copy.deepcopy(self.db[sample_id])

+ sample_obj['ann_info'] = copy.deepcopy(self.ann_info)

+ sample_obj_list.append(sample_obj)

+

+ query_obj = copy.deepcopy(self.db[query_id])

+ query_obj['ann_info'] = copy.deepcopy(self.ann_info)

+

+ Xs_list = []

+ for sample_obj in sample_obj_list:

+ Xs = self.pipeline(

+ sample_obj

+ ) # dict with ['img', 'target', 'target_weight', 'img_metas'],

+ Xs_list.append(Xs) # Xs['target'] is of shape [100, map_h, map_w]

+ Xq = self.pipeline(query_obj)

+

+ Xall = self._merge_obj(Xs_list, Xq, idx)

+ Xall['skeleton'] = self.db[query_id]['skeleton']

+

+ return Xall

+

+ def _sort_and_unique_bboxes(self, kpts, key='bbox_id'):

+ """sort kpts and remove the repeated ones."""

+ kpts = sorted(kpts, key=lambda x: x[key])

+ num = len(kpts)

+ for i in range(num - 1, 0, -1):

+ if kpts[i][key] == kpts[i - 1][key]:

+ del kpts[i]

+

+ return kpts

diff --git a/projects/pose_anything/datasets/datasets/mp100/test_dataset.py b/projects/pose_anything/datasets/datasets/mp100/test_dataset.py

new file mode 100644

index 0000000000..ca0dc7ac6a

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/test_dataset.py

@@ -0,0 +1,347 @@

+import os

+import random

+from collections import OrderedDict

+

+import numpy as np

+from xtcocotools.coco import COCO

+

+from mmpose.datasets import DATASETS

+from .test_base_dataset import TestBaseDataset

+

+

+@DATASETS.register_module()

+class TestPoseDataset(TestBaseDataset):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ valid_class_ids,

+ max_kpt_num=None,

+ num_shots=1,

+ num_queries=100,

+ num_episodes=1,

+ pck_threshold_list=[0.05, 0.1, 0.15, 0.20, 0.25],

+ test_mode=True):

+ super().__init__(

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ test_mode=test_mode,

+ PCK_threshold_list=pck_threshold_list)

+

+ self.ann_info['flip_pairs'] = []

+

+ self.ann_info['upper_body_ids'] = []

+ self.ann_info['lower_body_ids'] = []

+

+ self.ann_info['use_different_joint_weights'] = False

+ self.ann_info['joint_weights'] = np.array([

+ 1.,

+ ], dtype=np.float32).reshape((self.ann_info['num_joints'], 1))

+

+ self.coco = COCO(ann_file)

+

+ self.id2name, self.name2id = self._get_mapping_id_name(self.coco.imgs)

+ self.img_ids = self.coco.getImgIds()

+ self.classes = [

+ cat['name'] for cat in self.coco.loadCats(self.coco.getCatIds())

+ ]

+

+ self.num_classes = len(self.classes)

+ self._class_to_ind = dict(zip(self.classes, self.coco.getCatIds()))

+ self._ind_to_class = dict(zip(self.coco.getCatIds(), self.classes))

+

+ if valid_class_ids is not None: # None by default

+ self.valid_class_ids = valid_class_ids

+ else:

+ self.valid_class_ids = self.coco.getCatIds()

+ self.valid_classes = [

+ self._ind_to_class[ind] for ind in self.valid_class_ids

+ ]

+

+ self.cats = self.coco.cats

+ self.max_kpt_num = max_kpt_num

+

+ # Also update self.cat2obj

+ self.db = self._get_db()

+

+ self.num_shots = num_shots

+

+ if not test_mode:

+ # Update every training epoch

+ self.random_paired_samples()

+ else:

+ self.num_queries = num_queries

+ self.num_episodes = num_episodes

+ self.make_paired_samples()

+

+ def random_paired_samples(self):

+ num_datas = [

+ len(self.cat2obj[self._class_to_ind[cls]])

+ for cls in self.valid_classes

+ ]

+

+ # balance the dataset

+ max_num_data = max(num_datas)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for i in range(max_num_data):

+ shot = random.sample(self.cat2obj[cls], self.num_shots + 1)

+ all_samples.append(shot)

+

+ self.paired_samples = np.array(all_samples)

+ np.random.shuffle(self.paired_samples)

+

+ def make_paired_samples(self):

+ random.seed(1)

+ np.random.seed(0)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for _ in range(self.num_episodes):

+ shots = random.sample(self.cat2obj[cls],

+ self.num_shots + self.num_queries)

+ sample_ids = shots[:self.num_shots]

+ query_ids = shots[self.num_shots:]

+ for query_id in query_ids:

+ all_samples.append(sample_ids + [query_id])

+

+ self.paired_samples = np.array(all_samples)

+

+ def _select_kpt(self, obj, kpt_id):

+ obj['joints_3d'] = obj['joints_3d'][kpt_id:kpt_id + 1]

+ obj['joints_3d_visible'] = obj['joints_3d_visible'][kpt_id:kpt_id + 1]

+ obj['kpt_id'] = kpt_id

+

+ return obj

+

+ @staticmethod

+ def _get_mapping_id_name(imgs):

+ """

+ Args:

+ imgs (dict): dict of image info.

+

+ Returns:

+ tuple: Image name & id mapping dicts.

+

+ - id2name (dict): Mapping image id to name.

+ - name2id (dict): Mapping image name to id.

+ """

+ id2name = {}

+ name2id = {}

+ for image_id, image in imgs.items():

+ file_name = image['file_name']

+ id2name[image_id] = file_name

+ name2id[file_name] = image_id

+

+ return id2name, name2id

+

+ def _get_db(self):

+ """Ground truth bbox and keypoints."""

+ self.obj_id = 0

+

+ self.cat2obj = {}

+ for i in self.coco.getCatIds():

+ self.cat2obj.update({i: []})

+

+ gt_db = []

+ for img_id in self.img_ids:

+ gt_db.extend(self._load_coco_keypoint_annotation_kernel(img_id))

+ return gt_db

+

+ def _load_coco_keypoint_annotation_kernel(self, img_id):

+ """load annotation from COCOAPI.

+

+ Note:

+ bbox:[x1, y1, w, h]

+ Args:

+ img_id: coco image id

+ Returns:

+ dict: db entry

+ """

+ img_ann = self.coco.loadImgs(img_id)[0]

+ width = img_ann['width']

+ height = img_ann['height']

+

+ ann_ids = self.coco.getAnnIds(imgIds=img_id, iscrowd=False)

+ objs = self.coco.loadAnns(ann_ids)

+

+ # sanitize bboxes

+ valid_objs = []

+ for obj in objs:

+ if 'bbox' not in obj:

+ continue

+ x, y, w, h = obj['bbox']

+ x1 = max(0, x)

+ y1 = max(0, y)

+ x2 = min(width - 1, x1 + max(0, w - 1))

+ y2 = min(height - 1, y1 + max(0, h - 1))

+ if ('area' not in obj or obj['area'] > 0) and x2 > x1 and y2 > y1:

+ obj['clean_bbox'] = [x1, y1, x2 - x1, y2 - y1]

+ valid_objs.append(obj)

+ objs = valid_objs

+

+ bbox_id = 0

+ rec = []

+ for obj in objs:

+ if 'keypoints' not in obj:

+ continue

+ if max(obj['keypoints']) == 0:

+ continue

+ if 'num_keypoints' in obj and obj['num_keypoints'] == 0:

+ continue

+

+ category_id = obj['category_id']

+ # the number of keypoint for this specific category

+ cat_kpt_num = int(len(obj['keypoints']) / 3)

+ if self.max_kpt_num is None:

+ kpt_num = cat_kpt_num

+ else:

+ kpt_num = self.max_kpt_num

+

+ joints_3d = np.zeros((kpt_num, 3), dtype=np.float32)

+ joints_3d_visible = np.zeros((kpt_num, 3), dtype=np.float32)

+

+ keypoints = np.array(obj['keypoints']).reshape(-1, 3)

+ joints_3d[:cat_kpt_num, :2] = keypoints[:, :2]

+ joints_3d_visible[:cat_kpt_num, :2] = np.minimum(

+ 1, keypoints[:, 2:3])

+

+ center, scale = self._xywh2cs(*obj['clean_bbox'][:4])

+

+ image_file = os.path.join(self.img_prefix, self.id2name[img_id])

+

+ self.cat2obj[category_id].append(self.obj_id)

+

+ rec.append({

+ 'image_file':

+ image_file,

+ 'center':

+ center,

+ 'scale':

+ scale,

+ 'rotation':

+ 0,

+ 'bbox':

+ obj['clean_bbox'][:4],

+ 'bbox_score':

+ 1,

+ 'joints_3d':

+ joints_3d,

+ 'joints_3d_visible':

+ joints_3d_visible,

+ 'category_id':

+ category_id,

+ 'cat_kpt_num':

+ cat_kpt_num,

+ 'bbox_id':

+ self.obj_id,

+ 'skeleton':

+ self.coco.cats[obj['category_id']]['skeleton'],

+ })

+ bbox_id = bbox_id + 1

+ self.obj_id += 1

+

+ return rec

+

+ def _xywh2cs(self, x, y, w, h):

+ """This encodes bbox(x,y,w,w) into (center, scale)

+

+ Args:

+ x, y, w, h

+

+ Returns:

+ tuple: A tuple containing center and scale.

+

+ - center (np.ndarray[float32](2,)): center of the bbox (x, y).

+ - scale (np.ndarray[float32](2,)): scale of the bbox w & h.

+ """

+ aspect_ratio = self.ann_info['image_size'][0] / self.ann_info[

+ 'image_size'][1]

+ center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)

+ #

+ # if (not self.test_mode) and np.random.rand() < 0.3:

+ # center += 0.4 * (np.random.rand(2) - 0.5) * [w, h]

+

+ if w > aspect_ratio * h:

+ h = w * 1.0 / aspect_ratio

+ elif w < aspect_ratio * h:

+ w = h * aspect_ratio

+

+ # pixel std is 200.0

+ scale = np.array([w / 200.0, h / 200.0], dtype=np.float32)

+ # padding to include proper amount of context

+ scale = scale * 1.25

+

+ return center, scale

+

+ def evaluate(self, outputs, res_folder, metric='PCK', **kwargs):

+ """Evaluate interhand2d keypoint results. The pose prediction results

+ will be saved in `${res_folder}/result_keypoints.json`.

+

+ Note:

+ batch_size: N

+ num_keypoints: K

+ heatmap height: H

+ heatmap width: W

+

+ Args:

+ outputs (list(preds, boxes, image_path, output_heatmap))

+ :preds (np.ndarray[N,K,3]): The first two dimensions are

+ coordinates, score is the third dimension of the array.

+ :boxes (np.ndarray[N,6]): [center[0], center[1], scale[0]

+ , scale[1],area, score]

+ :image_paths (list[str]): For example, ['C', 'a', 'p', 't',

+ 'u', 'r', 'e', '1', '2', '/', '0', '3', '9', '0', '_',

+ 'd', 'h', '_', 't', 'o', 'u', 'c', 'h', 'R', 'O', 'M',

+ '/', 'c', 'a', 'm', '4', '1', '0', '2', '0', '9', '/',

+ 'i', 'm', 'a', 'g', 'e', '6', '2', '4', '3', '4', '.',

+ 'j', 'p', 'g']

+ :output_heatmap (np.ndarray[N, K, H, W]): model outputs.

+

+ res_folder (str): Path of directory to save the results.

+ metric (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'AUC', 'EPE'.

+

+ Returns:

+ dict: Evaluation results for evaluation metric.

+ """

+ metrics = metric if isinstance(metric, list) else [metric]

+ allowed_metrics = ['PCK', 'AUC', 'EPE', 'NME']

+ for metric in metrics:

+ if metric not in allowed_metrics:

+ raise KeyError(f'metric {metric} is not supported')

+

+ res_file = os.path.join(res_folder, 'result_keypoints.json')

+

+ kpts = []

+ for output in outputs:

+ preds = output['preds']

+ boxes = output['boxes']

+ image_paths = output['image_paths']

+ bbox_ids = output['bbox_ids']

+

+ batch_size = len(image_paths)

+ for i in range(batch_size):

+ image_id = self.name2id[image_paths[i][len(self.img_prefix):]]

+

+ kpts.append({

+ 'keypoints': preds[i].tolist(),

+ 'center': boxes[i][0:2].tolist(),

+ 'scale': boxes[i][2:4].tolist(),

+ 'area': float(boxes[i][4]),

+ 'score': float(boxes[i][5]),

+ 'image_id': image_id,

+ 'bbox_id': bbox_ids[i]

+ })

+ kpts = self._sort_and_unique_bboxes(kpts)

+

+ self._write_keypoint_results(kpts, res_file)

+ info_str = self._report_metric(res_file, metrics)

+ name_value = OrderedDict(info_str)

+

+ return name_value

diff --git a/projects/pose_anything/datasets/datasets/mp100/transformer_base_dataset.py b/projects/pose_anything/datasets/datasets/mp100/transformer_base_dataset.py

new file mode 100644

index 0000000000..9433596a0e

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/transformer_base_dataset.py

@@ -0,0 +1,209 @@

+import copy

+from abc import ABCMeta, abstractmethod

+

+import json_tricks as json

+import numpy as np

+from mmcv.parallel import DataContainer as DC

+from mmengine.dataset import Compose

+from torch.utils.data import Dataset

+

+from mmpose.core.evaluation.top_down_eval import keypoint_pck_accuracy

+from mmpose.datasets import DATASETS

+

+

+@DATASETS.register_module()

+class TransformerBaseDataset(Dataset, metaclass=ABCMeta):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ test_mode=False):

+ self.image_info = {}

+ self.ann_info = {}

+

+ self.annotations_path = ann_file

+ if not img_prefix.endswith('/'):

+ img_prefix = img_prefix + '/'

+ self.img_prefix = img_prefix

+ self.pipeline = pipeline

+ self.test_mode = test_mode

+

+ self.ann_info['image_size'] = np.array(data_cfg['image_size'])

+ self.ann_info['heatmap_size'] = np.array(data_cfg['heatmap_size'])

+ self.ann_info['num_joints'] = data_cfg['num_joints']

+

+ self.ann_info['flip_pairs'] = None

+

+ self.ann_info['inference_channel'] = data_cfg['inference_channel']

+ self.ann_info['num_output_channels'] = data_cfg['num_output_channels']

+ self.ann_info['dataset_channel'] = data_cfg['dataset_channel']

+

+ self.db = []

+ self.num_shots = 1

+ self.paired_samples = []

+ self.pipeline = Compose(self.pipeline)

+

+ @abstractmethod

+ def _get_db(self):

+ """Load dataset."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def _select_kpt(self, obj, kpt_id):

+ """Select kpt."""

+ raise NotImplementedError

+

+ @abstractmethod

+ def evaluate(self, cfg, preds, output_dir, *args, **kwargs):

+ """Evaluate keypoint results."""

+ raise NotImplementedError

+

+ @staticmethod

+ def _write_keypoint_results(keypoints, res_file):

+ """Write results into a json file."""

+

+ with open(res_file, 'w') as f:

+ json.dump(keypoints, f, sort_keys=True, indent=4)

+

+ def _report_metric(self,

+ res_file,

+ metrics,

+ pck_thr=0.2,

+ pckh_thr=0.7,

+ auc_nor=30):

+ """Keypoint evaluation.

+

+ Args:

+ res_file (str): Json file stored prediction results.

+ metrics (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'PCKh', 'AUC', 'EPE'.

+ pck_thr (float): PCK threshold, default as 0.2.

+ pckh_thr (float): PCKh threshold, default as 0.7.

+ auc_nor (float): AUC normalization factor, default as 30 pixel.

+

+ Returns:

+ List: Evaluation results for evaluation metric.

+ """

+ info_str = []

+

+ with open(res_file, 'r') as fin:

+ preds = json.load(fin)

+ assert len(preds) == len(self.paired_samples)

+

+ outputs = []

+ gts = []

+ masks = []

+ threshold_bbox = []

+ threshold_head_box = []

+

+ for pred, pair in zip(preds, self.paired_samples):

+ item = self.db[pair[-1]]

+ outputs.append(np.array(pred['keypoints'])[:, :-1])

+ gts.append(np.array(item['joints_3d'])[:, :-1])

+

+ mask_query = ((np.array(item['joints_3d_visible'])[:, 0]) > 0)

+ mask_sample = ((np.array(

+ self.db[pair[0]]['joints_3d_visible'])[:, 0]) > 0)

+ for id_s in pair[:-1]:

+ mask_sample = np.bitwise_and(

+ mask_sample,

+ ((np.array(self.db[id_s]['joints_3d_visible'])[:, 0]) > 0))

+ masks.append(np.bitwise_and(mask_query, mask_sample))

+

+ if 'PCK' in metrics:

+ bbox = np.array(item['bbox'])

+ bbox_thr = np.max(bbox[2:])

+ threshold_bbox.append(np.array([bbox_thr, bbox_thr]))

+ if 'PCKh' in metrics:

+ head_box_thr = item['head_size']

+ threshold_head_box.append(

+ np.array([head_box_thr, head_box_thr]))

+

+ if 'PCK' in metrics:

+ pck_avg = []

+ for (output, gt, mask, thr_bbox) in zip(outputs, gts, masks,

+ threshold_bbox):

+ _, pck, _ = keypoint_pck_accuracy(

+ np.expand_dims(output, 0), np.expand_dims(gt, 0),

+ np.expand_dims(mask, 0), pck_thr,

+ np.expand_dims(thr_bbox, 0))

+ pck_avg.append(pck)

+ info_str.append(('PCK', np.mean(pck_avg)))

+

+ return info_str

+

+ def _merge_obj(self, Xs_list, Xq, idx):

+ """merge Xs_list and Xq.

+

+ :param Xs_list: N-shot samples X

+ :param Xq: query X

+ :param idx: id of paired_samples

+ :return: Xall

+ """

+ Xall = dict()

+ Xall['img_s'] = [Xs['img'] for Xs in Xs_list]

+ Xall['target_s'] = [Xs['target'] for Xs in Xs_list]

+ Xall['target_weight_s'] = [Xs['target_weight'] for Xs in Xs_list]

+ xs_img_metas = [Xs['img_metas'].data for Xs in Xs_list]

+

+ Xall['img_q'] = Xq['img']

+ Xall['target_q'] = Xq['target']

+ Xall['target_weight_q'] = Xq['target_weight']

+ xq_img_metas = Xq['img_metas'].data

+

+ img_metas = dict()

+ for key in xq_img_metas.keys():

+ img_metas['sample_' + key] = [

+ xs_img_meta[key] for xs_img_meta in xs_img_metas

+ ]

+ img_metas['query_' + key] = xq_img_metas[key]

+ img_metas['bbox_id'] = idx

+

+ Xall['img_metas'] = DC(img_metas, cpu_only=True)

+

+ return Xall

+

+ def __len__(self):

+ """Get the size of the dataset."""

+ return len(self.paired_samples)

+

+ def __getitem__(self, idx):

+ """Get the sample given index."""

+

+ pair_ids = self.paired_samples[idx] # [supported id * shots, query id]

+ assert len(pair_ids) == self.num_shots + 1

+ sample_id_list = pair_ids[:self.num_shots]

+ query_id = pair_ids[-1]

+

+ sample_obj_list = []

+ for sample_id in sample_id_list:

+ sample_obj = copy.deepcopy(self.db[sample_id])

+ sample_obj['ann_info'] = copy.deepcopy(self.ann_info)

+ sample_obj_list.append(sample_obj)

+

+ query_obj = copy.deepcopy(self.db[query_id])

+ query_obj['ann_info'] = copy.deepcopy(self.ann_info)

+

+ Xs_list = []

+ for sample_obj in sample_obj_list:

+ Xs = self.pipeline(

+ sample_obj

+ ) # dict with ['img', 'target', 'target_weight', 'img_metas'],

+ Xs_list.append(Xs) # Xs['target'] is of shape [100, map_h, map_w]

+ Xq = self.pipeline(query_obj)

+

+ Xall = self._merge_obj(Xs_list, Xq, idx)

+ Xall['skeleton'] = self.db[query_id]['skeleton']

+ return Xall

+

+ def _sort_and_unique_bboxes(self, kpts, key='bbox_id'):

+ """sort kpts and remove the repeated ones."""

+ kpts = sorted(kpts, key=lambda x: x[key])

+ num = len(kpts)

+ for i in range(num - 1, 0, -1):

+ if kpts[i][key] == kpts[i - 1][key]:

+ del kpts[i]

+

+ return kpts

diff --git a/projects/pose_anything/datasets/datasets/mp100/transformer_dataset.py b/projects/pose_anything/datasets/datasets/mp100/transformer_dataset.py

new file mode 100644

index 0000000000..3244f2a0d7

--- /dev/null

+++ b/projects/pose_anything/datasets/datasets/mp100/transformer_dataset.py

@@ -0,0 +1,342 @@

+import os

+import random

+from collections import OrderedDict

+

+import numpy as np

+from xtcocotools.coco import COCO

+

+from mmpose.datasets import DATASETS

+from .transformer_base_dataset import TransformerBaseDataset

+

+

+@DATASETS.register_module()

+class TransformerPoseDataset(TransformerBaseDataset):

+

+ def __init__(self,

+ ann_file,

+ img_prefix,

+ data_cfg,

+ pipeline,

+ valid_class_ids,

+ max_kpt_num=None,

+ num_shots=1,

+ num_queries=100,

+ num_episodes=1,

+ test_mode=False):

+ super().__init__(

+ ann_file, img_prefix, data_cfg, pipeline, test_mode=test_mode)

+

+ self.ann_info['flip_pairs'] = []

+

+ self.ann_info['upper_body_ids'] = []

+ self.ann_info['lower_body_ids'] = []

+

+ self.ann_info['use_different_joint_weights'] = False

+ self.ann_info['joint_weights'] = np.array([

+ 1.,

+ ], dtype=np.float32).reshape((self.ann_info['num_joints'], 1))

+

+ self.coco = COCO(ann_file)

+

+ self.id2name, self.name2id = self._get_mapping_id_name(self.coco.imgs)

+ self.img_ids = self.coco.getImgIds()

+ self.classes = [

+ cat['name'] for cat in self.coco.loadCats(self.coco.getCatIds())

+ ]

+

+ self.num_classes = len(self.classes)

+ self._class_to_ind = dict(zip(self.classes, self.coco.getCatIds()))

+ self._ind_to_class = dict(zip(self.coco.getCatIds(), self.classes))

+

+ if valid_class_ids is not None: # None by default

+ self.valid_class_ids = valid_class_ids

+ else:

+ self.valid_class_ids = self.coco.getCatIds()

+ self.valid_classes = [

+ self._ind_to_class[ind] for ind in self.valid_class_ids

+ ]

+

+ self.cats = self.coco.cats

+ self.max_kpt_num = max_kpt_num

+

+ # Also update self.cat2obj

+ self.db = self._get_db()

+

+ self.num_shots = num_shots

+

+ if not test_mode:

+ # Update every training epoch

+ self.random_paired_samples()

+ else:

+ self.num_queries = num_queries

+ self.num_episodes = num_episodes

+ self.make_paired_samples()

+

+ def random_paired_samples(self):

+ num_datas = [

+ len(self.cat2obj[self._class_to_ind[cls]])

+ for cls in self.valid_classes

+ ]

+

+ # balance the dataset

+ max_num_data = max(num_datas)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for i in range(max_num_data):

+ shot = random.sample(self.cat2obj[cls], self.num_shots + 1)

+ all_samples.append(shot)

+

+ self.paired_samples = np.array(all_samples)

+ np.random.shuffle(self.paired_samples)

+

+ def make_paired_samples(self):

+ random.seed(1)

+ np.random.seed(0)

+

+ all_samples = []

+ for cls in self.valid_class_ids:

+ for _ in range(self.num_episodes):

+ shots = random.sample(self.cat2obj[cls],

+ self.num_shots + self.num_queries)

+ sample_ids = shots[:self.num_shots]

+ query_ids = shots[self.num_shots:]

+ for query_id in query_ids:

+ all_samples.append(sample_ids + [query_id])

+

+ self.paired_samples = np.array(all_samples)

+

+ def _select_kpt(self, obj, kpt_id):

+ obj['joints_3d'] = obj['joints_3d'][kpt_id:kpt_id + 1]

+ obj['joints_3d_visible'] = obj['joints_3d_visible'][kpt_id:kpt_id + 1]

+ obj['kpt_id'] = kpt_id

+

+ return obj

+

+ @staticmethod

+ def _get_mapping_id_name(imgs):

+ """

+ Args:

+ imgs (dict): dict of image info.

+

+ Returns:

+ tuple: Image name & id mapping dicts.

+

+ - id2name (dict): Mapping image id to name.

+ - name2id (dict): Mapping image name to id.

+ """

+ id2name = {}

+ name2id = {}

+ for image_id, image in imgs.items():

+ file_name = image['file_name']

+ id2name[image_id] = file_name

+ name2id[file_name] = image_id

+

+ return id2name, name2id

+

+ def _get_db(self):

+ """Ground truth bbox and keypoints."""

+ self.obj_id = 0

+

+ self.cat2obj = {}

+ for i in self.coco.getCatIds():

+ self.cat2obj.update({i: []})

+

+ gt_db = []

+ for img_id in self.img_ids:

+ gt_db.extend(self._load_coco_keypoint_annotation_kernel(img_id))

+

+ return gt_db

+

+ def _load_coco_keypoint_annotation_kernel(self, img_id):

+ """load annotation from COCOAPI.

+

+ Note:

+ bbox:[x1, y1, w, h]

+ Args:

+ img_id: coco image id

+ Returns:

+ dict: db entry

+ """

+ img_ann = self.coco.loadImgs(img_id)[0]

+ width = img_ann['width']

+ height = img_ann['height']

+

+ ann_ids = self.coco.getAnnIds(imgIds=img_id, iscrowd=False)

+ objs = self.coco.loadAnns(ann_ids)

+

+ # sanitize bboxes

+ valid_objs = []

+ for obj in objs:

+ if 'bbox' not in obj:

+ continue

+ x, y, w, h = obj['bbox']

+ x1 = max(0, x)

+ y1 = max(0, y)

+ x2 = min(width - 1, x1 + max(0, w - 1))

+ y2 = min(height - 1, y1 + max(0, h - 1))

+ if ('area' not in obj or obj['area'] > 0) and x2 > x1 and y2 > y1:

+ obj['clean_bbox'] = [x1, y1, x2 - x1, y2 - y1]

+ valid_objs.append(obj)

+ objs = valid_objs

+

+ bbox_id = 0

+ rec = []

+ for obj in objs:

+ if 'keypoints' not in obj:

+ continue

+ if max(obj['keypoints']) == 0:

+ continue

+ if 'num_keypoints' in obj and obj['num_keypoints'] == 0:

+ continue

+

+ category_id = obj['category_id']

+ # the number of keypoint for this specific category

+ cat_kpt_num = int(len(obj['keypoints']) / 3)

+ if self.max_kpt_num is None:

+ kpt_num = cat_kpt_num

+ else:

+ kpt_num = self.max_kpt_num

+

+ joints_3d = np.zeros((kpt_num, 3), dtype=np.float32)

+ joints_3d_visible = np.zeros((kpt_num, 3), dtype=np.float32)

+

+ keypoints = np.array(obj['keypoints']).reshape(-1, 3)

+ joints_3d[:cat_kpt_num, :2] = keypoints[:, :2]

+ joints_3d_visible[:cat_kpt_num, :2] = np.minimum(

+ 1, keypoints[:, 2:3])

+

+ center, scale = self._xywh2cs(*obj['clean_bbox'][:4])

+

+ image_file = os.path.join(self.img_prefix, self.id2name[img_id])

+ if os.path.exists(image_file):

+ self.cat2obj[category_id].append(self.obj_id)

+

+ rec.append({

+ 'image_file':

+ image_file,

+ 'center':

+ center,

+ 'scale':

+ scale,

+ 'rotation':

+ 0,

+ 'bbox':

+ obj['clean_bbox'][:4],

+ 'bbox_score':

+ 1,

+ 'joints_3d':

+ joints_3d,

+ 'joints_3d_visible':

+ joints_3d_visible,

+ 'category_id':

+ category_id,

+ 'cat_kpt_num':

+ cat_kpt_num,

+ 'bbox_id':

+ self.obj_id,

+ 'skeleton':

+ self.coco.cats[obj['category_id']]['skeleton'],

+ })

+ bbox_id = bbox_id + 1

+ self.obj_id += 1

+

+ return rec

+

+ def _xywh2cs(self, x, y, w, h):

+ """This encodes bbox(x,y,w,w) into (center, scale)

+

+ Args:

+ x, y, w, h

+

+ Returns:

+ tuple: A tuple containing center and scale.

+

+ - center (np.ndarray[float32](2,)): center of the bbox (x, y).

+ - scale (np.ndarray[float32](2,)): scale of the bbox w & h.

+ """

+ aspect_ratio = self.ann_info['image_size'][0] / self.ann_info[

+ 'image_size'][1]

+ center = np.array([x + w * 0.5, y + h * 0.5], dtype=np.float32)

+ #

+ # if (not self.test_mode) and np.random.rand() < 0.3:

+ # center += 0.4 * (np.random.rand(2) - 0.5) * [w, h]

+

+ if w > aspect_ratio * h:

+ h = w * 1.0 / aspect_ratio

+ elif w < aspect_ratio * h:

+ w = h * aspect_ratio

+

+ # pixel std is 200.0

+ scale = np.array([w / 200.0, h / 200.0], dtype=np.float32)

+ # padding to include proper amount of context

+ scale = scale * 1.25

+

+ return center, scale

+

+ def evaluate(self, outputs, res_folder, metric='PCK', **kwargs):

+ """Evaluate interhand2d keypoint results. The pose prediction results

+ will be saved in `${res_folder}/result_keypoints.json`.

+

+ Note:

+ batch_size: N

+ num_keypoints: K

+ heatmap height: H

+ heatmap width: W

+

+ Args:

+ outputs (list(preds, boxes, image_path, output_heatmap))

+ :preds (np.ndarray[N,K,3]): The first two dimensions are

+ coordinates, score is the third dimension of the array.

+ :boxes (np.ndarray[N,6]): [center[0], center[1], scale[0]

+ , scale[1],area, score]

+ :image_paths (list[str]): For example, ['C', 'a', 'p', 't',

+ 'u', 'r', 'e', '1', '2', '/', '0', '3', '9', '0', '_',

+ 'd', 'h', '_', 't', 'o', 'u', 'c', 'h', 'R', 'O', 'M',

+ '/', 'c', 'a', 'm', '4', '1', '0', '2', '0', '9', '/',

+ 'i', 'm', 'a', 'g', 'e', '6', '2', '4', '3', '4', '.',

+ 'j', 'p', 'g']

+ :output_heatmap (np.ndarray[N, K, H, W]): model outputs.

+

+ res_folder (str): Path of directory to save the results.

+ metric (str | list[str]): Metric to be performed.

+ Options: 'PCK', 'AUC', 'EPE'.

+

+ Returns:

+ dict: Evaluation results for evaluation metric.

+ """

+ metrics = metric if isinstance(metric, list) else [metric]

+ allowed_metrics = ['PCK', 'AUC', 'EPE', 'NME']

+ for metric in metrics:

+ if metric not in allowed_metrics:

+ raise KeyError(f'metric {metric} is not supported')

+

+ res_file = os.path.join(res_folder, 'result_keypoints.json')

+

+ kpts = []

+ for output in outputs:

+ preds = output['preds']

+ boxes = output['boxes']

+ image_paths = output['image_paths']

+ bbox_ids = output['bbox_ids']

+

+ batch_size = len(image_paths)

+ for i in range(batch_size):

+ image_id = self.name2id[image_paths[i][len(self.img_prefix):]]

+

+ kpts.append({

+ 'keypoints': preds[i].tolist(),

+ 'center': boxes[i][0:2].tolist(),

+ 'scale': boxes[i][2:4].tolist(),

+ 'area': float(boxes[i][4]),

+ 'score': float(boxes[i][5]),

+ 'image_id': image_id,

+ 'bbox_id': bbox_ids[i]

+ })

+ kpts = self._sort_and_unique_bboxes(kpts)

+

+ self._write_keypoint_results(kpts, res_file)

+ info_str = self._report_metric(res_file, metrics)

+ name_value = OrderedDict(info_str)

+

+ return name_value