Supported algorithms:

- [x] [DeepPose](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/algorithms.html#deeppose-cvpr-2014) (CVPR'2014) @@ -231,7 +211,7 @@ A summary can be found in the [Model Zoo](https://mmpose.readthedocs.io/en/lates - [x] [SCNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#scnet-cvpr-2020) (CVPR'2020) - [ ] [HigherHRNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#higherhrnet-cvpr-2020) (CVPR'2020) - [x] [RSN](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#rsn-eccv-2020) (ECCV'2020) -- [ ] [InterNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/algorithms.html#internet-eccv-2020) (ECCV'2020) +- [x] [InterNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/algorithms.html#internet-eccv-2020) (ECCV'2020) - [ ] [VoxelPose](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/algorithms.html#voxelpose-eccv-2020) (ECCV'2020) - [x] [LiteHRNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#litehrnet-cvpr-2021) (CVPR'2021) - [x] [ViPNAS](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#vipnas-cvpr-2021) (CVPR'2021) @@ -240,7 +220,7 @@ A summary can be found in the [Model Zoo](https://mmpose.readthedocs.io/en/lates

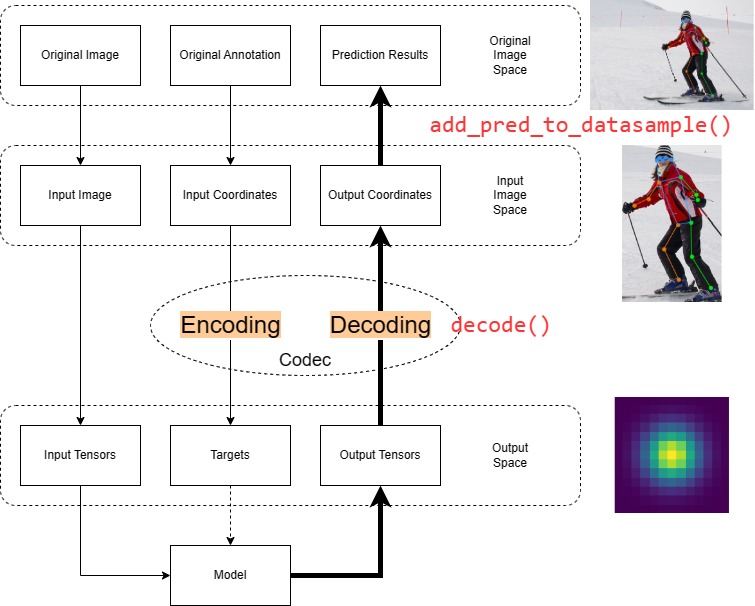

+

-

Supported techniques:

- [x] [FPN](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/techniques.html#fpn-cvpr-2017) (CVPR'2017) @@ -255,7 +235,7 @@ A summary can be found in the [Model Zoo](https://mmpose.readthedocs.io/en/lates

+

-

Supported datasets:

- [x] [AFLW](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/datasets.html#aflw-iccvw-2011) \[[homepage](https://www.tugraz.at/institute/icg/research/team-bischof/lrs/downloads/aflw/)\] (ICCVW'2011) @@ -291,10 +271,11 @@ A summary can be found in the [Model Zoo](https://mmpose.readthedocs.io/en/lates - [x] [Horse-10](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/datasets.html#horse-10-wacv-2021) \[[homepage](http://www.mackenziemathislab.org/horse10)\] (WACV'2021) - [x] [Human-Art](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/datasets.html#human-art-cvpr-2023) \[[homepage](https://idea-research.github.io/HumanArt/)\] (CVPR'2023) - [x] [LaPa](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/datasets.html#lapa-aaai-2020) \[[homepage](https://github.com/JDAI-CV/lapa-dataset)\] (AAAI'2020) +- [x] [UBody](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/datasets.html#ubody-cvpr-2023) \[[homepage](https://github.com/IDEA-Research/OSX)\] (CVPR'2023)

+

[](https://mmpose.readthedocs.io/en/latest/?badge=latest)

-[](https://github.com/open-mmlab/mmpose/actions)

+[](https://github.com/open-mmlab/mmpose/actions)

[](https://codecov.io/gh/open-mmlab/mmpose)

[](https://pypi.org/project/mmpose/)

[](https://github.com/open-mmlab/mmpose/blob/main/LICENSE)

[](https://github.com/open-mmlab/mmpose/issues)

[](https://github.com/open-mmlab/mmpose/issues)

+[](https://openxlab.org.cn/apps?search=mmpose)

[📘文档](https://mmpose.readthedocs.io/zh_CN/latest/) |

[🛠️安装](https://mmpose.readthedocs.io/zh_CN/latest/installation.html) |

@@ -95,12 +96,21 @@ https://user-images.githubusercontent.com/15977946/124654387-0fd3c500-ded1-11eb-

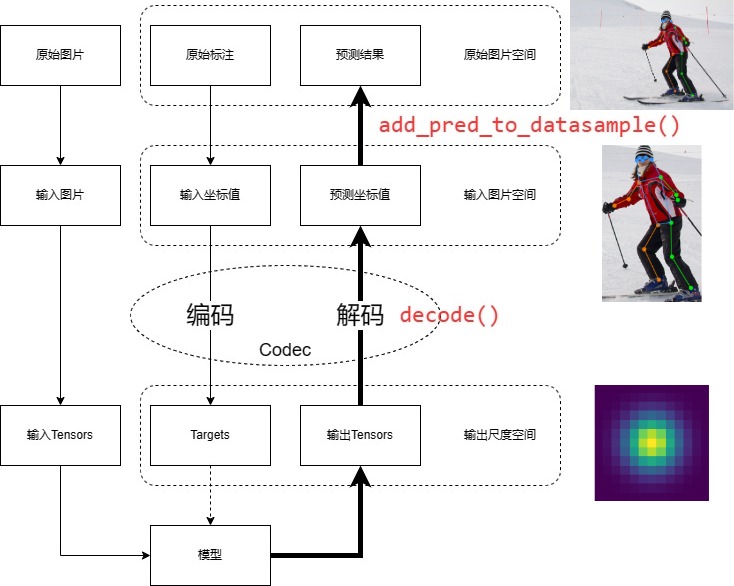

## 最新进展

-- 我们支持了三个新的数据集:

- - (CVPR 2023) [Human-Art](https://github.com/IDEA-Research/HumanArt)

- - (CVPR 2022) [Animal Kingdom](https://github.com/sutdcv/Animal-Kingdom)

- - (AAAI 2020) [LaPa](https://github.com/JDAI-CV/lapa-dataset/)

+- 我们支持了两个新的数据集:

-

+ - (CVPR 2023) [UBody](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#ubody-cvpr-2023)

+ - [300W-LP](https://github.com/open-mmlab/mmpose/tree/main/configs/face_2d_keypoint/topdown_heatmap/300wlp)

+

+- 支持四个新算法:

+

+ - (ICCV 2023) [MotionBERT](https://github.com/open-mmlab/mmpose/tree/main/configs/body_3d_keypoint/motionbert)

+ - (ICCVW 2023) [DWPose](https://github.com/open-mmlab/mmpose/tree/main/configs/wholebody_2d_keypoint/dwpose)

+ - (ICLR 2023) [EDPose](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo/body_2d_keypoint.html#edpose-edpose-on-coco)

+ - (ICLR 2022) [Uniformer](https://github.com/open-mmlab/mmpose/tree/main/projects/uniformer)

+

+- 发布首个在 COCO-Wholebody 上精度超过 70 AP 的全身姿态估计模型 RTMW,具体请参考 [RTMPose](/projects/rtmpose/)。[在线试玩](https://openxlab.org.cn/apps/detail/mmpose/RTMPose)

+

+

- 欢迎使用 [*MMPose 项目*](/projects/README.md)。在这里,您可以发现 MMPose 中的最新功能和算法,并且可以通过最快的方式与社区分享自己的创意和代码实现。向 MMPose 中添加新功能从此变得简单丝滑:

@@ -112,59 +122,25 @@ https://user-images.githubusercontent.com/15977946/124654387-0fd3c500-ded1-11eb-

- [YOLOX-Pose](/projects/yolox_pose/)

- [MMPose4AIGC](/projects/mmpose4aigc/)

- [Simple Keypoints](/projects/skps/)

+ - [Just Dance](/projects/just_dance/)

+ - [Uniformer](/projects/uniformer/)

- 从简单的 [示例项目](/projects/example_project/) 开启您的 MMPose 代码贡献者之旅吧,让我们共同打造更好用的 MMPose!

-- 2023-07-04:MMPose [v1.1.0](https://github.com/open-mmlab/mmpose/releases/tag/v1.1.0) 正式发布了,主要更新包括: +- 2023-10-12:MMPose [v1.2.0](https://github.com/open-mmlab/mmpose/releases/tag/v1.2.0) 正式发布了,主要更新包括: - - 支持新数据集:Human-Art、Animal Kingdom、LaPa。 - - 支持新的配置文件风格,支持 IDE 跳转和搜索。 - - 提供更强性能的 RTMPose 模型。 - - 迁移 3D 姿态估计算法。 - - 加速推理脚本,全部 demo 脚本支持摄像头推理。 + - 支持新数据集:UBody、300W-LP。 + - 支持新算法:MotionBERT、DWPose、EDPose、Uniformer + - 迁移 Associate Embedding、InterNet、YOLOX-Pose 算法。 + - 迁移 DeepFashion2 数据集。 + - 支持 Badcase 可视化分析、多数据集评测、关键点可见性预测功能。 - 请查看完整的 [版本说明](https://github.com/open-mmlab/mmpose/releases/tag/v1.1.0) 以了解更多 MMPose v1.1.0 带来的更新! + 请查看完整的 [版本说明](https://github.com/open-mmlab/mmpose/releases/tag/v1.2.0) 以了解更多 MMPose v1.2.0 带来的更新! ## 0.x / 1.x 迁移 -MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的变化。目前 v1.0.0 中已经完成了一部分算法的迁移工作,剩余的算法将在后续的版本中陆续完成,我们将在下面的列表中展示迁移进度。 - -

Supported backbones:

- [x] [AlexNet](https://mmpose.readthedocs.io/en/latest/model_zoo_papers/backbones.html#alexnet-neurips-2012) (NeurIPS'2012) diff --git a/README_CN.md b/README_CN.md index 48672c2a88..1fe1a50a43 100644 --- a/README_CN.md +++ b/README_CN.md @@ -19,12 +19,13 @@-- 2023-07-04:MMPose [v1.1.0](https://github.com/open-mmlab/mmpose/releases/tag/v1.1.0) 正式发布了,主要更新包括: +- 2023-10-12:MMPose [v1.2.0](https://github.com/open-mmlab/mmpose/releases/tag/v1.2.0) 正式发布了,主要更新包括: - - 支持新数据集:Human-Art、Animal Kingdom、LaPa。 - - 支持新的配置文件风格,支持 IDE 跳转和搜索。 - - 提供更强性能的 RTMPose 模型。 - - 迁移 3D 姿态估计算法。 - - 加速推理脚本,全部 demo 脚本支持摄像头推理。 + - 支持新数据集:UBody、300W-LP。 + - 支持新算法:MotionBERT、DWPose、EDPose、Uniformer + - 迁移 Associate Embedding、InterNet、YOLOX-Pose 算法。 + - 迁移 DeepFashion2 数据集。 + - 支持 Badcase 可视化分析、多数据集评测、关键点可见性预测功能。 - 请查看完整的 [版本说明](https://github.com/open-mmlab/mmpose/releases/tag/v1.1.0) 以了解更多 MMPose v1.1.0 带来的更新! + 请查看完整的 [版本说明](https://github.com/open-mmlab/mmpose/releases/tag/v1.2.0) 以了解更多 MMPose v1.2.0 带来的更新! ## 0.x / 1.x 迁移 -MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的变化。目前 v1.0.0 中已经完成了一部分算法的迁移工作,剩余的算法将在后续的版本中陆续完成,我们将在下面的列表中展示迁移进度。 - -

-

+MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的变化。目前 v1.0.0 中已经完成了一部分算法的迁移工作,剩余的算法将在后续的版本中陆续完成,我们将在这个 [Issue 页面](https://github.com/open-mmlab/mmpose/issues/2258) 中展示迁移进度。

如果您使用的算法还没有完成迁移,您也可以继续使用访问 [0.x 分支](https://github.com/open-mmlab/mmpose/tree/0.x) 和 [旧版文档](https://mmpose.readthedocs.io/zh_CN/0.x/)

@@ -184,6 +160,9 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的

- [配置文件](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/configs.html)

- [准备数据集](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/prepare_datasets.html)

- [训练与测试](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/train_and_test.html)

+ - [模型部署](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/how_to_deploy.html)

+ - [模型分析工具](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/model_analysis.html)

+ - [数据集标注与预处理脚本](https://mmpose.readthedocs.io/zh_CN/latest/user_guides/dataset_tools.html)

2. 对于希望基于 MMPose 进行开发的研究者和开发者:

@@ -192,10 +171,9 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的

- [实现新模型](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/implement_new_models.html)

- [自定义数据集](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/customize_datasets.html)

- [自定义数据变换](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/customize_transforms.html)

+ - [自定义指标](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/customize_evaluation.html)

- [自定义优化器](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/customize_optimizer.html)

- [自定义日志](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/customize_logging.html)

- - [模型部署](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/how_to_deploy.html)

- - [模型分析工具](https://mmpose.readthedocs.io/zh_CN/latest/advanced_guides/model_analysis.html)

- [迁移指南](https://mmpose.readthedocs.io/zh_CN/latest/migration.html)

3. 对于希望加入开源社区,向 MMPose 贡献代码的研究者和开发者:

@@ -211,7 +189,7 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的

各个模型的结果和设置都可以在对应的 config(配置)目录下的 **README.md** 中查看。

整体的概况也可也在 [模型库](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo.html) 页面中查看。

-迁移进度

- -| 算法名称 | 迁移进度 | -| :-------------------------------- | :---------: | -| MTUT (CVPR 2019) | | -| MSPN (ArXiv 2019) | done | -| InterNet (ECCV 2020) | | -| DEKR (CVPR 2021) | done | -| HigherHRNet (CVPR 2020) | | -| DeepPose (CVPR 2014) | done | -| RLE (ICCV 2021) | done | -| SoftWingloss (TIP 2021) | done | -| VideoPose3D (CVPR 2019) | done | -| Hourglass (ECCV 2016) | done | -| LiteHRNet (CVPR 2021) | done | -| AdaptiveWingloss (ICCV 2019) | done | -| SimpleBaseline2D (ECCV 2018) | done | -| PoseWarper (NeurIPS 2019) | | -| SimpleBaseline3D (ICCV 2017) | done | -| HMR (CVPR 2018) | | -| UDP (CVPR 2020) | done | -| VIPNAS (CVPR 2021) | done | -| Wingloss (CVPR 2018) | done | -| DarkPose (CVPR 2020) | done | -| Associative Embedding (NIPS 2017) | in progress | -| VoxelPose (ECCV 2020) | | -| RSN (ECCV 2020) | done | -| CID (CVPR 2022) | done | -| CPM (CVPR 2016) | done | -| HRNet (CVPR 2019) | done | -| HRNetv2 (TPAMI 2019) | done | -| SCNet (CVPR 2020) | done | - -

+

-

支持的算法

- [x] [DeepPose](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/algorithms.html#deeppose-cvpr-2014) (CVPR'2014) @@ -229,7 +207,7 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的 - [x] [SCNet](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/backbones.html#scnet-cvpr-2020) (CVPR'2020) - [ ] [HigherHRNet](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/backbones.html#higherhrnet-cvpr-2020) (CVPR'2020) - [x] [RSN](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/backbones.html#rsn-eccv-2020) (ECCV'2020) -- [ ] [InterNet](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/algorithms.html#internet-eccv-2020) (ECCV'2020) +- [x] [InterNet](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/algorithms.html#internet-eccv-2020) (ECCV'2020) - [ ] [VoxelPose](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/algorithms.html#voxelpose-eccv-2020) (ECCV'2020) - [x] [LiteHRNet](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/backbones.html#litehrnet-cvpr-2021) (CVPR'2021) - [x] [ViPNAS](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/backbones.html#vipnas-cvpr-2021) (CVPR'2021) @@ -238,7 +216,7 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的

+

-

支持的技术

- [x] [FPN](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/techniques.html#fpn-cvpr-2017) (CVPR'2017) @@ -253,7 +231,7 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的

+

-

支持的数据集

- [x] [AFLW](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#aflw-iccvw-2011) \[[主页](https://www.tugraz.at/institute/icg/research/team-bischof/lrs/downloads/aflw/)\] (ICCVW'2011) @@ -289,10 +267,11 @@ MMPose v1.0.0 是一个重大更新,包括了大量的 API 和配置文件的 - [x] [Horse-10](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#horse-10-wacv-2021) \[[主页](http://www.mackenziemathislab.org/horse10)\] (WACV'2021) - [x] [Human-Art](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#human-art-cvpr-2023) \[[主页](https://idea-research.github.io/HumanArt/)\] (CVPR'2023) - [x] [LaPa](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#lapa-aaai-2020) \[[主页](https://github.com/JDAI-CV/lapa-dataset)\] (AAAI'2020) +- [x] [UBody](https://mmpose.readthedocs.io/zh_CN/latest/model_zoo_papers/datasets.html#ubody-cvpr-2023) \[[主页](https://github.com/IDEA-Research/OSX)\] (CVPR'2023)

+

(LaPa) | FLOPS

(G) | Download | | :--------------------------------------------------------------------------: | :--------: | :----------------: | :---------------: | :-----------------------------------------------------------------------------: | -| [RTMPose-t\*](./rtmpose/face_2d_keypoint/rtmpose-t_8xb256-120e_lapa-256x256.py) | 256x256 | 1.67 | 0.652 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-t_simcc-face6_pt-in1k_120e-256x256-df79d9a5_20230529.pth) | -| [RTMPose-s\*](./rtmpose/face_2d_keypoint/rtmpose-m_8xb256-120e_lapa-256x256.py) | 256x256 | 1.59 | 1.119 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-s_simcc-face6_pt-in1k_120e-256x256-d779fdef_20230529.pth) | -| [RTMPose-m\*](./rtmpose/face_2d_keypoint/rtmpose-m_8xb256-120e_lapa-256x256.py) | 256x256 | 1.44 | 2.852 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth) | +| [RTMPose-t\*](./rtmpose/face_2d_keypoint/rtmpose-t_8xb256-120e_face6-256x256.py) | 256x256 | 1.67 | 0.652 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-t_simcc-face6_pt-in1k_120e-256x256-df79d9a5_20230529.pth) | +| [RTMPose-s\*](./rtmpose/face_2d_keypoint/rtmpose-s_8xb256-120e_face6-256x256.py) | 256x256 | 1.59 | 1.119 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-s_simcc-face6_pt-in1k_120e-256x256-d779fdef_20230529.pth) | +| [RTMPose-m\*](./rtmpose/face_2d_keypoint/rtmpose-m_8xb256-120e_face6-256x256.py) | 256x256 | 1.44 | 2.852 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth) | diff --git a/configs/face_2d_keypoint/rtmpose/face6/rtmpose_face6.yml b/configs/face_2d_keypoint/rtmpose/face6/rtmpose_face6.yml index 2cd822a337..38b8395bd9 100644 --- a/configs/face_2d_keypoint/rtmpose/face6/rtmpose_face6.yml +++ b/configs/face_2d_keypoint/rtmpose/face6/rtmpose_face6.yml @@ -42,6 +42,7 @@ Models: Architecture: *id001 Training Data: *id002 Name: rtmpose-m_8xb256-120e_face6-256x256 + Alias: face Results: - Dataset: Face6 Metrics: diff --git a/configs/face_2d_keypoint/rtmpose/wflw/rtmpose_wflw.yml b/configs/face_2d_keypoint/rtmpose/wflw/rtmpose_wflw.yml index deee03a7dd..1112fdf69d 100644 --- a/configs/face_2d_keypoint/rtmpose/wflw/rtmpose_wflw.yml +++ b/configs/face_2d_keypoint/rtmpose/wflw/rtmpose_wflw.yml @@ -1,7 +1,6 @@ Models: - Config: configs/face_2d_keypoint/rtmpose/wflw/rtmpose-m_8xb64-60e_wflw-256x256.py In Collection: RTMPose - Alias: face Metadata: Architecture: - RTMPose diff --git a/configs/face_2d_keypoint/topdown_heatmap/300wlp/hrnetv2_300wlp.md b/configs/face_2d_keypoint/topdown_heatmap/300wlp/hrnetv2_300wlp.md new file mode 100644 index 0000000000..773bc602ae --- /dev/null +++ b/configs/face_2d_keypoint/topdown_heatmap/300wlp/hrnetv2_300wlp.md @@ -0,0 +1,42 @@ + + +

(G) | ORT-Latency

(ms)

(i7-11700) | TRT-FP16-Latency

(ms)

(GTX 1660Ti) | Download | +| :----------- | :-----------------: | :-----------------: | :--------: | :------: | :------: | :---------------: | :-----------------------------------------: | :------------------------------------------------: | :------------: | +| [DWPose-t](../rtmpose/ubody/rtmpose-t_8xb64-270e_coco-ubody-wholebody-256x192.py) | [DW l-t](../dwpose/ubody/s1_dis/dwpose_l_dis_t_coco-ubody-256x192.py) | [DW t-t](../dwpose/ubody/s2_dis/dwpose_t-tt_coco-ubody-256x192.py) | 256x192 | 48.5 | 58.4 | 0.5 | - | - | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-t_simcc-ucoco_dw-ucoco_270e-256x192-dcf277bf_20230728.pth) | +| [DWPose-s](../rtmpose/ubody/rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py) | [DW l-s](../dwpose/ubody/s1_dis/dwpose_l_dis_s_coco-ubody-256x192.py) | [DW s-s](../dwpose/ubody/s2_dis/dwpose_s-ss_coco-ubody-256x192.py) | 256x192 | 53.8 | 63.2 | 0.9 | - | - | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-s_simcc-ucoco_dw-ucoco_270e-256x192-3fd922c8_20230728.pth) | +| [DWPose-m](../rtmpose/ubody/rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py) | [DW l-m](../dwpose/ubody/s1_dis/dwpose_l_dis_m_coco-ubody-256x192.py) | [DW m-m](../dwpose/ubody/s2_dis/dwpose_m-mm_coco-ubody-256x192.py) | 256x192 | 60.6 | 69.5 | 2.22 | 13.50 | 4.00 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-ucoco_dw-ucoco_270e-256x192-c8b76419_20230728.pth) | +| [DWPose-l](../rtmpose/ubody/rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py) | [DW x-l](../dwpose/ubody/s1_dis/dwpose_x_dis_l_coco-ubody-256x192.py) | [DW l-l](../dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-256x192.py) | 256x192 | 63.1 | 71.7 | 4.52 | 23.41 | 5.67 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-l_simcc-ucoco_dw-ucoco_270e-256x192-4d6dfc62_20230728.pth) | +| [DWPose-l](../rtmpose/ubody/rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py) | [DW x-l](../dwpose/ubody/s1_dis/dwpose_x_dis_l_coco-ubody-384x288.py) | [DW l-l](../dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py) | 384x288 | 66.5 | 74.3 | 10.07 | 44.58 | 7.68 | [Model](https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-l_simcc-ucoco_dw-ucoco_270e-384x288-2438fd99_20230728.pth) | + +## Train a model + +### Train DWPose with the first stage distillation + +``` +bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py 8 +``` + +### Tansfer the S1 distillation models into regular models + +``` +# first stage distillation +python pth_transfer.py $dis_ckpt $new_pose_ckpt +``` + +⭐Before S2 distillation, you should add your model path into 'teacher_pretrained' of your S2 dis_config. + +### Train DWPose with the second stage distillation + +``` +bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py 8 +``` + +### Tansfer the S2 distillation models into regular models + +``` +# second stage distillation +python pth_transfer.py $dis_ckpt $new_pose_ckpt --two_dis +``` + +## Citation + +``` +@article{yang2023effective, + title={Effective Whole-body Pose Estimation with Two-stages Distillation}, + author={Yang, Zhendong and Zeng, Ailing and Yuan, Chun and Li, Yu}, + journal={arXiv preprint arXiv:2307.15880}, + year={2023} +} +``` diff --git a/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_l_dis_m_coco-256x192.py b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_l_dis_m_coco-256x192.py new file mode 100644 index 0000000000..422871acbb --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_l_dis_m_coco-256x192.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/coco-wholebody/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmpose/rtmpose-l_simcc-coco-wholebody_pt-aic-coco_270e-256x192-6f206314_20230124.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-l_8xb64-270e_coco-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-m_8xb64-270e_coco-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=768, + teacher_channels=1024, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_x_dis_l_coco-384x288.py b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_x_dis_l_coco-384x288.py new file mode 100644 index 0000000000..150cb2bbe6 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s1_dis/dwpose_x_dis_l_coco-384x288.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/coco-wholebody/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-x_simcc-coco-wholebody_pt-body7_270e-384x288-401dfc90_20230629.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-x_8xb32-270e_coco-wholebody-384x288.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-l_8xb32-270e_coco-wholebody-384x288.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=1024, + teacher_channels=1280, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_l-ll_coco-384x288.py b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_l-ll_coco-384x288.py new file mode 100644 index 0000000000..6c63f99b0c --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_l-ll_coco-384x288.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/coco-wholebody/rtmpose-l_8xb32-270e_coco-wholebody-384x288.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_x_dis_l_coco-384x288/dw-x-l_coco_384.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-l_8xb32-270e_coco-wholebody-384x288.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-l_8xb32-270e_coco-wholebody-384x288.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_m-mm_coco-256x192.py b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_m-mm_coco-256x192.py new file mode 100644 index 0000000000..943ec60184 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/coco-wholebody/s2_dis/dwpose_m-mm_coco-256x192.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/coco-wholebody/rtmpose-m_8xb64-270e_coco-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_l_dis_m_coco-256x192/dw-l-m_coco_256.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-m_8xb64-270e_coco-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/coco-wholebody/' + 'rtmpose-m_8xb64-270e_coco-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_m_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_m_coco-ubody-256x192.py new file mode 100644 index 0000000000..b3a917b96e --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_m_coco-ubody-256x192.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-l_ucoco_256x192-95bb32f5_20230822.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=768, + teacher_channels=1024, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_s_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_s_coco-ubody-256x192.py new file mode 100644 index 0000000000..c90a0ea6a7 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_s_coco-ubody-256x192.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-l_ucoco_256x192-95bb32f5_20230822.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=512, + teacher_channels=1024, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_t_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_t_coco-ubody-256x192.py new file mode 100644 index 0000000000..01618f146a --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_l_dis_t_coco-ubody-256x192.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-l_ucoco_256x192-95bb32f5_20230822.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-t_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=384, + teacher_channels=1024, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_x_dis_l_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_x_dis_l_coco-ubody-256x192.py new file mode 100644 index 0000000000..85a287324b --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/dwpose_x_dis_l_coco-ubody-256x192.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-x_ucoco_256x192-05f5bcb7_20230822.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-x_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=1024, + teacher_channels=1280, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py new file mode 100644 index 0000000000..acde64a03a --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py @@ -0,0 +1,48 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py' # noqa: E501 +] + +# model settings +find_unused_parameters = False + +# config settings +fea = True +logit = True + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + teacher_pretrained='https://download.openmmlab.com/mmpose/v1/projects/' + 'rtmposev1/rtmpose-x_ucoco_384x288-f5b50679_20230822.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-x_8xb32-270e_coco-ubody-wholebody-384x288.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='FeaLoss', + name='loss_fea', + use_this=fea, + student_channels=1024, + teacher_channels=1280, + alpha_fea=0.00007, + ) + ]), + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=0.1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), +) +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-256x192.py new file mode 100644 index 0000000000..e3f456a2b9 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-256x192.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_x_dis_l_coco-ubody-256x192/dw-x-l_ucoco_256.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py new file mode 100644 index 0000000000..3815fad1e2 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_x_dis_l_coco-ubody-384x288/dw-x-l_ucoco_384.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_m-mm_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_m-mm_coco-ubody-256x192.py new file mode 100644 index 0000000000..1e6834ffca --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_m-mm_coco-ubody-256x192.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_l_dis_m_coco-ubody-256x192/dw-l-m_ucoco_256.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-m_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_s-ss_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_s-ss_coco-ubody-256x192.py new file mode 100644 index 0000000000..24a4a94642 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_s-ss_coco-ubody-256x192.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_l_dis_s_coco-ubody-256x192/dw-l-s_ucoco_256.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-s_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_t-tt_coco-ubody-256x192.py b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_t-tt_coco-ubody-256x192.py new file mode 100644 index 0000000000..c7c322ece2 --- /dev/null +++ b/configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_t-tt_coco-ubody-256x192.py @@ -0,0 +1,45 @@ +_base_ = [ + '../../../rtmpose/ubody/rtmpose-t_8xb64-270e_coco-ubody-wholebody-256x192.py' # noqa: E501 +] + +# model settings +find_unused_parameters = True + +# dis settings +second_dis = True + +# config settings +logit = True + +train_cfg = dict(max_epochs=60, val_interval=10) + +# method details +model = dict( + _delete_=True, + type='DWPoseDistiller', + two_dis=second_dis, + teacher_pretrained='work_dirs/' + 'dwpose_l_dis_t_coco-ubody-256x192/dw-l-t_ucoco_256.pth', # noqa: E501 + teacher_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-t_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + student_cfg='configs/wholebody_2d_keypoint/rtmpose/ubody/' + 'rtmpose-t_8xb64-270e_coco-ubody-wholebody-256x192.py', # noqa: E501 + distill_cfg=[ + dict(methods=[ + dict( + type='KDLoss', + name='loss_logit', + use_this=logit, + weight=1, + ) + ]), + ], + data_preprocessor=dict( + type='PoseDataPreprocessor', + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + train_cfg=train_cfg, +) + +optim_wrapper = dict(clip_grad=dict(max_norm=1., norm_type=2)) diff --git a/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb320-270e_cocktail13-384x288.py b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb320-270e_cocktail13-384x288.py new file mode 100644 index 0000000000..55d07b61a8 --- /dev/null +++ b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb320-270e_cocktail13-384x288.py @@ -0,0 +1,638 @@ +# Copyright (c) OpenMMLab. All rights reserved. +from mmengine.config import read_base + +with read_base(): + from mmpose.configs._base_.default_runtime import * # noqa + +from albumentations.augmentations import Blur, CoarseDropout, MedianBlur +from mmdet.engine.hooks import PipelineSwitchHook +from mmengine.dataset import DefaultSampler +from mmengine.hooks import EMAHook +from mmengine.model import PretrainedInit +from mmengine.optim import CosineAnnealingLR, LinearLR, OptimWrapper +from torch.nn import SiLU, SyncBatchNorm +from torch.optim import AdamW + +from mmpose.codecs import SimCCLabel +from mmpose.datasets import (AicDataset, CocoWholeBodyDataset, COFWDataset, + CombinedDataset, CrowdPoseDataset, + Face300WDataset, GenerateTarget, + GetBBoxCenterScale, HalpeDataset, + HumanArt21Dataset, InterHand2DDoubleDataset, + JhmdbDataset, KeypointConverter, LapaDataset, + LoadImage, MpiiDataset, PackPoseInputs, + PoseTrack18Dataset, RandomFlip, RandomHalfBody, + TopdownAffine, UBody2dDataset, WFLWDataset) +from mmpose.datasets.transforms.common_transforms import ( + Albumentation, PhotometricDistortion, RandomBBoxTransform) +from mmpose.engine.hooks import ExpMomentumEMA +from mmpose.evaluation import CocoWholeBodyMetric +from mmpose.models import (CSPNeXt, CSPNeXtPAFPN, KLDiscretLoss, + PoseDataPreprocessor, RTMWHead, + TopdownPoseEstimator) + +# common setting +num_keypoints = 133 +input_size = (288, 384) + +# runtime +max_epochs = 270 +stage2_num_epochs = 10 +base_lr = 5e-4 +train_batch_size = 320 +val_batch_size = 32 + +train_cfg.update(max_epochs=max_epochs, val_interval=10) # noqa +randomness = dict(seed=21) + +# optimizer +optim_wrapper = dict( + type=OptimWrapper, + optimizer=dict(type=AdamW, lr=base_lr, weight_decay=0.05), + clip_grad=dict(max_norm=35, norm_type=2), + paramwise_cfg=dict( + norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True)) + +# learning rate +param_scheduler = [ + dict( + type=LinearLR, start_factor=1.0e-5, by_epoch=False, begin=0, end=1000), + dict( + type=CosineAnnealingLR, + eta_min=base_lr * 0.05, + begin=max_epochs // 2, + end=max_epochs, + T_max=max_epochs // 2, + by_epoch=True, + convert_to_iter_based=True), +] + +# automatically scaling LR based on the actual training batch size +auto_scale_lr = dict(base_batch_size=5632) + +# codec settings +codec = dict( + type=SimCCLabel, + input_size=input_size, + sigma=(6., 6.93), + simcc_split_ratio=2.0, + normalize=False, + use_dark=False) + +# model settings +model = dict( + type=TopdownPoseEstimator, + data_preprocessor=dict( + type=PoseDataPreprocessor, + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + backbone=dict( + type=CSPNeXt, + arch='P5', + expand_ratio=0.5, + deepen_factor=1.33, + widen_factor=1.25, + channel_attention=True, + norm_cfg=dict(type='BN'), + act_cfg=dict(type=SiLU), + init_cfg=dict( + type=PretrainedInit, + prefix='backbone.', + checkpoint='https://download.openmmlab.com/mmpose/v1/' + 'wholebody_2d_keypoint/rtmpose/ubody/rtmpose-x_simcc-ucoco_pt-aic-coco_270e-384x288-f5b50679_20230822.pth' # noqa + )), + neck=dict( + type=CSPNeXtPAFPN, + in_channels=[320, 640, 1280], + out_channels=None, + out_indices=( + 1, + 2, + ), + num_csp_blocks=2, + expand_ratio=0.5, + norm_cfg=dict(type=SyncBatchNorm), + act_cfg=dict(type=SiLU, inplace=True)), + head=dict( + type=RTMWHead, + in_channels=1280, + out_channels=num_keypoints, + input_size=input_size, + in_featuremap_size=tuple([s // 32 for s in input_size]), + simcc_split_ratio=codec['simcc_split_ratio'], + final_layer_kernel_size=7, + gau_cfg=dict( + hidden_dims=256, + s=128, + expansion_factor=2, + dropout_rate=0., + drop_path=0., + act_fn=SiLU, + use_rel_bias=False, + pos_enc=False), + loss=dict( + type=KLDiscretLoss, + use_target_weight=True, + beta=10., + label_softmax=True), + decoder=codec), + test_cfg=dict(flip_test=True)) + +# base dataset settings +dataset_type = CocoWholeBodyDataset +data_mode = 'topdown' +data_root = 'data/' + +backend_args = dict(backend='local') + +# pipelines +train_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=RandomFlip, direction='horizontal'), + dict(type=RandomHalfBody), + dict(type=RandomBBoxTransform, scale_factor=[0.5, 1.5], rotate_factor=90), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict(type=PhotometricDistortion), + dict( + type=Albumentation, + transforms=[ + dict(type=Blur, p=0.1), + dict(type=MedianBlur, p=0.1), + dict( + type=CoarseDropout, + max_holes=1, + max_height=0.4, + max_width=0.4, + min_holes=1, + min_height=0.2, + min_width=0.2, + p=1.0), + ]), + dict( + type=GenerateTarget, encoder=codec, use_dataset_keypoint_weights=True), + dict(type=PackPoseInputs) +] +val_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict(type=PackPoseInputs) +] +train_pipeline_stage2 = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=RandomFlip, direction='horizontal'), + dict(type=RandomHalfBody), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[0.5, 1.5], + rotate_factor=90), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict( + type=Albumentation, + transforms=[ + dict(type=Blur, p=0.1), + dict(type=MedianBlur, p=0.1), + ]), + dict( + type=GenerateTarget, encoder=codec, use_dataset_keypoint_weights=True), + dict(type=PackPoseInputs) +] + +# mapping + +aic_coco133 = [(0, 6), (1, 8), (2, 10), (3, 5), (4, 7), (5, 9), (6, 12), + (7, 14), (8, 16), (9, 11), (10, 13), (11, 15)] + +crowdpose_coco133 = [(0, 5), (1, 6), (2, 7), (3, 8), (4, 9), (5, 10), (6, 11), + (7, 12), (8, 13), (9, 14), (10, 15), (11, 16)] + +mpii_coco133 = [ + (0, 16), + (1, 14), + (2, 12), + (3, 11), + (4, 13), + (5, 15), + (8, 18), + (9, 17), + (10, 10), + (11, 8), + (12, 6), + (13, 5), + (14, 7), + (15, 9), +] + +jhmdb_coco133 = [ + (0, 18), + (2, 17), + (3, 6), + (4, 5), + (5, 12), + (6, 11), + (7, 8), + (8, 7), + (9, 14), + (10, 13), + (11, 10), + (12, 9), + (13, 16), + (14, 15), +] + +halpe_coco133 = [(i, i) + for i in range(17)] + [(20, 17), (21, 20), (22, 18), (23, 21), + (24, 19), + (25, 22)] + [(i, i - 3) + for i in range(26, 136)] + +posetrack_coco133 = [ + (0, 0), + (2, 17), + (3, 3), + (4, 4), + (5, 5), + (6, 6), + (7, 7), + (8, 8), + (9, 9), + (10, 10), + (11, 11), + (12, 12), + (13, 13), + (14, 14), + (15, 15), + (16, 16), +] + +humanart_coco133 = [(i, i) for i in range(17)] + [(17, 99), (18, 120), + (19, 17), (20, 20)] + +# train datasets +dataset_coco = dict( + type=dataset_type, + data_root=data_root, + data_mode=data_mode, + ann_file='coco/annotations/coco_wholebody_train_v1.0.json', + data_prefix=dict(img='detection/coco/train2017/'), + pipeline=[], +) + +dataset_aic = dict( + type=AicDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='aic/annotations/aic_train.json', + data_prefix=dict(img='pose/ai_challenge/ai_challenger_keypoint' + '_train_20170902/keypoint_train_images_20170902/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=aic_coco133) + ], +) + +dataset_crowdpose = dict( + type=CrowdPoseDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='crowdpose/annotations/mmpose_crowdpose_trainval.json', + data_prefix=dict(img='pose/CrowdPose/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=crowdpose_coco133) + ], +) + +dataset_mpii = dict( + type=MpiiDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='mpii/annotations/mpii_train.json', + data_prefix=dict(img='pose/MPI/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=mpii_coco133) + ], +) + +dataset_jhmdb = dict( + type=JhmdbDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='jhmdb/annotations/Sub1_train.json', + data_prefix=dict(img='pose/JHMDB/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=jhmdb_coco133) + ], +) + +dataset_halpe = dict( + type=HalpeDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='halpe/annotations/halpe_train_v1.json', + data_prefix=dict(img='pose/Halpe/hico_20160224_det/images/train2015'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=halpe_coco133) + ], +) + +dataset_posetrack = dict( + type=PoseTrack18Dataset, + data_root=data_root, + data_mode=data_mode, + ann_file='posetrack18/annotations/posetrack18_train.json', + data_prefix=dict(img='pose/PoseChallenge2018/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=posetrack_coco133) + ], +) + +dataset_humanart = dict( + type=HumanArt21Dataset, + data_root=data_root, + data_mode=data_mode, + ann_file='HumanArt/annotations/training_humanart.json', + filter_cfg=dict(scenes=['real_human']), + data_prefix=dict(img='pose/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=humanart_coco133) + ]) + +ubody_scenes = [ + 'Magic_show', 'Entertainment', 'ConductMusic', 'Online_class', 'TalkShow', + 'Speech', 'Fitness', 'Interview', 'Olympic', 'TVShow', 'Singing', + 'SignLanguage', 'Movie', 'LiveVlog', 'VideoConference' +] + +ubody_datasets = [] +for scene in ubody_scenes: + each = dict( + type=UBody2dDataset, + data_root=data_root, + data_mode=data_mode, + ann_file=f'Ubody/annotations/{scene}/train_annotations.json', + data_prefix=dict(img='pose/UBody/images/'), + pipeline=[], + sample_interval=10) + ubody_datasets.append(each) + +dataset_ubody = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/ubody2d.py'), + datasets=ubody_datasets, + pipeline=[], + test_mode=False, +) + +face_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale, padding=1.25), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[1.5, 2.0], + rotate_factor=0), +] + +wflw_coco133 = [(i * 2, 23 + i) + for i in range(17)] + [(33 + i, 40 + i) for i in range(5)] + [ + (42 + i, 45 + i) for i in range(5) + ] + [(51 + i, 50 + i) + for i in range(9)] + [(60, 59), (61, 60), (63, 61), + (64, 62), (65, 63), (67, 64), + (68, 65), (69, 66), (71, 67), + (72, 68), (73, 69), + (75, 70)] + [(76 + i, 71 + i) + for i in range(20)] +dataset_wflw = dict( + type=WFLWDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='wflw/annotations/face_landmarks_wflw_train.json', + data_prefix=dict(img='pose/WFLW/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=wflw_coco133), *face_pipeline + ], +) + +mapping_300w_coco133 = [(i, 23 + i) for i in range(68)] +dataset_300w = dict( + type=Face300WDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='300w/annotations/face_landmarks_300w_train.json', + data_prefix=dict(img='pose/300w/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=mapping_300w_coco133), *face_pipeline + ], +) + +cofw_coco133 = [(0, 40), (2, 44), (4, 42), (1, 49), (3, 45), (6, 47), (8, 59), + (10, 62), (9, 68), (11, 65), (18, 54), (19, 58), (20, 53), + (21, 56), (22, 71), (23, 77), (24, 74), (25, 85), (26, 89), + (27, 80), (28, 31)] +dataset_cofw = dict( + type=COFWDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='cofw/annotations/cofw_train.json', + data_prefix=dict(img='pose/COFW/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=cofw_coco133), *face_pipeline + ], +) + +lapa_coco133 = [(i * 2, 23 + i) for i in range(17)] + [ + (33 + i, 40 + i) for i in range(5) +] + [(42 + i, 45 + i) for i in range(5)] + [ + (51 + i, 50 + i) for i in range(4) +] + [(58 + i, 54 + i) for i in range(5)] + [(66, 59), (67, 60), (69, 61), + (70, 62), (71, 63), (73, 64), + (75, 65), (76, 66), (78, 67), + (79, 68), (80, 69), + (82, 70)] + [(84 + i, 71 + i) + for i in range(20)] +dataset_lapa = dict( + type=LapaDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='LaPa/annotations/lapa_trainval.json', + data_prefix=dict(img='pose/LaPa/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=lapa_coco133), *face_pipeline + ], +) + +dataset_wb = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[dataset_coco, dataset_halpe, dataset_ubody], + pipeline=[], + test_mode=False, +) + +dataset_body = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[ + dataset_aic, + dataset_crowdpose, + dataset_mpii, + dataset_jhmdb, + dataset_posetrack, + dataset_humanart, + ], + pipeline=[], + test_mode=False, +) + +dataset_face = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[ + dataset_wflw, + dataset_300w, + dataset_cofw, + dataset_lapa, + ], + pipeline=[], + test_mode=False, +) + +hand_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[1.5, 2.0], + rotate_factor=0), +] + +interhand_left = [(21, 95), (22, 94), (23, 93), (24, 92), (25, 99), (26, 98), + (27, 97), (28, 96), (29, 103), (30, 102), (31, 101), + (32, 100), (33, 107), (34, 106), (35, 105), (36, 104), + (37, 111), (38, 110), (39, 109), (40, 108), (41, 91)] +interhand_right = [(i - 21, j + 21) for i, j in interhand_left] +interhand_coco133 = interhand_right + interhand_left + +dataset_interhand2d = dict( + type=InterHand2DDoubleDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='interhand26m/annotations/all/InterHand2.6M_train_data.json', + camera_param_file='interhand26m/annotations/all/' + 'InterHand2.6M_train_camera.json', + joint_file='interhand26m/annotations/all/' + 'InterHand2.6M_train_joint_3d.json', + data_prefix=dict(img='interhand2.6m/images/train/'), + sample_interval=10, + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=interhand_coco133, + ), *hand_pipeline + ], +) + +dataset_hand = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[dataset_interhand2d], + pipeline=[], + test_mode=False, +) + +train_datasets = [dataset_wb, dataset_body, dataset_face, dataset_hand] + +# data loaders +train_dataloader = dict( + batch_size=train_batch_size, + num_workers=4, + pin_memory=False, + persistent_workers=True, + sampler=dict(type=DefaultSampler, shuffle=True), + dataset=dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=train_datasets, + pipeline=train_pipeline, + test_mode=False, + )) + +val_dataloader = dict( + batch_size=val_batch_size, + num_workers=4, + persistent_workers=True, + drop_last=False, + sampler=dict(type=DefaultSampler, shuffle=False, round_up=False), + dataset=dict( + type=CocoWholeBodyDataset, + ann_file='data/coco/annotations/coco_wholebody_val_v1.0.json', + data_prefix=dict(img='data/detection/coco/val2017/'), + pipeline=val_pipeline, + bbox_file='data/coco/person_detection_results/' + 'COCO_val2017_detections_AP_H_56_person.json', + test_mode=True)) + +test_dataloader = val_dataloader + +# hooks +default_hooks.update( # noqa + checkpoint=dict( + save_best='coco-wholebody/AP', rule='greater', max_keep_ckpts=1)) + +custom_hooks = [ + dict( + type=EMAHook, + ema_type=ExpMomentumEMA, + momentum=0.0002, + update_buffers=True, + priority=49), + dict( + type=PipelineSwitchHook, + switch_epoch=max_epochs - stage2_num_epochs, + switch_pipeline=train_pipeline_stage2) +] + +# evaluators +val_evaluator = dict( + type=CocoWholeBodyMetric, + ann_file='data/coco/annotations/coco_wholebody_val_v1.0.json') +test_evaluator = val_evaluator diff --git a/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb704-270e_cocktail13-256x192.py b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb704-270e_cocktail13-256x192.py new file mode 100644 index 0000000000..48275c3c11 --- /dev/null +++ b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw-x_8xb704-270e_cocktail13-256x192.py @@ -0,0 +1,639 @@ +# Copyright (c) OpenMMLab. All rights reserved. +from mmengine.config import read_base + +with read_base(): + from mmpose.configs._base_.default_runtime import * # noqa + +from albumentations.augmentations import Blur, CoarseDropout, MedianBlur +from mmdet.engine.hooks import PipelineSwitchHook +from mmengine.dataset import DefaultSampler +from mmengine.hooks import EMAHook +from mmengine.model import PretrainedInit +from mmengine.optim import CosineAnnealingLR, LinearLR, OptimWrapper +from torch.nn import SiLU, SyncBatchNorm +from torch.optim import AdamW + +from mmpose.codecs import SimCCLabel +from mmpose.datasets import (AicDataset, CocoWholeBodyDataset, COFWDataset, + CombinedDataset, CrowdPoseDataset, + Face300WDataset, GenerateTarget, + GetBBoxCenterScale, HalpeDataset, + HumanArt21Dataset, InterHand2DDoubleDataset, + JhmdbDataset, KeypointConverter, LapaDataset, + LoadImage, MpiiDataset, PackPoseInputs, + PoseTrack18Dataset, RandomFlip, RandomHalfBody, + TopdownAffine, UBody2dDataset, WFLWDataset) +from mmpose.datasets.transforms.common_transforms import ( + Albumentation, PhotometricDistortion, RandomBBoxTransform) +from mmpose.engine.hooks import ExpMomentumEMA +from mmpose.evaluation import CocoWholeBodyMetric +from mmpose.models import (CSPNeXt, CSPNeXtPAFPN, KLDiscretLoss, + PoseDataPreprocessor, RTMWHead, + TopdownPoseEstimator) + +# common setting +num_keypoints = 133 +input_size = (192, 256) + +# runtime +max_epochs = 270 +stage2_num_epochs = 10 +base_lr = 5e-4 +train_batch_size = 704 +val_batch_size = 32 + +train_cfg.update(max_epochs=max_epochs, val_interval=10) # noqa +randomness = dict(seed=21) + +# optimizer +optim_wrapper = dict( + type=OptimWrapper, + optimizer=dict(type=AdamW, lr=base_lr, weight_decay=0.05), + clip_grad=dict(max_norm=35, norm_type=2), + paramwise_cfg=dict( + norm_decay_mult=0, bias_decay_mult=0, bypass_duplicate=True)) + +# learning rate +param_scheduler = [ + dict( + type=LinearLR, start_factor=1.0e-5, by_epoch=False, begin=0, end=1000), + dict( + type=CosineAnnealingLR, + eta_min=base_lr * 0.05, + begin=max_epochs // 2, + end=max_epochs, + T_max=max_epochs // 2, + by_epoch=True, + convert_to_iter_based=True), +] + +# automatically scaling LR based on the actual training batch size +auto_scale_lr = dict(base_batch_size=5632) + +# codec settings +codec = dict( + type=SimCCLabel, + input_size=input_size, + sigma=(4.9, 5.66), + simcc_split_ratio=2.0, + normalize=False, + use_dark=False) + +# model settings +model = dict( + type=TopdownPoseEstimator, + data_preprocessor=dict( + type=PoseDataPreprocessor, + mean=[123.675, 116.28, 103.53], + std=[58.395, 57.12, 57.375], + bgr_to_rgb=True), + backbone=dict( + type=CSPNeXt, + arch='P5', + expand_ratio=0.5, + deepen_factor=1.33, + widen_factor=1.25, + channel_attention=True, + norm_cfg=dict(type='BN'), + act_cfg=dict(type=SiLU), + init_cfg=dict( + type=PretrainedInit, + prefix='backbone.', + checkpoint='https://download.openmmlab.com/mmpose/v1/' + 'wholebody_2d_keypoint/rtmpose/ubody/rtmpose-x_simcc-ucoco_pt-aic-coco_270e-256x192-05f5bcb7_20230822.pth' # noqa + )), + neck=dict( + type=CSPNeXtPAFPN, + in_channels=[320, 640, 1280], + out_channels=None, + out_indices=( + 1, + 2, + ), + num_csp_blocks=2, + expand_ratio=0.5, + norm_cfg=dict(type=SyncBatchNorm), + act_cfg=dict(type=SiLU, inplace=True)), + head=dict( + type=RTMWHead, + in_channels=1280, + out_channels=num_keypoints, + input_size=input_size, + in_featuremap_size=tuple([s // 32 for s in input_size]), + simcc_split_ratio=codec['simcc_split_ratio'], + final_layer_kernel_size=7, + gau_cfg=dict( + hidden_dims=256, + s=128, + expansion_factor=2, + dropout_rate=0., + drop_path=0., + act_fn=SiLU, + use_rel_bias=False, + pos_enc=False), + loss=dict( + type=KLDiscretLoss, + use_target_weight=True, + beta=10., + label_softmax=True), + decoder=codec), + test_cfg=dict(flip_test=True)) + +# base dataset settings +dataset_type = CocoWholeBodyDataset +data_mode = 'topdown' +data_root = 'data/' + +backend_args = dict(backend='local') + +# pipelines +train_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=RandomFlip, direction='horizontal'), + dict(type=RandomHalfBody), + dict(type=RandomBBoxTransform, scale_factor=[0.5, 1.5], rotate_factor=90), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict(type=PhotometricDistortion), + dict( + type=Albumentation, + transforms=[ + dict(type=Blur, p=0.1), + dict(type=MedianBlur, p=0.1), + dict( + type=CoarseDropout, + max_holes=1, + max_height=0.4, + max_width=0.4, + min_holes=1, + min_height=0.2, + min_width=0.2, + p=1.0), + ]), + dict( + type=GenerateTarget, encoder=codec, use_dataset_keypoint_weights=True), + dict(type=PackPoseInputs) +] +val_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict(type=PackPoseInputs) +] + +train_pipeline_stage2 = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict(type=RandomFlip, direction='horizontal'), + dict(type=RandomHalfBody), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[0.5, 1.5], + rotate_factor=90), + dict(type=TopdownAffine, input_size=codec['input_size']), + dict( + type=Albumentation, + transforms=[ + dict(type=Blur, p=0.1), + dict(type=MedianBlur, p=0.1), + ]), + dict( + type=GenerateTarget, encoder=codec, use_dataset_keypoint_weights=True), + dict(type=PackPoseInputs) +] + +# mapping + +aic_coco133 = [(0, 6), (1, 8), (2, 10), (3, 5), (4, 7), (5, 9), (6, 12), + (7, 14), (8, 16), (9, 11), (10, 13), (11, 15)] + +crowdpose_coco133 = [(0, 5), (1, 6), (2, 7), (3, 8), (4, 9), (5, 10), (6, 11), + (7, 12), (8, 13), (9, 14), (10, 15), (11, 16)] + +mpii_coco133 = [ + (0, 16), + (1, 14), + (2, 12), + (3, 11), + (4, 13), + (5, 15), + (8, 18), + (9, 17), + (10, 10), + (11, 8), + (12, 6), + (13, 5), + (14, 7), + (15, 9), +] + +jhmdb_coco133 = [ + (0, 18), + (2, 17), + (3, 6), + (4, 5), + (5, 12), + (6, 11), + (7, 8), + (8, 7), + (9, 14), + (10, 13), + (11, 10), + (12, 9), + (13, 16), + (14, 15), +] + +halpe_coco133 = [(i, i) + for i in range(17)] + [(20, 17), (21, 20), (22, 18), (23, 21), + (24, 19), + (25, 22)] + [(i, i - 3) + for i in range(26, 136)] + +posetrack_coco133 = [ + (0, 0), + (2, 17), + (3, 3), + (4, 4), + (5, 5), + (6, 6), + (7, 7), + (8, 8), + (9, 9), + (10, 10), + (11, 11), + (12, 12), + (13, 13), + (14, 14), + (15, 15), + (16, 16), +] + +humanart_coco133 = [(i, i) for i in range(17)] + [(17, 99), (18, 120), + (19, 17), (20, 20)] + +# train datasets +dataset_coco = dict( + type=dataset_type, + data_root=data_root, + data_mode=data_mode, + ann_file='coco/annotations/coco_wholebody_train_v1.0.json', + data_prefix=dict(img='detection/coco/train2017/'), + pipeline=[], +) + +dataset_aic = dict( + type=AicDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='aic/annotations/aic_train.json', + data_prefix=dict(img='pose/ai_challenge/ai_challenger_keypoint' + '_train_20170902/keypoint_train_images_20170902/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=aic_coco133) + ], +) + +dataset_crowdpose = dict( + type=CrowdPoseDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='crowdpose/annotations/mmpose_crowdpose_trainval.json', + data_prefix=dict(img='pose/CrowdPose/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=crowdpose_coco133) + ], +) + +dataset_mpii = dict( + type=MpiiDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='mpii/annotations/mpii_train.json', + data_prefix=dict(img='pose/MPI/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=mpii_coco133) + ], +) + +dataset_jhmdb = dict( + type=JhmdbDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='jhmdb/annotations/Sub1_train.json', + data_prefix=dict(img='pose/JHMDB/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=jhmdb_coco133) + ], +) + +dataset_halpe = dict( + type=HalpeDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='halpe/annotations/halpe_train_v1.json', + data_prefix=dict(img='pose/Halpe/hico_20160224_det/images/train2015'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=halpe_coco133) + ], +) + +dataset_posetrack = dict( + type=PoseTrack18Dataset, + data_root=data_root, + data_mode=data_mode, + ann_file='posetrack18/annotations/posetrack18_train.json', + data_prefix=dict(img='pose/PoseChallenge2018/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=posetrack_coco133) + ], +) + +dataset_humanart = dict( + type=HumanArt21Dataset, + data_root=data_root, + data_mode=data_mode, + ann_file='HumanArt/annotations/training_humanart.json', + filter_cfg=dict(scenes=['real_human']), + data_prefix=dict(img='pose/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=humanart_coco133) + ]) + +ubody_scenes = [ + 'Magic_show', 'Entertainment', 'ConductMusic', 'Online_class', 'TalkShow', + 'Speech', 'Fitness', 'Interview', 'Olympic', 'TVShow', 'Singing', + 'SignLanguage', 'Movie', 'LiveVlog', 'VideoConference' +] + +ubody_datasets = [] +for scene in ubody_scenes: + each = dict( + type=UBody2dDataset, + data_root=data_root, + data_mode=data_mode, + ann_file=f'Ubody/annotations/{scene}/train_annotations.json', + data_prefix=dict(img='pose/UBody/images/'), + pipeline=[], + sample_interval=10) + ubody_datasets.append(each) + +dataset_ubody = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/ubody2d.py'), + datasets=ubody_datasets, + pipeline=[], + test_mode=False, +) + +face_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale, padding=1.25), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[1.5, 2.0], + rotate_factor=0), +] + +wflw_coco133 = [(i * 2, 23 + i) + for i in range(17)] + [(33 + i, 40 + i) for i in range(5)] + [ + (42 + i, 45 + i) for i in range(5) + ] + [(51 + i, 50 + i) + for i in range(9)] + [(60, 59), (61, 60), (63, 61), + (64, 62), (65, 63), (67, 64), + (68, 65), (69, 66), (71, 67), + (72, 68), (73, 69), + (75, 70)] + [(76 + i, 71 + i) + for i in range(20)] +dataset_wflw = dict( + type=WFLWDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='wflw/annotations/face_landmarks_wflw_train.json', + data_prefix=dict(img='pose/WFLW/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=wflw_coco133), *face_pipeline + ], +) + +mapping_300w_coco133 = [(i, 23 + i) for i in range(68)] +dataset_300w = dict( + type=Face300WDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='300w/annotations/face_landmarks_300w_train.json', + data_prefix=dict(img='pose/300w/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=mapping_300w_coco133), *face_pipeline + ], +) + +cofw_coco133 = [(0, 40), (2, 44), (4, 42), (1, 49), (3, 45), (6, 47), (8, 59), + (10, 62), (9, 68), (11, 65), (18, 54), (19, 58), (20, 53), + (21, 56), (22, 71), (23, 77), (24, 74), (25, 85), (26, 89), + (27, 80), (28, 31)] +dataset_cofw = dict( + type=COFWDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='cofw/annotations/cofw_train.json', + data_prefix=dict(img='pose/COFW/images/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=cofw_coco133), *face_pipeline + ], +) + +lapa_coco133 = [(i * 2, 23 + i) for i in range(17)] + [ + (33 + i, 40 + i) for i in range(5) +] + [(42 + i, 45 + i) for i in range(5)] + [ + (51 + i, 50 + i) for i in range(4) +] + [(58 + i, 54 + i) for i in range(5)] + [(66, 59), (67, 60), (69, 61), + (70, 62), (71, 63), (73, 64), + (75, 65), (76, 66), (78, 67), + (79, 68), (80, 69), + (82, 70)] + [(84 + i, 71 + i) + for i in range(20)] +dataset_lapa = dict( + type=LapaDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='LaPa/annotations/lapa_trainval.json', + data_prefix=dict(img='pose/LaPa/'), + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=lapa_coco133), *face_pipeline + ], +) + +dataset_wb = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[dataset_coco, dataset_halpe, dataset_ubody], + pipeline=[], + test_mode=False, +) + +dataset_body = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[ + dataset_aic, + dataset_crowdpose, + dataset_mpii, + dataset_jhmdb, + dataset_posetrack, + dataset_humanart, + ], + pipeline=[], + test_mode=False, +) + +dataset_face = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[ + dataset_wflw, + dataset_300w, + dataset_cofw, + dataset_lapa, + ], + pipeline=[], + test_mode=False, +) + +hand_pipeline = [ + dict(type=LoadImage, backend_args=backend_args), + dict(type=GetBBoxCenterScale), + dict( + type=RandomBBoxTransform, + shift_factor=0., + scale_factor=[1.5, 2.0], + rotate_factor=0), +] + +interhand_left = [(21, 95), (22, 94), (23, 93), (24, 92), (25, 99), (26, 98), + (27, 97), (28, 96), (29, 103), (30, 102), (31, 101), + (32, 100), (33, 107), (34, 106), (35, 105), (36, 104), + (37, 111), (38, 110), (39, 109), (40, 108), (41, 91)] +interhand_right = [(i - 21, j + 21) for i, j in interhand_left] +interhand_coco133 = interhand_right + interhand_left + +dataset_interhand2d = dict( + type=InterHand2DDoubleDataset, + data_root=data_root, + data_mode=data_mode, + ann_file='interhand26m/annotations/all/InterHand2.6M_train_data.json', + camera_param_file='interhand26m/annotations/all/' + 'InterHand2.6M_train_camera.json', + joint_file='interhand26m/annotations/all/' + 'InterHand2.6M_train_joint_3d.json', + data_prefix=dict(img='interhand2.6m/images/train/'), + sample_interval=10, + pipeline=[ + dict( + type=KeypointConverter, + num_keypoints=num_keypoints, + mapping=interhand_coco133, + ), *hand_pipeline + ], +) + +dataset_hand = dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=[dataset_interhand2d], + pipeline=[], + test_mode=False, +) + +train_datasets = [dataset_wb, dataset_body, dataset_face, dataset_hand] + +# data loaders +train_dataloader = dict( + batch_size=train_batch_size, + num_workers=4, + pin_memory=False, + persistent_workers=True, + sampler=dict(type=DefaultSampler, shuffle=True), + dataset=dict( + type=CombinedDataset, + metainfo=dict(from_file='configs/_base_/datasets/coco_wholebody.py'), + datasets=train_datasets, + pipeline=train_pipeline, + test_mode=False, + )) + +val_dataloader = dict( + batch_size=val_batch_size, + num_workers=4, + persistent_workers=True, + drop_last=False, + sampler=dict(type=DefaultSampler, shuffle=False, round_up=False), + dataset=dict( + type=CocoWholeBodyDataset, + ann_file='data/coco/annotations/coco_wholebody_val_v1.0.json', + data_prefix=dict(img='data/detection/coco/val2017/'), + pipeline=val_pipeline, + bbox_file='data/coco/person_detection_results/' + 'COCO_val2017_detections_AP_H_56_person.json', + test_mode=True)) + +test_dataloader = val_dataloader + +# hooks +default_hooks.update( # noqa + checkpoint=dict( + save_best='coco-wholebody/AP', rule='greater', max_keep_ckpts=1)) + +custom_hooks = [ + dict( + type=EMAHook, + ema_type=ExpMomentumEMA, + momentum=0.0002, + update_buffers=True, + priority=49), + dict( + type=PipelineSwitchHook, + switch_epoch=max_epochs - stage2_num_epochs, + switch_pipeline=train_pipeline_stage2) +] + +# evaluators +val_evaluator = dict( + type=CocoWholeBodyMetric, + ann_file='data/coco/annotations/coco_wholebody_val_v1.0.json') +test_evaluator = val_evaluator diff --git a/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw_cocktail13.md b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw_cocktail13.md new file mode 100644 index 0000000000..54e75383ba --- /dev/null +++ b/configs/wholebody_2d_keypoint/rtmpose/cocktail13/rtmw_cocktail13.md @@ -0,0 +1,76 @@ + + + \

diff --git a/demo/docs/en/2d_face_demo.md b/demo/docs/en/2d_face_demo.md

index 9c60f68487..4e8dd70684 100644

--- a/demo/docs/en/2d_face_demo.md

+++ b/demo/docs/en/2d_face_demo.md

@@ -23,15 +23,15 @@ Take [aflw model](https://download.openmmlab.com/mmpose/face/hrnetv2/hrnetv2_w18

python demo/topdown_demo_with_mmdet.py \

demo/mmdetection_cfg/yolox-s_8xb8-300e_coco-face.py \

https://download.openmmlab.com/mmpose/mmdet_pretrained/yolo-x_8xb8-300e_coco-face_13274d7c.pth \

- configs/face_2d_keypoint/topdown_heatmap/aflw/td-hm_hrnetv2-w18_8xb64-60e_aflw-256x256.py \

- https://download.openmmlab.com/mmpose/face/hrnetv2/hrnetv2_w18_aflw_256x256-f2bbc62b_20210125.pth \

+ configs/face_2d_keypoint/rtmpose/face6/rtmpose-m_8xb256-120e_face6-256x256.py \

+ https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth \

--input tests/data/cofw/001766.jpg \

--show --draw-heatmap

```

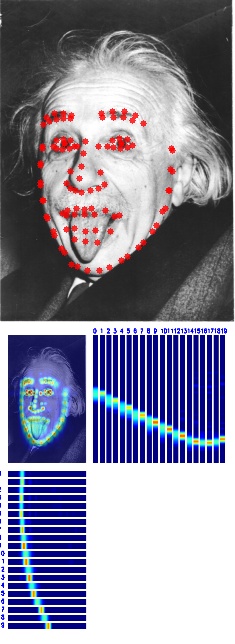

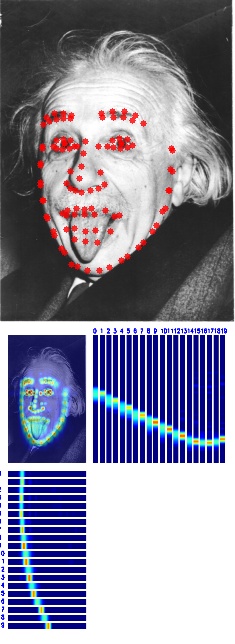

Visualization result:

-

+

If you use a heatmap-based model and set argument `--draw-heatmap`, the predicted heatmap will be visualized together with the keypoints. @@ -41,8 +41,8 @@ To save visualized results on disk: python demo/topdown_demo_with_mmdet.py \ demo/mmdetection_cfg/yolox-s_8xb8-300e_coco-face.py \ https://download.openmmlab.com/mmpose/mmdet_pretrained/yolo-x_8xb8-300e_coco-face_13274d7c.pth \ - configs/face_2d_keypoint/topdown_heatmap/aflw/td-hm_hrnetv2-w18_8xb64-60e_aflw-256x256.py \ - https://download.openmmlab.com/mmpose/face/hrnetv2/hrnetv2_w18_aflw_256x256-f2bbc62b_20210125.pth \ + configs/face_2d_keypoint/rtmpose/face6/rtmpose-m_8xb256-120e_face6-256x256.py \ + https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth \ --input tests/data/cofw/001766.jpg \ --draw-heatmap --output-root vis_results ``` @@ -55,8 +55,8 @@ To run demos on CPU: python demo/topdown_demo_with_mmdet.py \ demo/mmdetection_cfg/yolox-s_8xb8-300e_coco-face.py \ https://download.openmmlab.com/mmpose/mmdet_pretrained/yolo-x_8xb8-300e_coco-face_13274d7c.pth \ - configs/face_2d_keypoint/topdown_heatmap/aflw/td-hm_hrnetv2-w18_8xb64-60e_aflw-256x256.py \ - https://download.openmmlab.com/mmpose/face/hrnetv2/hrnetv2_w18_aflw_256x256-f2bbc62b_20210125.pth \ + configs/face_2d_keypoint/rtmpose/face6/rtmpose-m_8xb256-120e_face6-256x256.py \ + https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth \ --input tests/data/cofw/001766.jpg \ --show --draw-heatmap --device=cpu ``` @@ -69,13 +69,13 @@ Videos share the same interface with images. The difference is that the `${INPUT python demo/topdown_demo_with_mmdet.py \ demo/mmdetection_cfg/yolox-s_8xb8-300e_coco-face.py \ https://download.openmmlab.com/mmpose/mmdet_pretrained/yolo-x_8xb8-300e_coco-face_13274d7c.pth \ - configs/face_2d_keypoint/topdown_heatmap/aflw/td-hm_hrnetv2-w18_8xb64-60e_aflw-256x256.py \ - https://download.openmmlab.com/mmpose/face/hrnetv2/hrnetv2_w18_aflw_256x256-f2bbc62b_20210125.pth \ + configs/face_2d_keypoint/rtmpose/face6/rtmpose-m_8xb256-120e_face6-256x256.py \ + https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-face6_pt-in1k_120e-256x256-72a37400_20230529.pth \ --input demo/resources/ \

- --show --draw-heatmap --output-root vis_results

+ --show --output-root vis_results --radius 1

```

-

+

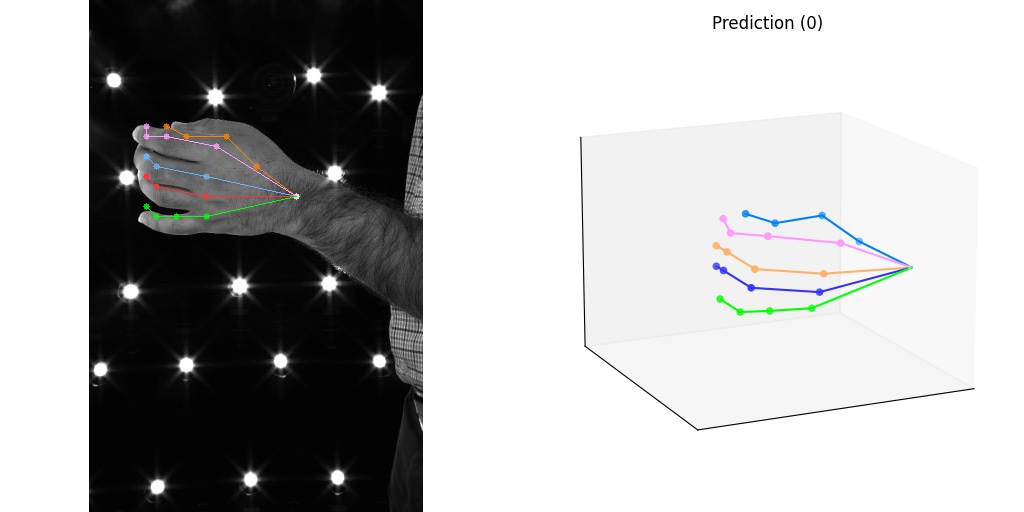

The original video can be downloaded from [Google Drive](https://drive.google.com/file/d/1kQt80t6w802b_vgVcmiV_QfcSJ3RWzmb/view?usp=sharing). diff --git a/demo/docs/en/2d_hand_demo.md b/demo/docs/en/2d_hand_demo.md index f47b3695e3..cea74e2be4 100644 --- a/demo/docs/en/2d_hand_demo.md +++ b/demo/docs/en/2d_hand_demo.md @@ -21,17 +21,17 @@ Take [onehand10k model](https://download.openmmlab.com/mmpose/hand/hrnetv2/hrnet ```shell python demo/topdown_demo_with_mmdet.py \ - demo/mmdetection_cfg/cascade_rcnn_x101_64x4d_fpn_1class.py \ - https://download.openmmlab.com/mmpose/mmdet_pretrained/cascade_rcnn_x101_64x4d_fpn_20e_onehand10k-dac19597_20201030.pth \ - configs/hand_2d_keypoint/topdown_heatmap/onehand10k/td-hm_hrnetv2-w18_8xb64-210e_onehand10k-256x256.py \ - https://download.openmmlab.com/mmpose/hand/hrnetv2/hrnetv2_w18_onehand10k_256x256-30bc9c6b_20210330.pth \ + demo/mmdetection_cfg/rtmdet_nano_320-8xb32_hand.py \ + https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmdet_nano_8xb32-300e_hand-267f9c8f.pth \ + configs/hand_2d_keypoint/rtmpose/hand5/rtmpose-m_8xb256-210e_hand5-256x256.py \ + https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-hand5_pt-aic-coco_210e-256x256-74fb594_20230320.pth \ --input tests/data/onehand10k/9.jpg \ --show --draw-heatmap ``` Visualization result: -

+