@@ -12,7 +14,7 @@ MMYOLO 中,将使用 MMEngine 提供的 `Visualizer` 可视化器进行特征

- 支持基础绘图接口以及特征图可视化。

- 支持选择模型中的不同层来得到特征图,包含 `squeeze_mean` , `select_max` , `topk` 三种显示方式,用户还可以使用 `arrangement` 自定义特征图显示的布局方式。

-## 特征图绘制

+### 特征图绘制

你可以调用 `demo/featmap_vis_demo.py` 来简单快捷地得到可视化结果,为了方便理解,将其主要参数的功能梳理如下:

@@ -50,7 +52,7 @@ MMYOLO 中,将使用 MMEngine 提供的 `Visualizer` 可视化器进行特征

**注意:当图片和特征图尺度不一样时候,`draw_featmap` 函数会自动进行上采样对齐。如果你的图片在推理过程中前处理存在类似 Pad 的操作此时得到的特征图也是 Pad 过的,那么直接上采样就可能会出现不对齐问题。**

-## 用法示例

+### 用法示例

以预训练好的 YOLOv5-s 模型为例:

@@ -167,7 +169,7 @@ python demo/featmap_vis_demo.py demo/dog.jpg \

```

-

+

(5) 存储绘制后的图片,在绘制完成后,可以选择本地窗口显示,也可以存储到本地,只需要加入参数 `--out-file xxx.jpg`:

@@ -180,3 +182,113 @@ python demo/featmap_vis_demo.py demo/dog.jpg \

--channel-reduction select_max \

--out-file featmap_backbone.jpg

```

+

+## Grad-Based 和 Grad-Free CAM 可视化

+

+目标检测 CAM 可视化相比于分类 CAM 复杂很多且差异很大。本文只是简要说明用法,后续会单独开文档详细描述实现原理和注意事项。

+

+你可以调用 `demo/boxmap_vis_demo.py` 来简单快捷地得到 Box 级别的 AM 可视化结果,目前已经支持 `YOLOv5/YOLOv6/YOLOX/RTMDet`。

+

+以 YOLOv5 为例,和特征图可视化绘制一样,你需要先修改 `test_pipeline`,否则会出现特征图和原图不对齐问题。

+

+旧的 `test_pipeline` 为:

+

+```python

+test_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ file_client_args=_base_.file_client_args),

+ dict(type='YOLOv5KeepRatioResize', scale=img_scale),

+ dict(

+ type='LetterResize',

+ scale=img_scale,

+ allow_scale_up=False,

+ pad_val=dict(img=114)),

+ dict(type='LoadAnnotations', with_bbox=True, _scope_='mmdet'),

+ dict(

+ type='mmdet.PackDetInputs',

+ meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

+ 'scale_factor', 'pad_param'))

+]

+```

+

+修改为如下配置:

+

+```python

+test_pipeline = [

+ dict(

+ type='LoadImageFromFile',

+ file_client_args=_base_.file_client_args),

+ dict(type='mmdet.Resize', scale=img_scale, keep_ratio=False), # 这里将 LetterResize 修改成 mmdet.Resize

+ dict(type='LoadAnnotations', with_bbox=True, _scope_='mmdet'),

+ dict(

+ type='mmdet.PackDetInputs',

+ meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

+ 'scale_factor'))

+]

+```

+

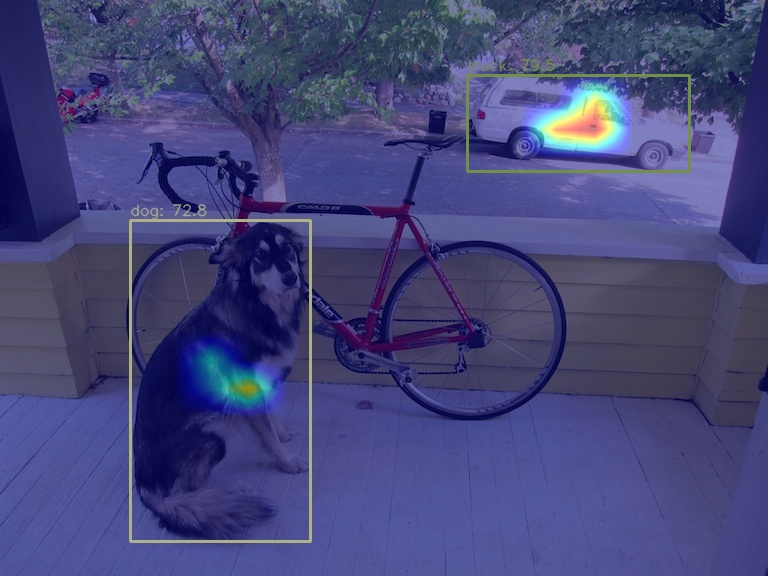

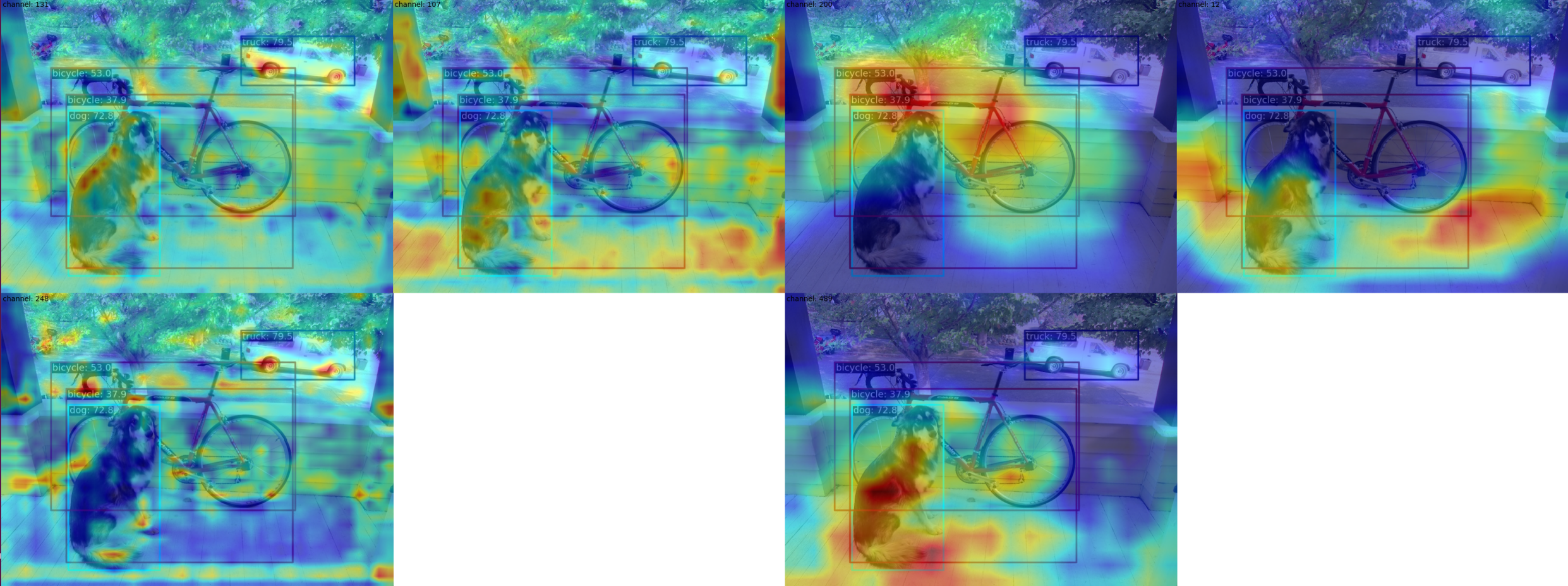

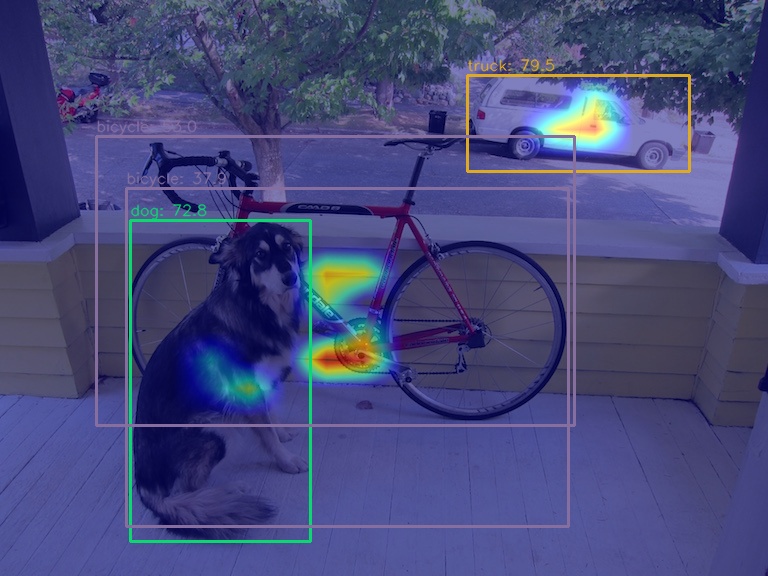

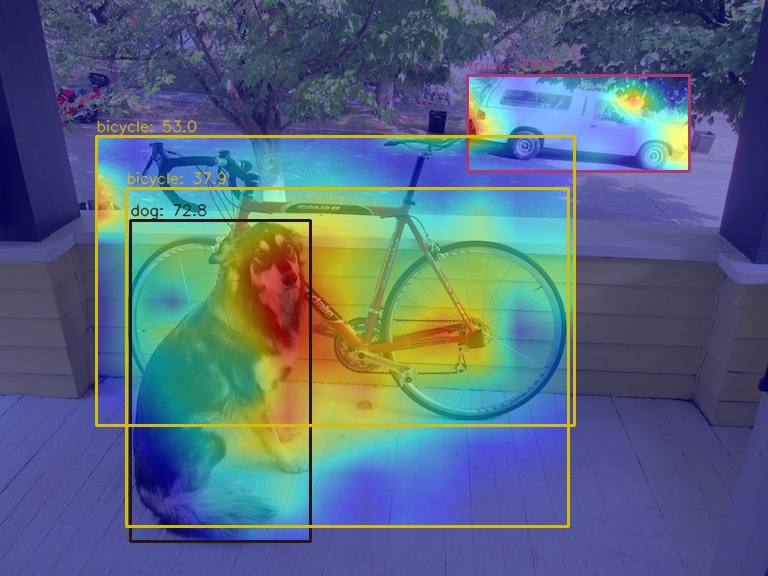

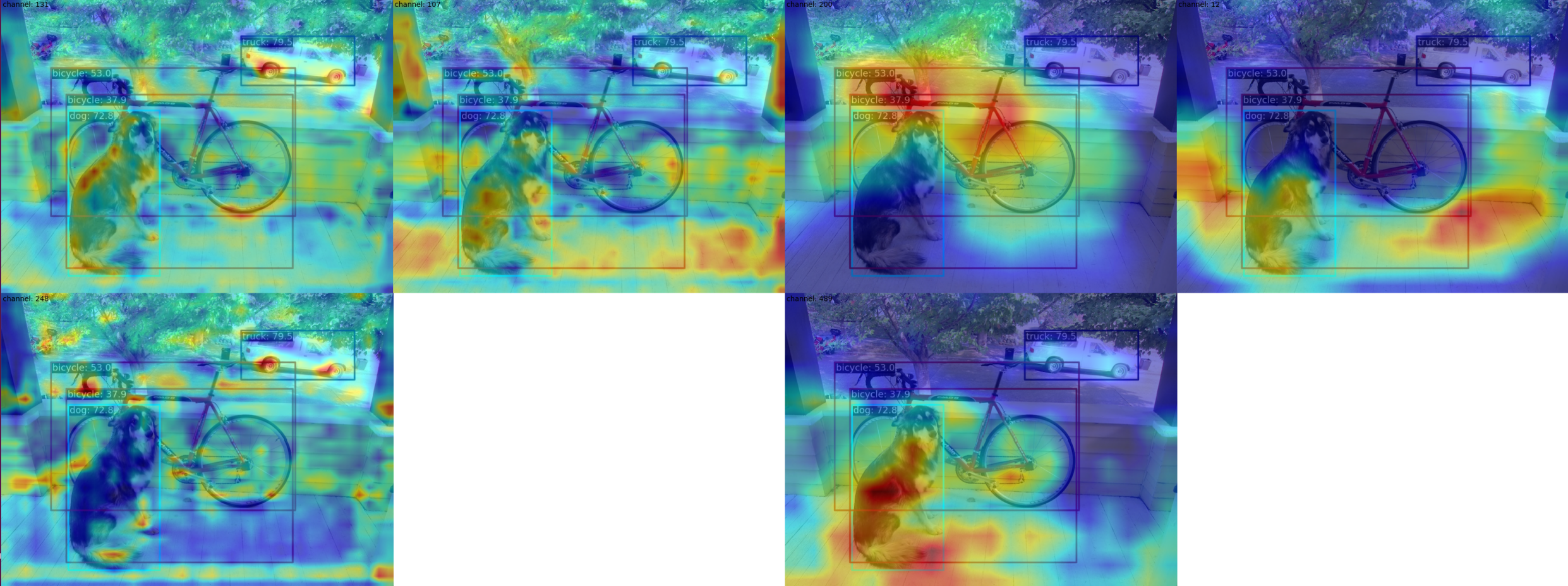

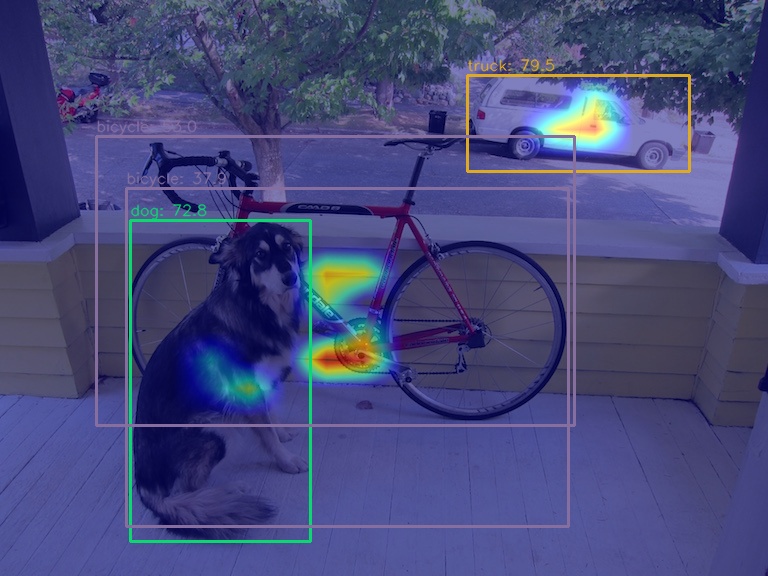

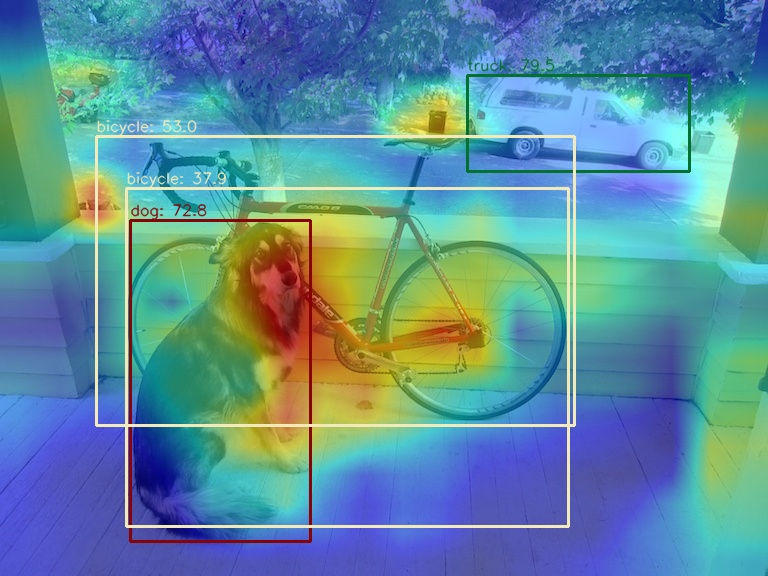

+(1) 使用 `GradCAM` 方法可视化 neck 模块的最后一个输出层的 AM 图

+

+```shell

+python demo/boxam_vis_demo.py \

+ demo/dog.jpg \

+ configs/yolov5/yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth

+

+```

+

+

+

+

+

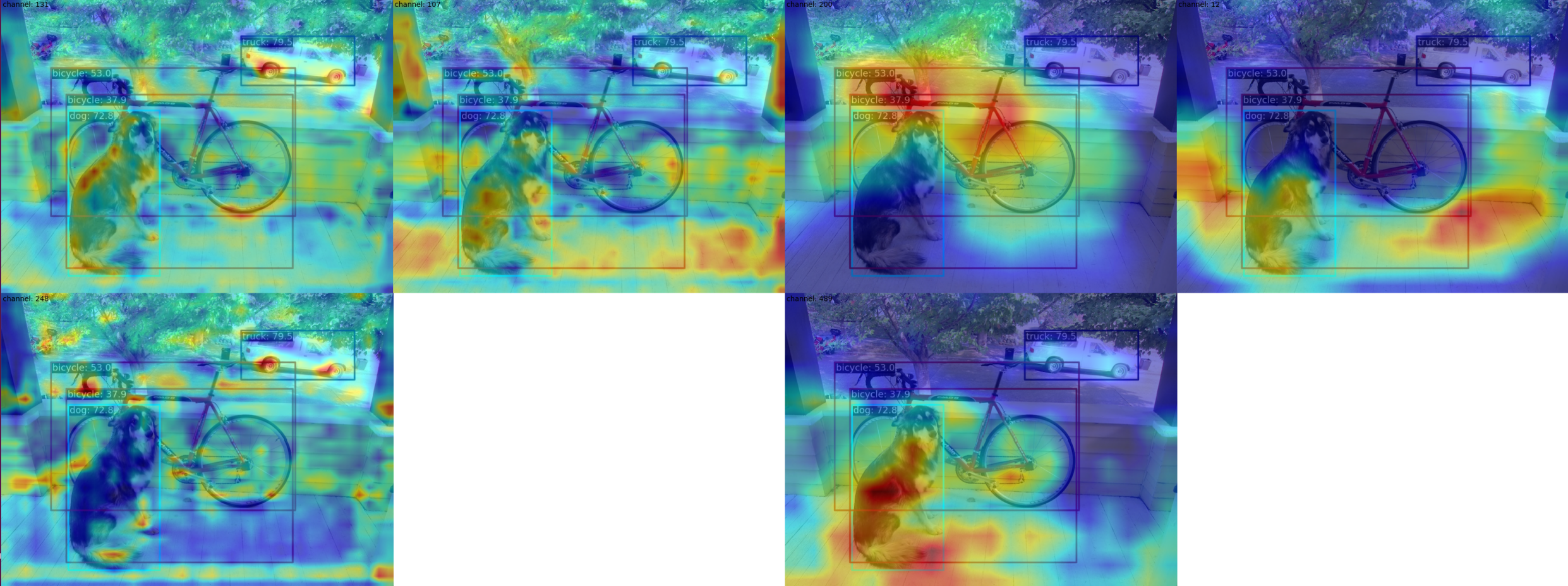

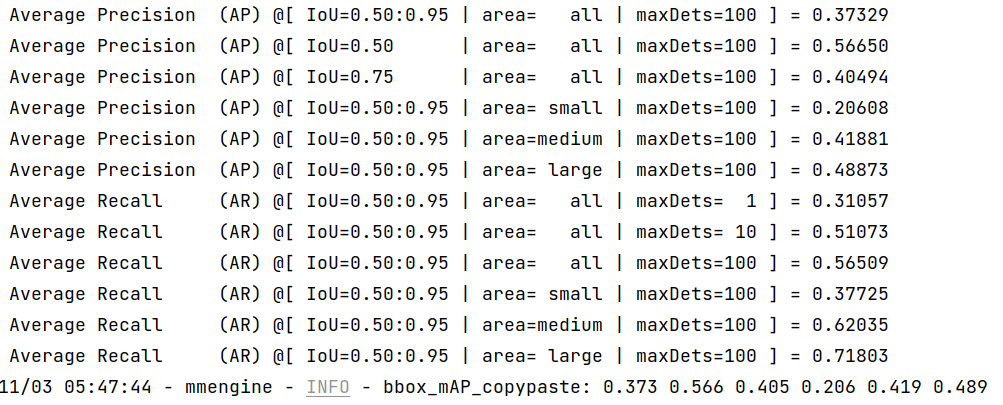

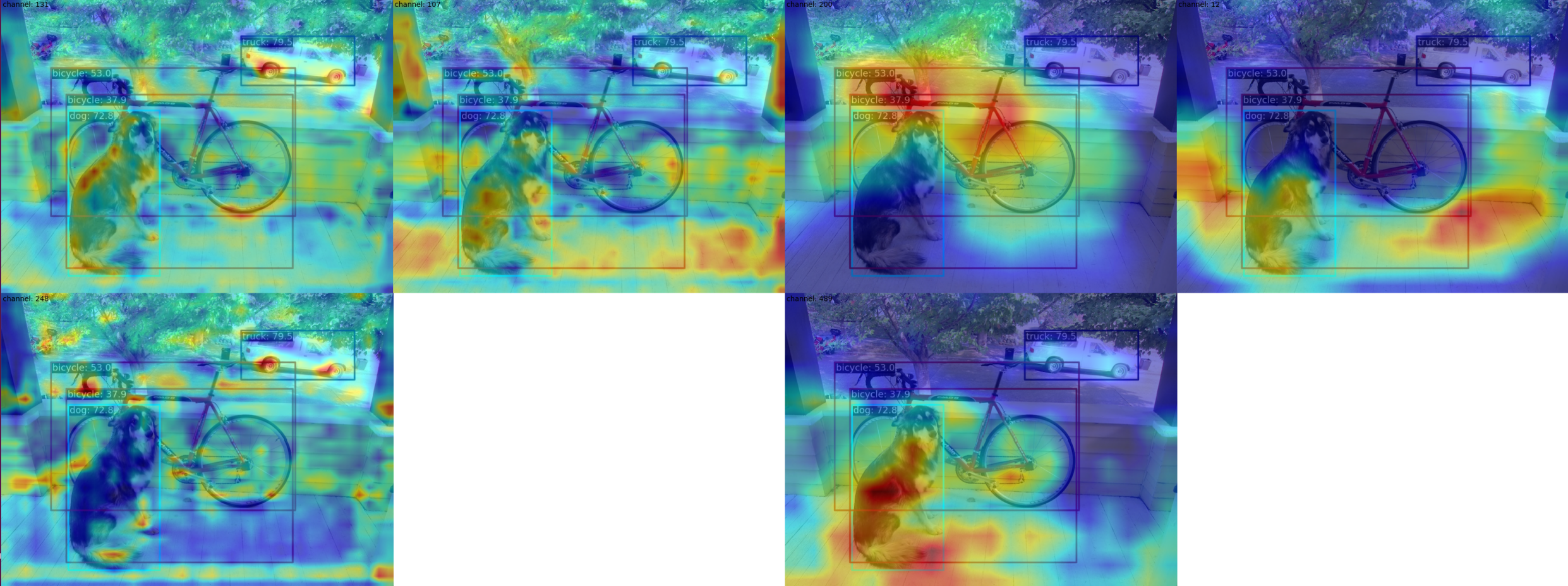

+相对应的特征图 AM 图如下:

+

+

+

+

+

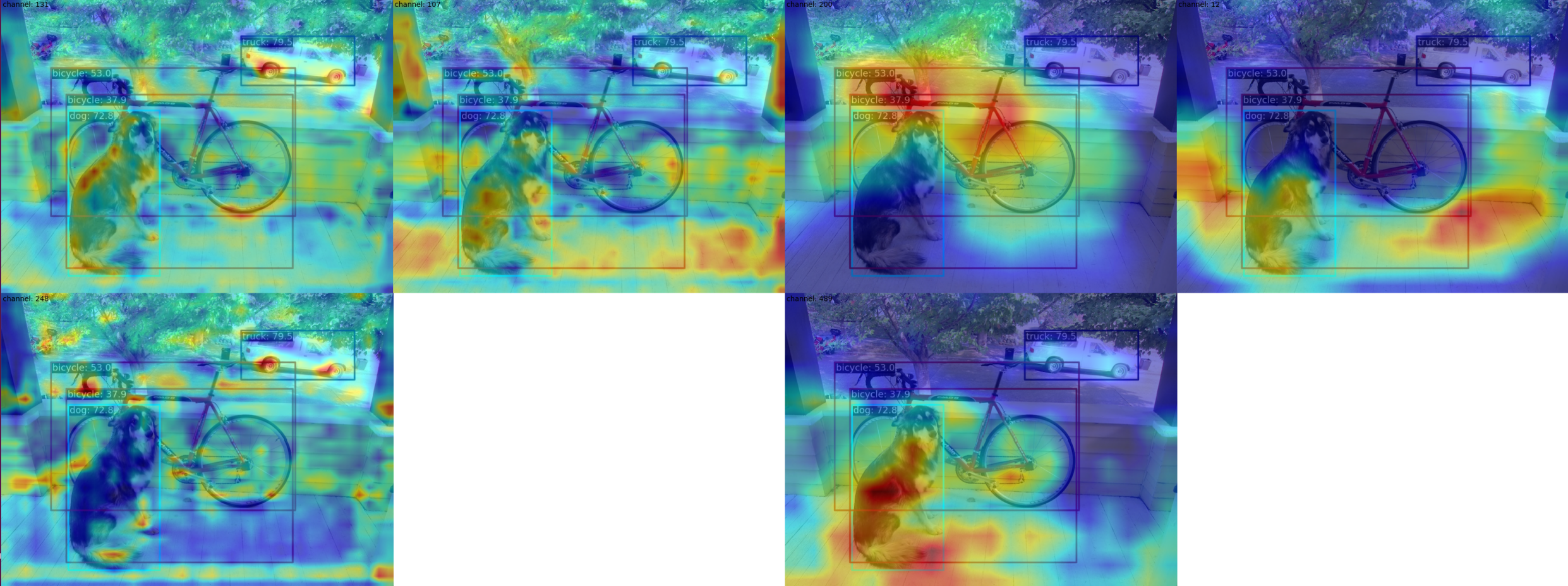

+可以看出 `GradCAM` 效果可以突出 box 级别的 AM 信息。

+

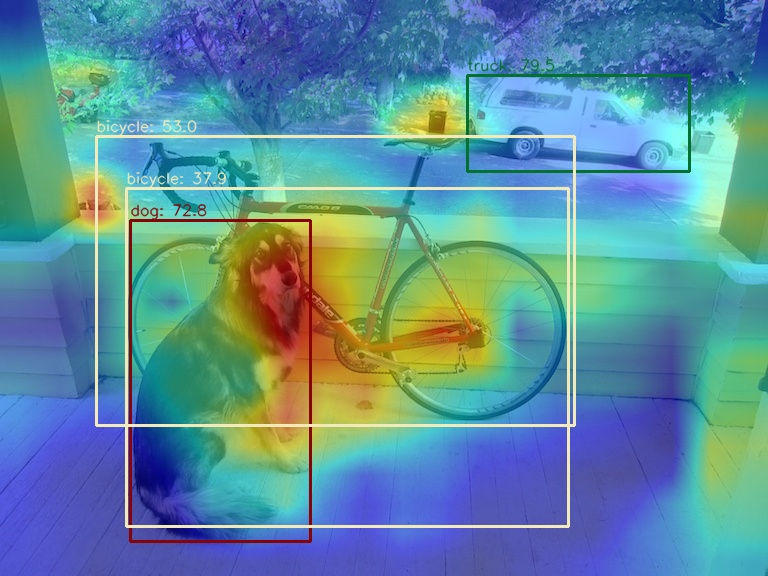

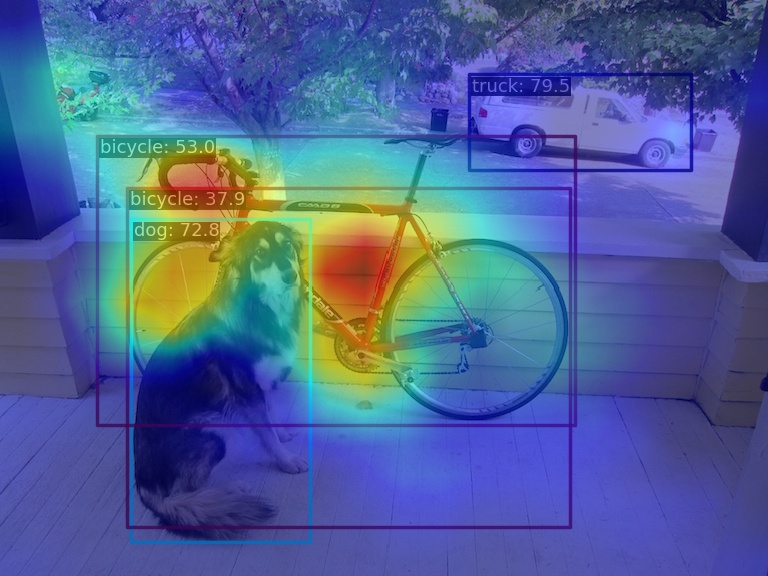

+你可以通过 `--topk` 参数选择仅仅可视化预测分值最高的前几个预测框

+

+```shell

+python demo/boxam_vis_demo.py \

+ demo/dog.jpg \

+ configs/yolov5/yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth \

+ --topk 2

+```

+

+

+

+

+

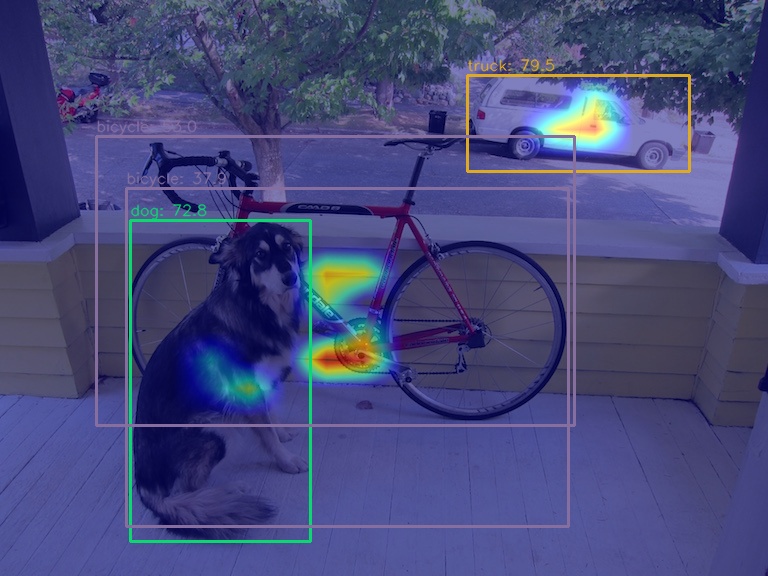

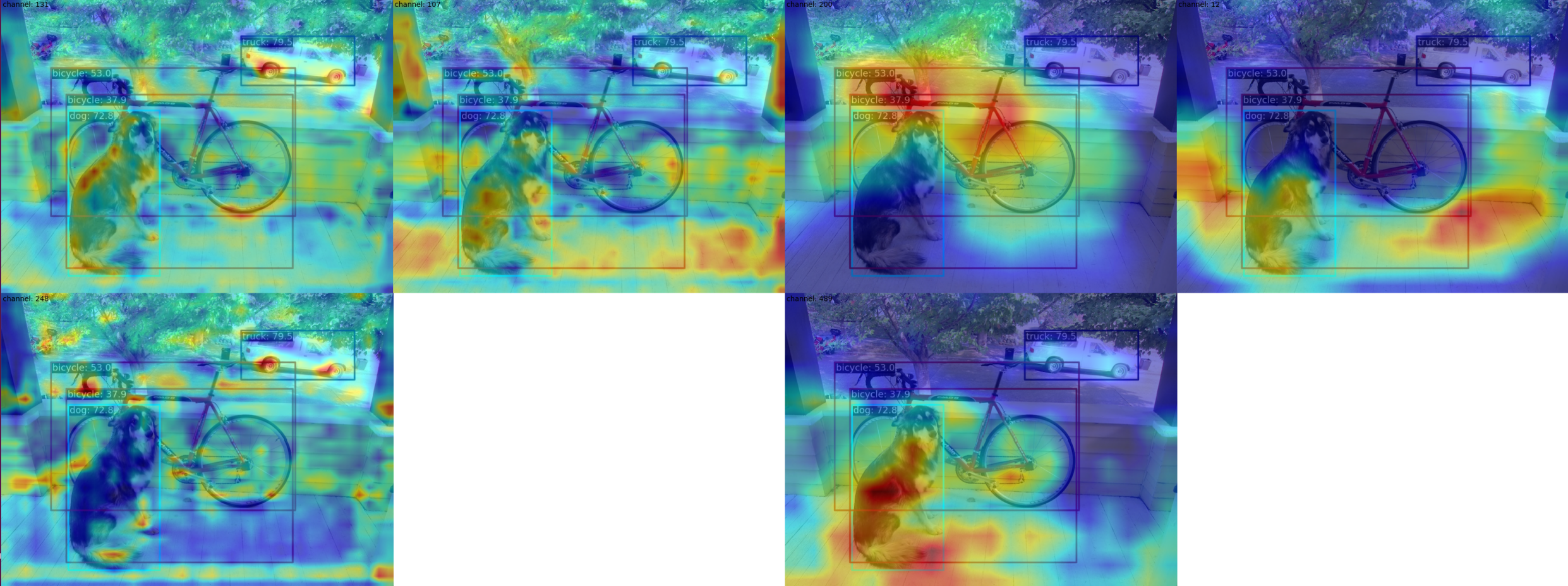

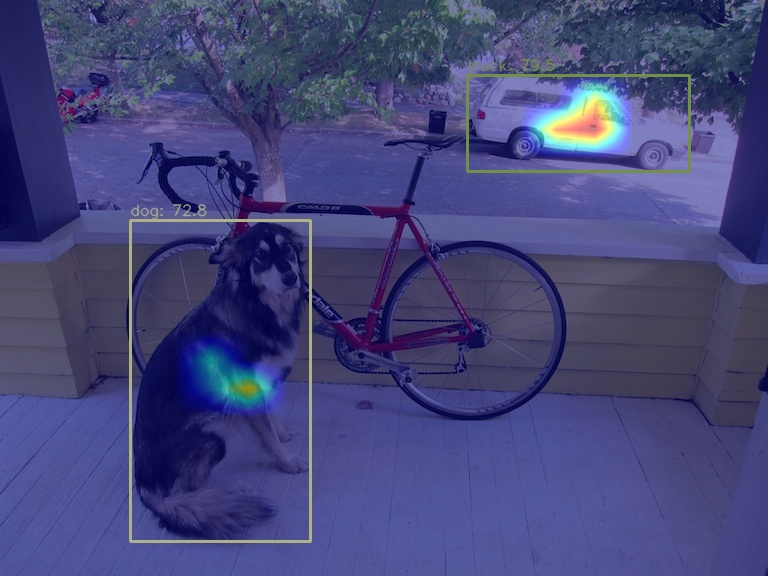

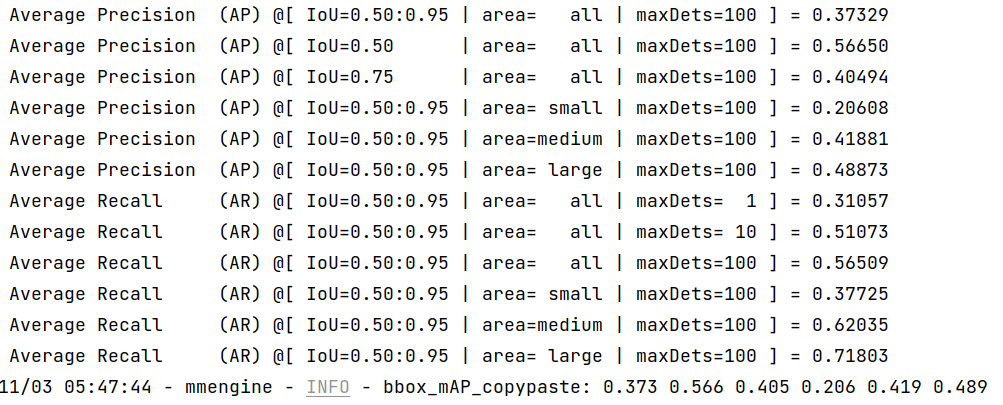

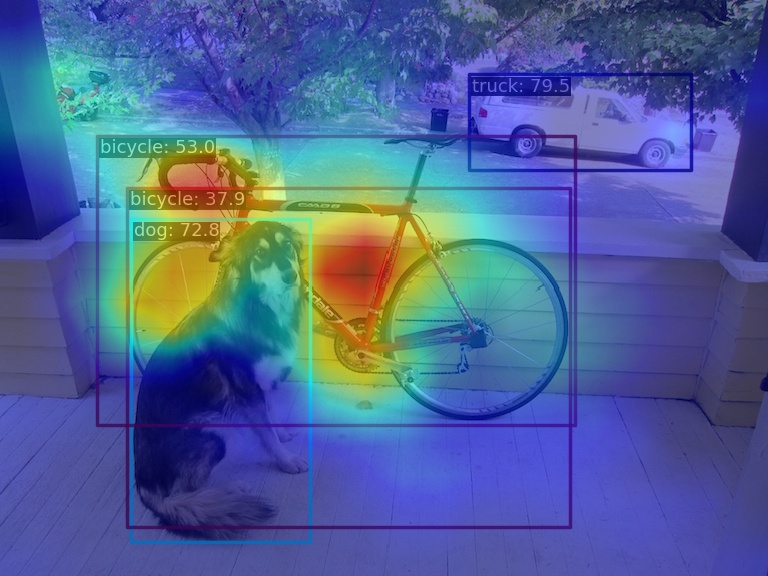

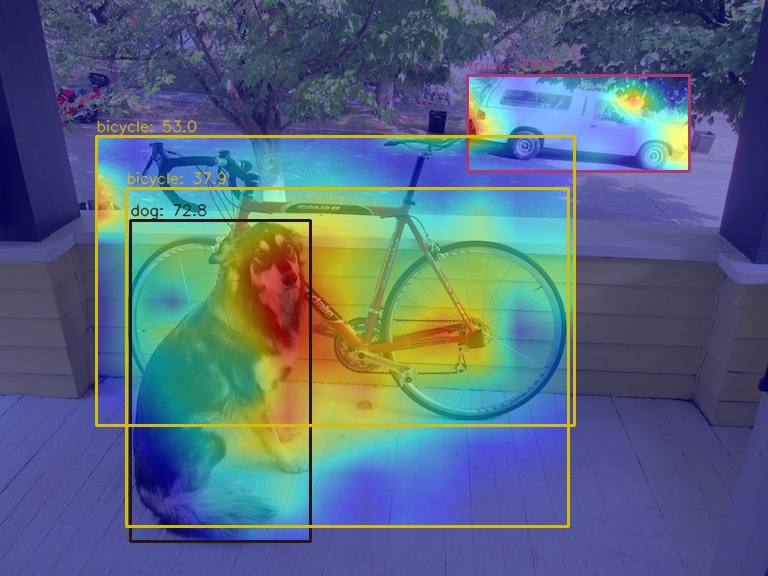

+(2) 使用 `AblationCAM` 方法可视化 neck 模块的最后一个输出层的 AM 图

+

+```shell

+python demo/boxam_vis_demo.py \

+ demo/dog.jpg \

+ configs/yolov5/yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth \

+ --method ablationcam

+```

+

+

+

+

+

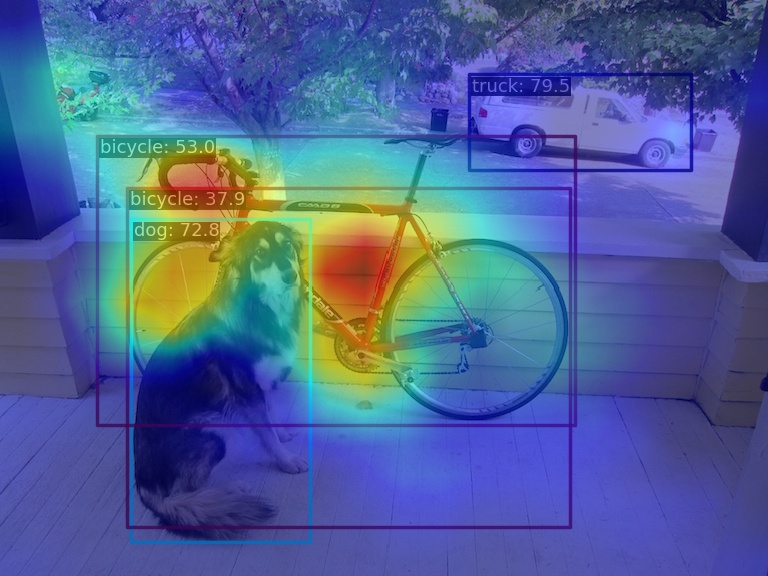

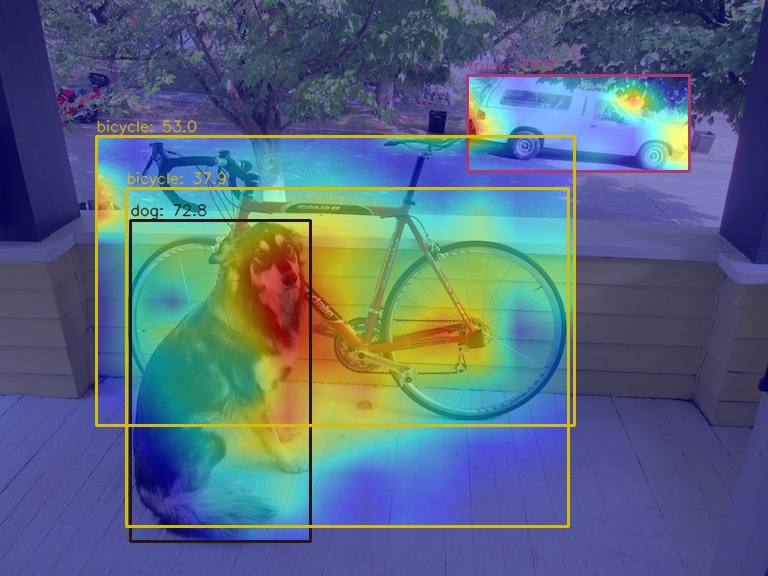

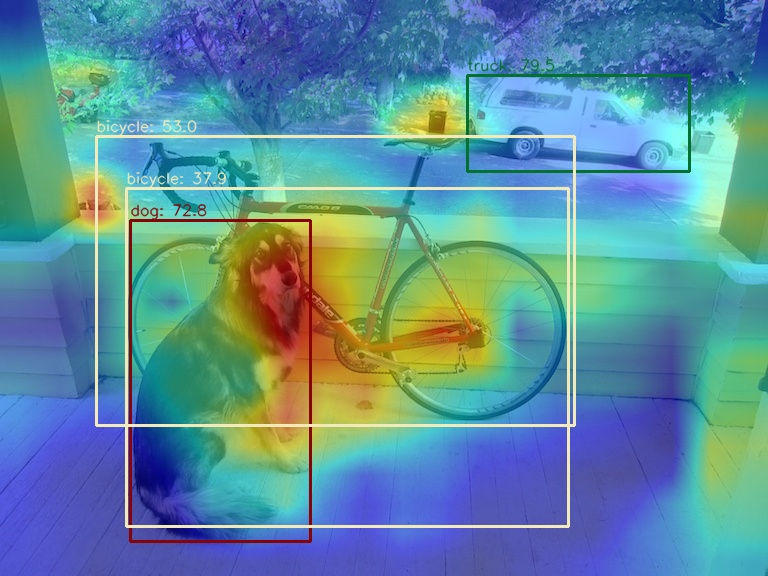

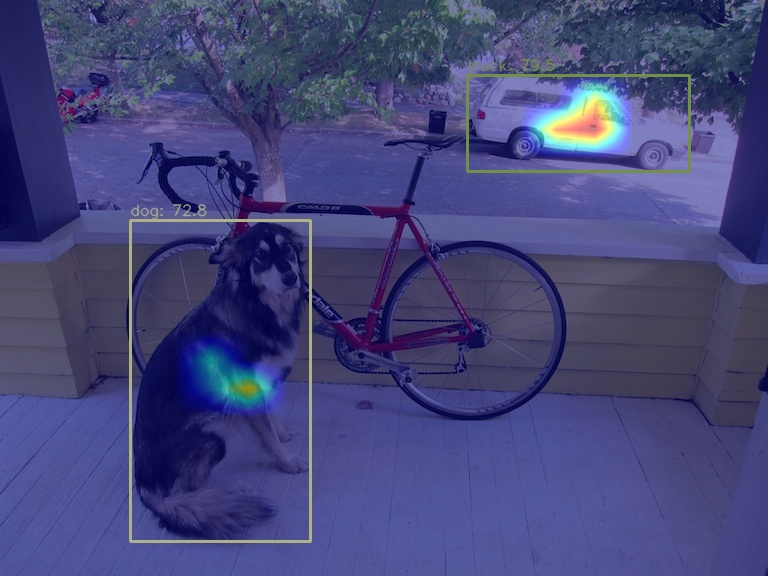

+由于 `AblationCAM` 是通过每个通道对分值的贡献程度来加权,因此无法实现类似 `GradCAM` 的仅仅可视化 box 级别的 AM 信息, 但是你可以使用 `--norm-in-bbox` 来仅仅显示 bbox 内部 AM

+

+```shell

+python demo/boxam_vis_demo.py \

+ demo/dog.jpg \

+ configs/yolov5/yolov5_s-v61_syncbn_fast_8xb16-300e_coco.py \

+ yolov5_s-v61_syncbn_fast_8xb16-300e_coco_20220918_084700-86e02187.pth \

+ --method ablationcam \

+ --norm-in-bbox

+```

+

+

+

+

diff --git a/docs/zh_cn/user_guides/yolov5_tutorial.md b/docs/zh_cn/user_guides/yolov5_tutorial.md

index 2cd7ccf68..20a24cbd9 100644

--- a/docs/zh_cn/user_guides/yolov5_tutorial.md

+++ b/docs/zh_cn/user_guides/yolov5_tutorial.md

@@ -30,7 +30,7 @@ mim install -v -e .

本文选取不到 40MB 大小的 balloon 气球数据集作为 MMYOLO 的学习数据集。

```shell

-python tools/misc/download_dataset.py --dataset-name balloon --save-dir data --unzip

+python tools/misc/download_dataset.py --dataset-name balloon --save-dir data --unzip

python tools/dataset_converters/balloon2coco.py

```

diff --git a/mmyolo/datasets/transforms/__init__.py b/mmyolo/datasets/transforms/__init__.py

index 2ff6ad7b0..842ad641a 100644

--- a/mmyolo/datasets/transforms/__init__.py

+++ b/mmyolo/datasets/transforms/__init__.py

@@ -1,10 +1,10 @@

# Copyright (c) OpenMMLab. All rights reserved.

-from .mix_img_transforms import Mosaic, YOLOv5MixUp, YOLOXMixUp

+from .mix_img_transforms import Mosaic, Mosaic9, YOLOv5MixUp, YOLOXMixUp

from .transforms import (LetterResize, LoadAnnotations, YOLOv5HSVRandomAug,

YOLOv5KeepRatioResize, YOLOv5RandomAffine)

__all__ = [

'YOLOv5KeepRatioResize', 'LetterResize', 'Mosaic', 'YOLOXMixUp',

'YOLOv5MixUp', 'YOLOv5HSVRandomAug', 'LoadAnnotations',

- 'YOLOv5RandomAffine'

+ 'YOLOv5RandomAffine', 'Mosaic9'

]

diff --git a/mmyolo/datasets/transforms/mix_img_transforms.py b/mmyolo/datasets/transforms/mix_img_transforms.py

index 42b82318e..1b85ab2a5 100644

--- a/mmyolo/datasets/transforms/mix_img_transforms.py

+++ b/mmyolo/datasets/transforms/mix_img_transforms.py

@@ -195,15 +195,15 @@ class Mosaic(BaseMixImageTransform):

mosaic transform

center_x

+------------------------------+

- | pad | pad |

- | +-----------+ |

+ | pad | |

+ | +-----------+ pad |

| | | |

- | | image1 |--------+ |

- | | | | |

- | | | image2 | |

- center_y |----+-------------+-----------|

+ | | image1 +-----------+

+ | | | |

+ | | | image2 |

+ center_y |----+-+-----------+-----------+

| | cropped | |

- |pad | image3 | image4 |

+ |pad | image3 | image4 |

| | | |

+----|-------------+-----------+

| |

@@ -465,13 +465,306 @@ def __repr__(self) -> str:

return repr_str

+@TRANSFORMS.register_module()

+class Mosaic9(BaseMixImageTransform):

+ """Mosaic9 augmentation.

+

+ Given 9 images, mosaic transform combines them into

+ one output image. The output image is composed of the parts from each sub-

+ image.

+

+ +-------------------------------+------------+

+ | pad | pad | |

+ | +----------+ | |

+ | | +---------------+ top_right |

+ | | | top | image2 |

+ | | top_left | image1 | |

+ | | image8 o--------+------+--------+---+

+ | | | | | |

+ +----+----------+ | right |pad|

+ | | center | image3 | |

+ | left | image0 +---------------+---|

+ | image7 | | | |

+ +---+-----------+---+--------+ | |

+ | | cropped | | bottom_right |pad|

+ | |bottom_left| | image4 | |

+ | | image6 | bottom | | |

+ +---|-----------+ image5 +---------------+---|

+ | pad | | pad |

+ +-----------+------------+-------------------+

+

+ The mosaic transform steps are as follows:

+

+ 1. Get the center image according to the index, and randomly

+ sample another 8 images from the custom dataset.

+ 2. Randomly offset the image after Mosaic

+

+ Required Keys:

+

+ - img

+ - gt_bboxes (BaseBoxes[torch.float32]) (optional)

+ - gt_bboxes_labels (np.int64) (optional)

+ - gt_ignore_flags (np.bool) (optional)

+ - mix_results (List[dict])

+

+ Modified Keys:

+

+ - img

+ - img_shape

+ - gt_bboxes (optional)

+ - gt_bboxes_labels (optional)

+ - gt_ignore_flags (optional)

+

+ Args:

+ img_scale (Sequence[int]): Image size after mosaic pipeline of single

+ image. The shape order should be (height, width).

+ Defaults to (640, 640).

+ bbox_clip_border (bool, optional): Whether to clip the objects outside

+ the border of the image. In some dataset like MOT17, the gt bboxes

+ are allowed to cross the border of images. Therefore, we don't

+ need to clip the gt bboxes in these cases. Defaults to True.

+ pad_val (int): Pad value. Defaults to 114.

+ pre_transform(Sequence[dict]): Sequence of transform object or

+ config dict to be composed.

+ prob (float): Probability of applying this transformation.

+ Defaults to 1.0.

+ use_cached (bool): Whether to use cache. Defaults to False.

+ max_cached_images (int): The maximum length of the cache. The larger

+ the cache, the stronger the randomness of this transform. As a

+ rule of thumb, providing 5 caches for each image suffices for

+ randomness. Defaults to 50.

+ random_pop (bool): Whether to randomly pop a result from the cache

+ when the cache is full. If set to False, use FIFO popping method.

+ Defaults to True.

+ max_refetch (int): The maximum number of retry iterations for getting

+ valid results from the pipeline. If the number of iterations is

+ greater than `max_refetch`, but results is still None, then the

+ iteration is terminated and raise the error. Defaults to 15.

+ """

+

+ def __init__(self,

+ img_scale: Tuple[int, int] = (640, 640),

+ bbox_clip_border: bool = True,

+ pad_val: Union[float, int] = 114.0,

+ pre_transform: Sequence[dict] = None,

+ prob: float = 1.0,

+ use_cached: bool = False,

+ max_cached_images: int = 50,

+ random_pop: bool = True,

+ max_refetch: int = 15):

+ assert isinstance(img_scale, tuple)

+ assert 0 <= prob <= 1.0, 'The probability should be in range [0,1]. ' \

+ f'got {prob}.'

+ if use_cached:

+ assert max_cached_images >= 9, 'The length of cache must >= 9, ' \

+ f'but got {max_cached_images}.'

+

+ super().__init__(

+ pre_transform=pre_transform,

+ prob=prob,

+ use_cached=use_cached,

+ max_cached_images=max_cached_images,

+ random_pop=random_pop,

+ max_refetch=max_refetch)

+

+ self.img_scale = img_scale

+ self.bbox_clip_border = bbox_clip_border

+ self.pad_val = pad_val

+

+ # intermediate variables

+ self._current_img_shape = [0, 0]

+ self._center_img_shape = [0, 0]

+ self._previous_img_shape = [0, 0]

+

+ def get_indexes(self, dataset: Union[BaseDataset, list]) -> list:

+ """Call function to collect indexes.

+

+ Args:

+ dataset (:obj:`Dataset` or list): The dataset or cached list.

+

+ Returns:

+ list: indexes.

+ """

+ indexes = [random.randint(0, len(dataset)) for _ in range(8)]

+ return indexes

+

+ def mix_img_transform(self, results: dict) -> dict:

+ """Mixed image data transformation.

+

+ Args:

+ results (dict): Result dict.

+

+ Returns:

+ results (dict): Updated result dict.

+ """

+ assert 'mix_results' in results

+

+ mosaic_bboxes = []

+ mosaic_bboxes_labels = []

+ mosaic_ignore_flags = []

+

+ img_scale_h, img_scale_w = self.img_scale

+

+ if len(results['img'].shape) == 3:

+ mosaic_img = np.full(

+ (int(img_scale_h * 3), int(img_scale_w * 3), 3),

+ self.pad_val,

+ dtype=results['img'].dtype)

+ else:

+ mosaic_img = np.full((int(img_scale_h * 3), int(img_scale_w * 3)),

+ self.pad_val,

+ dtype=results['img'].dtype)

+

+ # index = 0 is mean original image

+ # len(results['mix_results']) = 8

+ loc_strs = ('center', 'top', 'top_right', 'right', 'bottom_right',

+ 'bottom', 'bottom_left', 'left', 'top_left')

+

+ results_all = [results, *results['mix_results']]

+ for index, results_patch in enumerate(results_all):

+ img_i = results_patch['img']

+ # keep_ratio resize

+ img_i_h, img_i_w = img_i.shape[:2]

+ scale_ratio_i = min(img_scale_h / img_i_h, img_scale_w / img_i_w)

+ img_i = mmcv.imresize(

+ img_i,

+ (int(img_i_w * scale_ratio_i), int(img_i_h * scale_ratio_i)))

+

+ paste_coord = self._mosaic_combine(loc_strs[index],

+ img_i.shape[:2])

+

+ padw, padh = paste_coord[:2]

+ x1, y1, x2, y2 = (max(x, 0) for x in paste_coord)

+ mosaic_img[y1:y2, x1:x2] = img_i[y1 - padh:, x1 - padw:]

+

+ gt_bboxes_i = results_patch['gt_bboxes']

+ gt_bboxes_labels_i = results_patch['gt_bboxes_labels']

+ gt_ignore_flags_i = results_patch['gt_ignore_flags']

+ gt_bboxes_i.rescale_([scale_ratio_i, scale_ratio_i])

+ gt_bboxes_i.translate_([padw, padh])

+

+ mosaic_bboxes.append(gt_bboxes_i)

+ mosaic_bboxes_labels.append(gt_bboxes_labels_i)

+ mosaic_ignore_flags.append(gt_ignore_flags_i)

+

+ # Offset

+ offset_x = int(random.uniform(0, img_scale_w))

+ offset_y = int(random.uniform(0, img_scale_h))

+ mosaic_img = mosaic_img[offset_y:offset_y + 2 * img_scale_h,

+ offset_x:offset_x + 2 * img_scale_w]

+

+ mosaic_bboxes = mosaic_bboxes[0].cat(mosaic_bboxes, 0)

+ mosaic_bboxes.translate_([-offset_x, -offset_y])

+ mosaic_bboxes_labels = np.concatenate(mosaic_bboxes_labels, 0)

+ mosaic_ignore_flags = np.concatenate(mosaic_ignore_flags, 0)

+

+ if self.bbox_clip_border:

+ mosaic_bboxes.clip_([2 * img_scale_h, 2 * img_scale_w])

+ else:

+ # remove outside bboxes

+ inside_inds = mosaic_bboxes.is_inside(

+ [2 * img_scale_h, 2 * img_scale_w]).numpy()

+ mosaic_bboxes = mosaic_bboxes[inside_inds]

+ mosaic_bboxes_labels = mosaic_bboxes_labels[inside_inds]

+ mosaic_ignore_flags = mosaic_ignore_flags[inside_inds]

+

+ results['img'] = mosaic_img

+ results['img_shape'] = mosaic_img.shape

+ results['gt_bboxes'] = mosaic_bboxes

+ results['gt_bboxes_labels'] = mosaic_bboxes_labels

+ results['gt_ignore_flags'] = mosaic_ignore_flags

+ return results

+

+ def _mosaic_combine(self, loc: str,

+ img_shape_hw: Tuple[int, int]) -> Tuple[int, ...]:

+ """Calculate global coordinate of mosaic image.

+

+ Args:

+ loc (str): Index for the sub-image.

+ img_shape_hw (Sequence[int]): Height and width of sub-image

+

+ Returns:

+ paste_coord (tuple): paste corner coordinate in mosaic image.

+ """

+ assert loc in ('center', 'top', 'top_right', 'right', 'bottom_right',

+ 'bottom', 'bottom_left', 'left', 'top_left')

+

+ img_scale_h, img_scale_w = self.img_scale

+

+ self._current_img_shape = img_shape_hw

+ current_img_h, current_img_w = self._current_img_shape

+ previous_img_h, previous_img_w = self._previous_img_shape

+ center_img_h, center_img_w = self._center_img_shape

+

+ if loc == 'center':

+ self._center_img_shape = self._current_img_shape

+ # xmin, ymin, xmax, ymax

+ paste_coord = img_scale_w, \

+ img_scale_h, \

+ img_scale_w + current_img_w, \

+ img_scale_h + current_img_h

+ elif loc == 'top':

+ paste_coord = img_scale_w, \

+ img_scale_h - current_img_h, \

+ img_scale_w + current_img_w, \

+ img_scale_h

+ elif loc == 'top_right':

+ paste_coord = img_scale_w + previous_img_w, \

+ img_scale_h - current_img_h, \

+ img_scale_w + previous_img_w + current_img_w, \

+ img_scale_h

+ elif loc == 'right':

+ paste_coord = img_scale_w + center_img_w, \

+ img_scale_h, \

+ img_scale_w + center_img_w + current_img_w, \

+ img_scale_h + current_img_h

+ elif loc == 'bottom_right':

+ paste_coord = img_scale_w + center_img_w, \

+ img_scale_h + previous_img_h, \

+ img_scale_w + center_img_w + current_img_w, \

+ img_scale_h + previous_img_h + current_img_h

+ elif loc == 'bottom':

+ paste_coord = img_scale_w + center_img_w - current_img_w, \

+ img_scale_h + center_img_h, \

+ img_scale_w + center_img_w, \

+ img_scale_h + center_img_h + current_img_h

+ elif loc == 'bottom_left':

+ paste_coord = img_scale_w + center_img_w - \

+ previous_img_w - current_img_w, \

+ img_scale_h + center_img_h, \

+ img_scale_w + center_img_w - previous_img_w, \

+ img_scale_h + center_img_h + current_img_h

+ elif loc == 'left':

+ paste_coord = img_scale_w - current_img_w, \

+ img_scale_h + center_img_h - current_img_h, \

+ img_scale_w, \

+ img_scale_h + center_img_h

+ elif loc == 'top_left':

+ paste_coord = img_scale_w - current_img_w, \

+ img_scale_h + center_img_h - \

+ previous_img_h - current_img_h, \

+ img_scale_w, \

+ img_scale_h + center_img_h - previous_img_h

+

+ self._previous_img_shape = self._current_img_shape

+ # xmin, ymin, xmax, ymax

+ return paste_coord

+

+ def __repr__(self) -> str:

+ repr_str = self.__class__.__name__

+ repr_str += f'(img_scale={self.img_scale}, '

+ repr_str += f'pad_val={self.pad_val}, '

+ repr_str += f'prob={self.prob})'

+ return repr_str

+

+

@TRANSFORMS.register_module()

class YOLOv5MixUp(BaseMixImageTransform):

"""MixUp data augmentation for YOLOv5.

.. code:: text

- The mixup transform steps are as follows:

+ The mixup transform steps are as follows:

1. Another random image is picked by dataset.

2. Randomly obtain the fusion ratio from the beta distribution,

@@ -514,7 +807,7 @@ class YOLOv5MixUp(BaseMixImageTransform):

when the cache is full. If set to False, use FIFO popping method.

Defaults to True.

max_refetch (int): The maximum number of iterations. If the number of

- iterations is greater than `max_iters`, but gt_bbox is still

+ iterations is greater than `max_refetch`, but gt_bbox is still

empty, then the iteration is terminated. Defaults to 15.

"""

@@ -599,20 +892,20 @@ class YOLOXMixUp(BaseMixImageTransform):

.. code:: text

mixup transform

- +------------------------------+

+ +---------------+--------------+

| mixup image | |

| +--------|--------+ |

| | | | |

- |---------------+ | |

+ +---------------+ | |

| | | |

| | image | |

| | | |

| | | |

- | |-----------------+ |

+ | +-----------------+ |

| pad |

+------------------------------+

- The mixup transform steps are as follows:

+ The mixup transform steps are as follows:

1. Another random image is picked by dataset and embedded in

the top left patch(after padding and resizing)

@@ -662,7 +955,7 @@ class YOLOXMixUp(BaseMixImageTransform):

when the cache is full. If set to False, use FIFO popping method.

Defaults to True.

max_refetch (int): The maximum number of iterations. If the number of

- iterations is greater than `max_iters`, but gt_bbox is still

+ iterations is greater than `max_refetch`, but gt_bbox is still

empty, then the iteration is terminated. Defaults to 15.

"""

@@ -759,9 +1052,9 @@ def mix_img_transform(self, results: dict) -> dict:

ori_img = results['img']

origin_h, origin_w = out_img.shape[:2]

target_h, target_w = ori_img.shape[:2]

- padded_img = np.zeros(

- (max(origin_h, target_h), max(origin_w,

- target_w), 3)).astype(np.uint8)

+ padded_img = np.ones((max(origin_h, target_h), max(

+ origin_w, target_w), 3)) * self.pad_val

+ padded_img = padded_img.astype(np.uint8)

padded_img[:origin_h, :origin_w] = out_img

x_offset, y_offset = 0, 0

@@ -823,6 +1116,6 @@ def __repr__(self) -> str:

repr_str += f'ratio_range={self.ratio_range}, '

repr_str += f'flip_ratio={self.flip_ratio}, '

repr_str += f'pad_val={self.pad_val}, '

- repr_str += f'max_iters={self.max_iters}, '

+ repr_str += f'max_refetch={self.max_refetch}, '

repr_str += f'bbox_clip_border={self.bbox_clip_border})'

return repr_str

diff --git a/mmyolo/datasets/transforms/transforms.py b/mmyolo/datasets/transforms/transforms.py

index 17dc961db..890df8ac2 100644

--- a/mmyolo/datasets/transforms/transforms.py

+++ b/mmyolo/datasets/transforms/transforms.py

@@ -104,8 +104,7 @@ def _resize_img(self, results: dict):

resized_h, resized_w = image.shape[:2]

scale_ratio = resized_h / original_h

- scale_factor = np.array([scale_ratio, scale_ratio],

- dtype=np.float32)

+ scale_factor = (scale_ratio, scale_ratio)

results['img'] = image

results['img_shape'] = image.shape[:2]

@@ -208,10 +207,13 @@ def _resize_img(self, results: dict):

interpolation=self.interpolation,

backend=self.backend)

- scale_factor = np.array([ratio[0], ratio[1]], dtype=np.float32)

+ scale_factor = (ratio[1], ratio[0]) # mmcv scale factor is (w, h)

if 'scale_factor' in results:

- results['scale_factor'] = results['scale_factor'] * scale_factor

+ results['scale_factor'] = (results['scale_factor'][0] *

+ scale_factor[0],

+ results['scale_factor'][1] *

+ scale_factor[1])

else:

results['scale_factor'] = scale_factor

diff --git a/mmyolo/datasets/yolov5_coco.py b/mmyolo/datasets/yolov5_coco.py

index 048571186..55bc899ab 100644

--- a/mmyolo/datasets/yolov5_coco.py

+++ b/mmyolo/datasets/yolov5_coco.py

@@ -7,6 +7,9 @@

class BatchShapePolicyDataset(BaseDetDataset):

+ """Dataset with the batch shape policy that makes paddings with least

+ pixels during batch inference process, which does not require the image

+ scales of all batches to be the same throughout validation."""

def __init__(self,

*args,

@@ -17,7 +20,7 @@ def __init__(self,

def full_init(self):

"""rewrite full_init() to be compatible with serialize_data in

- BatchShapesPolicy."""

+ BatchShapePolicy."""

if self._fully_initialized:

return

# load data information

diff --git a/mmyolo/deploy/models/dense_heads/yolov5_head.py b/mmyolo/deploy/models/dense_heads/yolov5_head.py

index cf61fb3ca..ecbe24437 100644

--- a/mmyolo/deploy/models/dense_heads/yolov5_head.py

+++ b/mmyolo/deploy/models/dense_heads/yolov5_head.py

@@ -146,3 +146,34 @@ def yolov5_head__predict_by_feat(ctx,

return nms_func(bboxes, scores, max_output_boxes_per_class, iou_threshold,

score_threshold, pre_top_k, keep_top_k)

+

+

+@FUNCTION_REWRITER.register_rewriter(

+ func_name='mmyolo.models.dense_heads.yolov5_head.'

+ 'YOLOv5Head.predict',

+ backend='rknn')

+def yolov5_head__predict__rknn(ctx, self, x: Tuple[Tensor], *args,

+ **kwargs) -> Tuple[Tensor, Tensor, Tensor]:

+ """Perform forward propagation of the detection head and predict detection

+ results on the features of the upstream network.

+

+ Args:

+ x (tuple[Tensor]): Multi-level features from the

+ upstream network, each is a 4D-tensor.

+ """

+ outs = self(x)

+ return outs

+

+

+@FUNCTION_REWRITER.register_rewriter(

+ func_name='mmyolo.models.dense_heads.yolov5_head.'

+ 'YOLOv5HeadModule.forward',

+ backend='rknn')

+def yolov5_head_module__forward__rknn(

+ ctx, self, x: Tensor, *args,

+ **kwargs) -> Tuple[Tensor, Tensor, Tensor]:

+ """Forward feature of a single scale level."""

+ out = []

+ for i, feat in enumerate(x):

+ out.append(self.convs_pred[i](feat))

+ return out

diff --git a/mmyolo/deploy/object_detection.py b/mmyolo/deploy/object_detection.py

index 2317ec915..ba8c69ea8 100644

--- a/mmyolo/deploy/object_detection.py

+++ b/mmyolo/deploy/object_detection.py

@@ -1,6 +1,7 @@

# Copyright (c) OpenMMLab. All rights reserved.

-from typing import Callable

+from typing import Callable, Dict, Optional

+import torch

from mmdeploy.codebase.base import CODEBASE, MMCodebase

from mmdeploy.codebase.mmdet.deploy import ObjectDetection

from mmdeploy.utils import Codebase, Task

@@ -16,13 +17,23 @@ class MMYOLO(MMCodebase):

task_registry = MMYOLO_TASK

+ @classmethod

+ def register_deploy_modules(cls):

+ """register all rewriters for mmdet."""

+ import mmdeploy.codebase.mmdet.models # noqa: F401

+ import mmdeploy.codebase.mmdet.ops # noqa: F401

+ import mmdeploy.codebase.mmdet.structures # noqa: F401

+

@classmethod

def register_all_modules(cls):

+ """register all modules."""

from mmdet.utils.setup_env import \

register_all_modules as register_all_modules_mmdet

from mmyolo.utils.setup_env import \

register_all_modules as register_all_modules_mmyolo

+

+ cls.register_deploy_modules()

register_all_modules_mmyolo(True)

register_all_modules_mmdet(False)

@@ -72,3 +83,40 @@ def get_visualizer(self, name: str, save_dir: str):

if metainfo is not None:

visualizer.dataset_meta = metainfo

return visualizer

+

+ def build_pytorch_model(self,

+ model_checkpoint: Optional[str] = None,

+ cfg_options: Optional[Dict] = None,

+ **kwargs) -> torch.nn.Module:

+ """Initialize torch model.

+

+ Args:

+ model_checkpoint (str): The checkpoint file of torch model,

+ defaults to `None`.

+ cfg_options (dict): Optional config key-pair parameters.

+ Returns:

+ nn.Module: An initialized torch model generated by other OpenMMLab

+ codebases.

+ """

+ from copy import deepcopy

+

+ from mmengine.model import revert_sync_batchnorm

+ from mmengine.registry import MODELS

+

+ from mmyolo.utils import switch_to_deploy

+

+ model = deepcopy(self.model_cfg.model)

+ preprocess_cfg = deepcopy(self.model_cfg.get('preprocess_cfg', {}))

+ preprocess_cfg.update(

+ deepcopy(self.model_cfg.get('data_preprocessor', {})))

+ model.setdefault('data_preprocessor', preprocess_cfg)

+ model = MODELS.build(model)

+ if model_checkpoint is not None:

+ from mmengine.runner.checkpoint import load_checkpoint

+ load_checkpoint(model, model_checkpoint, map_location=self.device)

+

+ model = revert_sync_batchnorm(model)

+ switch_to_deploy(model)

+ model = model.to(self.device)

+ model.eval()

+ return model

diff --git a/mmyolo/engine/hooks/switch_to_deploy_hook.py b/mmyolo/engine/hooks/switch_to_deploy_hook.py

index e597eb22b..28ac345f4 100644

--- a/mmyolo/engine/hooks/switch_to_deploy_hook.py

+++ b/mmyolo/engine/hooks/switch_to_deploy_hook.py

@@ -17,4 +17,5 @@ class SwitchToDeployHook(Hook):

"""

def before_test_epoch(self, runner: Runner):

+ """Switch to deploy mode before testing."""

switch_to_deploy(runner.model)

diff --git a/mmyolo/engine/optimizers/__init__.py b/mmyolo/engine/optimizers/__init__.py

index 3ad91894a..b598020d0 100644

--- a/mmyolo/engine/optimizers/__init__.py

+++ b/mmyolo/engine/optimizers/__init__.py

@@ -1,4 +1,5 @@

# Copyright (c) OpenMMLab. All rights reserved.

from .yolov5_optim_constructor import YOLOv5OptimizerConstructor

+from .yolov7_optim_wrapper_constructor import YOLOv7OptimWrapperConstructor

-__all__ = ['YOLOv5OptimizerConstructor']

+__all__ = ['YOLOv5OptimizerConstructor', 'YOLOv7OptimWrapperConstructor']

diff --git a/mmyolo/engine/optimizers/yolov5_optim_constructor.py b/mmyolo/engine/optimizers/yolov5_optim_constructor.py

index 8abe5db89..5e5f42cb5 100644

--- a/mmyolo/engine/optimizers/yolov5_optim_constructor.py

+++ b/mmyolo/engine/optimizers/yolov5_optim_constructor.py

@@ -120,6 +120,10 @@ def __call__(self, model: nn.Module) -> OptimWrapper:

# bias

optimizer_cfg['params'].append({'params': params_groups[2]})

+ print_log(

+ 'Optimizer groups: %g .bias, %g conv.weight, %g other' %

+ (len(params_groups[2]), len(params_groups[0]), len(

+ params_groups[1])), 'current')

del params_groups

optimizer = OPTIMIZERS.build(optimizer_cfg)

diff --git a/mmyolo/engine/optimizers/yolov7_optim_wrapper_constructor.py b/mmyolo/engine/optimizers/yolov7_optim_wrapper_constructor.py

new file mode 100644

index 000000000..79ea8b699

--- /dev/null

+++ b/mmyolo/engine/optimizers/yolov7_optim_wrapper_constructor.py

@@ -0,0 +1,139 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+from typing import Optional

+

+import torch.nn as nn

+from mmengine.dist import get_world_size

+from mmengine.logging import print_log

+from mmengine.model import is_model_wrapper

+from mmengine.optim import OptimWrapper

+

+from mmyolo.models.dense_heads.yolov7_head import ImplicitA, ImplicitM

+from mmyolo.registry import (OPTIM_WRAPPER_CONSTRUCTORS, OPTIM_WRAPPERS,

+ OPTIMIZERS)

+

+

+# TODO: Consider merging into YOLOv5OptimizerConstructor

+@OPTIM_WRAPPER_CONSTRUCTORS.register_module()

+class YOLOv7OptimWrapperConstructor:

+ """YOLOv7 constructor for optimizer wrappers.

+

+ It has the following functions:

+

+ - divides the optimizer parameters into 3 groups:

+ Conv, Bias and BN/ImplicitA/ImplicitM

+

+ - support `weight_decay` parameter adaption based on

+ `batch_size_per_gpu`

+

+ Args:

+ optim_wrapper_cfg (dict): The config dict of the optimizer wrapper.

+ Positional fields are

+

+ - ``type``: class name of the OptimizerWrapper

+ - ``optimizer``: The configuration of optimizer.

+

+ Optional fields are

+

+ - any arguments of the corresponding optimizer wrapper type,

+ e.g., accumulative_counts, clip_grad, etc.

+

+ The positional fields of ``optimizer`` are

+

+ - `type`: class name of the optimizer.

+

+ Optional fields are

+

+ - any arguments of the corresponding optimizer type, e.g.,

+ lr, weight_decay, momentum, etc.

+

+ paramwise_cfg (dict, optional): Parameter-wise options. Must include

+ `base_total_batch_size` if not None. If the total input batch

+ is smaller than `base_total_batch_size`, the `weight_decay`

+ parameter will be kept unchanged, otherwise linear scaling.

+

+ Example:

+ >>> model = torch.nn.modules.Conv1d(1, 1, 1)

+ >>> optim_wrapper_cfg = dict(

+ >>> dict(type='OptimWrapper', optimizer=dict(type='SGD', lr=0.01,

+ >>> momentum=0.9, weight_decay=0.0001, batch_size_per_gpu=16))

+ >>> paramwise_cfg = dict(base_total_batch_size=64)

+ >>> optim_wrapper_builder = YOLOv7OptimWrapperConstructor(

+ >>> optim_wrapper_cfg, paramwise_cfg)

+ >>> optim_wrapper = optim_wrapper_builder(model)

+ """

+

+ def __init__(self,

+ optim_wrapper_cfg: dict,

+ paramwise_cfg: Optional[dict] = None):

+ if paramwise_cfg is None:

+ paramwise_cfg = {'base_total_batch_size': 64}

+ assert 'base_total_batch_size' in paramwise_cfg

+

+ if not isinstance(optim_wrapper_cfg, dict):

+ raise TypeError('optimizer_cfg should be a dict',

+ f'but got {type(optim_wrapper_cfg)}')

+ assert 'optimizer' in optim_wrapper_cfg, (

+ '`optim_wrapper_cfg` must contain "optimizer" config')

+

+ self.optim_wrapper_cfg = optim_wrapper_cfg

+ self.optimizer_cfg = self.optim_wrapper_cfg.pop('optimizer')

+ self.base_total_batch_size = paramwise_cfg['base_total_batch_size']

+

+ def __call__(self, model: nn.Module) -> OptimWrapper:

+ if is_model_wrapper(model):

+ model = model.module

+ optimizer_cfg = self.optimizer_cfg.copy()

+ weight_decay = optimizer_cfg.pop('weight_decay', 0)

+

+ if 'batch_size_per_gpu' in optimizer_cfg:

+ batch_size_per_gpu = optimizer_cfg.pop('batch_size_per_gpu')

+ # No scaling if total_batch_size is less than

+ # base_total_batch_size, otherwise linear scaling.

+ total_batch_size = get_world_size() * batch_size_per_gpu

+ accumulate = max(

+ round(self.base_total_batch_size / total_batch_size), 1)

+ scale_factor = total_batch_size * \

+ accumulate / self.base_total_batch_size

+

+ if scale_factor != 1:

+ weight_decay *= scale_factor

+ print_log(f'Scaled weight_decay to {weight_decay}', 'current')

+

+ params_groups = [], [], []

+ for v in model.modules():

+ # no decay

+ # Caution: Coupling with model

+ if isinstance(v, (ImplicitA, ImplicitM)):

+ params_groups[0].append(v.implicit)

+ elif isinstance(v, nn.modules.batchnorm._NormBase):

+ params_groups[0].append(v.weight)

+ # apply decay

+ elif hasattr(v, 'weight') and isinstance(v.weight, nn.Parameter):

+ params_groups[1].append(v.weight) # apply decay

+

+ # biases, no decay

+ if hasattr(v, 'bias') and isinstance(v.bias, nn.Parameter):

+ params_groups[2].append(v.bias)

+

+ # Note: Make sure bias is in the last parameter group

+ optimizer_cfg['params'] = []

+ # conv

+ optimizer_cfg['params'].append({

+ 'params': params_groups[1],

+ 'weight_decay': weight_decay

+ })

+ # bn ...

+ optimizer_cfg['params'].append({'params': params_groups[0]})

+ # bias

+ optimizer_cfg['params'].append({'params': params_groups[2]})

+

+ print_log(

+ 'Optimizer groups: %g .bias, %g conv.weight, %g other' %

+ (len(params_groups[2]), len(params_groups[1]), len(

+ params_groups[0])), 'current')

+ del params_groups

+

+ optimizer = OPTIMIZERS.build(optimizer_cfg)

+ optim_wrapper = OPTIM_WRAPPERS.build(

+ self.optim_wrapper_cfg, default_args=dict(optimizer=optimizer))

+ return optim_wrapper

diff --git a/mmyolo/models/backbones/__init__.py b/mmyolo/models/backbones/__init__.py

index 851e8917c..0c5015376 100644

--- a/mmyolo/models/backbones/__init__.py

+++ b/mmyolo/models/backbones/__init__.py

@@ -3,10 +3,10 @@

from .csp_darknet import YOLOv5CSPDarknet, YOLOXCSPDarknet

from .csp_resnet import PPYOLOECSPResNet

from .cspnext import CSPNeXt

-from .efficient_rep import YOLOv6EfficientRep

+from .efficient_rep import YOLOv6CSPBep, YOLOv6EfficientRep

from .yolov7_backbone import YOLOv7Backbone

__all__ = [

- 'YOLOv5CSPDarknet', 'BaseBackbone', 'YOLOv6EfficientRep',

+ 'YOLOv5CSPDarknet', 'BaseBackbone', 'YOLOv6EfficientRep', 'YOLOv6CSPBep',

'YOLOXCSPDarknet', 'CSPNeXt', 'YOLOv7Backbone', 'PPYOLOECSPResNet'

]

diff --git a/mmyolo/models/backbones/base_backbone.py b/mmyolo/models/backbones/base_backbone.py

index 57a00eae0..730c7095e 100644

--- a/mmyolo/models/backbones/base_backbone.py

+++ b/mmyolo/models/backbones/base_backbone.py

@@ -48,7 +48,7 @@ class BaseBackbone(BaseModule, metaclass=ABCMeta):

In P6 model, n=5

Args:

- arch_setting (dict): Architecture of BaseBackbone.

+ arch_setting (list): Architecture of BaseBackbone.

plugins (list[dict]): List of plugins for stages, each dict contains:

- cfg (dict, required): Cfg dict to build plugin.

@@ -75,7 +75,7 @@ class BaseBackbone(BaseModule, metaclass=ABCMeta):

"""

def __init__(self,

- arch_setting: dict,

+ arch_setting: list,

deepen_factor: float = 1.0,

widen_factor: float = 1.0,

input_channels: int = 3,

@@ -87,7 +87,6 @@ def __init__(self,

norm_eval: bool = False,

init_cfg: OptMultiConfig = None):

super().__init__(init_cfg)

-

self.num_stages = len(arch_setting)

self.arch_setting = arch_setting

@@ -135,7 +134,7 @@ def build_stage_layer(self, stage_idx: int, setting: list):

"""

pass

- def make_stage_plugins(self, plugins, idx, setting):

+ def make_stage_plugins(self, plugins, stage_idx, setting):

"""Make plugins for backbone ``stage_idx`` th stage.

Currently we support to insert ``context_block``,

@@ -154,7 +153,7 @@ def make_stage_plugins(self, plugins, idx, setting):

... ]

>>> model = YOLOv5CSPDarknet()

>>> stage_plugins = model.make_stage_plugins(plugins, 0, setting)

- >>> assert len(stage_plugins) == 3

+ >>> assert len(stage_plugins) == 1

Suppose ``stage_idx=0``, the structure of blocks in the stage would be:

@@ -162,7 +161,7 @@ def make_stage_plugins(self, plugins, idx, setting):

conv1 -> conv2 -> conv3 -> yyy

- Suppose 'stage_idx=1', the structure of blocks in the stage would be:

+ Suppose ``stage_idx=1``, the structure of blocks in the stage would be:

.. code-block:: none

@@ -188,7 +187,7 @@ def make_stage_plugins(self, plugins, idx, setting):

plugin = plugin.copy()

stages = plugin.pop('stages', None)

assert stages is None or len(stages) == self.num_stages

- if stages is None or stages[idx]:

+ if stages is None or stages[stage_idx]:

name, layer = build_plugin_layer(

plugin['cfg'], in_channels=in_channels)

plugin_layers.append(layer)

diff --git a/mmyolo/models/backbones/csp_darknet.py b/mmyolo/models/backbones/csp_darknet.py

index 88d99c79d..2ce0fb669 100644

--- a/mmyolo/models/backbones/csp_darknet.py

+++ b/mmyolo/models/backbones/csp_darknet.py

@@ -3,7 +3,7 @@

import torch

import torch.nn as nn

-from mmcv.cnn import ConvModule

+from mmcv.cnn import ConvModule, DepthwiseSeparableConvModule

from mmdet.models.backbones.csp_darknet import CSPLayer, Focus

from mmdet.utils import ConfigType, OptMultiConfig

@@ -146,8 +146,8 @@ def build_stage_layer(self, stage_idx: int, setting: list) -> list:

return stage

def init_weights(self):

+ """Initialize the parameters."""

if self.init_cfg is None:

- """Initialize the parameters."""

for m in self.modules():

if isinstance(m, torch.nn.Conv2d):

# In order to be consistent with the source code,

@@ -178,6 +178,8 @@ class YOLOXCSPDarknet(BaseBackbone):

Defaults to (2, 3, 4).

frozen_stages (int): Stages to be frozen (stop grad and set eval

mode). -1 means not freezing any parameters. Defaults to -1.

+ use_depthwise (bool): Whether to use depthwise separable convolution.

+ Defaults to False.

spp_kernal_sizes: (tuple[int]): Sequential of kernel sizes of SPP

layers. Defaults to (5, 9, 13).

norm_cfg (dict): Dictionary to construct and config norm layer.

@@ -218,12 +220,14 @@ def __init__(self,

input_channels: int = 3,

out_indices: Tuple[int] = (2, 3, 4),

frozen_stages: int = -1,

+ use_depthwise: bool = False,

spp_kernal_sizes: Tuple[int] = (5, 9, 13),

norm_cfg: ConfigType = dict(

type='BN', momentum=0.03, eps=0.001),

act_cfg: ConfigType = dict(type='SiLU', inplace=True),

norm_eval: bool = False,

init_cfg: OptMultiConfig = None):

+ self.use_depthwise = use_depthwise

self.spp_kernal_sizes = spp_kernal_sizes

super().__init__(self.arch_settings[arch], deepen_factor, widen_factor,

input_channels, out_indices, frozen_stages, plugins,

@@ -251,7 +255,9 @@ def build_stage_layer(self, stage_idx: int, setting: list) -> list:

out_channels = make_divisible(out_channels, self.widen_factor)

num_blocks = make_round(num_blocks, self.deepen_factor)

stage = []

- conv_layer = ConvModule(

+ conv = DepthwiseSeparableConvModule \

+ if self.use_depthwise else ConvModule

+ conv_layer = conv(

in_channels,

out_channels,

kernel_size=3,

diff --git a/mmyolo/models/backbones/efficient_rep.py b/mmyolo/models/backbones/efficient_rep.py

index 9ac1b81be..691c5b846 100644

--- a/mmyolo/models/backbones/efficient_rep.py

+++ b/mmyolo/models/backbones/efficient_rep.py

@@ -8,20 +8,18 @@

from mmyolo.models.layers.yolo_bricks import SPPFBottleneck

from mmyolo.registry import MODELS

-from ..layers import RepStageBlock, RepVGGBlock

-from ..utils import make_divisible, make_round

+from ..layers import BepC3StageBlock, RepStageBlock

+from ..utils import make_round

from .base_backbone import BaseBackbone

@MODELS.register_module()

class YOLOv6EfficientRep(BaseBackbone):

"""EfficientRep backbone used in YOLOv6.

-

Args:

arch (str): Architecture of BaseDarknet, from {P5, P6}.

Defaults to P5.

plugins (list[dict]): List of plugins for stages, each dict contains:

-

- cfg (dict, required): Cfg dict to build plugin.

- stages (tuple[bool], optional): Stages to apply plugin, length

should be same as 'num_stages'.

@@ -41,10 +39,10 @@ class YOLOv6EfficientRep(BaseBackbone):

norm_eval (bool): Whether to set norm layers to eval mode, namely,

freeze running stats (mean and var). Note: Effect on Batch Norm

and its variants only. Defaults to False.

- block (nn.Module): block used to build each stage.

+ block_cfg (dict): Config dict for the block used to build each

+ layer. Defaults to dict(type='RepVGGBlock').

init_cfg (Union[dict, list[dict]], optional): Initialization config

dict. Defaults to None.

-

Example:

>>> from mmyolo.models import YOLOv6EfficientRep

>>> import torch

@@ -78,9 +76,9 @@ def __init__(self,

type='BN', momentum=0.03, eps=0.001),

act_cfg: ConfigType = dict(type='ReLU', inplace=True),

norm_eval: bool = False,

- block: nn.Module = RepVGGBlock,

+ block_cfg: ConfigType = dict(type='RepVGGBlock'),

init_cfg: OptMultiConfig = None):

- self.block = block

+ self.block_cfg = block_cfg

super().__init__(

self.arch_settings[arch],

deepen_factor,

@@ -96,12 +94,16 @@ def __init__(self,

def build_stem_layer(self) -> nn.Module:

"""Build a stem layer."""

- return self.block(

- in_channels=self.input_channels,

- out_channels=make_divisible(self.arch_setting[0][0],

- self.widen_factor),

- kernel_size=3,

- stride=2)

+

+ block_cfg = self.block_cfg.copy()

+ block_cfg.update(

+ dict(

+ in_channels=self.input_channels,

+ out_channels=int(self.arch_setting[0][0] * self.widen_factor),

+ kernel_size=3,

+ stride=2,

+ ))

+ return MODELS.build(block_cfg)

def build_stage_layer(self, stage_idx: int, setting: list) -> list:

"""Build a stage layer.

@@ -112,24 +114,28 @@ def build_stage_layer(self, stage_idx: int, setting: list) -> list:

"""

in_channels, out_channels, num_blocks, use_spp = setting

- in_channels = make_divisible(in_channels, self.widen_factor)

- out_channels = make_divisible(out_channels, self.widen_factor)

+ in_channels = int(in_channels * self.widen_factor)

+ out_channels = int(out_channels * self.widen_factor)

num_blocks = make_round(num_blocks, self.deepen_factor)

- stage = []

+ rep_stage_block = RepStageBlock(

+ in_channels=out_channels,

+ out_channels=out_channels,

+ num_blocks=num_blocks,

+ block_cfg=self.block_cfg,

+ )

- ef_block = nn.Sequential(

- self.block(

+ block_cfg = self.block_cfg.copy()

+ block_cfg.update(

+ dict(

in_channels=in_channels,

out_channels=out_channels,

kernel_size=3,

- stride=2),

- RepStageBlock(

- in_channels=out_channels,

- out_channels=out_channels,

- n=num_blocks,

- block=self.block,

- ))

+ stride=2))

+ stage = []

+

+ ef_block = nn.Sequential(MODELS.build(block_cfg), rep_stage_block)

+

stage.append(ef_block)

if use_spp:

@@ -152,3 +158,130 @@ def init_weights(self):

m.reset_parameters()

else:

super().init_weights()

+

+

+@MODELS.register_module()

+class YOLOv6CSPBep(YOLOv6EfficientRep):

+ """CSPBep backbone used in YOLOv6.

+ Args:

+ arch (str): Architecture of BaseDarknet, from {P5, P6}.

+ Defaults to P5.

+ plugins (list[dict]): List of plugins for stages, each dict contains:

+ - cfg (dict, required): Cfg dict to build plugin.

+ - stages (tuple[bool], optional): Stages to apply plugin, length

+ should be same as 'num_stages'.

+ deepen_factor (float): Depth multiplier, multiply number of

+ blocks in CSP layer by this amount. Defaults to 1.0.

+ widen_factor (float): Width multiplier, multiply number of

+ channels in each layer by this amount. Defaults to 1.0.

+ input_channels (int): Number of input image channels. Defaults to 3.

+ out_indices (Tuple[int]): Output from which stages.

+ Defaults to (2, 3, 4).

+ frozen_stages (int): Stages to be frozen (stop grad and set eval

+ mode). -1 means not freezing any parameters. Defaults to -1.

+ norm_cfg (dict): Dictionary to construct and config norm layer.

+ Defaults to dict(type='BN', requires_grad=True).

+ act_cfg (dict): Config dict for activation layer.

+ Defaults to dict(type='LeakyReLU', negative_slope=0.1).

+ norm_eval (bool): Whether to set norm layers to eval mode, namely,

+ freeze running stats (mean and var). Note: Effect on Batch Norm

+ and its variants only. Defaults to False.

+ block_cfg (dict): Config dict for the block used to build each

+ layer. Defaults to dict(type='RepVGGBlock').

+ block_act_cfg (dict): Config dict for activation layer used in each

+ stage. Defaults to dict(type='SiLU', inplace=True).

+ init_cfg (Union[dict, list[dict]], optional): Initialization config

+ dict. Defaults to None.

+ Example:

+ >>> from mmyolo.models import YOLOv6CSPBep

+ >>> import torch

+ >>> model = YOLOv6CSPBep()

+ >>> model.eval()

+ >>> inputs = torch.rand(1, 3, 416, 416)

+ >>> level_outputs = model(inputs)

+ >>> for level_out in level_outputs:

+ ... print(tuple(level_out.shape))

+ ...

+ (1, 256, 52, 52)

+ (1, 512, 26, 26)

+ (1, 1024, 13, 13)

+ """

+ # From left to right:

+ # in_channels, out_channels, num_blocks, use_spp

+ arch_settings = {

+ 'P5': [[64, 128, 6, False], [128, 256, 12, False],

+ [256, 512, 18, False], [512, 1024, 6, True]]

+ }

+

+ def __init__(self,

+ arch: str = 'P5',

+ plugins: Union[dict, List[dict]] = None,

+ deepen_factor: float = 1.0,

+ widen_factor: float = 1.0,

+ input_channels: int = 3,

+ hidden_ratio: float = 0.5,

+ out_indices: Tuple[int] = (2, 3, 4),

+ frozen_stages: int = -1,

+ norm_cfg: ConfigType = dict(

+ type='BN', momentum=0.03, eps=0.001),

+ act_cfg: ConfigType = dict(type='SiLU', inplace=True),

+ norm_eval: bool = False,

+ block_cfg: ConfigType = dict(type='ConvWrapper'),

+ init_cfg: OptMultiConfig = None):

+ self.hidden_ratio = hidden_ratio

+ super().__init__(

+ arch=arch,

+ deepen_factor=deepen_factor,

+ widen_factor=widen_factor,

+ input_channels=input_channels,

+ out_indices=out_indices,

+ plugins=plugins,

+ frozen_stages=frozen_stages,

+ norm_cfg=norm_cfg,

+ act_cfg=act_cfg,

+ norm_eval=norm_eval,

+ block_cfg=block_cfg,

+ init_cfg=init_cfg)

+

+ def build_stage_layer(self, stage_idx: int, setting: list) -> list:

+ """Build a stage layer.

+

+ Args:

+ stage_idx (int): The index of a stage layer.

+ setting (list): The architecture setting of a stage layer.

+ """

+ in_channels, out_channels, num_blocks, use_spp = setting

+ in_channels = int(in_channels * self.widen_factor)

+ out_channels = int(out_channels * self.widen_factor)

+ num_blocks = make_round(num_blocks, self.deepen_factor)

+

+ rep_stage_block = BepC3StageBlock(

+ in_channels=out_channels,

+ out_channels=out_channels,

+ num_blocks=num_blocks,

+ hidden_ratio=self.hidden_ratio,

+ block_cfg=self.block_cfg,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg)

+ block_cfg = self.block_cfg.copy()

+ block_cfg.update(

+ dict(

+ in_channels=in_channels,

+ out_channels=out_channels,

+ kernel_size=3,

+ stride=2))

+ stage = []

+

+ ef_block = nn.Sequential(MODELS.build(block_cfg), rep_stage_block)

+

+ stage.append(ef_block)

+

+ if use_spp:

+ spp = SPPFBottleneck(

+ in_channels=out_channels,

+ out_channels=out_channels,

+ kernel_sizes=5,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg)

+ stage.append(spp)

+ return stage

diff --git a/mmyolo/models/backbones/yolov7_backbone.py b/mmyolo/models/backbones/yolov7_backbone.py

index c016e277d..bb9a5eed8 100644

--- a/mmyolo/models/backbones/yolov7_backbone.py

+++ b/mmyolo/models/backbones/yolov7_backbone.py

@@ -1,12 +1,13 @@

# Copyright (c) OpenMMLab. All rights reserved.

-from typing import List, Tuple, Union

+from typing import List, Optional, Tuple, Union

import torch.nn as nn

from mmcv.cnn import ConvModule

+from mmdet.models.backbones.csp_darknet import Focus

from mmdet.utils import ConfigType, OptMultiConfig

from mmyolo.registry import MODELS

-from ..layers import ELANBlock, MaxPoolAndStrideConvBlock

+from ..layers import MaxPoolAndStrideConvBlock

from .base_backbone import BaseBackbone

@@ -15,8 +16,7 @@ class YOLOv7Backbone(BaseBackbone):

"""Backbone used in YOLOv7.

Args:

- arch (str): Architecture of YOLOv7, from {P5, P6}.

- Defaults to P5.

+ arch (str): Architecture of YOLOv7Defaults to L.

deepen_factor (float): Depth multiplier, multiply number of

blocks in CSP layer by this amount. Defaults to 1.0.

widen_factor (float): Width multiplier, multiply number of

@@ -40,28 +40,107 @@ class YOLOv7Backbone(BaseBackbone):

init_cfg (:obj:`ConfigDict` or dict or list[dict] or

list[:obj:`ConfigDict`]): Initialization config dict.

"""

+ _tiny_stage1_cfg = dict(type='TinyDownSampleBlock', middle_ratio=0.5)

+ _tiny_stage2_4_cfg = dict(type='TinyDownSampleBlock', middle_ratio=1.0)

+ _l_expand_channel_2x = dict(

+ type='ELANBlock',

+ middle_ratio=0.5,

+ block_ratio=0.5,

+ num_blocks=2,

+ num_convs_in_block=2)

+ _l_no_change_channel = dict(

+ type='ELANBlock',

+ middle_ratio=0.25,

+ block_ratio=0.25,

+ num_blocks=2,

+ num_convs_in_block=2)

+ _x_expand_channel_2x = dict(

+ type='ELANBlock',

+ middle_ratio=0.4,

+ block_ratio=0.4,

+ num_blocks=3,

+ num_convs_in_block=2)

+ _x_no_change_channel = dict(

+ type='ELANBlock',

+ middle_ratio=0.2,

+ block_ratio=0.2,

+ num_blocks=3,

+ num_convs_in_block=2)

+ _w_no_change_channel = dict(

+ type='ELANBlock',

+ middle_ratio=0.5,

+ block_ratio=0.5,

+ num_blocks=2,

+ num_convs_in_block=2)

+ _e_no_change_channel = dict(

+ type='ELANBlock',

+ middle_ratio=0.4,

+ block_ratio=0.4,

+ num_blocks=3,

+ num_convs_in_block=2)

+ _d_no_change_channel = dict(

+ type='ELANBlock',

+ middle_ratio=1 / 3,

+ block_ratio=1 / 3,

+ num_blocks=4,

+ num_convs_in_block=2)

+ _e2e_no_change_channel = dict(

+ type='EELANBlock',

+ num_elan_block=2,

+ middle_ratio=0.4,

+ block_ratio=0.4,

+ num_blocks=3,

+ num_convs_in_block=2)

# From left to right:

- # in_channels, out_channels, ELAN mode

+ # in_channels, out_channels, Block_params

arch_settings = {

- 'P5': [[64, 128, 'expand_channel_2x'], [256, 512, 'expand_channel_2x'],

- [512, 1024, 'expand_channel_2x'],

- [1024, 1024, 'no_change_channel']]

+ 'Tiny': [[64, 64, _tiny_stage1_cfg], [64, 128, _tiny_stage2_4_cfg],

+ [128, 256, _tiny_stage2_4_cfg],

+ [256, 512, _tiny_stage2_4_cfg]],

+ 'L': [[64, 256, _l_expand_channel_2x],

+ [256, 512, _l_expand_channel_2x],

+ [512, 1024, _l_expand_channel_2x],

+ [1024, 1024, _l_no_change_channel]],

+ 'X': [[80, 320, _x_expand_channel_2x],

+ [320, 640, _x_expand_channel_2x],

+ [640, 1280, _x_expand_channel_2x],

+ [1280, 1280, _x_no_change_channel]],

+ 'W':

+ [[64, 128, _w_no_change_channel], [128, 256, _w_no_change_channel],

+ [256, 512, _w_no_change_channel], [512, 768, _w_no_change_channel],

+ [768, 1024, _w_no_change_channel]],

+ 'E':

+ [[80, 160, _e_no_change_channel], [160, 320, _e_no_change_channel],

+ [320, 640, _e_no_change_channel], [640, 960, _e_no_change_channel],

+ [960, 1280, _e_no_change_channel]],

+ 'D': [[96, 192,

+ _d_no_change_channel], [192, 384, _d_no_change_channel],

+ [384, 768, _d_no_change_channel],

+ [768, 1152, _d_no_change_channel],

+ [1152, 1536, _d_no_change_channel]],

+ 'E2E': [[80, 160, _e2e_no_change_channel],

+ [160, 320, _e2e_no_change_channel],

+ [320, 640, _e2e_no_change_channel],

+ [640, 960, _e2e_no_change_channel],

+ [960, 1280, _e2e_no_change_channel]],

}

def __init__(self,

- arch: str = 'P5',

- plugins: Union[dict, List[dict]] = None,

+ arch: str = 'L',

deepen_factor: float = 1.0,

widen_factor: float = 1.0,

input_channels: int = 3,

out_indices: Tuple[int] = (2, 3, 4),

frozen_stages: int = -1,

+ plugins: Union[dict, List[dict]] = None,

norm_cfg: ConfigType = dict(

type='BN', momentum=0.03, eps=0.001),

act_cfg: ConfigType = dict(type='SiLU', inplace=True),

norm_eval: bool = False,

init_cfg: OptMultiConfig = None):

+ assert arch in self.arch_settings.keys()

+ self.arch = arch

super().__init__(

self.arch_settings[arch],

deepen_factor,

@@ -77,31 +156,57 @@ def __init__(self,

def build_stem_layer(self) -> nn.Module:

"""Build a stem layer."""

- stem = nn.Sequential(

- ConvModule(

- 3,

- int(self.arch_setting[0][0] * self.widen_factor // 2),

+ if self.arch in ['L', 'X']:

+ stem = nn.Sequential(

+ ConvModule(

+ 3,

+ int(self.arch_setting[0][0] * self.widen_factor // 2),

+ 3,

+ padding=1,

+ stride=1,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg),

+ ConvModule(

+ int(self.arch_setting[0][0] * self.widen_factor // 2),

+ int(self.arch_setting[0][0] * self.widen_factor),

+ 3,

+ padding=1,

+ stride=2,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg),

+ ConvModule(

+ int(self.arch_setting[0][0] * self.widen_factor),

+ int(self.arch_setting[0][0] * self.widen_factor),

+ 3,

+ padding=1,

+ stride=1,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg))

+ elif self.arch == 'Tiny':

+ stem = nn.Sequential(

+ ConvModule(

+ 3,

+ int(self.arch_setting[0][0] * self.widen_factor // 2),

+ 3,

+ padding=1,

+ stride=2,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg),

+ ConvModule(

+ int(self.arch_setting[0][0] * self.widen_factor // 2),

+ int(self.arch_setting[0][0] * self.widen_factor),

+ 3,

+ padding=1,

+ stride=2,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg))

+ elif self.arch in ['W', 'E', 'D', 'E2E']:

+ stem = Focus(

3,

- padding=1,

- stride=1,

- norm_cfg=self.norm_cfg,

- act_cfg=self.act_cfg),

- ConvModule(

- int(self.arch_setting[0][0] * self.widen_factor // 2),

int(self.arch_setting[0][0] * self.widen_factor),

- 3,

- padding=1,

- stride=2,

+ kernel_size=3,

norm_cfg=self.norm_cfg,

- act_cfg=self.act_cfg),

- ConvModule(

- int(self.arch_setting[0][0] * self.widen_factor),

- int(self.arch_setting[0][0] * self.widen_factor),

- 3,

- padding=1,

- stride=1,

- norm_cfg=self.norm_cfg,

- act_cfg=self.act_cfg))

+ act_cfg=self.act_cfg)

return stem

def build_stage_layer(self, stage_idx: int, setting: list) -> list:

@@ -111,39 +216,70 @@ def build_stage_layer(self, stage_idx: int, setting: list) -> list:

stage_idx (int): The index of a stage layer.

setting (list): The architecture setting of a stage layer.

"""

- in_channels, out_channels, elan_mode = setting

-

+ in_channels, out_channels, stage_block_cfg = setting

in_channels = int(in_channels * self.widen_factor)

out_channels = int(out_channels * self.widen_factor)

+ stage_block_cfg = stage_block_cfg.copy()

+ stage_block_cfg.setdefault('norm_cfg', self.norm_cfg)

+ stage_block_cfg.setdefault('act_cfg', self.act_cfg)

+

+ stage_block_cfg['in_channels'] = in_channels

+ stage_block_cfg['out_channels'] = out_channels

+

stage = []

- if stage_idx == 0:

- pre_layer = ConvModule(

+ if self.arch in ['W', 'E', 'D', 'E2E']:

+ stage_block_cfg['in_channels'] = out_channels

+ elif self.arch in ['L', 'X']:

+ if stage_idx == 0:

+ stage_block_cfg['in_channels'] = out_channels // 2

+

+ downsample_layer = self._build_downsample_layer(

+ stage_idx, in_channels, out_channels)

+ stage.append(MODELS.build(stage_block_cfg))

+ if downsample_layer is not None:

+ stage.insert(0, downsample_layer)

+ return stage

+

+ def _build_downsample_layer(self, stage_idx: int, in_channels: int,

+ out_channels: int) -> Optional[nn.Module]:

+ """Build a downsample layer pre stage."""

+ if self.arch in ['E', 'D', 'E2E']:

+ downsample_layer = MaxPoolAndStrideConvBlock(

in_channels,

out_channels,

- 3,

- stride=2,

- padding=1,

- norm_cfg=self.norm_cfg,

- act_cfg=self.act_cfg)

- elan_layer = ELANBlock(

- out_channels,

- mode=elan_mode,

- num_blocks=2,

+ use_in_channels_of_middle=True,

norm_cfg=self.norm_cfg,

act_cfg=self.act_cfg)

- stage.extend([pre_layer, elan_layer])

- else:

- pre_layer = MaxPoolAndStrideConvBlock(

+ elif self.arch == 'W':

+ downsample_layer = ConvModule(

in_channels,

- mode='reduce_channel_2x',

- norm_cfg=self.norm_cfg,

- act_cfg=self.act_cfg)

- elan_layer = ELANBlock(

- in_channels,

- mode=elan_mode,

- num_blocks=2,

+ out_channels,

+ 3,

+ stride=2,

+ padding=1,

norm_cfg=self.norm_cfg,

act_cfg=self.act_cfg)

- stage.extend([pre_layer, elan_layer])

- return stage

+ elif self.arch == 'Tiny':

+ if stage_idx != 0:

+ downsample_layer = nn.MaxPool2d(2, 2)

+ else:

+ downsample_layer = None

+ elif self.arch in ['L', 'X']:

+ if stage_idx == 0:

+ downsample_layer = ConvModule(

+ in_channels,

+ out_channels // 2,

+ 3,

+ stride=2,

+ padding=1,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg)

+ else:

+ downsample_layer = MaxPoolAndStrideConvBlock(

+ in_channels,

+ in_channels,

+ use_in_channels_of_middle=False,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg)

+ return downsample_layer

diff --git a/mmyolo/models/dense_heads/__init__.py b/mmyolo/models/dense_heads/__init__.py

index 469880688..57fd668c0 100644

--- a/mmyolo/models/dense_heads/__init__.py

+++ b/mmyolo/models/dense_heads/__init__.py

@@ -3,11 +3,12 @@

from .rtmdet_head import RTMDetHead, RTMDetSepBNHeadModule

from .yolov5_head import YOLOv5Head, YOLOv5HeadModule

from .yolov6_head import YOLOv6Head, YOLOv6HeadModule

-from .yolov7_head import YOLOv7Head

+from .yolov7_head import YOLOv7Head, YOLOv7HeadModule, YOLOv7p6HeadModule

from .yolox_head import YOLOXHead, YOLOXHeadModule

__all__ = [

'YOLOv5Head', 'YOLOv6Head', 'YOLOXHead', 'YOLOv5HeadModule',

'YOLOv6HeadModule', 'YOLOXHeadModule', 'RTMDetHead',

- 'RTMDetSepBNHeadModule', 'YOLOv7Head', 'PPYOLOEHead', 'PPYOLOEHeadModule'

+ 'RTMDetSepBNHeadModule', 'YOLOv7Head', 'PPYOLOEHead', 'PPYOLOEHeadModule',

+ 'YOLOv7HeadModule', 'YOLOv7p6HeadModule'

]

diff --git a/mmyolo/models/dense_heads/yolov5_head.py b/mmyolo/models/dense_heads/yolov5_head.py

index 50115bbab..57913ca6e 100644

--- a/mmyolo/models/dense_heads/yolov5_head.py

+++ b/mmyolo/models/dense_heads/yolov5_head.py

@@ -167,6 +167,7 @@ def __init__(self,

reduction='mean',

loss_weight=1.0),

prior_match_thr: float = 4.0,

+ near_neighbor_thr: float = 0.5,

obj_level_weights: List[float] = [4.0, 1.0, 0.4],

train_cfg: OptConfigType = None,

test_cfg: OptConfigType = None,

@@ -192,6 +193,7 @@ def __init__(self,

self.featmap_sizes = [torch.empty(1)] * self.num_levels

self.prior_match_thr = prior_match_thr

+ self.near_neighbor_thr = near_neighbor_thr

self.obj_level_weights = obj_level_weights

self.special_init()

@@ -231,7 +233,7 @@ def special_init(self):

[0, 1], # up

[-1, 0], # right

[0, -1], # bottom

- ]).float() * 0.5

+ ]).float()

self.register_buffer(

'grid_offset', grid_offset[:, None], persistent=False)

@@ -534,9 +536,10 @@ def loss_by_feat(

# them as positive samples as well.

batch_targets_cxcy = batch_targets_scaled[:, 2:4]

grid_xy = scaled_factor[[2, 3]] - batch_targets_cxcy

- left, up = ((batch_targets_cxcy % 1 < 0.5) &

+ left, up = ((batch_targets_cxcy % 1 < self.near_neighbor_thr) &

(batch_targets_cxcy > 1)).T

- right, bottom = ((grid_xy % 1 < 0.5) & (grid_xy > 1)).T

+ right, bottom = ((grid_xy % 1 < self.near_neighbor_thr) &

+ (grid_xy > 1)).T

offset_inds = torch.stack(

(torch.ones_like(left), left, up, right, bottom))

@@ -552,7 +555,8 @@ def loss_by_feat(

priors_inds, (img_inds, class_inds) = priors_inds.long().view(

-1), img_class_inds.long().T

- grid_xy_long = (grid_xy - retained_offsets).long()

+ grid_xy_long = (grid_xy -

+ retained_offsets * self.near_neighbor_thr).long()

grid_x_inds, grid_y_inds = grid_xy_long.T

bboxes_targets = torch.cat((grid_xy - grid_xy_long, grid_wh), 1)

diff --git a/mmyolo/models/dense_heads/yolov6_head.py b/mmyolo/models/dense_heads/yolov6_head.py

index cf56ea405..b2581ef5f 100644

--- a/mmyolo/models/dense_heads/yolov6_head.py

+++ b/mmyolo/models/dense_heads/yolov6_head.py

@@ -14,7 +14,6 @@

from torch import Tensor

from mmyolo.registry import MODELS, TASK_UTILS

-from ..utils import make_divisible

from .yolov5_head import YOLOv5Head

@@ -31,7 +30,7 @@ class YOLOv6HeadModule(BaseModule):

feature map.

widen_factor (float): Width multiplier, multiply number of

channels in each layer by this amount. Default: 1.0.

- num_base_priors:int: The number of priors (points) at a point

+ num_base_priors: (int): The number of priors (points) at a point

on the feature grid.

featmap_strides (Sequence[int]): Downsample factor of each feature map.

Defaults to [8, 16, 32].

@@ -65,12 +64,10 @@ def __init__(self,

self.act_cfg = act_cfg

if isinstance(in_channels, int):

- self.in_channels = [make_divisible(in_channels, widen_factor)

+ self.in_channels = [int(in_channels * widen_factor)

] * self.num_levels

else:

- self.in_channels = [

- make_divisible(i, widen_factor) for i in in_channels

- ]

+ self.in_channels = [int(i * widen_factor) for i in in_channels]

self._init_layers()

@@ -380,7 +377,7 @@ def loss_by_feat(

loss_cls=loss_cls * world_size, loss_bbox=loss_bbox * world_size)

@staticmethod

- def gt_instances_preprocess(batch_gt_instances: Tensor,

+ def gt_instances_preprocess(batch_gt_instances: Union[Tensor, Sequence],

batch_size: int) -> Tensor:

"""Split batch_gt_instances with batch size, from [all_gt_bboxes, 6]

to.

@@ -396,28 +393,51 @@ def gt_instances_preprocess(batch_gt_instances: Tensor,

Returns:

Tensor: batch gt instances data, shape [batch_size, number_gt, 5]

"""

-

- # sqlit batch gt instance [all_gt_bboxes, 6] ->

- # [batch_size, number_gt_each_batch, 5]

- batch_instance_list = []

- max_gt_bbox_len = 0

- for i in range(batch_size):

- single_batch_instance = \

- batch_gt_instances[batch_gt_instances[:, 0] == i, :]

- single_batch_instance = single_batch_instance[:, 1:]

- batch_instance_list.append(single_batch_instance)

- if len(single_batch_instance) > max_gt_bbox_len:

- max_gt_bbox_len = len(single_batch_instance)

-

- # fill [-1., 0., 0., 0., 0.] if some shape of

- # single batch not equal max_gt_bbox_len

- for index, gt_instance in enumerate(batch_instance_list):

- if gt_instance.shape[0] >= max_gt_bbox_len:

- continue

- fill_tensor = batch_gt_instances.new_full(

- [max_gt_bbox_len - gt_instance.shape[0], 5], 0)

- fill_tensor[:, 0] = -1.

- batch_instance_list[index] = torch.cat(

- (batch_instance_list[index], fill_tensor), dim=0)

-

- return torch.stack(batch_instance_list)

+ if isinstance(batch_gt_instances, Sequence):

+ max_gt_bbox_len = max(

+ [len(gt_instances) for gt_instances in batch_gt_instances])

+ # fill [-1., 0., 0., 0., 0.] if some shape of

+ # single batch not equal max_gt_bbox_len

+ batch_instance_list = []

+ for index, gt_instance in enumerate(batch_gt_instances):

+ bboxes = gt_instance.bboxes

+ labels = gt_instance.labels

+ batch_instance_list.append(

+ torch.cat((labels[:, None], bboxes), dim=-1))

+

+ if bboxes.shape[0] >= max_gt_bbox_len:

+ continue

+

+ fill_tensor = bboxes.new_full(

+ [max_gt_bbox_len - bboxes.shape[0], 5], 0)

+ fill_tensor[:, 0] = -1.

+ batch_instance_list[index] = torch.cat(

+ (batch_instance_list[-1], fill_tensor), dim=0)

+

+ return torch.stack(batch_instance_list)

+ else:

+ # faster version

+ # sqlit batch gt instance [all_gt_bboxes, 6] ->

+ # [batch_size, number_gt_each_batch, 5]

+ batch_instance_list = []

+ max_gt_bbox_len = 0

+ for i in range(batch_size):

+ single_batch_instance = \

+ batch_gt_instances[batch_gt_instances[:, 0] == i, :]

+ single_batch_instance = single_batch_instance[:, 1:]

+ batch_instance_list.append(single_batch_instance)

+ if len(single_batch_instance) > max_gt_bbox_len:

+ max_gt_bbox_len = len(single_batch_instance)

+

+ # fill [-1., 0., 0., 0., 0.] if some shape of

+ # single batch not equal max_gt_bbox_len

+ for index, gt_instance in enumerate(batch_instance_list):

+ if gt_instance.shape[0] >= max_gt_bbox_len:

+ continue

+ fill_tensor = batch_gt_instances.new_full(

+ [max_gt_bbox_len - gt_instance.shape[0], 5], 0)

+ fill_tensor[:, 0] = -1.

+ batch_instance_list[index] = torch.cat(

+ (batch_instance_list[index], fill_tensor), dim=0)

+

+ return torch.stack(batch_instance_list)

diff --git a/mmyolo/models/dense_heads/yolov7_head.py b/mmyolo/models/dense_heads/yolov7_head.py

index 532c86434..80e6aadd2 100644

--- a/mmyolo/models/dense_heads/yolov7_head.py

+++ b/mmyolo/models/dense_heads/yolov7_head.py

@@ -1,84 +1,210 @@

# Copyright (c) OpenMMLab. All rights reserved.

-from typing import Sequence

+import math

+from typing import List, Optional, Sequence, Tuple, Union

+import torch

import torch.nn as nn

-from mmdet.utils import (ConfigType, OptConfigType, OptInstanceList,

- OptMultiConfig)

+from mmcv.cnn import ConvModule

+from mmdet.models.utils import multi_apply

+from mmdet.utils import ConfigType, OptInstanceList

+from mmengine.dist import get_dist_info

from mmengine.structures import InstanceData

from torch import Tensor

from mmyolo.registry import MODELS

-from .yolov5_head import YOLOv5Head

+from ..layers import ImplicitA, ImplicitM

+from ..task_modules.assigners.batch_yolov7_assigner import BatchYOLOv7Assigner

+from .yolov5_head import YOLOv5Head, YOLOv5HeadModule

+

+

+@MODELS.register_module()

+class YOLOv7HeadModule(YOLOv5HeadModule):

+ """YOLOv7Head head module used in YOLOv7."""

+

+ def _init_layers(self):

+ """initialize conv layers in YOLOv7 head."""

+ self.convs_pred = nn.ModuleList()

+ for i in range(self.num_levels):

+ conv_pred = nn.Sequential(

+ ImplicitA(self.in_channels[i]),

+ nn.Conv2d(self.in_channels[i],

+ self.num_base_priors * self.num_out_attrib, 1),

+ ImplicitM(self.num_base_priors * self.num_out_attrib),

+ )

+ self.convs_pred.append(conv_pred)

+

+ def init_weights(self):

+ """Initialize the bias of YOLOv7 head."""

+ super(YOLOv5HeadModule, self).init_weights()

+ for mi, s in zip(self.convs_pred, self.featmap_strides): # from

+ mi = mi[1] # nn.Conv2d

+

+ b = mi.bias.data.view(3, -1)

+ # obj (8 objects per 640 image)

+ b.data[:, 4] += math.log(8 / (640 / s)**2)

+ b.data[:, 5:] += math.log(0.6 / (self.num_classes - 0.99))

+

+ mi.bias.data = b.view(-1)

+

+

+@MODELS.register_module()

+class YOLOv7p6HeadModule(YOLOv5HeadModule):

+ """YOLOv7Head head module used in YOLOv7."""

+

+ def __init__(self,

+ *args,

+ main_out_channels: Sequence[int] = [256, 512, 768, 1024],

+ aux_out_channels: Sequence[int] = [320, 640, 960, 1280],

+ use_aux: bool = True,

+ norm_cfg: ConfigType = dict(

+ type='BN', momentum=0.03, eps=0.001),

+ act_cfg: ConfigType = dict(type='SiLU', inplace=True),

+ **kwargs):

+ self.main_out_channels = main_out_channels

+ self.aux_out_channels = aux_out_channels

+ self.use_aux = use_aux

+ self.norm_cfg = norm_cfg

+ self.act_cfg = act_cfg

+ super().__init__(*args, **kwargs)

+

+ def _init_layers(self):

+ """initialize conv layers in YOLOv7 head."""

+ self.main_convs_pred = nn.ModuleList()

+ for i in range(self.num_levels):

+ conv_pred = nn.Sequential(

+ ConvModule(

+ self.in_channels[i],

+ self.main_out_channels[i],

+ 3,

+ padding=1,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg),

+ ImplicitA(self.main_out_channels[i]),

+ nn.Conv2d(self.main_out_channels[i],

+ self.num_base_priors * self.num_out_attrib, 1),

+ ImplicitM(self.num_base_priors * self.num_out_attrib),

+ )

+ self.main_convs_pred.append(conv_pred)

+

+ if self.use_aux:

+ self.aux_convs_pred = nn.ModuleList()

+ for i in range(self.num_levels):

+ aux_pred = nn.Sequential(

+ ConvModule(

+ self.in_channels[i],

+ self.aux_out_channels[i],

+ 3,

+ padding=1,

+ norm_cfg=self.norm_cfg,

+ act_cfg=self.act_cfg),

+ nn.Conv2d(self.aux_out_channels[i],

+ self.num_base_priors * self.num_out_attrib, 1))

+ self.aux_convs_pred.append(aux_pred)

+ else:

+ self.aux_convs_pred = [None] * len(self.main_convs_pred)

+

+ def init_weights(self):

+ """Initialize the bias of YOLOv5 head."""

+ super(YOLOv5HeadModule, self).init_weights()

+ for mi, aux, s in zip(self.main_convs_pred, self.aux_convs_pred,

+ self.featmap_strides): # from

+ mi = mi[2] # nn.Conv2d

+ b = mi.bias.data.view(3, -1)

+ # obj (8 objects per 640 image)

+ b.data[:, 4] += math.log(8 / (640 / s)**2)

+ b.data[:, 5:] += math.log(0.6 / (self.num_classes - 0.99))

+ mi.bias.data = b.view(-1)

+

+ if self.use_aux:

+ aux = aux[1] # nn.Conv2d

+ b = aux.bias.data.view(3, -1)

+ # obj (8 objects per 640 image)

+ b.data[:, 4] += math.log(8 / (640 / s)**2)

+ b.data[:, 5:] += math.log(0.6 / (self.num_classes - 0.99))

+ mi.bias.data = b.view(-1)

+

+ def forward(self, x: Tuple[Tensor]) -> Tuple[List]:

+ """Forward features from the upstream network.

+

+ Args:

+ x (Tuple[Tensor]): Features from the upstream network, each is

+ a 4D-tensor.

+ Returns:

+ Tuple[List]: A tuple of multi-level classification scores, bbox

+ predictions, and objectnesses.

+ """

+ assert len(x) == self.num_levels

+ return multi_apply(self.forward_single, x, self.main_convs_pred,

+ self.aux_convs_pred)

+

+ def forward_single(self, x: Tensor, convs: nn.Module,

+ aux_convs: Optional[nn.Module]) \

+ -> Tuple[Union[Tensor, List], Union[Tensor, List],

+ Union[Tensor, List]]:

+ """Forward feature of a single scale level."""

+

+ pred_map = convs(x)

+ bs, _, ny, nx = pred_map.shape

+ pred_map = pred_map.view(bs, self.num_base_priors, self.num_out_attrib,

+ ny, nx)

+

+ cls_score = pred_map[:, :, 5:, ...].reshape(bs, -1, ny, nx)

+ bbox_pred = pred_map[:, :, :4, ...].reshape(bs, -1, ny, nx)

+ objectness = pred_map[:, :, 4:5, ...].reshape(bs, -1, ny, nx)

+

+ if not self.training or not self.use_aux:

+ return cls_score, bbox_pred, objectness

+ else:

+ aux_pred_map = aux_convs(x)

+ aux_pred_map = aux_pred_map.view(bs, self.num_base_priors,

+ self.num_out_attrib, ny, nx)

+ aux_cls_score = aux_pred_map[:, :, 5:, ...].reshape(bs, -1, ny, nx)

+ aux_bbox_pred = aux_pred_map[:, :, :4, ...].reshape(bs, -1, ny, nx)

+ aux_objectness = aux_pred_map[:, :, 4:5,

+ ...].reshape(bs, -1, ny, nx)

+

+ return [cls_score,

+ aux_cls_score], [bbox_pred, aux_bbox_pred

+ ], [objectness, aux_objectness]

-# Training mode is currently not supported

@MODELS.register_module()

class YOLOv7Head(YOLOv5Head):

"""YOLOv7Head head used in `YOLOv7

`_.

Args:

- head_module(nn.Module): Base module used for YOLOv6Head

- prior_generator(dict): Points generator feature maps

- in 2D points-based detectors.

- loss_cls (:obj:`ConfigDict` or dict): Config of classification loss.

- loss_bbox (:obj:`ConfigDict` or dict): Config of localization loss.

- loss_obj (:obj:`ConfigDict` or dict): Config of objectness loss.

- train_cfg (:obj:`ConfigDict` or dict, optional): Training config of

- anchor head. Defaults to None.

- test_cfg (:obj:`ConfigDict` or dict, optional): Testing config of

- anchor head. Defaults to None.

- init_cfg (:obj:`ConfigDict` or list[:obj:`ConfigDict`] or dict or

- list[dict], optional): Initialization config dict.

- Defaults to None.

+ simota_candidate_topk (int): The candidate top-k which used to

+ get top-k ious to calculate dynamic-k in BatchYOLOv7Assigner.

+ Defaults to 10.

+ simota_iou_weight (float): The scale factor for regression

+ iou cost in BatchYOLOv7Assigner. Defaults to 3.0.

+ simota_cls_weight (float): The scale factor for classification

+ cost in BatchYOLOv7Assigner. Defaults to 1.0.

"""

def __init__(self,

- head_module: nn.Module,

- prior_generator: ConfigType = dict(

- type='mmdet.YOLOAnchorGenerator',

- base_sizes=[[(10, 13), (16, 30), (33, 23)],

- [(30, 61), (62, 45), (59, 119)],

- [(116, 90), (156, 198), (373, 326)]],

- strides=[8, 16, 32]),

- bbox_coder: ConfigType = dict(type='YOLOv5BBoxCoder'),

- loss_cls: ConfigType = dict(

- type='mmdet.CrossEntropyLoss',

- use_sigmoid=True,

- reduction='sum',

- loss_weight=1.0),

- loss_bbox: ConfigType = dict(

- type='mmdet.GIoULoss', reduction='sum', loss_weight=5.0),

- loss_obj: ConfigType = dict(

- type='mmdet.CrossEntropyLoss',

- use_sigmoid=True,

- reduction='sum',

- loss_weight=1.0),

- train_cfg: OptConfigType = None,

- test_cfg: OptConfigType = None,

- init_cfg: OptMultiConfig = None):

- super().__init__(

- head_module=head_module,

- prior_generator=prior_generator,

- bbox_coder=bbox_coder,

- loss_cls=loss_cls,

- loss_bbox=loss_bbox,

- loss_obj=loss_obj,

- train_cfg=train_cfg,

- test_cfg=test_cfg,

- init_cfg=init_cfg)

-

- def special_init(self):

- """Since YOLO series algorithms will inherit from YOLOv5Head, but

- different algorithms have special initialization process.

-

- The special_init function is designed to deal with this situation.

- """

- pass

+ *args,

+ simota_candidate_topk: int = 20,

+ simota_iou_weight: float = 3.0,

+ simota_cls_weight: float = 1.0,