
| | |
| --- | --- |
| Start Date | 22 Oct 2024 |
| Description | XCM Asset Metadata definition and a way of communicating it via XCM |
| Authors | Daniel Shiposha |

This RFC proposes a metadata format for XCM-identifiable assets (i.e., for fungible/non-fungible collections and non-fungible tokens) and a set of instructions to communicate it across chains.

Currently, there is no way to communicate metadata of an asset (or an asset instance) via XCM.

The ability to query and modify the metadata is useful for two kinds of entities:

Asset collections (both fungible and nonfungible).

Any collection has some metadata, such as the name of the collection. A standard way of communicating metadata could help with registering foreign assets within a consensus system. Therefore, this RFC could complement or supersede the RFC for initializing fully-backed derivatives (note that this RFC is related to the old XCM RFC process; it's not the Fellowship RFC and hasn't been migrated yet).

NFTs (i.e., asset instances).

Metadata is the crucial aspect of any nonfungible object since metadata assigns meaning to such an object. The metadata for NFTs is just as important as the notion of "amount" for fungibles (there is no sense in fungibles if they have no amount).

An NFT is always a representation of some object. The metadata describes the object represented by the NFT.

NFTs can be transferred to another chain via XCM. However, there are limitations due to the inability to communicate their metadata:

Besides metadata modification, the ability to read it is also valuable. On-chain logic can interpret the NFT metadata, i.e., the metadata could have not only the media meaning but also a utility function within a consensus system. Currently, such a way of using NFT metadata is possible only within one consensus system. This RFC proposes making it possible between different systems via XCM so different chains can fetch and analyze the asset metadata from other chains.

Runtime users, Runtime devs, Cross-chain dApps, Wallets.

The Asset Metadata is information bound to an asset class (fungible or NFT collection) or an asset instance (an NFT). The Asset Metadata could be represented differently on different chains (or in other consensus entities). However, to communicate metadata between consensus entities via XCM, we need a general format so that any consensus entity can make sense of such information.

We can name this format "XCM Asset Metadata".

This RFC proposes:

Using key-value pairs as XCM Asset Metadata since it is a general concept useful for both structured and unstructured data. Both key and value can be raw bytes with interpretation up to the communicating entities.

The XCM Asset Metadata should be represented as a map whose SCALE encoding is equivalent to that of BTreeMap<Vec<u8>, Vec<u8>>.

As such, the XCM Asset Metadata types are defined as follows:

```rust
use std::collections::BTreeMap;

type MetadataKey = Vec<u8>;
type MetadataValue = Vec<u8>;
type MetadataMap = BTreeMap<MetadataKey, MetadataValue>;
```
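To make "SCALE encoding equivalent to that of BTreeMap<Vec<u8>, Vec<u8>>" concrete, the sketch below hand-rolls the relevant parts of SCALE: a compact entry count, then each key and value as a compact-length-prefixed byte string, in ascending key order (which BTreeMap iteration already guarantees). It avoids the parity-scale-codec crate only to stay self-contained; a real runtime would use the codec's own `Encode` implementation. The function names are illustrative, not part of this RFC.

```rust
use std::collections::BTreeMap;

type MetadataKey = Vec<u8>;
type MetadataValue = Vec<u8>;
type MetadataMap = BTreeMap<MetadataKey, MetadataValue>;

/// Appends the SCALE compact encoding of `n` to `out`.
fn encode_compact(n: u64, out: &mut Vec<u8>) {
    if n < 1 << 6 {
        // Single-byte mode: value in the upper six bits, mode bits 0b00.
        out.push((n as u8) << 2);
    } else if n < 1 << 14 {
        // Two-byte little-endian mode, mode bits 0b01.
        out.extend_from_slice(&(((n as u16) << 2) | 0b01).to_le_bytes());
    } else if n < 1 << 30 {
        // Four-byte little-endian mode, mode bits 0b10.
        out.extend_from_slice(&(((n as u32) << 2) | 0b10).to_le_bytes());
    } else {
        // Big-integer mode: upper six bits hold (byte count - 4), mode bits 0b11,
        // followed by the minimal little-endian bytes of `n`.
        let len = 8 - (n.leading_zeros() / 8) as usize;
        out.push((((len - 4) as u8) << 2) | 0b11);
        out.extend_from_slice(&n.to_le_bytes()[..len]);
    }
}

/// Encodes a byte string the way SCALE encodes `Vec<u8>`: compact length, then the bytes.
fn encode_bytes(bytes: &[u8], out: &mut Vec<u8>) {
    encode_compact(bytes.len() as u64, out);
    out.extend_from_slice(bytes);
}

/// Encodes the map the way SCALE encodes `BTreeMap<Vec<u8>, Vec<u8>>`:
/// compact entry count, then every (key, value) pair in ascending key order.
fn encode_metadata_map(map: &MetadataMap) -> Vec<u8> {
    let mut out = Vec::new();
    encode_compact(map.len() as u64, &mut out);
    for (key, value) in map {
        encode_bytes(key, &mut out);
        encode_bytes(value, &mut out);
    }
    out
}
```

For example, a map holding only `name => Kitty` encodes as `[4, 16, 'n', 'a', 'm', 'e', 20, 'K', 'i', 't', 't', 'y']`: one entry, a 4-byte key, and a 5-byte value.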

Communicating only the demanded part of the metadata, not the whole metadata.

A consensus entity should be able to query the values of keys of interest to read the metadata.

We need a set-like type to specify the keys to read: a type whose SCALE encoding is equivalent to that of BTreeSet<Vec<u8>>.

Let's define that type as follows:

```rust
use std::collections::BTreeSet;

type MetadataKeySet = BTreeSet<MetadataKey>;
```

A consensus entity should be able to write the values for specified keys.
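Reading only the demanded keys and writing values for specified keys can be sketched over an in-memory MetadataMap. Two choices here are assumptions, since the RFC leaves them to implementations: requested keys that are absent are simply omitted from the result, and a write overwrites any existing value for the same key.

```rust
use std::collections::{BTreeMap, BTreeSet};

type MetadataKey = Vec<u8>;
type MetadataValue = Vec<u8>;
type MetadataMap = BTreeMap<MetadataKey, MetadataValue>;
type MetadataKeySet = BTreeSet<MetadataKey>;

/// Returns only the demanded entries (assumption: missing keys are omitted).
fn read_values(metadata: &MetadataMap, keys: &MetadataKeySet) -> MetadataMap {
    keys.iter()
        .filter_map(|key| metadata.get(key).map(|value| (key.clone(), value.clone())))
        .collect()
}

/// Writes values for the specified keys, overwriting any previous values.
fn write_values(metadata: &mut MetadataMap, updates: MetadataMap) {
    metadata.extend(updates);
}
```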

New XCM instructions to communicate the metadata.

Note: the maximum lengths of MetadataKey, MetadataValue, MetadataMap, and MetadataKeySet are implementation-defined.

ReportMetadata

The ReportMetadata is a new instruction to query metadata information.

It can be used to query the metadata key list or the values of keys of interest.

This instruction allows querying the metadata of:

If the asset (or asset instance) for which the query is made doesn't exist, Response::Null should be reported via the existing QueryResponse instruction.

The ReportMetadata can be used without origin (i.e., following the ClearOrigin instruction) since it only reads state.

Safety: The reporter origin should be trusted to hold the true metadata. If the reserve-based model is considered, the asset's reserve location must be viewed as the only source of truth about the metadata.

This instruction is intended for cases where a consensus entity uses the metadata of a foreign asset (or asset instance) in its own logic.

```rust
/// An instruction to query metadata of an asset or an asset instance.
ReportMetadata {
    /// The ID of an asset (a collection, fungible or nonfungible).
    asset_id: AssetId,

    /// The ID of an asset instance.
    ///
    /// If the value is `Undefined`, the metadata of the collection is reported.
    instance: AssetInstance,

    /// See `MetadataQueryKind` below.
    query_kind: MetadataQueryKind,

    /// The usual field for Report<something> XCM instructions.
    ///
    /// Information regarding the query response.
    /// The `QueryResponseInfo` type is already defined in the XCM spec.
    response_info: QueryResponseInfo,
}
```

Where the MetadataQueryKind is:

```rust
enum MetadataQueryKind {
    /// Query metadata key set.
    KeySet,

    /// Query values of the specified keys.
    Values(MetadataKeySet),
}
```

The ReportMetadata works in conjunction with the existing QueryResponse instruction. The Response type should be modified accordingly: we need to add a new AssetMetadata variant to it.

```rust
/// The enum used in the existing `QueryResponse` instruction.
pub enum Response {
    // ... snip, existing variants ...

    /// The metadata info.
    AssetMetadata {
        /// The ID of an asset (a collection, fungible or nonfungible).
        asset_id: AssetId,

        /// The ID of an asset instance.
        ///
        /// If the value is `Undefined`, the reported metadata is related to the collection, not a token.
        instance: AssetInstance,

        /// See `MetadataResponseData` below.
        data: MetadataResponseData,
    }
}

pub enum MetadataResponseData {
    /// The metadata key list to be reported
    /// in response to the `KeySet` metadata query kind.
    KeySet(MetadataKeySet),

    /// The values of the keys that were specified in the
    /// `Values` variant of the metadata query kind.
    Values(MetadataMap),
}
```
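A minimal sketch of how a chain might serve ReportMetadata from local storage and build the Response::AssetMetadata value. `AssetId` and `AssetInstance` here are simplified, hypothetical stand-ins for the richer XCM types, and `Response` keeps only the two variants used. Storage is modelled as a BTreeMap keyed by `(asset_id, instance)`, where `AssetInstance::Undefined` holds the collection's own metadata per this RFC's convention. Omitting missing keys from a `Values` reply is an assumption, not something the RFC specifies.

```rust
use std::collections::{BTreeMap, BTreeSet};

type MetadataKey = Vec<u8>;
type MetadataMap = BTreeMap<MetadataKey, Vec<u8>>;
type MetadataKeySet = BTreeSet<MetadataKey>;

// Hypothetical, simplified stand-ins for the real XCM types.
type AssetId = u32;
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
enum AssetInstance {
    Undefined,
    Index(u128),
}

enum MetadataQueryKind {
    KeySet,
    Values(MetadataKeySet),
}

#[derive(Debug, PartialEq)]
enum MetadataResponseData {
    KeySet(MetadataKeySet),
    Values(MetadataMap),
}

#[derive(Debug, PartialEq)]
enum Response {
    Null,
    AssetMetadata { asset_id: AssetId, instance: AssetInstance, data: MetadataResponseData },
}

/// Local metadata storage; `(id, Undefined)` holds the collection's metadata.
type Store = BTreeMap<(AssetId, AssetInstance), MetadataMap>;

fn report_metadata(
    store: &Store,
    asset_id: AssetId,
    instance: AssetInstance,
    query_kind: MetadataQueryKind,
) -> Response {
    let Some(metadata) = store.get(&(asset_id, instance)) else {
        // The asset (or asset instance) does not exist: report `Response::Null`.
        return Response::Null;
    };
    let data = match query_kind {
        MetadataQueryKind::KeySet => {
            MetadataResponseData::KeySet(metadata.keys().cloned().collect())
        }
        MetadataQueryKind::Values(keys) => MetadataResponseData::Values(
            keys.into_iter()
                .filter_map(|k| metadata.get(&k).map(|v| (k.clone(), v.clone())))
                .collect(),
        ),
    };
    Response::AssetMetadata { asset_id, instance, data }
}
```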

ModifyMetadata

The ModifyMetadata is a new instruction to request a remote chain to modify the values of the specified keys.

This instruction can be used to update the metadata of a collection (fungible or nonfungible) or of an NFT.

The remote chain handles the modification request and may reject it based on its internal rules. The request can only be executed or rejected in its entirety. It must not be executed partially.

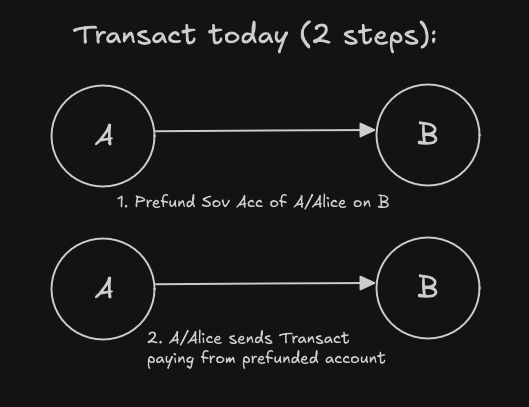

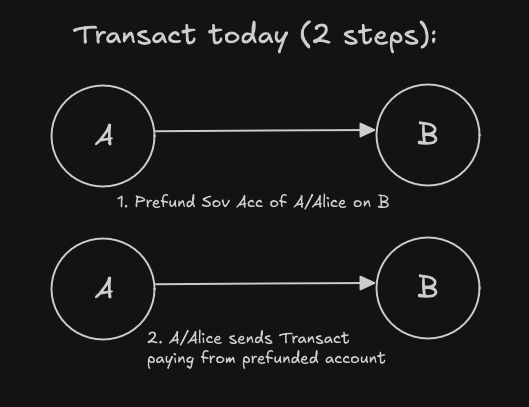

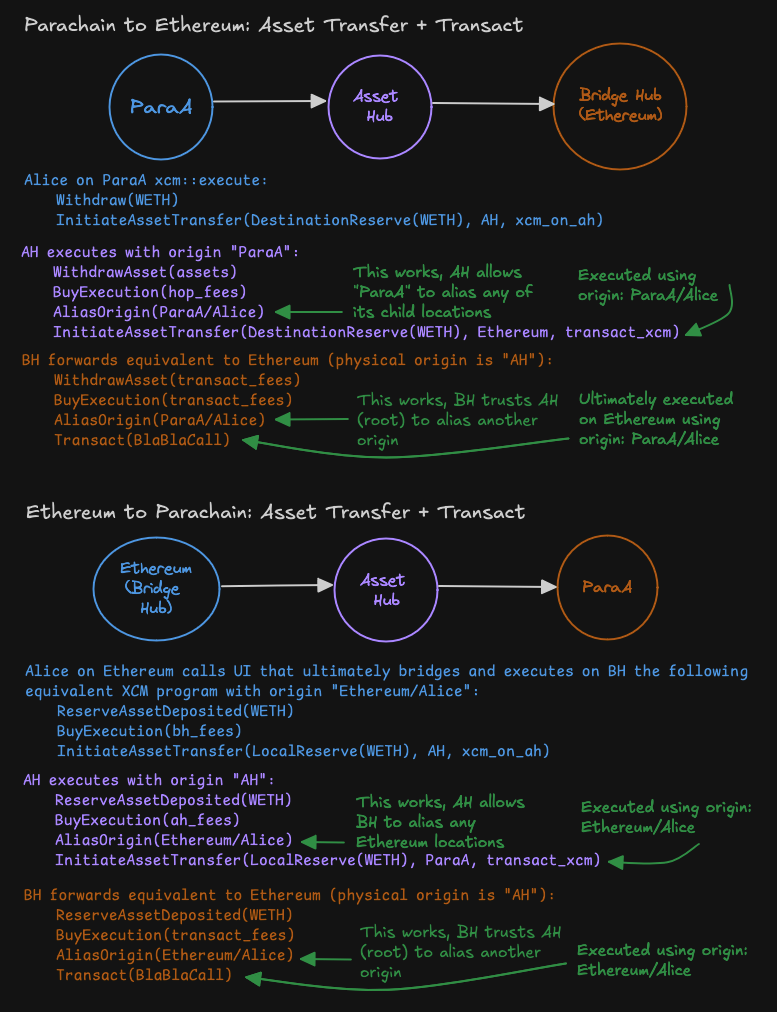

To execute the ModifyMetadata, an origin is required so that the handling logic can authorize the metadata modification request from a known source. Since this instruction requires an origin, the assets used to cover the execution fees must be transferred in a way that preserves the origin. For instance, one can use the approach described in RFC #122 if the handling chain has configured its aliasing rules accordingly.

An example use case of this instruction is asking the reserve location of the asset to modify the metadata, so that the original asset's metadata is updated according to the reserve location's rules.

```rust
ModifyMetadata {
    /// The ID of an asset (a collection, fungible or nonfungible).
    asset_id: AssetId,

    /// The ID of an asset instance.
    ///
    /// If the value is `Undefined`, the modification request targets the collection, not a token.
    instance: AssetInstance,

    /// The map contains the keys mapped to the requested new values.
    modification: MetadataMap,
}
```
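The all-or-nothing rule for ModifyMetadata can be met with a validate-then-apply pattern: check every entry of `modification` against the chain's rules first, and write only once all checks pass. The two rules below (read-only keys and a maximum value length) and the `ModifyError` type are hypothetical examples of a chain's "internal rules"; a real chain would define its own.

```rust
use std::collections::BTreeMap;

type MetadataMap = BTreeMap<Vec<u8>, Vec<u8>>;

/// Hypothetical rejection reasons a chain might use.
#[derive(Debug, PartialEq)]
enum ModifyError {
    ReadOnlyKey(Vec<u8>),
    ValueTooLong(Vec<u8>),
}

/// Applies `modification` atomically: every entry is validated before any is written,
/// so the request is either executed in full or rejected in full.
fn modify_metadata(
    metadata: &mut MetadataMap,
    modification: MetadataMap,
    read_only: &[&[u8]],
    max_value_len: usize,
) -> Result<(), ModifyError> {
    // Phase 1: validate all entries without touching storage.
    for (key, value) in &modification {
        if read_only.iter().any(|ro| *ro == key.as_slice()) {
            return Err(ModifyError::ReadOnlyKey(key.clone()));
        }
        if value.len() > max_value_len {
            return Err(ModifyError::ValueTooLong(key.clone()));
        }
    }
    // Phase 2: every check passed, so apply all changes.
    metadata.extend(modification);
    Ok(())
}
```

If any entry fails validation, the function returns before the write phase, so a rejected request leaves the stored metadata untouched.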

AssetInstance::Undefined

As the new instructions show, this RFC reframes the purpose of the Undefined variant of the AssetInstance enum.

This RFC proposes to use the Undefined variant of a collection identified by an AssetId as a synonym of the collection itself. I.e., an asset Asset { id: <AssetId>, fun: NonFungible(AssetInstance::Undefined) } is considered an NFT representing the collection itself.

As a singleton non-fungible instance is barely distinguishable from its collection, this convention shouldn't cause any problems.

Thus, the AssetInstance docs must be updated accordingly in the implementations.

Regarding ergonomics, no drawbacks were noticed.

As for the user experience, it could enable new cross-chain use cases involving asset collections and NFTs, indicating a positive impact.

There are no security concerns except for the ReportMetadata instruction, which implies that the source of the information must be trusted.

In terms of performance and privacy, there will be no changes.

The implementations must honor the contract for the new instructions. Namely, if the instance field has the value of AssetInstance::Undefined, the metadata must relate to the asset collection rather than to a non-fungible token inside it.

No significant impact.

Introducing a standard metadata format and a way of communicating it is a valuable addition to the XCM format that potentially increases cross-chain interoperability without the need to form ad-hoc chain-to-chain integrations via Transact.

This RFC proposes new functionality, so there are no compatibility issues.

The original RFC draft contained additional metadata instructions. Though they could be useful, they're clearly outside the basic logic. So, this RFC version omits them to keep the metadata discussion focused on the core functionality. Nonetheless, there is hope that metadata approval instructions might be useful in the future, so they are mentioned here.

You can read about the details in the original draft.


This proposal introduces XCQ (Cross Consensus Query), which aims to serve as an intermediary layer between different chain runtime implementations and tools/UIs, to provide a unified interface for cross-chain queries.

XCQ abstracts away concrete implementations across chains and supports custom query computations.

Use cases benefiting from XCQ include:

In Substrate, runtime APIs facilitate off-chain clients in reading the state of the consensus system.

However, different chains may expose different APIs for a similar query or have varying data types, such as applying custom transformations to raw data, or using differing AccountId types.

This diversity also extends to the client side, which may require custom computations over runtime APIs in various use cases.

Therefore, tools and UI developers often access storage directly and reimplement custom computations to convert data into user-friendly representations, leading to duplicated code between Rust runtime logic and UI JS/TS logic.

This duplication increases workload and potential for bugs.

Therefore, a system is needed to serve as an intermediary layer between concrete chain runtime implementations and tools/UIs, to provide a unified interface for cross-chain queries.

The overall query pattern of XCQ consists of three components:

Testing:

It is new functionality that doesn't modify the existing implementations.

The proposal benefits wallet and dApp developers. Developers no longer need to examine every concrete implementation to support conceptually similar operations across different chains. Additionally, they gain a more modular development experience by encapsulating custom computations over the exposed APIs in PolkaVM programs.

The proposal defines new APIs, which don't break compatibility with existing interfaces.

There are several discussions related to the proposal, including:

frame-metadata's CustomMetadata field, but the trade-offs (i.e. compatibility between versions) need examination.

This RFC proposes a change that makes it possible to identify types of compressed blobs stored on-chain, as well as used off-chain, without the need for decompression.

Currently, a compressed blob does not give any idea of what's inside because, according to the spec, the only thing that can be inside is Wasm. In reality, other blob types are already being used, and more are to come. Apart from being error-prone by itself, the current approach does not allow the blob to be properly routed through the execution paths before its decompression, which will result in suboptimal implementations when more blob types are used. Thus, it is necessary to introduce a mechanism that allows identifying the blob type without decompressing it.

This proposal is intended to support future work enabling Polkadot to execute PolkaVM and, more generally, other-than-Wasm parachain runtimes, and allow developers to introduce arbitrary compression methods seamlessly in the future.

Node developers are the main stakeholders for this proposal. It also creates a foundation on which parachain runtime developers will build.

The current approach to compressing binary blobs involves using zstd compression, and the resulting compressed blob is prefixed with a unique 64-bit magic value specified in that subsection. The same procedure is used to compress both Wasm code blobs and proofs-of-validity. Currently, given only a compressed blob, it's impossible to tell whether it contains a Wasm blob or a PoV without decompressing it. That doesn't cause problems in the current protocol, as Wasm blobs and PoV blobs take completely different execution paths in the code.

The changes proposed below are intended to define the means for distinguishing compressed blob types in a backward-compatible and future-proof way.
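The core idea, routing a compressed blob by its prefix before any decompression, can be sketched as a prefix match. All prefix names and byte values below are hypothetical placeholders, chosen to be obviously fake and distinct from the Wasm magic bytes (`\0asm`); the actual prefixes are those defined by this RFC and the Polkadot specification.

```rust
/// Wasm binaries start with the magic bytes "\0asm".
const WASM_MAGIC: [u8; 4] = [0x00, 0x61, 0x73, 0x6d];

// Hypothetical 8-byte placeholders; these are NOT the real prefix values.
const PREFIX_LEGACY: [u8; 8] = *b"HYPLEGCY";
const PREFIX_WASM_CODE: [u8; 8] = *b"HYPWASMC";
const PREFIX_POV: [u8; 8] = *b"HYPPOVBL";

#[derive(Debug, PartialEq)]
enum BlobKind {
    /// Not compressed at all: a raw Wasm module.
    UncompressedWasm,
    /// Legacy prefix: compressed, but the content type is unknown until decompression.
    CompressedUnknown,
    CompressedWasmCode,
    CompressedPov,
    Unrecognized,
}

/// Chooses an execution path by inspecting only the blob's prefix.
fn classify(blob: &[u8]) -> BlobKind {
    if blob.starts_with(&WASM_MAGIC) {
        BlobKind::UncompressedWasm
    } else if blob.starts_with(&PREFIX_LEGACY) {
        BlobKind::CompressedUnknown
    } else if blob.starts_with(&PREFIX_WASM_CODE) {
        BlobKind::CompressedWasmCode
    } else if blob.starts_with(&PREFIX_POV) {
        BlobKind::CompressedPov
    } else {
        BlobKind::Unrecognized
    }
}
```

With one prefix per blob type, only the legacy prefix leaves the content ambiguous, which is why existing legacy-prefixed blobs still need the current handling.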

CBLOB_ZSTD_LEGACY remain on-chain. That may take quite some time. Alternatively, create a migration that alters prefixes of existing blobs;

CBLOB_ZSTD_LEGACY prefix will be possible after all the nodes in all the networks cease using the prefix, which is a long process, and additional incentives should be offered to the community to make people upgrade.

Currently, the only requirement for a compressed blob prefix is not to coincide with Wasm magic bytes (as stated in code comments). Changes proposed here increase prefix collision risk, given that arbitrary data may be compressed in the future. However, it must be taken into account that:

As the change increases granularity, it will positively affect both testing possibilities and security, allowing developers to check what's inside a given compressed blob precisely. Testing the change itself is trivial. Privacy is not affected by this change.

The current implementation's performance is not affected by this change. Future implementations allowing for the execution of other-than-Wasm parachain runtimes will benefit from this change performance-wise.

End-user ergonomics are not affected. Developer ergonomics will benefit from this change, as it enables exact checks and less guessing.

The change is designed to be backward-compatible.

SDK PR#6704 (WIP) introduces a mechanism similar to that described in this proposal and proves the necessity of such a change.

None

This proposal creates a foundation for two future work directions:

This proposes a periodic, sale-based method for assigning Polkadot Coretime, the analogue of "block space" within the Polkadot Network. The method takes into account the need for long-term capital expenditure planning for teams building on Polkadot, yet also provides a means to allow Polkadot to capture long-term value in the resource which it sells. It supports the possibility of building rich and dynamic secondary markets to optimize resource allocation and largely avoids the need for parameterization.

The Polkadot Ubiquitous Computer, or just Polkadot UC, represents the public service provided by the Polkadot Network. It is a trust-free, WebAssembly-based, multicore, internet-native omnipresent virtual machine which is highly resilient to interference and corruption.

The present system of allocating the limited resources of the Polkadot Ubiquitous Computer is through a process known as parachain slot auctions. This is a parachain-centric paradigm whereby a single core is long-term allocated to a single parachain which itself implies a Substrate/Cumulus-based chain secured and connected via the Relay-chain. Slot auctions are on-chain candle auctions which proceed for several days and result in the core being assigned to the parachain for six months at a time up to 24 months in advance. Practically speaking, we only see two-year periods being bid upon and leased.

Furthermore, the design SHOULD be implementable and deployable in a timely fashion; three months from the acceptance of this RFC should not be unreasonable.

Primary stakeholder sets are:

Socialization:

The essentials of this proposal were presented at Polkadot Decoded 2023 Copenhagen on the Main Stage. A small amount of socialization at the Parachain Summit preceded it and some substantial discussion followed it. The Parity Ecosystem team is currently soliciting views from ecosystem teams who would be key stakeholders.

Upon implementation of this proposal, the parachain-centric slot auctions and associated crowdloans cease. Instead, Coretime on the Polkadot UC is sold by the Polkadot System in two separate formats: Bulk Coretime and Instantaneous Coretime.

When a Polkadot Core is utilized, we say it is dedicated to a Task rather than a "parachain". The Task to which a Core is dedicated may change at every Relay-chain block and while one predominant type of Task is to secure a Cumulus-based blockchain (i.e. a parachain), other types of Tasks are envisioned.

No specific considerations.

Parachains already deployed into the Polkadot UC must have a clear plan of action to migrate to an agile Coretime market.

While this proposal does not introduce documentable features per se, adequate documentation must be provided to potential purchasers of Polkadot Coretime. This SHOULD include any alterations to the Polkadot-SDK software collection.

Regular testing through unit tests, integration tests, manual testnet tests, zombie-net tests and fuzzing SHOULD be conducted.

A regular security review SHOULD be conducted prior to deployment through a review by the Web3 Foundation economic research group.

Any final implementation MUST pass a professional external security audit.

The proposal introduces no new privacy concerns.

RFC-3 proposes a means of implementing the high-level allocations within the Relay-chain.

RFC-5 proposes the API for interacting with Relay-chain.

Additional work should specify the interface for the instantaneous market revenue so that the Coretime-chain can ensure Bulk Coretime placed in the instantaneous market is properly compensated.

Robert Habermeier initially wrote on the subject of a blockspace-centric Polkadot in the article Polkadot Blockspace over Blockchains. While not going into details, the article served as an early reframing piece for moving beyond one-slot-per-chain models and building out secondary market infrastructure for resource allocation.


In the Agile Coretime model of the Polkadot Ubiquitous Computer, as proposed in RFC-1 and RFC-3, it is necessary for the allocating parachain (envisioned to be one or more pallets on a specialised Brokerage System Chain) to communicate the core assignments to the Relay-chain, which is responsible for ensuring those assignments are properly enacted.

This is a proposal for the interface which will exist around the Relay-chain in order to communicate this information and instructions.

The background motivation for this interface is splitting out coretime allocation functions and secondary markets from the Relay-chain onto System parachains. A well-understood and general interface is necessary for ensuring the Relay-chain receives coretime allocation instructions from one or more System chains without introducing dependencies on the implementation details of either side.

Primary stakeholder sets are:

Socialization:

The content of this RFC was discussed in the Polkadot Fellows channel.

The interface has two sections: The messages which the Relay-chain is able to receive from the allocating parachain (the UMP message types), and messages which the Relay-chain is able to send to the allocating parachain (the DMP message types). These messages are expected to be able to be implemented in a well-known pallet and called with the XCM Transact instruction.

Future work may include these messages being introduced into the XCM standard.

Realistic Limits of the Usage

For request_revenue_info, a successful request should be possible if the when parameter is no less than the Relay-chain block number on arrival of the message minus 100,000.

For assign_core, a successful request should be possible if the begin parameter is no less than the Relay-chain block number on arrival of the message plus 10, and the workload parameter contains no more than 100 items.
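Read as simple inequalities, the two limits above can be sketched as predicates telling a sender whether a request falls within the range where success should be possible. They describe that range only and are not the Relay-chain's validation logic. The `u32` block number type, saturating arithmetic near genesis, and the function names are assumptions of this sketch.

```rust
/// Relay-chain block number; `u32` is an assumption of this sketch.
type BlockNumber = u32;

/// `request_revenue_info`: `when` is no less than the arrival block number minus 100,000.
fn revenue_request_within_limits(when: BlockNumber, arrival: BlockNumber) -> bool {
    when >= arrival.saturating_sub(100_000)
}

/// `assign_core`: `begin` is no less than the arrival block number plus 10,
/// and the workload has no more than 100 items.
fn assign_core_within_limits(begin: BlockNumber, arrival: BlockNumber, workload_len: usize) -> bool {
    begin >= arrival.saturating_add(10) && workload_len <= 100
}
```

For a message arriving at block 200,000, for instance, a revenue request for block 100,000 is within the limit, while one for block 99,999 is not.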

Performance, Ergonomics and Compatibility

No specific considerations.

Testing, Security and Privacy

Standard Polkadot testing and security auditing applies.

The proposal introduces no new privacy concerns.

Future Directions and Related Material

RFC-1 proposes a means of determining allocation of Coretime using this interface.

RFC-3 proposes a means of implementing the high-level allocations within the Relay-chain.

Drawbacks, Alternatives and Unknowns

None at present.

Prior Art and References

None.


Summary

As core functionality moves from the Relay Chain into system chains, the network's reliance on the liveness of these chains increases. It is not economically scalable, nor necessary from a game-theoretic perspective, to pay collators large rewards. This RFC proposes a mechanism (part technical and part social) for ensuring reliable collator sets that are resilient to attempts to stop any subsystem of the Polkadot protocol.

Motivation

In order to guarantee access to Polkadot's system, the collators on its system chains must propose blocks (provide liveness) and allow all transactions to eventually be included. That is, some collators may censor transactions, but there must exist one collator in the set who will include a

Requirements

Collators selected by governance SHOULD have a reasonable expectation that the Treasury will reimburse their operating costs.

This protocol builds on the existing Collator Selection pallet and its notion of Invulnerables. Invulnerables are collators (identified by their AccountIds) who

The primary drawback is a reliance on governance for continued treasury funding of infrastructure costs for Invulnerable collators.

The vast majority of cases can be covered by unit testing. Integration tests should ensure that the Collator Selection UpdateOrigin, which has permission to modify the Invulnerables and desired number of Candidates, can handle updates over XCM from the system's governance location.

This proposal has very little impact on most users of Polkadot, and should improve the performance of system chains by reducing the number of missed blocks.

As chains have strict PoV size limits, care must be taken regarding the PoV impact of the session manager. Appropriate benchmarking and tests should ensure that conservative limits are placed on the number of Invulnerables and Candidates.

The primary group affected is Candidate collators, who, after implementation of this RFC, will need to compete in a bond-based election rather than a race to claim a Candidate spot.

This RFC is compatible with the existing implementation and can be handled via upgrades and migration.

None at this time.

There may exist in the future system chains for which this model of collator selection is not appropriate. These chains should be evaluated on a case-by-case basis.

The full nodes of the Polkadot peer-to-peer network maintain a distributed hash table (DHT), which is currently used for full node discovery and validator discovery purposes.

This RFC proposes to extend this DHT to be used to discover full nodes of the parachains of Polkadot.

The maintenance of bootnodes has long been an annoyance for everyone.

When a bootnode is newly deployed or removed, every chain specification must be updated in order to take the update into account. This has led to various non-optimal solutions, such as pulling chain specifications from GitHub repositories. When it comes to RPC nodes, UX developers often have trouble finding up-to-date addresses of parachain RPC nodes. With the ongoing migration from RPC nodes to light clients, similar problems would happen with chain specifications as well.

Because the list of bootnodes in chain specifications is so annoying to modify, the number of bootnodes is rather low (typically between 2 and 15). In order to better resist downtimes and DoS attacks, a better solution would be to use every node of a certain chain as a potential bootnode, rather than special-casing some specific nodes.

While this RFC doesn't solve these problems for relay chains, it aims at solving it for parachains by storing the list of all the full nodes of a parachain on the relay chain DHT.

Assuming that this RFC is implemented, and that light clients are used, deploying a parachain wouldn't require more work than registering it onto the relay chain and starting the collators. There wouldn't be any need for special infrastructure nodes anymore.

This RFC has been opened on my own initiative because I think that this is a good technical solution to a usability problem that many people are encountering and that they don't realize can be solved.

The content of this RFC only applies to parachains and parachain nodes that are "Substrate-compatible". It is in no way mandatory for parachains to comply with this RFC.

Note that "Substrate-compatible" is very loosely defined as "implements the same mechanisms and networking protocols as Substrate". The author of this RFC believes that "Substrate-compatible" should be very precisely specified, but there is controversy on this topic.

While a lot of this RFC concerns the implementation of parachain nodes, it makes use of the resources of the Polkadot chain, and as such it is important to describe them in the Polkadot specification.

The peer_id and addrs fields are in theory not strictly needed, as the PeerId and addresses could always be equal to the PeerId and addresses of the node being registered as the provider and serving the response. However, the Cumulus implementation currently uses two different networking stacks, one for the parachain and one for the relay chain, using two separate PeerIds and addresses, and as such the PeerId and addresses of the other networking stack must be indicated. Asking them to use only one networking stack wouldn't be feasible in a realistic time frame.

The values of the genesis_hash and fork_id fields cannot be verified by the requester and are expected to be unused at the moment. Instead, a client that wishes to connect to a parachain is expected to obtain the genesis hash and fork ID of the parachain from the parachain chain specification. These fields are included in the networking protocol nonetheless in case an acceptable solution is found in the future, and in order to allow use cases such as discovering parachains in a not-strictly-trusted way.

Because not all nodes want to be used as bootnodes, implementers are encouraged to provide a way to disable this mechanism. However, it is very much encouraged to leave this mechanism on by default for all parachain nodes.

This mechanism doesn't add or remove any security by itself, as it relies on existing mechanisms. However, if the principle of chain specification bootnodes is entirely replaced with the mechanism described in this RFC (which is the objective), then it becomes important whether the mechanism in this RFC can be abused in order to make a parachain unreachable.

The DHT mechanism generally has a low overhead, especially given that publishing providers is done only every 24 hours.

Doing a Kademlia iterative query then sending a provider record shouldn't take more than around 50 kiB in total of bandwidth for the parachain bootnode.

Assuming 1000 parachain full nodes, the 20 Polkadot full nodes corresponding to a specific parachain will each receive a sudden spike of a few megabytes of networking traffic when the key rotates. Again, this is relatively negligible. If this becomes a problem, one can add a random delay before a parachain full node registers itself to be the provider of the key corresponding to BabeApi_next_epoch.
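As a rough check of the "few megabytes" figure, assume (purely for illustration; the RFC gives no record size) that each registration costs a responsible Polkadot full node about 3 kiB of inbound traffic. With each of the 1000 parachain full nodes registering with each of the 20 responsible nodes:

$$
\text{traffic per Polkadot full node} \approx 1000 \times 3\,\text{kiB} = 3000\,\text{kiB} \approx 2.9\,\text{MiB}
$$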

Maybe the biggest uncertainty is the traffic that the 20 Polkadot full nodes will receive from light clients that want to know the bootnodes of a parachain. Light clients are generally encouraged to cache the peers that they use between restarts, so they should only query these 20 Polkadot full nodes at their first initialization. If this ever becomes a problem, this value of 20 is an arbitrary constant that can be increased for more redundancy.

Irrelevant.

Irrelevant.

None.

While it doesn't fundamentally change this RFC, using BabeApi_currentEpoch and BabeApi_nextEpoch might be inappropriate. I'm not familiar enough with good practices within the runtime to have an opinion here. Should it be an entirely new pallet?

It is possible that in the future a client could connect to a parachain without having to rely on a trusted parachain specification.


Improve the networking messages that query storage items from the remote, in order to reduce the bandwidth usage and number of round trips of light clients.

Clients on the Polkadot peer-to-peer network can be divided into two categories: full nodes and light clients. So-called full nodes are nodes that store the content of the chain locally on their disk, while light clients are nodes that don't. In order to access for example the balance of an account, a full node can do a disk read, while a light client needs to send a network message to a full node and wait for the full node to reply with the desired value. This reply is in the form of a Merkle proof, which makes it possible for the light client to verify the exactness of the value.

Unfortunately, this network protocol is suffering from some issues:

Once Polkadot and Kusama have transitioned to state_version = 1, which modifies the format of the trie entries, it will be possible to generate Merkle proofs that contain only the hashes of values in the storage. Thanks to this, it is already possible to prove the existence of a key without sending its entire value (only its hash), or to prove that a value has changed or not between two blocks (by sending just their hashes).

Thus, the aforementioned issues exist only because the existing networking messages don't give the querier the possibility to request this. This is what this proposal aims at fixing.

This is the continuation of https://github.com/w3f/PPPs/pull/10, which itself is the continuation of https://github.com/w3f/PPPs/pull/5.

The protobuf schema of the networking protocol can be found here: https://github.com/paritytech/substrate/blob/5b6519a7ff4a2d3cc424d78bc4830688f3b184c0/client/network/light/src/schema/light.v1.proto

The proposal is to modify this protocol in this way:

… the includeDescendants flag. In other words, if the request concerns the main trie, no content coming from child tries is ever sent back.

This protocol keeps the same maximum response size limit as currently exists (16 MiB). It is not possible for the querier to know in advance whether its query will lead to a reply that exceeds the maximum size. If the reply is too large, the replier should send back only a limited number (but at least one) of requested items in the proof. The querier should then send additional requests for the rest of the items. A response containing none of the requested items is invalid.

The server is allowed to silently discard some keys of the request if it judges that the number of requested keys is too high. This is in line with the fact that the server might truncate the response.
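The truncation rules above can be sketched as follows. This is a minimal illustration under stated assumptions, not the replier implementation. Each requested key is modelled by a hypothetical ProofItem whose contribution to the proof is known up front, and trie nodes shared between items are ignored. The running size is checked before each item is added, which also reflects the advice in the security section to detect an oversized reply early rather than building it first.

```rust
/// Response size limit currently used by the protocol (16 MiB).
pub const MAX_RESPONSE_SIZE: usize = 16 * 1024 * 1024;

/// Hypothetical stand-in for one requested storage item, together with the
/// number of bytes it would add to the Merkle proof.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct ProofItem {
    pub key: Vec<u8>,
    pub proof_bytes: usize,
}

/// Splits the requested items into the ones sent in this response and the
/// ones the querier has to request again in a follow-up request.
///
/// The loop stops before the first item that would push the response over
/// `limit`, so an oversized reply is never assembled. The first item is always
/// included, because a response containing none of the requested items is
/// invalid. (A single item larger than `limit` is the unhandled case described
/// under Drawbacks.)
pub fn truncate_response(
    requested: &[ProofItem],
    limit: usize,
) -> (Vec<ProofItem>, Vec<ProofItem>) {
    let mut used: usize = 0;
    let mut split = requested.len();
    for (i, item) in requested.iter().enumerate() {
        let next = used.saturating_add(item.proof_bytes);
        if i > 0 && next > limit {
            split = i;
            break;
        }
        used = next;
    }
    (requested[..split].to_vec(), requested[split..].to_vec())
}
```

The querier would then send a new request for the keys in the second vector.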

Drawbacks

This proposal doesn't handle one specific situation: what if a proof containing a single specific item would exceed the response size limit? For example, if the response size limit was 1 MiB, querying the runtime code (which is typically 1.0 to 1.5 MiB) would be impossible, as it's impossible to generate a proof smaller than 1 MiB. The response size limit is currently 16 MiB, meaning that no single storage item may exceed 16 MiB.

Unfortunately, because it's impossible to verify a Merkle proof before having received it entirely, parsing the proof in a streaming way is also not possible.

A way to solve this issue would be to Merkle-ize large storage items, so that a proof could include only a portion of a large storage item. Since this would require a change to the trie format, it is not realistically feasible in a short time frame.

Testing, Security, and Privacy

The main security consideration concerns the size of replies and the resources necessary to generate them. For example, it is easy to ask for all keys and values of the chain, which would take a very long time to generate. Since responses to this networking protocol have a maximum size, the replier should truncate proofs that would lead to the response being too large. Note that it is already possible to send a query that would lead to a very large reply with the existing network protocol. The only change this proposal introduces is that such an attack becomes less complicated to perform.

Implementers of the replier side should be careful to detect early on when a reply would exceed the maximum reply size, rather than unconditionally generating a reply, as this could take a very large amount of CPU, disk I/O, and memory. Existing implementations might currently be accidentally protected from such an attack because requests have a maximum size, and thus the list of keys in the query is bounded. After this proposal, this accidental protection would no longer exist.

Malicious server nodes might truncate Merkle proofs even when they don't strictly need to, and it is not possible for the client to (easily) detect this situation. However, malicious server nodes can already do undesirable things such as throttle down their upload bandwidth or simply not respond. There is no need to handle unnecessarily truncated Merkle proofs any differently than a server simply not answering the request.

Performance, Ergonomics, and Compatibility

Performance

It is unclear to the author of the RFC what the performance implications are. Servers are supposed to have limits to the amount of resources they use to respond to requests, and as such the worst that can happen is that light client requests become a bit slower than they currently are.

Ergonomics

Irrelevant.

Compatibility

The prior networking protocol is maintained for now. The older version of this protocol could be removed in the long term.

Prior Art and References

None. This RFC is a clean-up of an existing mechanism.

Unresolved Questions

None

Future Directions and Related Material

The current networking protocol could eventually be deprecated. Additionally, the current "state requests" protocol (used for warp syncing) could also be deprecated in favor of this one.



Summary

The Polkadot UC will generate revenue from the sale of available Coretime. The question then arises: how should we handle these revenues? Broadly, there are two reasonable paths: burning the revenue, thereby removing it from total issuance, or diverting it to the Treasury. This Request for Comment (RFC) presents arguments favoring burning as the preferred mechanism for handling revenues from Coretime sales.

Motivation

How to handle the revenue accrued from Coretime sales is an important economic question that influences the value of DOT and should be properly discussed before deciding on either option. Now is the best time to start this discussion.

Stakeholders

Polkadot DOT token holders.

Explanation

This RFC discusses potential benefits of burning the revenue accrued from Coretime sales instead of diverting it to the Treasury. The arguments are as follows.

It's in the interest of the Polkadot community to have a consistent and predictable Treasury income, because volatility in the inflow can be damaging, especially in situations when it is insufficient. As such, this RFC operates under the presumption of a steady and sustainable Treasury income flow, which is crucial for the Polkadot community's stability. The assurance of a predictable Treasury income, as outlined in a prior discussion here, or through other equally effective measures, serves as a baseline assumption for this argument.

Consequently, we need not concern ourselves with this particular issue here. This naturally raises the question: why should we introduce additional volatility to the Treasury by aligning it with the variable Coretime sales? It's worth noting that Coretime revenues often exhibit an inverse relationship with periods when Treasury spending should ideally be ramped up. During periods of low Coretime utilization (indicated by lower revenue), the Treasury should spend more on projects and endeavours to increase the demand for Coretime. This pattern underscores that Coretime sales, by their very nature, are an inconsistent and unpredictable source of funding for the Treasury. Given the importance of maintaining a steady and predictable inflow, it's unnecessary to rely on another volatile mechanism. Some might argue that we could have both: a steady inflow (from inflation) and some added bonus from Coretime sales, but burning the revenue would offer further benefits as described below.


| Authors | Joe Petrowski |

Summary

Since the introduction of the Collectives parachain, many groups have expressed interest in forming new -- or migrating existing groups into -- on-chain collectives. While adding a new collective is relatively simple from a technical standpoint, the Fellowship will need to merge new pallets into the Collectives parachain for each new collective. This RFC proposes a means for the network to ratify a new collective, thus instructing the Fellowship to instate it in the runtime.

Motivation

Many groups have expressed interest in representing collectives on-chain. Some of these include:

The group that wishes to operate an on-chain collective should publish the following information:

Collective removal may also come with other governance calls, for example voiding any scheduled Treasury spends that would fund the given collective.

Passing a Root origin referendum is slow. However, given the network's investment (in terms of code maintenance and salaries) in a new collective, this is an appropriate step.

No impacts.

Generally, all new collectives will be in the Collectives parachain. Thus, performance impacts should be strictly limited to this parachain and not affect others. As the majority of logic for collectives is generalized and reusable, we expect most collectives to be instances of similar subsets of modules. That is, new collectives should generally be compatible with UIs and other services that provide collective-related functionality, with few modifications needed to support new ones.

The launch of the Technical Fellowship, see the initial forum post.

Introduces breaking changes to the Core runtime API by letting Core::initialize_block return an enum. The version of Core is bumped from 4 to 5.

The main feature that motivates this RFC is Multi-Block-Migrations (MBM); these make it possible to split a migration over multiple blocks.

Furthermore, it would be nice not to hinder the possibility of implementing a new hook, poll, that runs at the beginning of the block when there are no MBMs and has access to AllPalletsWithSystem. This hook can then be used to replace the use of on_initialize and on_finalize for non-deadline-critical logic.

In a similar fashion, it should not hinder the future addition of a System::PostInherents callback that always runs after all inherents were applied.

Core::initialize_block

This runtime API function is changed from returning () to ExtrinsicInclusionMode:

fn initialize_block(header: &<Block as BlockT>::Header) -> ExtrinsicInclusionMode;

1. Multi-Block-Migrations: The runtime is put into lock-down mode for the duration of the migration process by returning OnlyInherents from initialize_block. This ensures that no user-provided transaction can interfere with the migration process. It is absolutely necessary to ensure this; otherwise, a transaction could call into un-migrated storage and violate storage invariants.

2. poll is possible by using apply_extrinsic as entry-point and is not hindered by this approach. It would not be possible to use a pallet inherent like System::last_inherent to achieve this, for two reasons. First, pallets do not have access to AllPalletsWithSystem, which is required to invoke the poll hook on all pallets. Second, the runtime does not currently enforce an order of inherents.

3. System::PostInherents can be done in the same manner as poll.

Drawbacks

The previous drawback of cementing the order of inherents has been addressed and removed by redesigning the approach. No further drawbacks have been identified thus far.

Testing, Security, and Privacy

The new logic of initialize_block can be tested by checking that the block-builder will skip transactions when OnlyInherents is returned.

Security: n/a

Privacy: n/a
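To make the tested behaviour concrete, here is a minimal sketch of a block author honouring the value returned by initialize_block. The variant names follow ExtrinsicInclusionMode as used in this RFC; AllExtrinsics is assumed as the name of the permissive variant. The Extrinsic type and the build_block function are hypothetical simplifications, not the Substrate block-builder API.

```rust
/// Mirrors the return value of `Core::initialize_block` described in this RFC.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ExtrinsicInclusionMode {
    /// Inherents and user transactions may be included (assumed variant name).
    AllExtrinsics,
    /// Only inherents may be included, e.g. during a multi-block migration.
    OnlyInherents,
}

/// Hypothetical, simplified extrinsic type for illustration only.
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum Extrinsic {
    Inherent(&'static str),
    Transaction(&'static str),
}

/// Hypothetical block author: inherents are always applied, while pooled
/// transactions are skipped entirely when the runtime reports `OnlyInherents`.
pub fn build_block(
    mode: ExtrinsicInclusionMode,
    inherents: &[Extrinsic],
    pool: &[Extrinsic],
) -> Vec<Extrinsic> {
    let mut block: Vec<Extrinsic> = inherents.to_vec();
    if mode == ExtrinsicInclusionMode::AllExtrinsics {
        block.extend_from_slice(pool);
    }
    block
}
```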

Performance, Ergonomics, and Compatibility

Performance

The performance overhead is minimal in the sense that no clutter was added after fulfilling the requirements. The only performance difference is that initialize_block also returns an enum that needs to be passed through the WASM boundary. This should be negligible.

Ergonomics

The new interface allows for more extensible runtime logic. In the future, this will be utilized for multi-block-migrations which should be a huge ergonomic advantage for parachain developers.

Compatibility

The advice here is OPTIONAL and outside of the RFC. To not degrade user experience, it is recommended to ensure that an updated node can still import historic blocks.

Prior Art and References

The RFC is currently being implemented in polkadot-sdk#1781 (formerly substrate#14275). Related issues and merge requests:


ExtrinsicInclusionMode


Is post_inherents more consistent than last_inherent? If so, we should change it.

=> renamed to last_inherent

Future Directions and Related Material

The long-term future here is to move the block building logic into the runtime. Currently there is a tight dance between the block author and the runtime; the author has to call into different runtime functions in quick succession and exact order. Any misstep causes the block to be invalid.


This can be unified and simplified by moving both parts into the runtime.

| Authors | Bryan Chen |

Summary

This RFC proposes a set of changes to the parachain lock mechanism. The goal is to allow a parachain manager to self-service the parachain without root track governance action.

This is achieved by removing existing lock conditions and only locking a parachain when:

The manager of a parachain has permission to manage the parachain when the parachain is unlocked. Parachains are locked by default when onboarded to a slot. This requires the parachain wasm/genesis to be valid; otherwise, a root track governance action on the relay chain is required to update the parachain.
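The permission rule in the paragraph above can be sketched as a small check. The names ParaLock, Origin, can_manage and lock_on_onboarding are hypothetical and do not correspond to the paras_registrar pallet's actual types. The sketch only encodes three statements from this RFC: parachains start locked when onboarded, the manager may act only while the parachain is unlocked, and a root track governance action can update the parachain regardless.

```rust
/// Hypothetical lock state of a parachain.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ParaLock {
    Locked,
    Unlocked,
}

/// Hypothetical caller categories, simplified for illustration.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Origin {
    Root,
    Manager,
    Other,
}

/// Parachains are locked by default when onboarded to a slot.
pub fn lock_on_onboarding() -> ParaLock {
    ParaLock::Locked
}

/// Root governance can always act; the manager only while unlocked.
pub fn can_manage(origin: Origin, lock: ParaLock) -> bool {
    match origin {
        Origin::Root => true,
        Origin::Manager => lock == ParaLock::Unlocked,
        Origin::Other => false,
    }
}
```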

The current reliance on root track governance actions for managing parachains can be time-consuming and burdensome. This RFC aims to address this technical difficulty by allowing parachain managers to take self-service actions, rather than relying on general public voting.

The key scenarios this RFC seeks to improve are:

A parachain can either be locked or unlocked [3]. With the parachain locked, the parachain manager does not have any privileges. With the parachain unlocked, the parachain manager can perform the following actions with the paras_registrar pallet:

Parachain locks are designed in such a way as to ensure the decentralization of parachains. If a parachain is not locked when it should be, this could introduce centralization risk for new parachains.

For example, one possible scenario is that a collective may decide to launch a parachain fully decentralized. However, if the parachain is unable to produce blocks, the parachain manager will be able to replace the wasm and genesis without the consent of the collective.

This risk is considered tolerable, as it requires the wasm/genesis to be invalid in the first place. It is currently not practically possible to develop a parachain without any centralization risk.

Another case is that a parachain team may decide to use a crowdloan to help secure a slot lease. Previously, creating a crowdloan locked the parachain. This meant crowdloan participants knew exactly the genesis of the parachain whose crowdloan they were participating in. However, this actually provides little assurance to crowdloan participants. For example, if the genesis block is determined before a crowdloan is started, it is not possible to have an on-chain mechanism to enforce reward distributions for crowdloan participants. They always have to rely on the parachain team to fulfill the promise after the parachain is live.

Existing operational parachains will not be impacted.

The implementation of this RFC will be tested on testnets (Rococo and Westend) first.

An audit may be required to ensure the implementation does not introduce unwanted side effects.

There are no privacy-related concerns.

This RFC should not introduce any performance impact.

This RFC should improve the developer experience for new and existing parachain teams.

This RFC is fully compatible with existing interfaces.

None at this stage.

This RFC is only intended to be a short-term solution. Slots will be removed in the future, and the lock mechanism is likely to be replaced with a more generalized parachain management and recovery system. Therefore, long-term impacts of this RFC are not considered.

https://github.com/paritytech/cumulus/issues/377

Encointer is a system chain on Kusama since Jan 2022 and has been developed and maintained by the Encointer association. This RFC proposes to treat Encointer like any other system chain and include it in the fellowship repo with this PR.

Encointer does not seek to be in control of its runtime repository. As a decentralized system, the fellowship has a more suitable structure to maintain a system chain runtime repo than the Encointer association does.

Also, Encointer aims to update its runtime in batches with other system chains in order to have consistency for interoperability across system chains.

Our PR has all details about our runtime and how we would move it into the fellowship repo.

Noteworthy: All Encointer-specific pallets will still be located in encointer's repo for the time being: https://github.com/encointer/pallets

It will still be the duty of the Encointer team to keep its runtime up to date and provide adequate test fixtures. Frequent dependency bumps with Polkadot releases would be beneficial for interoperability and could be streamlined with other system chains, but that will not be a duty of the fellowship. Whenever possible, all system chains could be upgraded jointly (including Encointer) with a batch referendum.

Unlike all other system chains, the development and maintenance of the Encointer Network are mainly financed by the KSM Treasury and possibly the DOT Treasury in the future. Encointer is dedicated to maintaining its network and runtime code for as long as possible, but there is a dependency on funding which is not in the hands of the fellowship. The only risk in the context of funding, however, is that the Encointer runtime will see less frequent updates if there's less funding.

No changes to the existing system are proposed. Only changes to how maintenance is organized.

No changes

Existing Encointer runtime repo

None identified

More info on Encointer: encointer.org


The Relay Chain contains most of the core logic for the Polkadot network. While this was necessary prior to the launch of parachains and development of XCM, most of this logic can exist in parachains. This is a proposal to migrate several subsystems into system parachains.

Polkadot's scaling approach allows many distinct state machines (known generally as parachains) to operate with common guarantees about the validity and security of their state transitions. Polkadot provides these common guarantees by executing the state transitions on a strict subset (a backing …

The following pallets and subsystems are good candidates to migrate from the Relay Chain:

These subsystems will have fewer resources on cores than they have on the Relay Chain. Staking in particular may require some optimizations to deal with constraints.

Standard audit/review requirements apply. More powerful multi-chain integration test tools would be useful in development.

Describe the impact of the proposal on the exposed functionality of Polkadot.

This is an optimization. The removal of public/user transactions on the Relay Chain ensures that its primary resources are allocated to system performance.

This proposal alters very little for coretime users (e.g. parachain developers). Application developers will need to interact with multiple chains, making ergonomic light client tools particularly important for application development.

Existing parachains that interact with these subsystems will need to configure their runtimes to recognize the new locations in the network.

Implementing this proposal will require some changes to pallet APIs and/or a pub-sub protocol. Application developers will need to interact with multiple chains in the network.

Ideally, the Relay Chain becomes transactionless, such that not even balances are represented there. With Staking and Governance off the Relay Chain, this is not an unreasonable next step.

With Identity on Polkadot, Kusama may opt to drop its People Chain.

At the moment, we have a state_version field on RuntimeVersion that determines which state version is used for the storage.

We have a use case where we want the extrinsics root to be derived using StateVersion::V1. Rather than defining an additional field under RuntimeVersion, we would like to propose a system_version field, replacing state_version, that can be used to derive both the storage and the extrinsic state version.

Since the extrinsic state version is always StateVersion::V0, deriving the extrinsics root requires the full extrinsic data. This would be problematic when we need to verify the extrinsics root if the extrinsics are large. This problem is further explored in https://github.com/polkadot-fellows/RFCs/issues/19

In order to use a project-specific StateVersion for extrinsic roots, we proposed an implementation that introduced a parameter to frame_system::Config, but that unfortunately did not feel correct.
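To illustrate how a single system_version could derive both state versions, here is a minimal sketch. The exact mapping is not reproduced in this excerpt, so it is an assumption: values 0 and 1 keep today's behaviour (the storage state version matches the old state_version, and the extrinsics root stays on V0), and a hypothetical value 2 additionally switches the extrinsics root to V1. StateVersion and RuntimeVersion here are simplified stand-ins, not the sp-runtime or sp-version definitions.

```rust
/// Simplified stand-in for the trie state version.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum StateVersion {
    V0,
    V1,
}

/// Simplified stand-in for `RuntimeVersion`, keeping only the proposed field.
pub struct RuntimeVersion {
    pub system_version: u8,
}

impl RuntimeVersion {
    /// Storage state version (assumed mapping: 0 => V0, anything else => V1).
    pub fn state_version(&self) -> StateVersion {
        match self.system_version {
            0 => StateVersion::V0,
            _ => StateVersion::V1,
        }
    }

    /// Extrinsics root state version (assumed mapping: 0 and 1 => V0, 2+ => V1).
    pub fn extrinsics_root_state_version(&self) -> StateVersion {
        match self.system_version {
            0 | 1 => StateVersion::V0,
            _ => StateVersion::V1,
        }
    }
}
```

Under this assumption, an existing chain that sets system_version to its current state_version value keeps exactly the behaviour it has today.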


There should be no drawbacks, as it would replace state_version with the same behavior, but the documentation should be updated so that chains know which system_version to use.

AFAIK, this should not have any impact on security or privacy.

These changes should be compatible with existing chains if they use their state_version value for system_version.

I do not believe there is any performance hit with this change.

This does not break any exposed APIs.

This change should not break any compatibility.

We proposed a similar change by introducing a parameter to frame_system::Config, but that did not feel like the correct way of introducing this change.

I do not have any specific questions about this change at the moment.

IMO, this change is pretty self-contained and there won't be any future work necessary.


This RFC proposes a new host function for parachains, storage_proof_size. It shall provide the size of the currently recorded storage proof to the runtime. Runtime authors can use the proof size to improve block utilization by retroactively reclaiming unused storage weight.

The number of extrinsics that are included in a parachain block is limited by two constraints: execution time and proof size. FRAME weights cover both concepts, and block-builders use them to decide how many extrinsics to include in a block. However, these weights are calculated ahead of time by benchmarking on a machine with reference hardware. The execution-time properties of the state-trie and its storage items are unknown at benchmarking time. Therefore, we make some assumptions about the state-trie:

In addition, the current model does not account for multiple accesses to the same storage items. While these repeated accesses will not increase the storage-proof size, the runtime-side weight monitoring will account for them multiple times. Since the proof size is completely opaque to the runtime, we cannot implement retroactive storage weight correction.

A solution must provide a way for the runtime to track the exact storage-proof size consumed on a per-extrinsic basis.

This RFC proposes a new host function that exposes the storage-proof size to the runtime. As a result, runtimes can implement storage weight reclaiming mechanisms that improve block utilization.

This RFC proposes the following host function signature:

fn ext_storage_proof_size_version_1() -> u64;

The host function MUST return an unsigned 64-bit integer value representing the current proof size. In block-execution and block-import contexts, this function MUST return the current size of the proof. To achieve this, parachain node implementors need to enable proof recording for block imports. In other contexts, this function MUST return 18446744073709551615 (u64::MAX), which represents disabled proof recording.

Parachain nodes need to enable proof recording during block import to correctly implement the proposed host function. Benchmarking conducted with balance transfers has shown a performance reduction of around 0.6% when proof recording is enabled.

The host function proposed in this RFC allows parachain runtime developers to keep track of the proof size. Typical usage patterns would be to keep track of the overall proof size or the difference between subsequent calls to the host function.
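The second usage pattern, measuring the difference between two calls around an extrinsic and reclaiming the unused part of its benchmarked proof-size weight, can be sketched as follows. The host function is replaced by a closure so the sketch runs outside a runtime. reclaim_proof_size and its parameters are hypothetical, not a FRAME API. Following the RFC, a reading of u64::MAX is treated as "proof recording disabled", in which case nothing is reclaimed.

```rust
/// Value returned by `ext_storage_proof_size_version_1` when proof recording
/// is disabled, as specified in this RFC.
pub const PROOF_RECORDING_DISABLED: u64 = u64::MAX;

/// Hypothetical helper: reads the proof size before and after `apply` (which
/// stands in for executing an extrinsic) and returns how much of the
/// benchmarked proof-size weight was not actually used and can be reclaimed.
pub fn reclaim_proof_size<F, A>(mut proof_size: F, benchmarked: u64, apply: A) -> u64
where
    F: FnMut() -> u64,
    A: FnOnce(),
{
    let before = proof_size();
    apply();
    let after = proof_size();
    if before == PROOF_RECORDING_DISABLED || after == PROOF_RECORDING_DISABLED {
        // No measurement available: keep the benchmarked weight as charged.
        return 0;
    }
    let consumed = after.saturating_sub(before);
    // Reclaim only the unused part; this sketch never charges extra.
    benchmarked.saturating_sub(consumed)
}
```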

Parachain teams will need to include this host function to upgrade.

This RFC proposes changing the current deposit requirements on the Polkadot and Kusama Asset Hub for creating an NFT collection, minting an individual NFT, and lowering its corresponding metadata and attribute deposits. The objective is to lower the barrier to entry for NFT creators, fostering a more inclusive and vibrant ecosystem while maintaining network integrity and preventing spam.

The current deposit of 10 DOT for collection creation (along with 0.01 DOT for item deposit and 0.2 DOT for metadata and attribute deposits) on the Polkadot Asset Hub and 0.1 KSM on Kusama Asset Hub presents a significant financial barrier for many NFT creators. By lowering the deposit …

… deposit function, adjusted by the corresponding pricing mechanism.

Previous discussions have been held within the Polkadot Forum, with artists expressing their concerns about the deposit amounts.

This RFC proposes a revision of the deposit constants in the configuration of the NFTs pallet on the Polkadot Asset Hub. The new deposit amounts would be determined by a standard deposit formula.
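As a rough illustration of what a standard deposit formula typically looks like in Substrate-based runtimes, the sketch below charges a per-item component plus a per-byte component. The constants and the deposit function here are placeholders, not the values or code used on Polkadot or Kusama Asset Hub. The RFC's actual formula and pricing adjustments are not reproduced in this excerpt.

```rust
/// Balance in the chain's smallest unit (placeholder type).
pub type Balance = u128;

/// Hypothetical price per storage item; NOT the Asset Hub value.
pub const PER_ITEM: Balance = 1_000;
/// Hypothetical price per stored byte; NOT the Asset Hub value.
pub const PER_BYTE: Balance = 10;

/// Linear deposit: grows with the number of items and bytes a user stores,
/// so that occupying more state costs proportionally more.
pub const fn deposit(items: u32, bytes: u32) -> Balance {
    items as Balance * PER_ITEM + bytes as Balance * PER_BYTE
}
```

Tying the deposit to stored items and bytes, rather than to a flat amount, is what lets the deposit track the state-bloat concern discussed later in this RFC.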

As of v1.1.1, the Collection Deposit is 10 DOT and the Item Deposit is 0.01 DOT (see …

Modifying deposit requirements necessitates a balanced assessment of the potential drawbacks. Highlighted below are cogent points extracted from the discourse on the Polkadot Forum conversation, …

As noted above, state bloat is a security concern. In the case of abuse, governance could adapt by increasing deposit rates and/or using forceDestroy on collections agreed to be spam.

The primary performance consideration stems from the potential for state bloat due to increased activity from lower deposit requirements. It's vital to monitor and manage this to avoid any negative impact on the chain's performance. Strategies for mitigating state bloat, including efficient data management and periodic reviews of storage requirements, will be essential.

The proposed change aims to enhance the user experience for artists, traders, and utilizers of Kusama and Polkadot Asset Hubs, making Polkadot and Kusama more accessible and user-friendly.

The change does not impact compatibility as a redeposit function is already implemented.

If this RFC is accepted, there should not be any unresolved questions regarding how to adapt the …

Propose a way of permuting the availability chunk indices assigned to validators, in the context of recovering available data from systematic chunks, with the purpose of fairly distributing network bandwidth usage.

Currently, the ValidatorIndex is always identical to the ChunkIndex. Since the validator array is only shuffled once per session, naively using the ValidatorIndex as the ChunkIndex would pose an unreasonable stress on the first N/3 validators during an entire session, when favouring availability recovery from systematic chunks.
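One way to picture such a permutation is to rotate chunk indices by an offset that depends on the core index, so that the systematic chunks of different cores land on different validators. The sketch below is an illustration under assumptions, not the mapping specified by this RFC, which is not reproduced in this excerpt. It assumes the number of systematic chunks equals the usual Reed-Solomon recovery threshold of floor((n - 1) / 3) + 1. The function names are hypothetical.

```rust
/// Assumed number of systematic chunks: floor((n - 1) / 3) + 1.
/// `n_validators` must be non-zero.
pub fn systematic_threshold(n_validators: u32) -> u32 {
    assert!(n_validators > 0, "need at least one validator");
    (n_validators - 1) / 3 + 1
}

/// Hypothetical permutation: validator `v` on core `c` holds chunk
/// `(c * threshold + v) mod n`. For each core this is a bijection between
/// validators and chunks, but the validators holding the systematic chunks
/// (indices 0..threshold) differ from core to core.
pub fn chunk_index(n_validators: u32, validator_index: u32, core_index: u32) -> u32 {
    let n = n_validators as u64;
    let start = (core_index as u64 * systematic_threshold(n_validators) as u64) % n;
    ((start + validator_index as u64) % n) as u32
}
```

With 10 validators, core 0 places its systematic chunks on validators 0 to 3, while core 1 places them on validators 6 to 9, spreading the recovery load.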

Relay chain node core developers.

An erasure coding algorithm is considered systematic if it preserves the original unencoded data as part of the resulting code.

… core_index that used to be occupied by a candidate in some parts of the dispute protocol is very complicated (see appendix A). This RFC assumes that availability-recovery processes initiated during …

Extensive testing will be conducted, both automated and manual. This proposal doesn't affect security or privacy.

This is a necessary data availability optimisation, as Reed-Solomon erasure coding has proven to be a top consumer of CPU time in Polkadot as we scale up the parachain block size and number of availability cores.

With this optimisation, preliminary performance results show that CPU time used for Reed-Solomon coding/decoding can be halved and total POV recovery time can decrease by 80% for large POVs. See more here.

Not applicable.

This is a breaking change. See the upgrade path section above. All validators and collators need to have upgraded their node versions before the feature is enabled via a governance call.

See comments on the tracking issue and the in-progress PR

Not applicable.

This enables future optimisations for the performance of availability recovery, such as retrieving batched systematic chunks from backers/approval-checkers.

This RFC proposes to change the SessionKeys::generate_session_keys runtime API interface. This runtime API is used by validator operators to generate new session keys on a node. The public session keys are then registered manually on chain by the validator operator.

Before this RFC, it was not possible for the on-chain logic to ensure that the account setting the public session keys is also in …

… generate_session_keys. Furthermore, this RFC proposes to change the return value of the generate_session_keys function to return not only the public session keys, but also the proof of ownership for the private session keys. The validator operator will then need to send the public session keys and the proof together when registering new session keys on chain.

When new public session keys are submitted to the on-chain logic, there is no verification of possession of the private session keys. This means that users can register essentially any public session keys on chain. While the on-chain logic ensures that there are no duplicate keys, someone could try to prevent others from registering new session keys by setting them first. While this wouldn't bring …

After this RFC, this kind of attack would no longer be possible, because the on-chain logic can verify that the sending account owns the private session keys.
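The verification idea can be pictured with the following sketch. It assumes the proof carries one signature per session key over the SCALE-encoded owner account id, and that the on-chain logic checks each one. This is consistent with verification costing one signature check per key, but the exact message layout is an assumption here. The Verifier trait, the OwnershipProof type and ToyScheme are hypothetical. ToyScheme is deliberately NOT a real signature scheme and exists only so the sketch runs without external crates.

```rust
/// Hypothetical verification interface for one session key type.
pub trait Verifier {
    fn verify(&self, public: &[u8], message: &[u8], signature: &[u8]) -> bool;
}

/// Hypothetical ownership proof: one signature per session key, in key order.
pub struct OwnershipProof {
    pub signatures: Vec<Vec<u8>>,
}

/// Accepts only if every session key signed the encoded owner account id.
/// Performs exactly one signature verification per session key.
pub fn verify_ownership<V: Verifier>(
    verifier: &V,
    public_keys: &[Vec<u8>],
    encoded_owner: &[u8],
    proof: &OwnershipProof,
) -> bool {
    public_keys.len() == proof.signatures.len()
        && public_keys
            .iter()
            .zip(&proof.signatures)
            .all(|(public, sig)| verifier.verify(public, encoded_owner, sig))
}

/// Toy scheme for illustration only (NOT secure, trivially forgeable):
/// a "signature" is the public key bytes followed by the message bytes.
pub struct ToyScheme;

impl Verifier for ToyScheme {
    fn verify(&self, public: &[u8], message: &[u8], signature: &[u8]) -> bool {
        signature.len() == public.len() + message.len()
            && signature.starts_with(public)
            && signature.ends_with(message)
    }
}
```

Binding each signature to the owner account is what prevents a third party from front-running someone else's public keys: they cannot produce signatures for keys they do not control.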

We are first going to explain the proof format being used:

… on Polkadot.

… already gets the proof passed as Vec<u8>. This proof needs to be decoded to the actual Proof type as explained above. The proof and the SCALE-encoded account_id of the sender are used to verify the ownership of the SessionKeys.

Drawbacks

Validator operators need to pass their account id when rotating their session keys in a node. This will require updating some high-level docs and making users familiar with the slightly changed ergonomics.

Testing, Security, and Privacy

Testing of the new changes only requires passing an appropriate owner for the current testing context. The changes to the proof generation and verification were audited to ensure they are correct.

Performance, Ergonomics, and Compatibility

Performance

The session key generation is an offchain process and thus doesn't influence the performance of the chain. Verifying the proof is done on chain as part of the transaction logic for setting the session keys, and it requires one signature verification per individual session key. As session keys are set quite rarely, this should not influence the overall system performance.

Ergonomics

The interfaces have been optimized to make it as easy as possible to generate the ownership proof.

Compatibility

This RFC introduces a new version of the SessionKeys runtime API. Thus, nodes should be updated before a runtime containing these changes is enacted; otherwise, they will fail to generate session keys. The RPC that exists around this runtime API needs to be updated to support passing the account id and returning the ownership proof alongside the public session keys.

UIs would need to be updated to support the new RPC and the changed on-chain logic.

Prior Art and References

None.

Unresolved Questions

None.

Future Directions and Related Material

Substrate implementation of the RFC.


Summary

The Fellowship Manifesto states that members should receive a monthly allowance on par with gross income in OECD countries. This RFC proposes concrete amounts.

Motivation

One motivation for the Technical Fellowship is to provide an incentive mechanism that can induct and retain technical talent for the continued progress of the network.

In order for members to uphold their commitment to the network, they should receive support to …

Note: Goals of the Fellowship, expectations for each Dan, and conditions for promotion and demotion are all explained in the Manifesto. This RFC is only to propose concrete values for allowances.

Stakeholders


- Fellowship members

- Polkadot Treasury

Explanation

This RFC proposes agreeing on salaries relative to a single level, the III Dan. As such, any change to the amount or to the asset used would only need to be made to that single value, and all other levels would adjust proportionally. A III Dan is someone whose contributions match the expectations of a full-time individual contributor.
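Since every level is expressed relative to the III Dan, updating the amount means changing one base value and rescaling. A minimal arithmetic sketch, assuming integer amounts and per-rank ratios expressed in percent; the function name and ratio representation are hypothetical, and the actual ratios proposed by this RFC are not reproduced here.

```rust
/// Derives each rank's allowance from the III Dan base amount.
/// `ratios_percent[i]` is rank i's allowance as a percentage of the base
/// (hypothetical representation: 100 means "same as III Dan").
/// Integer division rounds down; `checked_mul` returns None on overflow.
pub fn allowances(base: u64, ratios_percent: &[u64]) -> Option<Vec<u64>> {
    ratios_percent
        .iter()
        .map(|r| base.checked_mul(*r).map(|v| v / 100))
        .collect()
}
```

With placeholder ratios of 50, 100 and 150 percent, raising the base from 10,000 to 12,000 moves every level by the same 20%, without touching the ratios themselves.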

…

Updates

Updates to these levels, whether relative ratios, the asset used, or the amount, shall be done via RFC.

Drawbacks

By not using DOT for payment, the protocol relies on the stability of other assets and the ability to acquire them. However, the asset of choice can be changed in the future.

Testing, Security, and Privacy

N/A.

Performance, Ergonomics, and Compatibility

Performance

N/A

Ergonomics

N/A

Compatibility

N/A

Prior Art and References

- The Polkadot Fellowship Manifesto


Summary

When two peers connect to each other, they open (amongst other things) a so-called "notifications protocol" substream dedicated to gossiping transactions to each other.

Each notification on this substream currently consists of a SCALE-encoded Vec<Transaction>, where Transaction is defined in the runtime.

This RFC proposes to modify the format of the notification to become (Compact(1), Transaction). This maintains backwards compatibility, as this new format decodes as a Vec of length equal to 1.
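The backwards-compatibility claim follows from how SCALE encodes a Vec: a compact-encoded length followed by the concatenated items. The sketch below, assuming the transaction is already SCALE-encoded into bytes, checks that a (Compact(1), Transaction) notification is byte-for-byte identical to a one-element Vec<Transaction>. The helper names are hypothetical; the compact modes follow the SCALE codec's rules.

```rust
/// SCALE compact encoding of a u32. The two low bits of the first byte select
/// the mode: single-byte, two-byte, four-byte, or big-integer.
pub fn encode_compact_u32(n: u32) -> Vec<u8> {
    match n {
        0..=0x3f => vec![(n as u8) << 2],
        0x40..=0x3fff => (((n as u16) << 2) | 0b01).to_le_bytes().to_vec(),
        0x4000..=0x3fff_ffff => ((n << 2) | 0b10).to_le_bytes().to_vec(),
        _ => {
            // Big-integer mode: upper six bits hold (byte count - 4) = 0.
            let mut out = vec![0b11];
            out.extend_from_slice(&n.to_le_bytes());
            out
        }
    }
}

/// SCALE encoding of a Vec<T> whose items are already SCALE-encoded:
/// Compact(len) followed by the concatenated items.
pub fn encode_vec(items: &[Vec<u8>]) -> Vec<u8> {
    let mut out = encode_compact_u32(items.len() as u32);
    for item in items {
        out.extend_from_slice(item);
    }
    out
}

/// Proposed notification format: (Compact(1), Transaction).
pub fn encode_notification(scale_tx: &[u8]) -> Vec<u8> {
    let mut out = encode_compact_u32(1);
    out.extend_from_slice(scale_tx);
    out
}
```

Since 1 fits in the single-byte mode, Compact(1) is always the single byte 0x04, so an old receiver decoding the notification as a Vec simply sees one item.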

Motivation

There are three motivations behind this change:

- …

- It makes the implementation much more straightforward by not having to repeat code related to back-pressure. See the explanations below.

Stakeholders

Low-level developers.

Explanation

To give an example, if you send one notification with three transactions, the bytes that are sent on the wire are:

concat( leb128(total-size-in-bytes-of-the-rest), …

… This is equivalent to forcing the Vec<Transaction> to always have a length of 1, and I expect the Substrate implementation to simply modify the sending side to add a for loop that sends one notification per item in the Vec.

As explained in the motivation section, this allows extracting scale(transaction) items without having to know how to decode them.

By "flattening" the two-step hierarchy, an implementation only needs to back-pressure individual notifications rather than back-pressure notifications and transactions within notifications.
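On the sending side, the change amounts to the for loop described above. A minimal sketch, assuming transactions are already SCALE-encoded and that each notification is framed with an unsigned LEB128 length prefix, as in the leb128(total-size-in-bytes-of-the-rest) prefix shown above. The function names are hypothetical and this is not the Substrate networking code.

```rust
/// Unsigned LEB128: 7 bits per byte, high bit set on every byte but the last.
pub fn leb128(mut n: usize) -> Vec<u8> {
    let mut out = Vec::new();
    loop {
        let byte = (n & 0x7f) as u8;
        n >>= 7;
        if n == 0 {
            out.push(byte);
            return out;
        }
        out.push(byte | 0x80);
    }
}

/// Frames each transaction as its own notification:
/// leb128(len(rest)) ++ Compact(1) ++ scale(transaction).
pub fn frame_one_per_notification(transactions: &[Vec<u8>]) -> Vec<Vec<u8>> {
    transactions
        .iter()
        .map(|tx| {
            let mut body = vec![0x04]; // Compact(1) in single-byte mode: 1 << 2
            body.extend_from_slice(tx);
            let mut frame = leb128(body.len());
            frame.extend_from_slice(&body);
            frame
        })
        .collect()
}
```

Each frame can be back-pressured on its own, and the bytes after the 0x04 are exactly scale(transaction), so they can be handled without decoding the Transaction type.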

Drawbacks

This RFC chooses to maintain backwards compatibility at the cost of introducing a very small wart (the Compact(1)).

An alternative could be to introduce a new version of the transactions notifications protocol that sends one Transaction per notification, but this is significantly more complicated to implement and can always be done later in case the Compact(1) is bothersome.

Testing, Security, and Privacy

Irrelevant.

Performance, Ergonomics, and Compatibility

Performance

Irrelevant.

Ergonomics

Irrelevant.

Compatibility

The change is backwards compatible if done in two steps: modify the sender to always send one transaction per notification, then, after a while, modify the receiver to enforce the new format.
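The second step of the rollout, where the receiver starts enforcing the new format, could look like the sketch below. It assumes the LEB128 length prefix has already been stripped by the substream framing and only inspects the leading Compact count. `decode_compact`, `accept_notification`, and the `strict` flag (standing in for "after a while") are hypothetical names.

```rust
/// Decodes a SCALE compact integer prefix, returning (value, bytes consumed).
/// Sketch only: big-integer mode is limited to values fitting in u64, and the
/// canonical-encoding checks a real decoder performs are omitted.
pub fn decode_compact(bytes: &[u8]) -> Option<(u64, usize)> {
    let first = *bytes.first()?;
    match first & 0b11 {
        0b00 => Some((u64::from(first >> 2), 1)),
        0b01 => {
            let raw = u16::from_le_bytes([first, *bytes.get(1)?]);
            Some((u64::from(raw >> 2), 2))
        }
        0b10 => {
            let b = bytes.get(..4)?;
            let raw = u32::from_le_bytes([b[0], b[1], b[2], b[3]]);
            Some((u64::from(raw >> 2), 4))
        }
        _ => {
            let len = usize::from(first >> 2) + 4;
            if len > 8 {
                return None;
            }
            let b = bytes.get(1..1 + len)?;
            let mut buf = [0u8; 8];
            buf[..len].copy_from_slice(b);
            Some((u64::from_le_bytes(buf), 1 + len))
        }
    }
}

/// Receiver-side check. `strict = false` models the transition period and
/// `strict = true` the enforcement step. On success, returns the item count
/// and the remaining bytes; in strict mode those bytes are exactly
/// scale(transaction) and can be forwarded without decoding it.
pub fn accept_notification(notification: &[u8], strict: bool) -> Option<(u64, &[u8])> {
    let (count, consumed) = decode_compact(notification)?;
    if strict && count != 1 {
        return None;
    }
    Some((count, &notification[consumed..]))
}
```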

Prior Art and References

Irrelevant.

Unresolved Questions

None.

Future Directions and Related Material

None. This is a simple isolated change.


Summary

This RFC proposes to make the mechanism of RFC #8 more generic by introducing the concept of "capabilities".

Implementations can implement certain "capabilities", such as serving old block headers or being a parachain bootnode.

The discovery mechanism of RFC #8 is extended to be able to discover nodes of specific capabilities.

Motivation

The Polkadot peer-to-peer network is made of nodes. Not all these nodes are equal. Some nodes store only the headers of recent blocks, some nodes store all the block headers and bodies since the genesis, some nodes store the storage of all blocks since the genesis, and so on.

It is currently not possible to know ahead of time (without connecting to it and asking) which nodes have which data available, and it is not easily possible to build a list of nodes that have a specific piece of data available.

If you want to download, for example, the header of block 500, you have to connect to a randomly-chosen node, ask it for block 500, and if it says that it doesn't have the block, disconnect and try another randomly-chosen node. In certain situations, such as downloading the storage of old blocks, nodes that have the information are relatively rare, and finding such a node through trial and error can take a long time.

This RFC attempts to solve this problem by making it possible to build a list of nodes that are capable of serving specific data.

Stakeholders

Low-level client developers. People interested in accessing the archive of the chain.

Explanation

Reading RFC #8 first might help with comprehension, as this RFC is very similar.

Please keep in mind while reading that everything below applies to both relay chains and parachains, except where mentioned otherwise.

Capabilities

…

Drawbacks

None that I can see.

Testing, Security, and Privacy

The content of this section is basically the same as the one in RFC 8.

This mechanism doesn't add or remove any security by itself, as it relies on existing mechanisms.

Due to the way Kademlia works, it would become the responsibility of the 20 Polkadot nodes whose sha256(peer_id) is closest to the key (described in the explanations section) to store the list of nodes that have specific capabilities. Furthermore, when a large number of providers are registered, only the providers closest to the key are kept, up to a certain implementation-defined limit.

For this reason, an attacker can abuse this mechanism by randomly generating libp2p PeerIds until they find the 20 entries closest to the key representing the target capability. They are then in control of the list of nodes with that capability. While this cannot cause direct harm by itself, it could lead to eclipse attacks.

Because the key changes periodically and isn't predictable, and assuming that the Polkadot DHT is sufficiently large, it is not realistic for an attack like this to be maintained in the long term.
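The notion of "closest" here is Kademlia's XOR metric. A minimal sketch, assuming the peer ids have already been hashed with sha256 (hashing is omitted to keep the example dependency-free); the helper names are hypothetical and this is not the libp2p API. In the scenario above, `k` would be 20.

```rust
/// A peer id already hashed with sha256.
pub type Hash = [u8; 32];

/// Kademlia XOR distance between two 256-bit identifiers.
pub fn xor_distance(a: &Hash, b: &Hash) -> Hash {
    let mut d = [0u8; 32];
    for (out, (x, y)) in d.iter_mut().zip(a.iter().zip(b.iter())) {
        *out = x ^ y;
    }
    d
}

/// Returns the `k` identifiers closest to `key`, nearest first. Byte arrays
/// compare lexicographically, which matches comparing the distances as
/// big-endian 256-bit integers.
pub fn closest_peers(key: &Hash, peers: &[Hash], k: usize) -> Vec<Hash> {
    let mut sorted = peers.to_vec();
    sorted.sort_by_key(|p| xor_distance(key, p));
    sorted.truncate(k);
    sorted
}
```

An attacker grinding PeerIds is effectively searching for hashes that sort first under this ordering for a given key, which is why a key that changes periodically and unpredictably makes the attack hard to sustain.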

Performance, Ergonomics, and Compatibility

Performance

The DHT mechanism generally has a low overhead, especially given that publishing providers is done only every 24 hours.

Doing a Kademlia iterative query and then sending a provider record shouldn't take more than around 50 KiB of bandwidth in total for the parachain bootnode.

Assuming 1000 nodes with a specific capability, the 20 Polkadot full nodes corresponding to that capability will each receive a sudden spike of a few megabytes of networking traffic when the key rotates. Again, this is relatively negligible. If this becomes a problem, one can add a random delay before a node registers itself as the provider of the key corresponding to BabeApi_next_epoch.

Maybe the biggest uncertainty is the traffic that the 20 Polkadot full nodes will receive from light clients that want to know which nodes have a given capability. If this ever becomes a problem, this value of 20 is an arbitrary constant that can be increased for more redundancy.

Ergonomics

Irrelevant.

Compatibility

Irrelevant.

Prior Art and References

Unknown.

Unresolved Questions

While it doesn't fundamentally change much for this RFC, using BabeApi_currentEpoch and BabeApi_nextEpoch might be inappropriate. I'm not familiar enough with good practices within the runtime to have an opinion here. Should it be an entirely new pallet?

Future Directions and Related Material

This RFC would make it possible to reliably discover archive nodes, which would make it possible to reliably send archive node requests, something that isn't currently possible. This could solve the problem of finding archive RPC node providers by migrating archive-related requests to the native peer-to-peer protocol rather than JSON-RPC.

If we ever decide to break backwards compatibility, we could divide the "history" and "archive" capabilities in two, between nodes capable of serving older blocks and nodes capable of serving newer blocks. We could even add to the peer-to-peer network nodes that are only capable of serving older blocks (by reading from a database) but do not participate in the head of the chain, and that just exist for historical purposes.


Summary

To interact with chains in the Polkadot ecosystem it is required to know how transactions are encoded and how to read state. For doing this, Polkadot-SDK, the framework used by most of the chains in the Polkadot ecosystem, exposes metadata about the runtime to the outside. UIs, wallets, and others can use this metadata to interact with these chains. This makes the metadata a crucial piece of the transaction encoding as users are relying on the interacting software to encode the transactions in the correct format.

It gets even more important when the user signs the transaction in an offline wallet, as the device by its nature cannot get access to the metadata without relying on the online wallet to provide it. As a result, the offline wallet needs to trust an online party, which renders the security assumptions of the offline device moot.

This RFC proposes a way for offline wallets to leverage metadata within their constraints. The design idea is that the metadata is chunked and these chunks are put into a merkle tree. The root hash of this merkle tree represents the metadata. The offline wallets can use the root hash to decode transactions by getting proofs for the individual chunks of the metadata. This root hash is also included in the signed data of the transaction (but not sent as part of the transaction). The runtime then includes its known metadata root hash when verifying the transaction. If the metadata root hash known by the runtime differs from the one that the offline wallet used, it very likely means that the online wallet provided some fake data, and the verification of the transaction fails.
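The chunk-and-merkleize idea can be illustrated with a generic binary merkle tree. This is a simplified sketch, not the construction specified by this RFC: it splits raw bytes into fixed-size chunks, and it uses the standard library's `DefaultHasher` purely as a dependency-free placeholder hash. `DefaultHasher` is not cryptographic and must not be used for this purpose in practice; all function names are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Placeholder hash: NOT cryptographically secure, illustration only.
fn hash_bytes(data: &[u8]) -> u64 {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    hasher.finish()
}

fn hash_pair(left: u64, right: u64) -> u64 {
    let mut buf = [0u8; 16];
    buf[..8].copy_from_slice(&left.to_le_bytes());
    buf[8..].copy_from_slice(&right.to_le_bytes());
    hash_bytes(&buf)
}

/// Splits the metadata into `chunk_size`-byte chunks (must be > 0) and builds
/// every tree level, from leaf hashes up to the root. An unpaired node is
/// carried up unchanged.
pub fn build_levels(metadata: &[u8], chunk_size: usize) -> Vec<Vec<u64>> {
    let mut levels = vec![metadata.chunks(chunk_size).map(hash_bytes).collect::<Vec<u64>>()];
    while levels.last().map_or(false, |l| l.len() > 1) {
        let next = levels
            .last()
            .unwrap()
            .chunks(2)
            .map(|p| if p.len() == 2 { hash_pair(p[0], p[1]) } else { p[0] })
            .collect::<Vec<u64>>();
        levels.push(next);
    }
    levels
}

/// The root hash representing the whole metadata (None if it is empty).
pub fn root(levels: &[Vec<u64>]) -> Option<u64> {
    levels.last()?.first().copied()
}

/// Sibling hashes needed to recompute the root from chunk `index`;
/// the bool records whether the sibling sits on the left.
pub fn proof(levels: &[Vec<u64>], mut index: usize) -> Vec<(u64, bool)> {
    let mut path = Vec::new();
    for level in &levels[..levels.len().saturating_sub(1)] {
        let sibling = index ^ 1;
        if sibling < level.len() {
            path.push((level[sibling], sibling < index));
        }
        index /= 2;
    }
    path
}

/// The check an offline wallet would run on each chunk it is given.
pub fn verify_chunk(chunk: &[u8], path: &[(u64, bool)], expected_root: u64) -> bool {
    let computed = path.iter().fold(hash_bytes(chunk), |acc, &(sibling, is_left)| {
        if is_left { hash_pair(sibling, acc) } else { hash_pair(acc, sibling) }
    });
    computed == expected_root
}
```

In the flow described above, the wallet would additionally fold the root into the data it signs, so a runtime that computes a different root rejects the transaction even if a tampered chunk slipped past the wallet.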

Users depend on offline wallets to correctly display decoded transactions before signing. With merkleized metadata, they can be assured of the transaction's legitimacy, as incorrect transactions will be rejected by the runtime.

Motivation

Polkadot's innovative design (both relay chain and parachains) gives developers the ability to upgrade their network as frequently as they need. These systems manage to keep integrations working after upgrades with the help of FRAME Metadata. This metadata, which is on the order of half a MiB for most Polkadot-SDK chains, completely describes chain interfaces and properties. Securing this metadata is key for users to be able to interact with the Polkadot-SDK chain in the expected way.