SteerBot - A 51515 Robot Inventor car that edge tracks a line #166

Replies: 12 comments 2 replies

-

This is really nice, thanks for sharing! One thing I was curious about is whether you could get away without calibrating the steering, since the motors have absolute encoders.


-

Thanks, David! And thanks for Pybricks.


-

...and did you try LEGO Python as well, to show the benefits and drawbacks of the Pybricks and LEGO implementations?


-

This is great, thanks for sharing both the code and the design 😄 Looks like you've already found quite a few fun Pybricks features. Here are a few more tricks you might be interested in for your next creation:


-

I'm curious, is the extra resolution of

```python
from pybricks.pupdevices import ColorSensor
from pybricks.parameters import Port
from pybricks.tools import wait

sensor = ColorSensor(Port.B)

while True:
    # Gets the HSV value
    color = sensor.hsv()

    # Print saturation
    print(color.s)

    # Wait
    wait(500)
```

If it's a noticeable/big difference in performance in your application, then others might experience the same. If so, we can probably consider looking at increasing the resolution for


-

Thanks for the feedback, Laurens! I will incorporate your suggestions and then update the code here. I will also investigate reading the saturation from hsv. I think the resolution should be adequate, but I will test to confirm.

Philo, there are definitely some features of the Pybricks firmware that I took advantage of, but I can't say conclusively that this can't be done as well with LEGO Python. While Pybricks still has some rough edges, crashed a few times, and is nontrivial to install and set up so you can connect to the hub, it has one HUGE advantage over LEGO Python, and that is the documentation. It is fantastic. Take a look here: https://docs.pybricks.com/en/latest/pupdevices.html and make sure to click on Show Examples.


-

I have created some preliminary building instructions for the SteerBot. These do not show the cable routing but it was not difficult to route cables under and around the Hub.


-

Nitpicker's dept.: differential is supposed to be grey, not white ;)


-

This is great @GusJansson --- thanks for sharing.


-

Philo! You are right! I fixed it in the model. It makes me happy that you went through the build. Thanks. Now, to get the wires done I need to either go back to trusty old MLCad, which I have not used in years, or invest the time to learn LDCad. What do you suggest? There is no way to do the wires in Studio, right?


-

No, so far Studio can't create cables. I would definitely try to learn LDCad: creating flexible parts with LDCad is almost easy... After that, there remains the problem of compatibility between cables generated in the LDraw domain and Studio building instructions. With direct import, Studio generates BIs for the cable itself ;)


-

Thanks again for sharing this! Looks like a good opportunity for me to try out Pybricks and adapt your code to what I'm thinking for "racing line" tracking with LEGO race car models.


-

Description

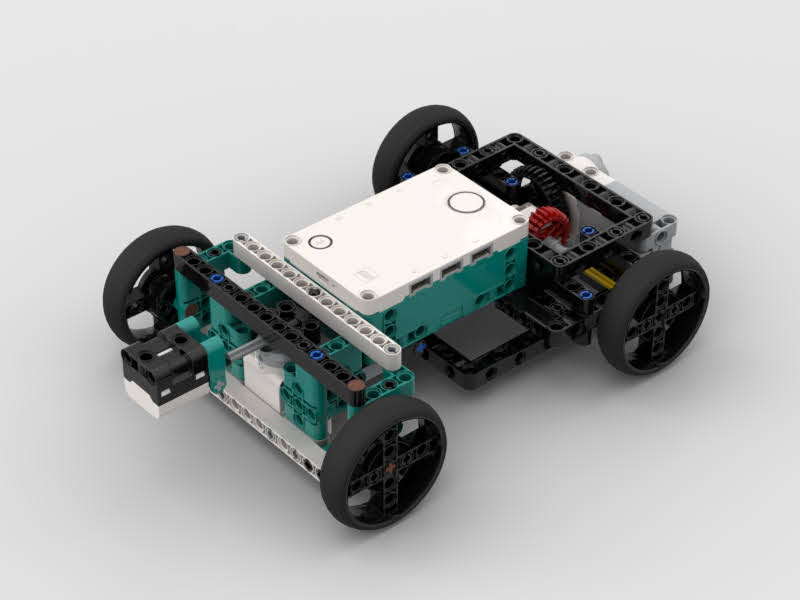

A robot with car-like steering has been one of my favorite exercises in robot building. This project, in fact, is largely a variation of what I have previously done with the LEGO RCX, the NXT and, to a lesser extent, the EV3. But with the 51515 set I was able to take advantage of the improved resolution of the color sensor as well as some cool features of Pybricks to make the main tracking code pretty simple.

The robot features Ackermann steering. This means that the steering linkage is more trapezoidal than parallel. The front wheels are only parallel when the steering is pointing straight ahead. In a turn, the inside wheel turns more sharply than the outside wheel, because the inside wheel follows a tighter turning radius, so both wheels track more accurately. This is particularly important for achieving a tight overall turning radius with a relatively wide robot.
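To make the geometry concrete, here is a minimal sketch of the ideal Ackermann condition, in which both front wheels point along circles around the same turn center. The dimensions are made up for illustration and are not measured from SteerBot; a real trapezoidal linkage only approximates these angles.

```python
import math

def ackermann_angles(turn_radius, wheelbase, track):
    """Ideal front-wheel angles (degrees) for a turn of the given radius.

    turn_radius is measured from the turn center to the middle of the
    rear axle; all lengths use the same unit.
    """
    inner = math.degrees(math.atan(wheelbase / (turn_radius - track / 2)))
    outer = math.degrees(math.atan(wheelbase / (turn_radius + track / 2)))
    return inner, outer

# Hypothetical dimensions in cm, chosen only for illustration.
inner, outer = ackermann_angles(turn_radius=30, wheelbase=16, track=12)
print(f"inner {inner:.1f} deg, outer {outer:.1f} deg")
```

The inner angle always comes out larger than the outer one, and the gap grows as the turn gets tighter or the robot gets wider.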

The robot uses the Color Sensor mounted high on an axle that is attached to the steering motor. To track a line (actually just the edge of the line), the tracking code tries to keep the sensor on that edge. If the sensor stays on the edge, the robot follows the edge as it drives, since it is always steering toward where the edge is in front of it. The sensor is mounted high in order to give a gradual reading across the edge, so that while near the edge the program can determine where the edge is relative to the sensor.
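One way to picture this idea is a simple proportional correction. The sketch below is not the SteerBot source code: `steering_step`, `fake_sensor`, the gain, the sign convention and all numbers are assumptions made for illustration, and the sensor is a toy model so the loop can run without a hub.

```python
def steering_step(reading, target, angle, gain=0.2, max_angle=40):
    """Return a new steering angle (degrees) from one sensor reading.

    A reading above target is assumed to mean the sensor has drifted
    onto the tape, so the steering (and the sensor with it) is moved
    back the other way.
    """
    error = reading - target
    new_angle = angle - gain * error
    return max(-max_angle, min(max_angle, new_angle))

def fake_sensor(angle, edge_angle, slope=4.0, low=0, high=100):
    """Toy sensor: the reading rises linearly as the sensor swings past the edge."""
    mid = (low + high) / 2
    return max(low, min(high, mid + slope * (angle - edge_angle)))

# The edge sits where the steering would be at 10 degrees; start at 0.
angle = 0.0
for _ in range(20):
    reading = fake_sensor(angle, edge_angle=10.0)
    angle = steering_step(reading, target=50, angle=angle)
print(round(angle, 3))
```

Because the sensor moves with the steering, every correction also changes the next reading, which is why the angle settles on the edge instead of overshooting forever. The gradual reading across the edge is what makes the error proportional rather than just on/off.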

The driving is done through the super cool 5-gear differential that is included with the set. This is the first time I have used this LEGO differential and I love it! The drive motor is actually geared up with the intention of being able to go really fast. On my tight test track I was never able to make it go full speed without running off the line.

Video

https://www.youtube.com/watch?v=i4WPa-rtFB8&feature=youtu.be

Source Code

Programming Notes

Originally I was using the reflection() light value from the ColorSensor. With the blue painter's tape I had available, and with the sensor mounted high in order to get a gradual value as the sensor passed over the edge, I got a rather unsatisfying resolution in the sensor value: something like 3 on the tape and 29 over the white surface off the tape, along with a lot of variation as the sensor bounced up and down on the axle. After talking to Laurens about this, I found out I could get the raw sensor values using the LUMPDevice class. After a little experimenting I discovered that the raw Saturation value was perfect. With the Saturation value you are not measuring how much light is coming back into the sensor, but how much color there is. Since my tape is blue, I got a high saturation value over the tape (about 675) and a low value (about 100) over the white surface, since there is no color.
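The difference between "how much light" and "how much color" can be illustrated with the standard RGB to HSV conversion from Python's `colorsys` module. The RGB values below are made-up stand-ins for bright and dim views of each surface, not sensor data; a real sensor will not report exactly zero saturation on white.

```python
import colorsys

def describe(name, r, g, b):
    """Return (name, saturation %, value %) for an RGB color with 0..1 channels."""
    _, s, v = colorsys.rgb_to_hsv(r, g, b)
    return name, round(s * 100), round(v * 100)

# Made-up RGB values standing in for what a sensor might see.
samples = [
    describe("white board, bright", 0.90, 0.90, 0.90),
    describe("white board, dim", 0.60, 0.60, 0.60),
    describe("blue tape, bright", 0.10, 0.30, 0.90),
    describe("blue tape, dim", 0.05, 0.15, 0.45),
]
for name, sat, val in samples:
    print(f"{name}: saturation {sat}%, value {val}%")
```

In this idealized model the brightness (value) changes a lot between the bright and dim samples, while the saturation of each surface stays the same. That is consistent with saturation being less sensitive to the sensor bouncing up and down.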

UPDATE: On the suggestion from Laurens, I'm now using the ColorSensor .hsv method to get the saturation value. This value is an integer percent and in my case I get a value of about 90 over the blue tape and 15 off the tape over the white board. This is plenty of resolution and this value is more stable than the raw LUMPDevice saturation value which had quite a bit of noise in it.
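With two calibration values like these, a reading can be turned into a signed distance from the edge. This is a minimal sketch, assuming the edge corresponds to the midpoint between the two readings; the function name `edge_offset` and the clamping are illustrative choices, not taken from the SteerBot code.

```python
ON_TAPE = 90   # saturation % over the blue tape (value reported above)
OFF_TAPE = 15  # saturation % over the white board (value reported above)

def edge_offset(saturation, on_tape=ON_TAPE, off_tape=OFF_TAPE):
    """Map a saturation reading to -1.0 (fully off tape) .. +1.0 (fully on tape).

    0.0 means the reading is halfway between the two calibration values,
    which is assumed here to be the edge of the line.
    """
    midpoint = (on_tape + off_tape) / 2
    half_range = (on_tape - off_tape) / 2
    offset = (saturation - midpoint) / half_range
    return max(-1.0, min(1.0, offset))

for reading in (15, 40, 52.5, 70, 90):
    print(reading, round(edge_offset(reading), 2))
```

On the hub, the reading would come from the saturation component of the sensor's `hsv()` result, as in the snippet Laurens posted in the comments.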

I also had some fun controlling the speed of the robot. My test track is very tight and the boards are only 2 feet (61 cm) wide. That means that if the robot was driving fast it had no chance to make the turns and stay on the boards. But a slow robot is no fun, so I worked on a scheme to go fast when going straight but slow down in turns, especially when the sensor was no longer near the edge of the line. And, as any good driver will tell you, it is best to control the speed smoothly, gradually speeding up and slowing down.
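A scheme like this could be sketched as a function that picks a target speed from how hard the robot is steering and how far the sensor is from the edge. Everything here is hypothetical: the name `target_speed`, the speed limits, the maximum steering angle and the choice to use the larger of the two penalties are assumptions, not the actual SteerBot logic.

```python
def target_speed(steer_angle, edge_error, max_speed=1000, min_speed=300,
                 max_angle=40):
    """Pick a drive speed: fast when straight and on the edge, slow otherwise.

    steer_angle is in degrees, edge_error is in -1..+1 (0 = on the edge).
    All default numbers are placeholders, not values from SteerBot.
    """
    turn = min(abs(steer_angle) / max_angle, 1.0)  # 0 = straight, 1 = full lock
    lost = min(abs(edge_error), 1.0)               # 0 = on edge, 1 = far from it
    slowdown = max(turn, lost)
    return max_speed - (max_speed - min_speed) * slowdown

print(target_speed(0, 0.0))    # straight and on the edge
print(target_speed(20, 0.0))   # moderate turn
print(target_speed(5, 0.9))    # nearly lost the edge
```

The result is only a target; changing the motor speed toward it gradually is a separate step.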

UPDATE: Initially I was doing this speed control by gradually changing the speed of the robot in my code to accelerate and slow down. It turns out that there is a much better way in Pybricks: using control.limits on the motor. Using the control.limits method I can set the acceleration to a value that works better with this robot, making the power control smooth but still aggressive enough to go fast around the track. The robot is now a bit faster on the track than in the video.
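For comparison, a hand-rolled version of "gradually changing the speed" might look like the sketch below, where the speed may change by at most a fixed step per loop iteration. The `ramp` helper and the numbers are illustrative assumptions; with an acceleration limit set through control.limits, the motor controller handles this kind of smoothing instead of the user program.

```python
def ramp(current, target, max_step):
    """Move current toward target by at most max_step."""
    if target > current:
        return min(current + max_step, target)
    return max(current - max_step, target)

speed = 0
history = []
# Ask for full speed for four loop iterations, then for a slow corner speed.
for target in [1000] * 4 + [300] * 3:
    speed = ramp(speed, target, max_step=300)
    history.append(speed)
print(history)
```

A larger `max_step` plays the role of a higher acceleration setting: the robot reacts more aggressively but less smoothly.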

Building instructions

I have created some preliminary building instructions for the SteerBot. These do not show the cable routing but it was not difficult to route cables under and around the Hub.

steerbot.pdf

Port A: Steering Motor

Port B: Drive Motor

Port C: Color Sensor
