CSV-to-SQLite batch ingestion using the Spring Batch framework.

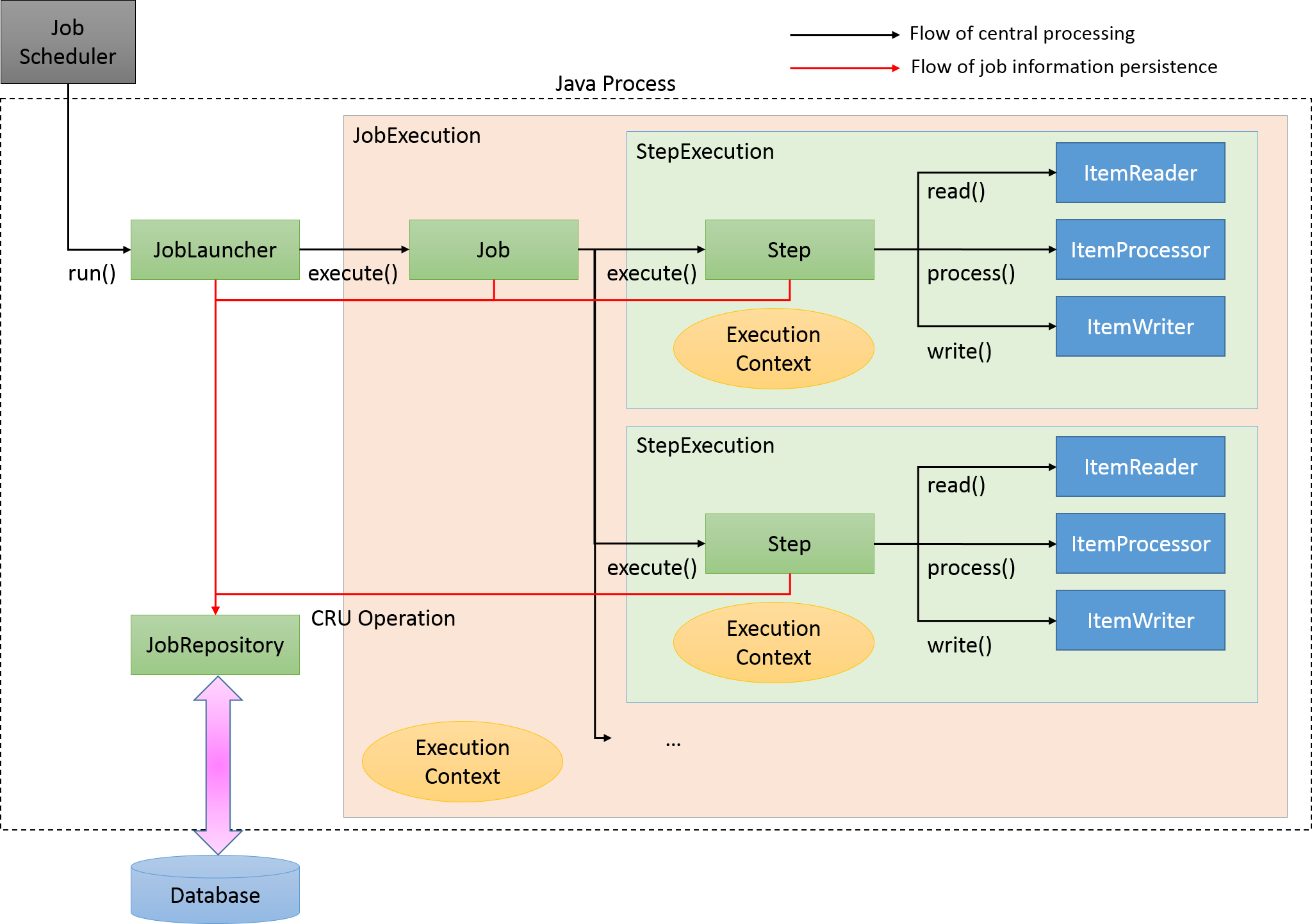

There are many ways to implement batch processing, but Spring Batch provides a complete toolkit for it. In this project, a single Spring Batch job, started through the JobLauncher, runs the ingestion. A job can define a multi-step execution flow, and the state of each step is tracked in its ExecutionContext.

Each step is built from three interfaces that you implement in your program: ItemReader, ItemProcessor and ItemWriter.

Here is the breakdown:

- The ItemReader implementation reads the records found in data.csv.

- The ItemProcessor implementation contains the business logic applied to each record before it is inserted into the database.

- The ItemWriter implementation contains the logic for inserting records into the database.
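The read, process, write flow described above can be sketched in plain Java. This is only an illustration of the chunk-oriented pattern, not the project's code: SimpleReader, SimpleProcessor, SimpleWriter and ChunkStepSketch are hypothetical stand-ins for the real Spring Batch interfaces in org.springframework.batch.item, whose signatures are richer, and the chunk size of 2 is an arbitrary choice.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical, simplified stand-ins for the Spring Batch contracts.
interface SimpleReader<T> { T read(); }                  // returns null when the input is exhausted
interface SimpleProcessor<I, O> { O process(I item); }   // returns null to filter an item out
interface SimpleWriter<T> { void write(List<T> chunk); } // receives one chunk at a time

class ChunkStepSketch {

    // Reads up to chunkSize items, processes each one, then writes the survivors as one chunk.
    // Returns the total number of items handed to the writer.
    static <I, O> int run(SimpleReader<I> reader, SimpleProcessor<I, O> processor,
                          SimpleWriter<O> writer, int chunkSize) {
        int written = 0;
        while (true) {
            List<I> items = new ArrayList<>();
            I item;
            while (items.size() < chunkSize && (item = reader.read()) != null) {
                items.add(item);
            }
            if (items.isEmpty()) {
                break; // nothing left to read
            }
            List<O> output = new ArrayList<>();
            for (I input : items) {
                O processed = processor.process(input);
                if (processed != null) {
                    output.add(processed);
                }
            }
            if (!output.isEmpty()) {
                writer.write(output);
                written += output.size();
            }
        }
        return written;
    }

    // Runs the loop over four in-memory lines and collects the chunks the writer received.
    static List<List<String>> demo() {
        Iterator<String> lines = Arrays.asList("a,1", "", "b,2", "c,3").iterator();
        List<List<String>> chunks = new ArrayList<>();
        SimpleReader<String> reader = () -> lines.hasNext() ? lines.next() : null;
        SimpleProcessor<String, String> processor =
                line -> line.isEmpty() ? null : line.toUpperCase();
        SimpleWriter<String> writer = chunks::add;
        run(reader, processor, writer, 2);
        return chunks;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

In the sketch the empty line is filtered out by the processor, so the first chunk reaches the writer with one item and the second with two.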

For the Spring application to communicate with the SQLite database, I added an implementation of the SQL dialect, which can be found in the package com.ms3.spring.batch.dialect.

For static files and application.properties configuration, please refer to the resources folder.

Install the following before running the software:

JDK 1.8

Maven

SQLiteStudio

In the application.properties file, set path.config to the directory where the project is located.

- Open the project in either Eclipse or IntelliJ.

- Run mvn clean install to download dependencies.

- Right-click BatchApplication.java and select Run as Spring Boot Application.

Once the setup is done and the application is up and running, follow these steps:

- Copy and paste this URL into a browser or REST client to run the batch ingestion:

GET http://localhost:8080/load

- Wait several minutes for the batch job to complete.

- Once the job is complete, verify that the browser or REST client shows this message:

// 20200531211053

// http://localhost:8080/load

"COMPLETED"

- You can verify the records either through the REST API or with SQLiteStudio.

REST API:

GET http://localhost:8080/record

- Open SQLiteStudio.

- Open the Database menu, then click "Add a database".

- Browse for the existing database file on the local computer and select it.

- Click OK.
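The two REST calls above can also be issued from Java instead of a browser. The following is a minimal sketch using only the JDK's HttpURLConnection; the class name BatchClientSketch and its helper methods are hypothetical, and it assumes the /load response body is the job status shown earlier, with or without surrounding quotes.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

class BatchClientSketch {

    // Sends a GET request and returns the response body as a single string.
    // No read timeout is set, so the call waits as long as the batch job runs.
    static String get(String url) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection();
        connection.setRequestMethod("GET");
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(connection.getInputStream(), StandardCharsets.UTF_8))) {
            StringBuilder body = new StringBuilder();
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line);
            }
            return body.toString();
        } finally {
            connection.disconnect();
        }
    }

    // Assumption: a successful run answers with COMPLETED, optionally wrapped in JSON quotes.
    static boolean isCompleted(String body) {
        return body.trim().replace("\"", "").equals("COMPLETED");
    }

    public static void main(String[] args) {
        try {
            String status = get("http://localhost:8080/load");
            System.out.println("Job status: " + status);
            if (isCompleted(status)) {
                System.out.println(get("http://localhost:8080/record"));
            }
        } catch (IOException e) {
            // The application must already be running on port 8080.
            System.out.println("Application not reachable: " + e.getMessage());
        }
    }
}
```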

In the terminal, run mvnw clean test.

- Every column of a record must be non-empty before the record is inserted into the database. Records with empty columns are treated as bad data and written to data-bad.csv.

- Columns H and I are treated as boolean values stored as strings, for easier implementation.

- Only alphanumeric characters are accepted. Characters such as â, €, ˜, etc. are treated as bad data.
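The rules above could be checked along the following lines. This is a hedged sketch, not the project's ItemProcessor: the class RecordValidationSketch and its methods are hypothetical, the input is assumed to be already split into columns, and the character rule is interpreted as "printable ASCII only", which is an assumption. The project's actual check may be stricter.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class RecordValidationSketch {

    // Assumption: a "bad" character is anything outside printable ASCII (space through tilde).
    static boolean isPrintableAscii(String value) {
        for (int i = 0; i < value.length(); i++) {
            char c = value.charAt(i);
            if (c < 0x20 || c > 0x7E) {
                return false;
            }
        }
        return true;
    }

    // A record is valid when every column is non-empty and contains only accepted characters.
    static boolean isValid(String[] columns) {
        for (String column : columns) {
            if (column == null || column.trim().isEmpty()) {
                return false;
            }
            if (!isPrintableAscii(column)) {
                return false;
            }
        }
        return true;
    }

    // Returns only the rejected records; in the project these would go to data-bad.csv.
    static List<String[]> badRecords(List<String[]> records) {
        List<String[]> bad = new ArrayList<>();
        for (String[] record : records) {
            if (!isValid(record)) {
                bad.add(record);
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        List<String[]> records = Arrays.asList(
                new String[] {"John", "Doe", "TRUE"},
                new String[] {"Jane", "", "FALSE"},        // empty column
                new String[] {"Jo\u00e2n", "Doe", "TRUE"}); // contains â
        System.out.println("Bad records: " + badRecords(records).size());
    }
}
```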

- Spring Batch - Framework for batch processing

- Spring Data - Spring Data commons

- Hibernate Validator - Hibernate Bean Validation

- Lombok - Used for common data utilities

- Maven - Dependency Management

- MapStruct - An annotation processor for generating type-safe bean mappers

- David Ramirez - Initial work - swingfox