Not Found

The requested item could not be located.

Perhaps you might want to check all posts or information pages.

The requested item could not be located.

Perhaps you might want to check all posts or information pages.

The requested item could not be located.

Perhaps you might want to check all posts or information pages.

| 2024-01-16 | Using udp-link to enhance TCP connections stability |

| 2020-05-13 | The zoo of binary-to-text encoding schemes |

| 2020-04-18 | Convert binary data to a text with the lowest overhead |

| 2020-01-18 | My notes for the "Pragmatic Thinking and Learning" book |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-08-21 | Organizing Unstructured Data |

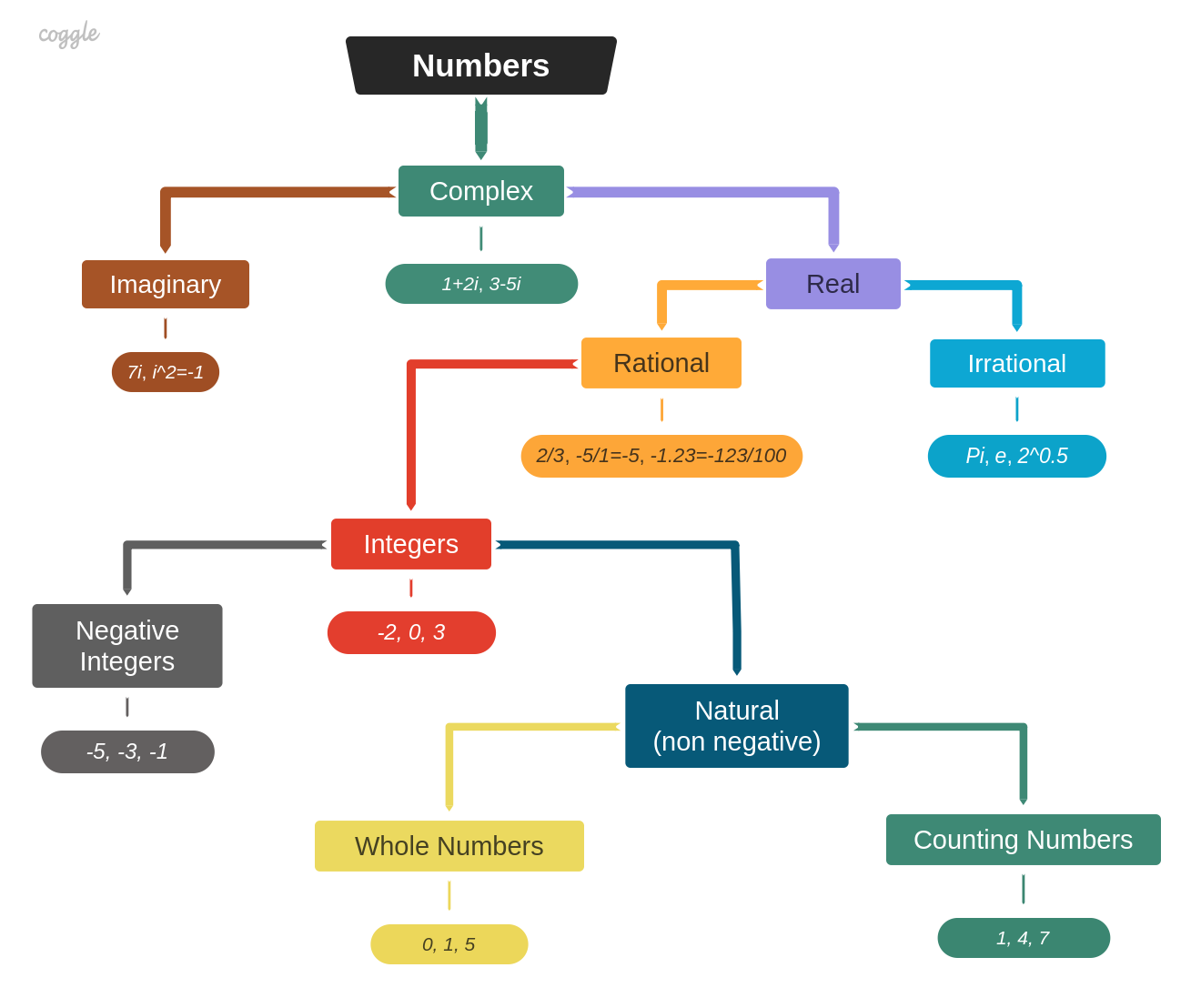

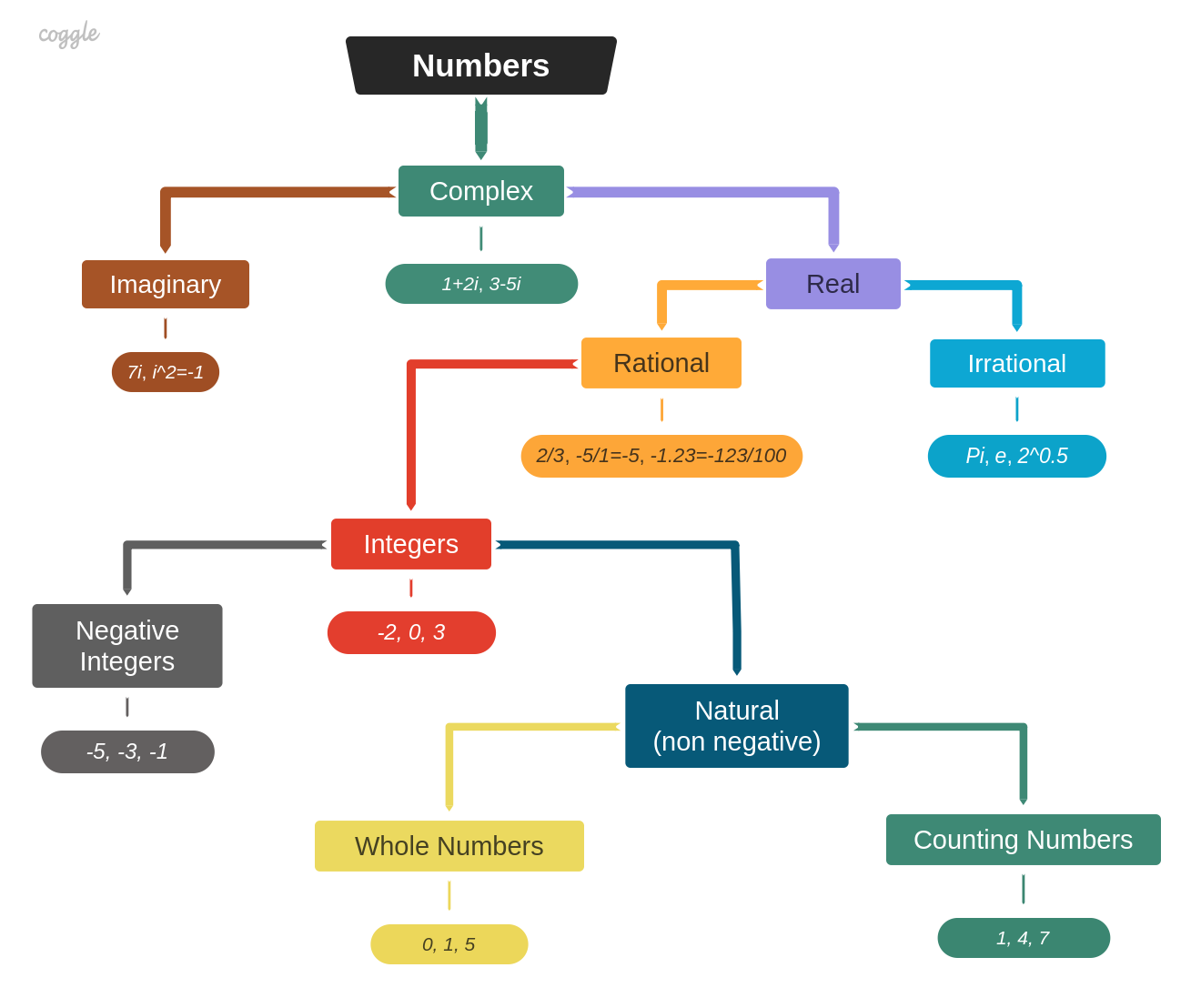

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

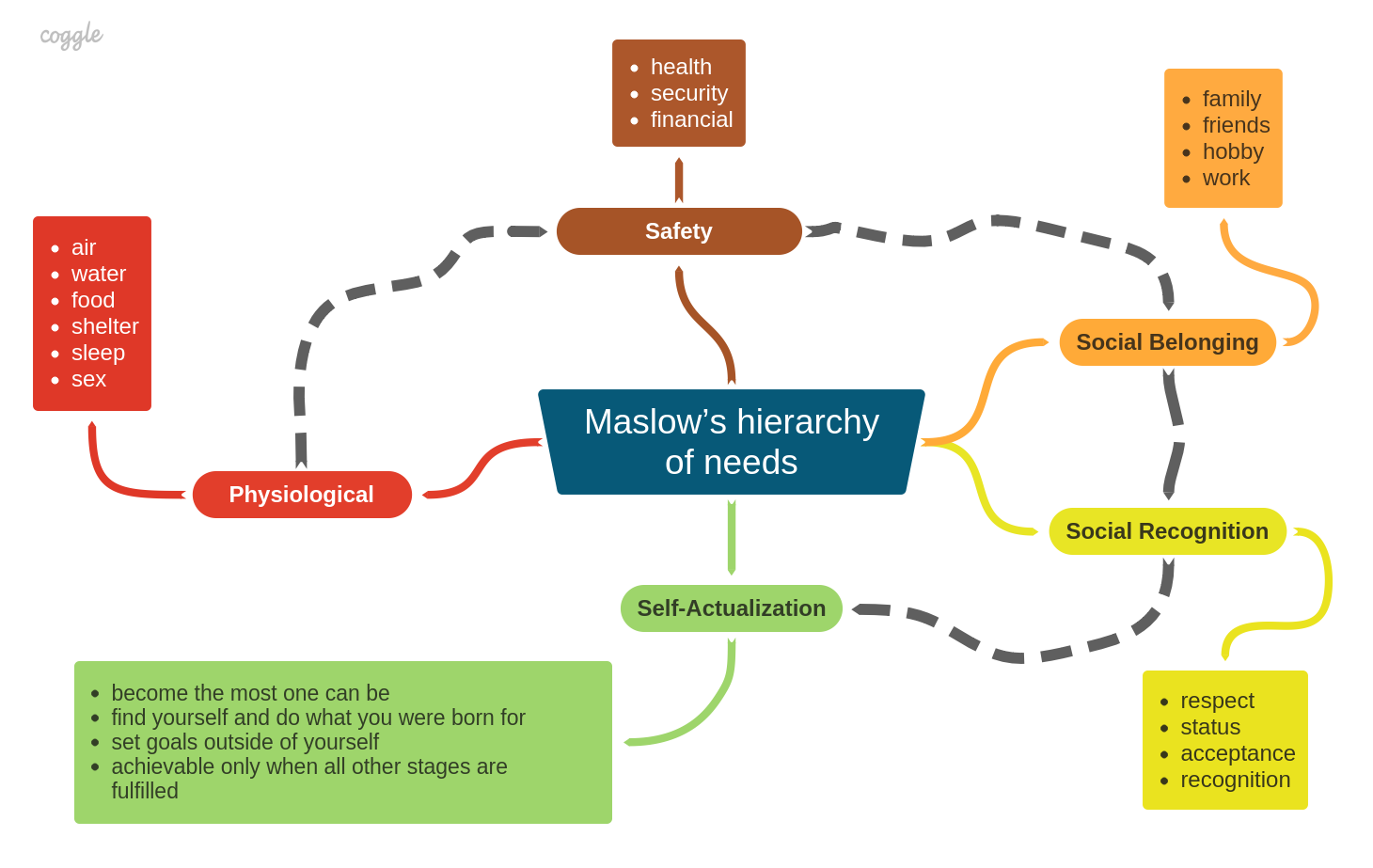

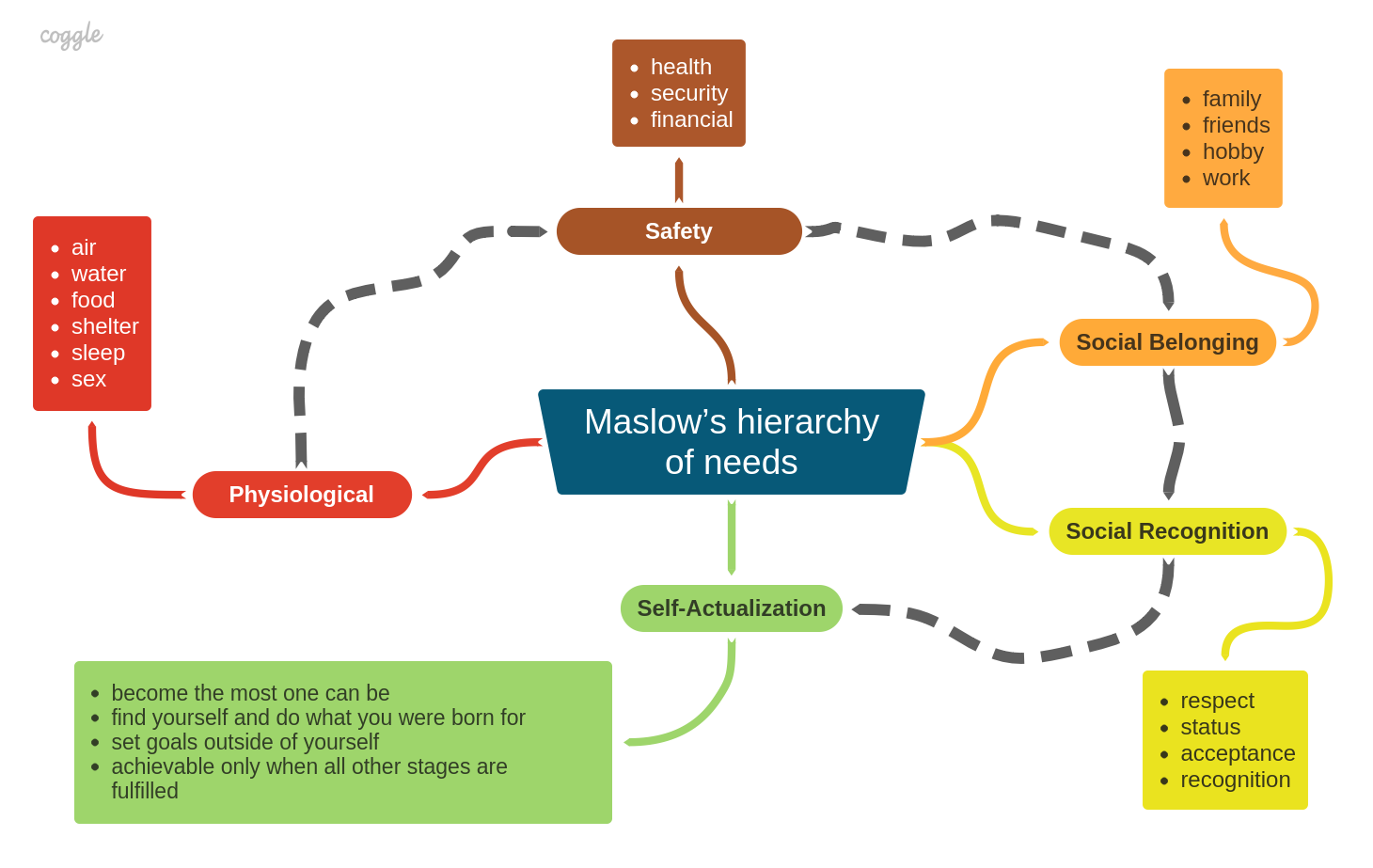

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

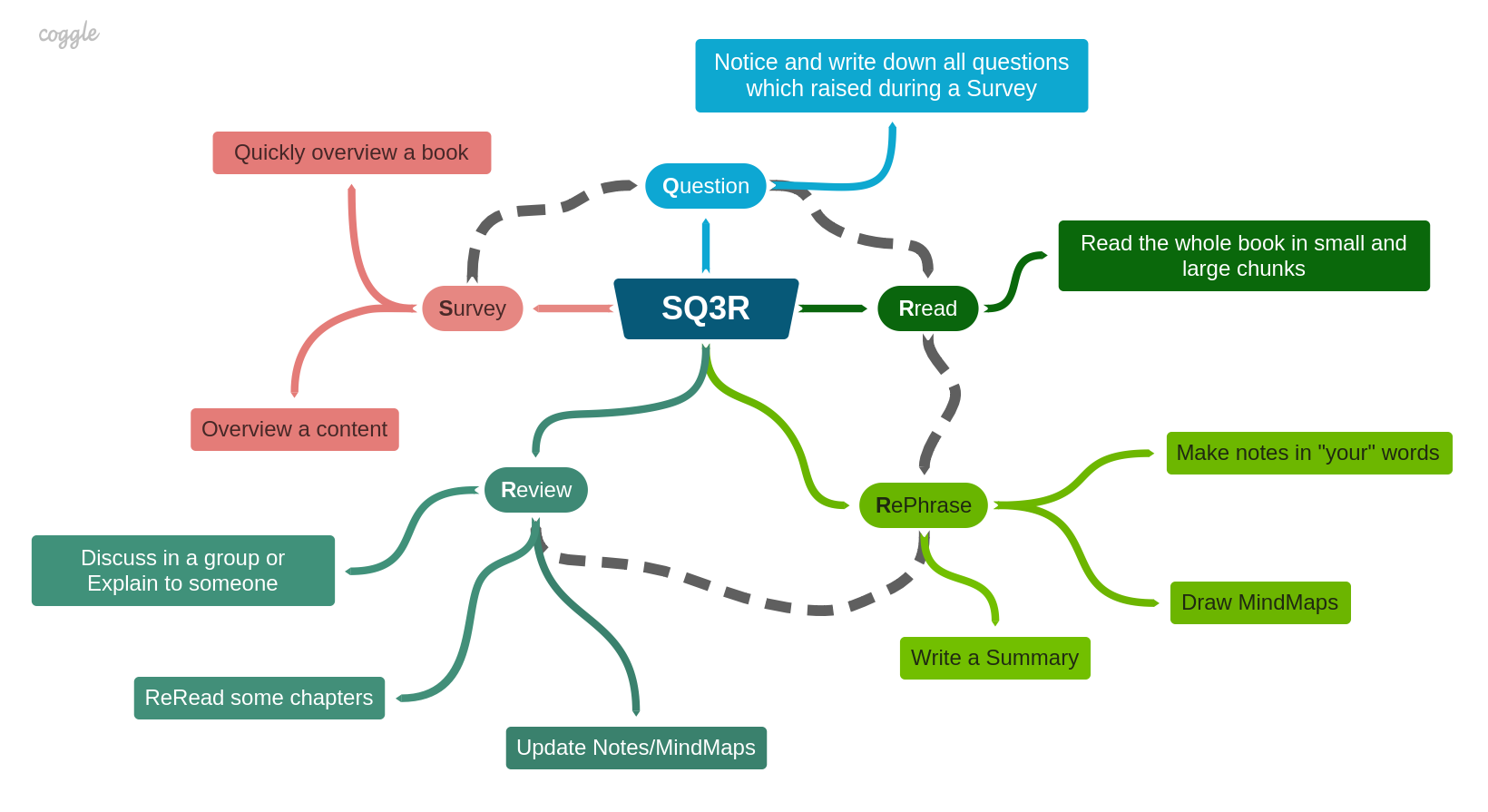

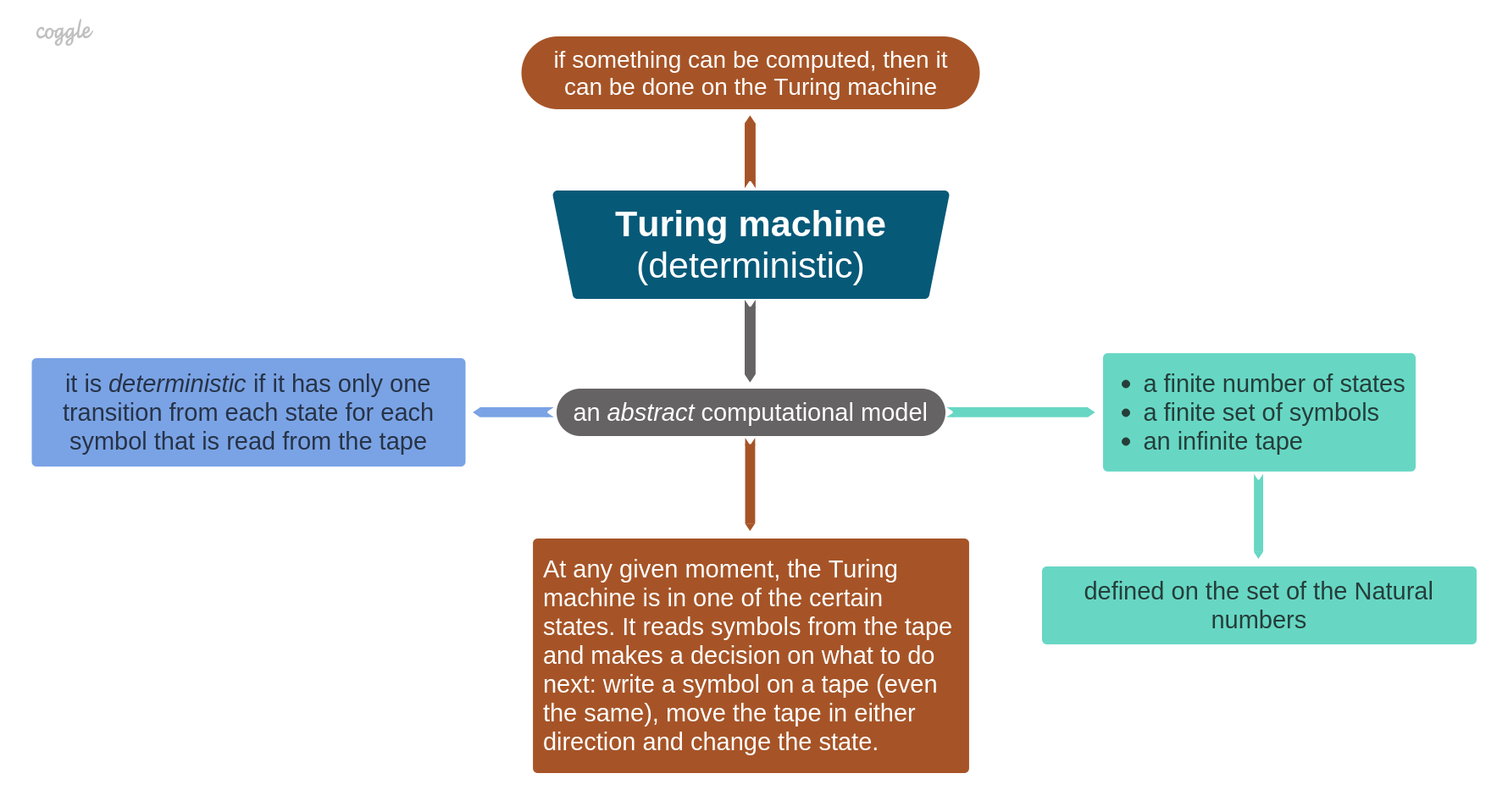

| 2019-06-10 | SQ3R |

| 2024-01-16 | Using udp-link to enhance TCP connections stability |

| 2020-05-13 | The zoo of binary-to-text encoding schemes |

| 2020-04-18 | Convert binary data to a text with the lowest overhead |

| 2020-01-18 | My notes for the "Pragmatic Thinking and Learning" book |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-08-21 | Organizing Unstructured Data |

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

| 2019-06-10 | SQ3R |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

| 2019-06-10 | SQ3R |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

| 2019-06-10 | SQ3R |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-08-21 | Organizing Unstructured Data |

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-08-21 | Organizing Unstructured Data |

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-08-21 | Organizing Unstructured Data |

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

| 2019-06-10 | SQ3R |

| 2019-12-20 | Computer Science vs Information Technology |

| 2019-12-19 | Who is an engineer |

| 2019-12-15 | Algorithm is... |

| 2019-12-15 | Turing: thesis, machine, completeness |

| 2019-08-21 | Organizing Unstructured Data |

| 2019-08-16 | Number Classification |

| 2019-08-08 | A converter of a character's case and Ascii codes |

| 2019-07-22 | Constraint Satisfaction Problem (CSP) |

| 2019-07-18 | How to remove a webpage from the Google index |

| 2019-07-02 | How to redirect a static website on the Github Pages |

| 2019-06-26 | Managing your plans in the S.M.A.R.T. way |

| 2019-06-21 | Dreyfus model of skill acquisition |

| 2019-06-15 | Maslow's hierarchy of needs |

| 2019-06-12 | Structured Programming Paradigm |

| 2019-06-10 | SQ3R |

| 2020-05-13 | The zoo of binary-to-text encoding schemes |

| 2020-05-13 | The zoo of binary-to-text encoding schemes |

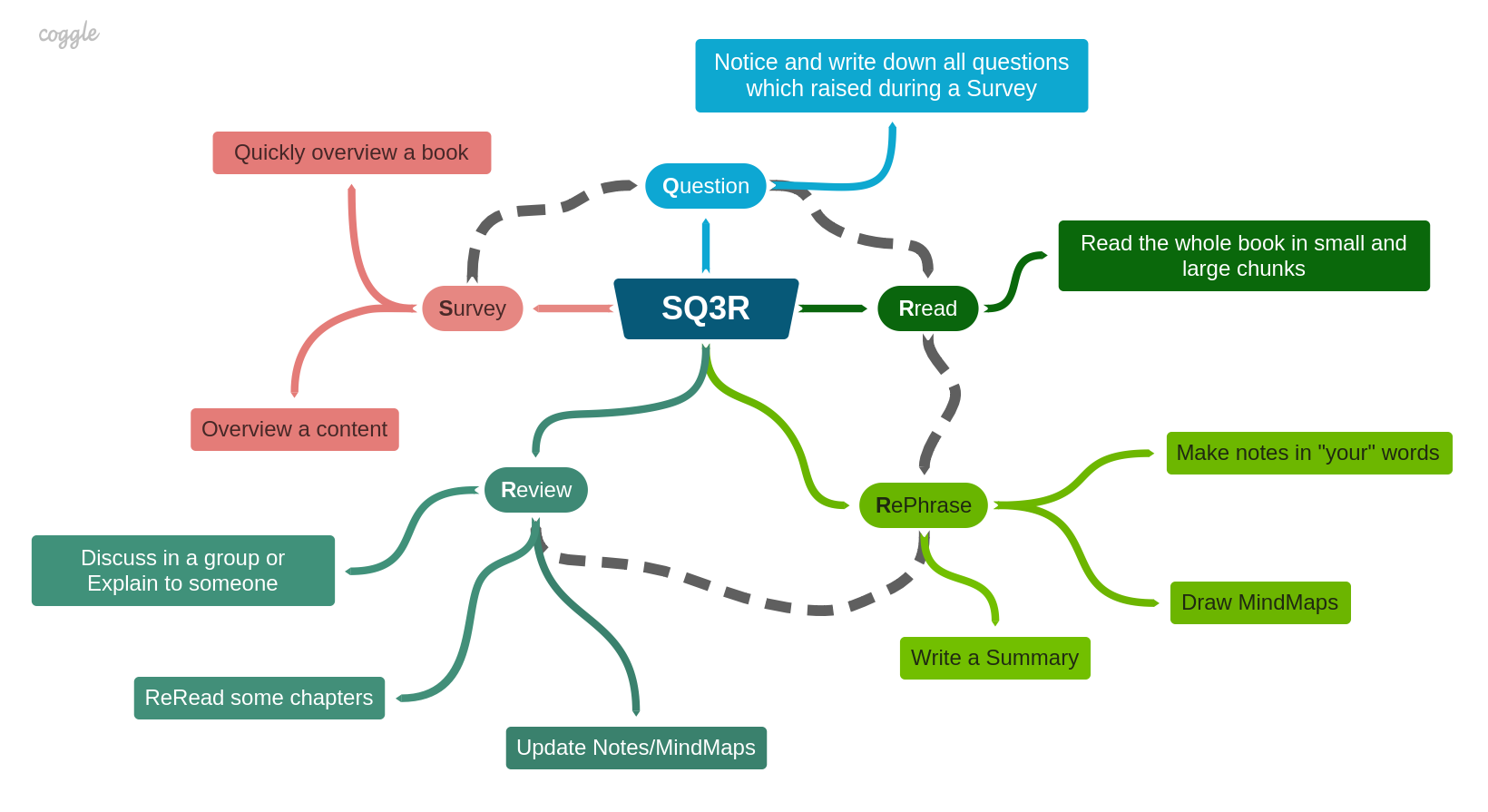

Despite the obvious expectation to find some sort of a definition of the term "Algorithm" here, I have to disappoint you, as there isn't any general or well-accepted definition. But, it's not a unique situation! Take mathematics, for example. Although there are plenty of different "definitions" that can be found in the literature, they all are just oversimplified attempts to explain what an algorithm really means.

In general, an algorithm is a way of describing the logic. And that's why it's so hard to cover all possible forms of it in terms of common rules or definitions. Most prominent mathematicians began seriously thinking about computability and what can be computed at the beginning of the 20th century. But it was so hard to generalize all the cases that eventually they had to limit the consideration by functions defined only on the set of Natural numbers.

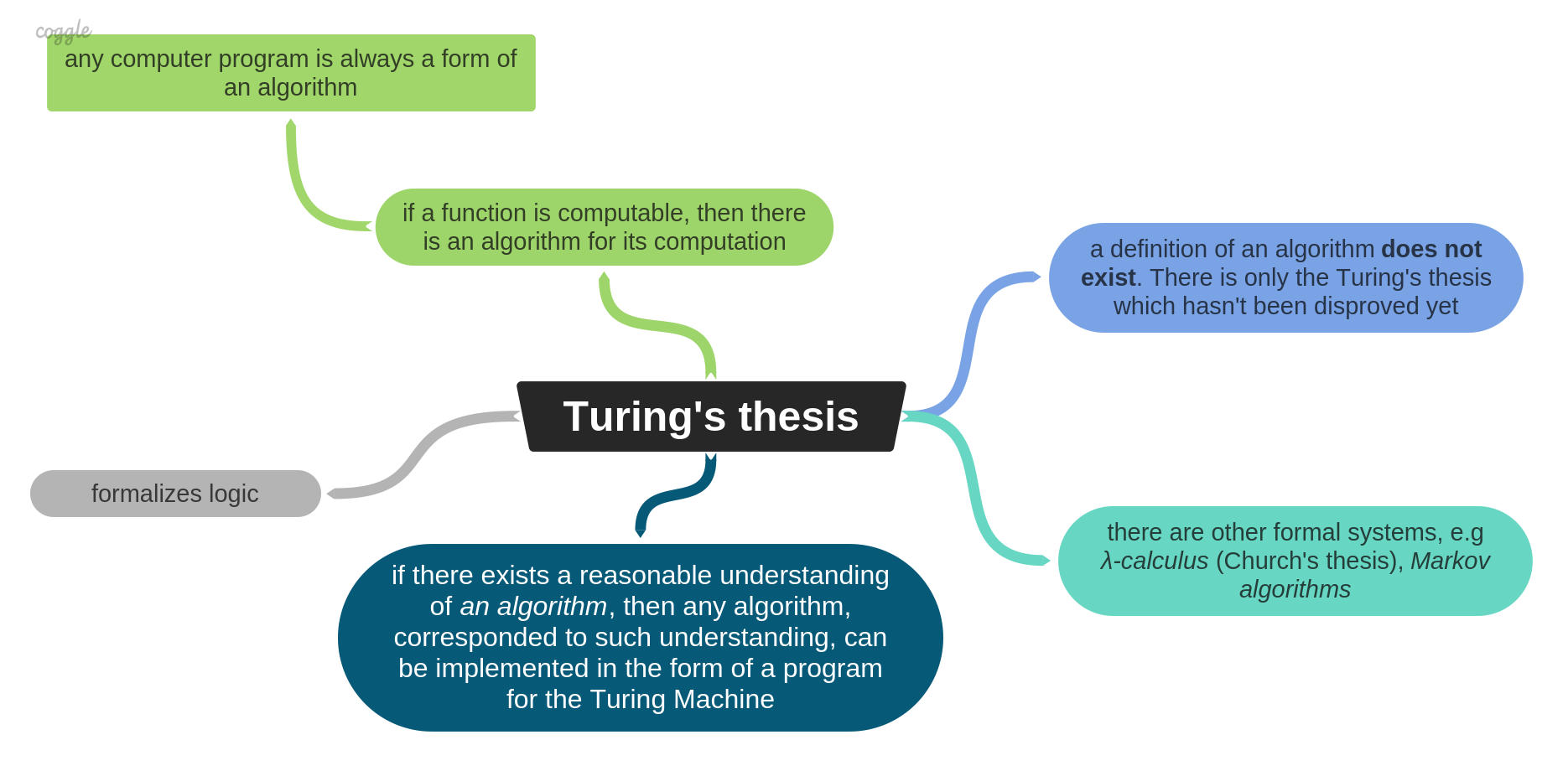

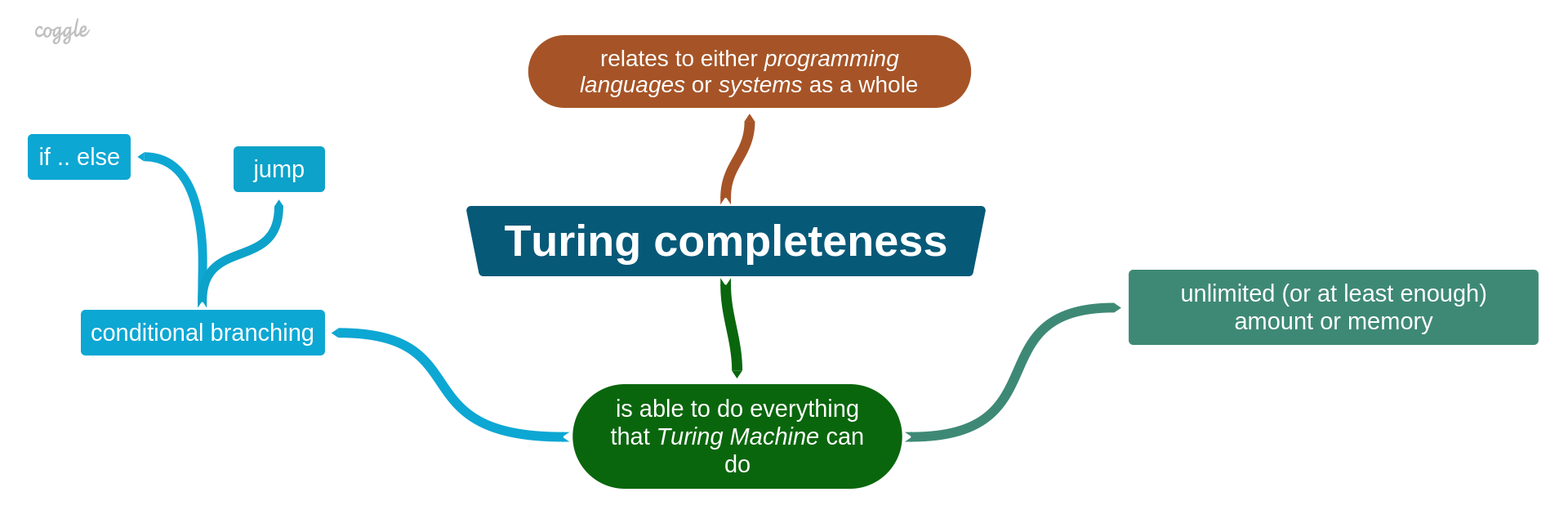

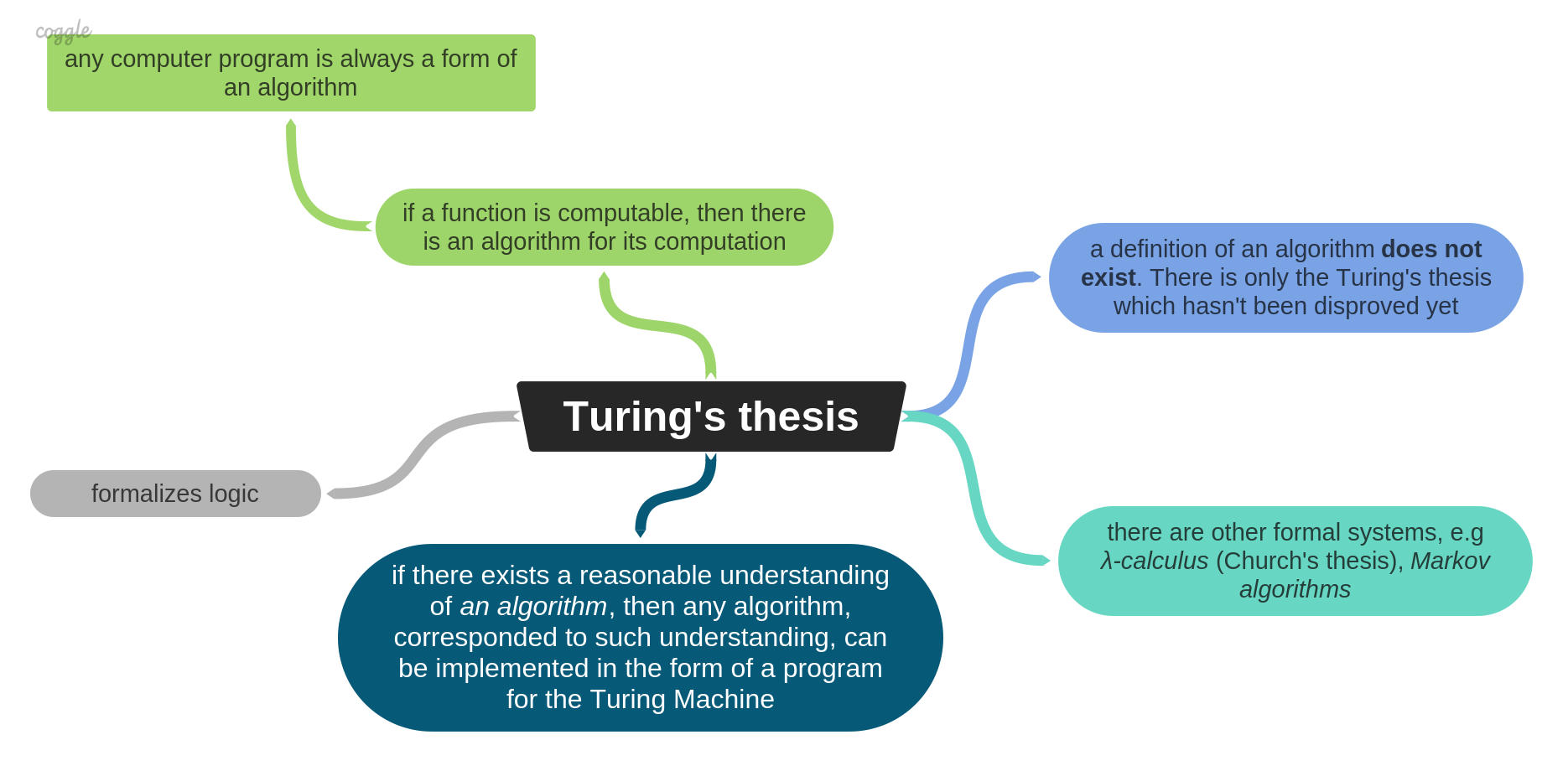

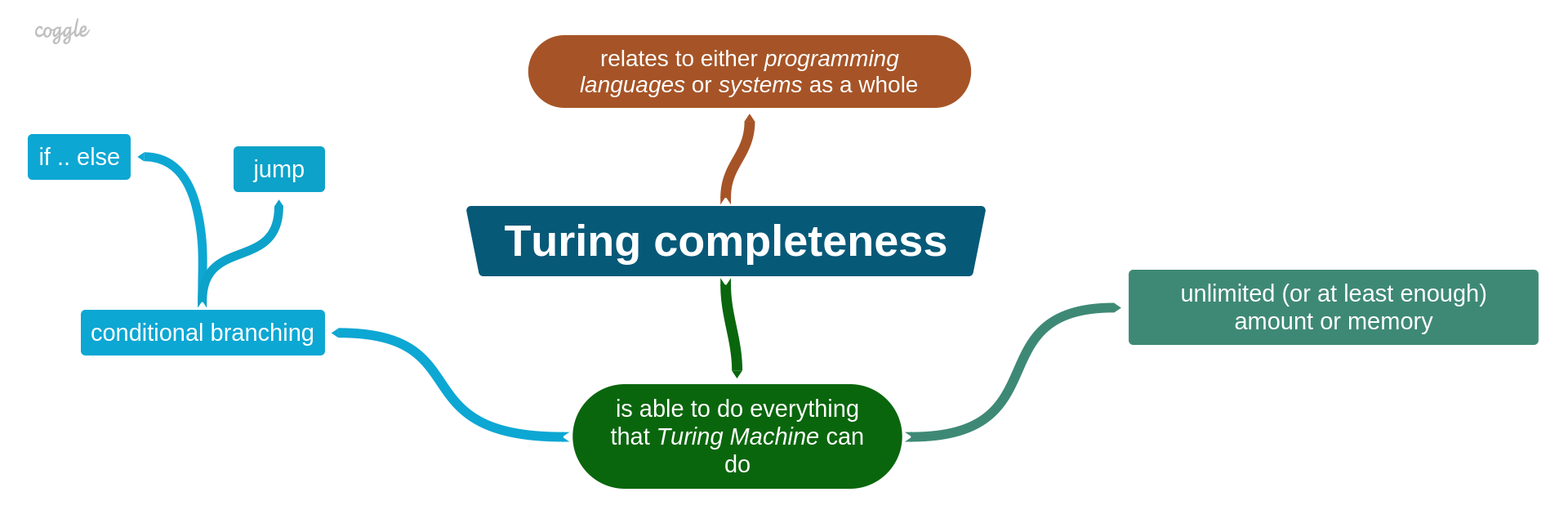

The most famous works were done by Alan Turing (related to algorithms) and Alonzo Church (related to computable functions). Alan Turing came up with the thesis which basically says, that if a function is computable then it has an algorithm, and if so, then it can be implemented on the Turing machine (TM). In other words, Turing's thesis makes it clear what can be computed and what is needed to get computed.

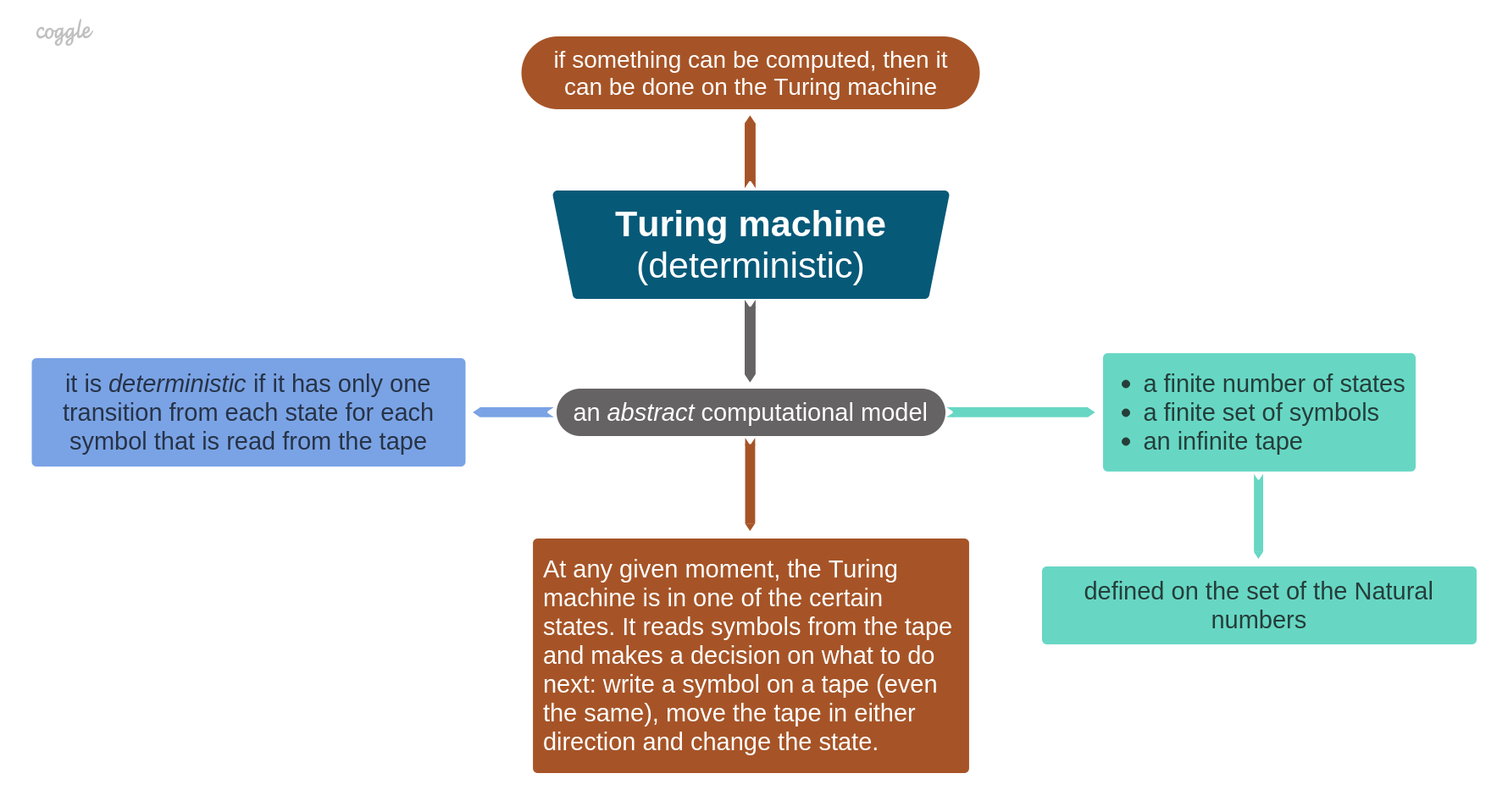

Turing machine is an abstract system that has a finite set of states and symbols, a few certain operations, and an endless tape (consisted of cells). The behavior of a TM is controlled by a program that defines a state transition and a next tape movement depending on a symbol that was read. Although, there is no a real-world analog of the TM as it is unlikely possible to have infinite memory. So, to get it more realistic, for a real analog of TM, it means two things:

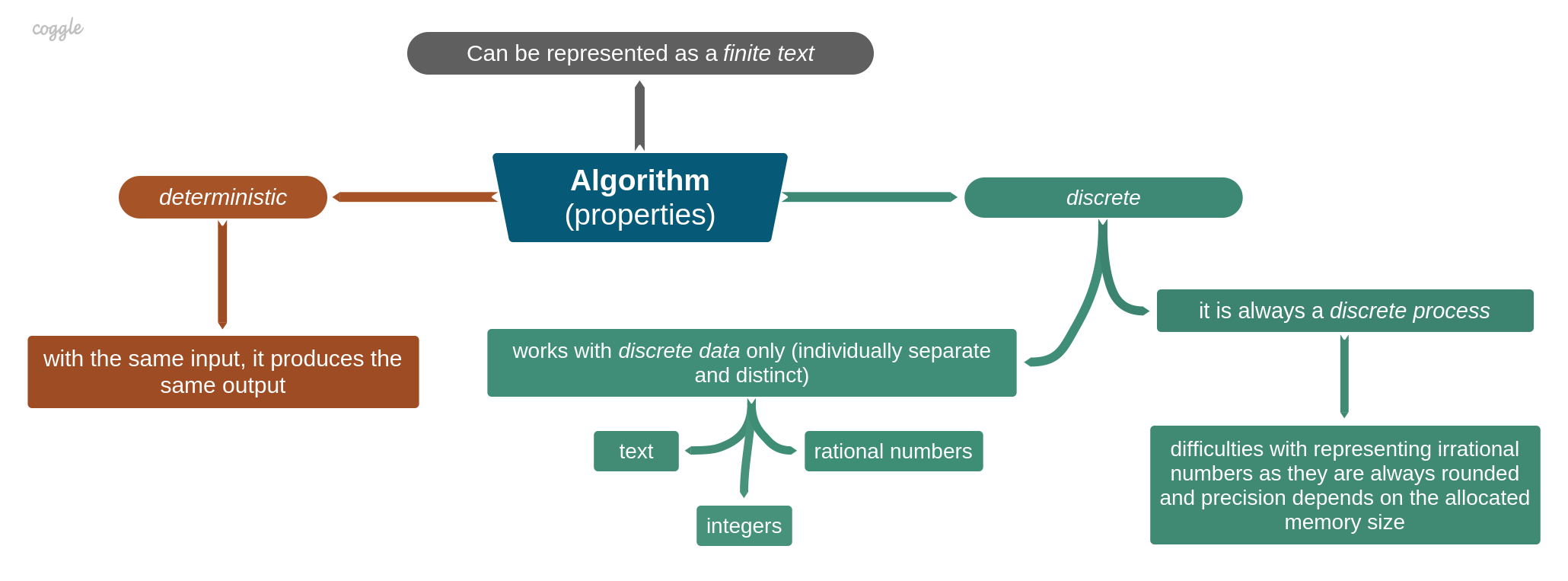

All algorithms share the same properties:

Despite the obvious expectation to find some sort of a definition of the term "Algorithm" here, I have to disappoint you, as there isn't any general or well-accepted definition. But, it's not a unique situation! Take mathematics, for example. Although there are plenty of different "definitions" that can be found in the literature, they all are just oversimplified attempts to explain what an algorithm really means.

In general, an algorithm is a way of describing the logic. And that's why it's so hard to cover all possible forms of it in terms of common rules or definitions. Most prominent mathematicians began seriously thinking about computability and what can be computed at the beginning of the 20th century. But it was so hard to generalize all the cases that eventually they had to limit the consideration by functions defined only on the set of Natural numbers.

The most famous works were done by Alan Turing (related to algorithms) and Alonzo Church (related to computable functions). Alan Turing came up with the thesis which basically says, that if a function is computable then it has an algorithm, and if so, then it can be implemented on the Turing machine (TM). In other words, Turing's thesis makes it clear what can be computed and what is needed to get computed.

Turing machine is an abstract system that has a finite set of states and symbols, a few certain operations, and an endless tape (consisted of cells). The behavior of a TM is controlled by a program that defines a state transition and a next tape movement depending on a symbol that was read. Although, there is no a real-world analog of the TM as it is unlikely possible to have infinite memory. So, to get it more realistic, for a real analog of TM, it means two things:

All algorithms share the same properties:

This article is about binary/text converters, the most popular implementations, and a non-standard approach that uses place-based single number encoding by representing a file as a big number and then converting it to another big number with any non-256 (1 byte/8 bit) radix. To make it practical, it makes sense to limit a radix (base) to 94 for matching numbers to all possible printable symbols within the 7-bit ASCII table. It is probably a theoretical prototype and has a purely academic flavor, as the time and space complexities make it applicable only to small files (up to a few tens of kilobytes), although it allows one to choose any base with no dependencies on powers of two, e.g. 7 or 77.

The main purpose of such converters is to convert a binary file represented by 256 different symbols (radix-256, 1 byte, 2^8) into a form suitable for transmission over a channel with a limited range of supported symbols. A good example is any text-based network protocol, such as HTTP (before ver. 2) or SMTP, where all transmitted binary data must be reversibly converted to a pure text form without control symbols. As you know, ASCII codes from 0 to 31 are considered control characters, and therefore they will definitely be lost during transmission over any logical channel that doesn't allow endpoints to transmit full 8-bit bytes (binary) with codes from 0 to 255. This limits the number of allowed symbols to less than 224 (256-32), but in fact it's limited only by the first 128 standardized symbols in the ASCII table, and even more.

The standard solution today is the Base64 algorithm defined in RFC 4648 (easy reading). It also describes Base32 and Base16 as possible variants. The key point here is that they all share the same property of being powers of two. The wider the range of supported symbols (codes), the more space-efficient the result of the conversion. It will be bigger anyway, the question is how much bigger. For example, Base64 encoding gives about 33% larger output, because 3 input bytes (8 valued bits) are translated into 4 output bytes (6 valued bits, 2^6=64). So the ratio is always 4/3, i.e. the output is larger by 1/3 or 33.(3)%. Practically speaking, Base32 is very inefficient because it means translating 5 input (8 valued bits) bytes to 8 output (5 valued bits, 2^5=32) bytes and the ratio is 8/5, i.e. the output is larger by 3/5 or 60%. In this context, it is hard to consider any kind of efficiency of Base16, since its output size is larger by 100% (each byte of 8 valued bits is represented by two bytes of 4 valued bits, also known as nibbles, 2^4=16). It is not even a translation, just a representation of an 8-bit byte in hexadecimal.

If you're curious how these input/output byte ratios were calculated for the Base64/32/16 encodings, the answer is LCM (Least Common Multiple). Let's calculate it ourselves, and for that we need another function, the GCD (Greatest Common Divisor)

The point is this. What if a channel is only able to transmit a few different symbols, like 9 or 17. That is, we have a file represented by a 256-symbol alphabet (a normal 8-bit byte), we are not limited by computing power or memory constraints on either side, but we are able to send only 7 different symbols instead of 256? Base64/32/16 are no solution here. Then Base7 is the only possible output format.

Another example, what if the amount of data transmitted is a concern for a channel? Base64, as it has been shown, increases the data by 33% no matter what is transmitted, always. Base94, for example, only increases the output by 22%.

It may seem that Base94 is not the limit. If the first 32 ASCII codes are control characters, and there are 256 codes in total, what stops you from using an alphabet of 256 - 32 = 224 symbols? There is a reason. Not all of the 224 ASCII codes are printable characters or have a standard representation. In general, only 7 bits (0..127) are standardized, and the rest (128..255) is used for the variety of locales, e.g. Koi8-R, Windows-1251, etc. This means that only 128 - 32 = 96 are available in the standardized range. In addition, the ASCII code 32 is the space character, and 127 doesn't have a visible character either. So 96 - 2 gives us the 94 printable characters that have the same association with their codes on most machines.

This solution is quite simple, but this simplicity also imposes a significant computational constraint. The entire input file can be treated as a big number with a base of 256. It could easily be a really big number, requiring thousands of bits. Then all we have to do is convert that big number to another base. That's it. And Python3 makes it even easier! Normally, conversions between different bases are done via an intermediate base10. The good news is that Python3 has built-in support for big number calculations (it is built into int). The int class has a method that reads any number of bytes and automatically represents them as a large Base10 number with a desired endian. So essentially all of this complexity can be implemented in just two lines of code, which is pretty amazing!

with open('inpit_file', 'rb') as f:

+ Convert binary data to a text with the lowest overhead :: Vorakl's notes Convert binary data to a text with the lowest overhead

A binary-to-text encoding with any radix from 2 to 94

This article is about binary/text converters, the most popular implementations, and a non-standard approach that uses place-based single number encoding by representing a file as a big number and then converting it to another big number with any non-256 (1 byte/8 bit) radix. To make it practical, it makes sense to limit a radix (base) to 94 for matching numbers to all possible printable symbols within the 7-bit ASCII table. It is probably a theoretical prototype and has a purely academic flavor, as the time and space complexities make it applicable only to small files (up to a few tens of kilobytes), although it allows one to choose any base with no dependencies on powers of two, e.g. 7 or 77.

Background

The main purpose of such converters is to convert a binary file represented by 256 different symbols (radix-256, 1 byte, 2^8) into a form suitable for transmission over a channel with a limited range of supported symbols. A good example is any text-based network protocol, such as HTTP (before ver. 2) or SMTP, where all transmitted binary data must be reversibly converted to a pure text form without control symbols. As you know, ASCII codes from 0 to 31 are considered control characters, and therefore they will definitely be lost during transmission over any logical channel that doesn't allow endpoints to transmit full 8-bit bytes (binary) with codes from 0 to 255. This limits the number of allowed symbols to less than 224 (256-32), but in fact it's limited only by the first 128 standardized symbols in the ASCII table, and even more.

The standard solution today is the Base64 algorithm defined in RFC 4648 (easy reading). It also describes Base32 and Base16 as possible variants. The key point here is that they all share the same property of being powers of two. The wider the range of supported symbols (codes), the more space-efficient the result of the conversion. It will be bigger anyway, the question is how much bigger. For example, Base64 encoding gives about 33% larger output, because 3 input bytes (8 valued bits) are translated into 4 output bytes (6 valued bits, 2^6=64). So the ratio is always 4/3, i.e. the output is larger by 1/3 or 33.(3)%. Practically speaking, Base32 is very inefficient because it means translating 5 input (8 valued bits) bytes to 8 output (5 valued bits, 2^5=32) bytes and the ratio is 8/5, i.e. the output is larger by 3/5 or 60%. In this context, it is hard to consider any kind of efficiency of Base16, since its output size is larger by 100% (each byte of 8 valued bits is represented by two bytes of 4 valued bits, also known as nibbles, 2^4=16). It is not even a translation, just a representation of an 8-bit byte in hexadecimal.

If you're curious how these input/output byte ratios were calculated for the Base64/32/16 encodings, the answer is LCM (Least Common Multiple). Let's calculate it ourselves, and for that we need another function, the GCD (Greatest Common Divisor)

- Base64 (Input: 8 bits, Output: 6 bits):

- LCM(8, 6) = 8*6/GCD(8,6) = 24 bit

- Input: 24 / 8 = 3 bytes

- Output: 24 / 6 = 4 bytes

- Ratio (Output/Input): 4/3

- Base32 (Input: 8 bits, Output: 5 bits):

- LCM(8, 5) = 8*5/GCD(8,5) = 40 bit

- Input: 40 / 8 = 5 bytes

- Output: 40 / 5 = 8 bytes

- Ratio (Output/Input): 8/5

- Base16 (Input: 8 bits, Output: 4 bits):

- LCM(8, 4) = 8*4/GCD(8,4) = 8 bit

- Input: 8 / 8 = 1 byte

- Output: 8 / 4 = 2 bytes

- Ratio (Output/Input): 2/1

What's the point?

The point is this. What if a channel is only able to transmit a few different symbols, like 9 or 17. That is, we have a file represented by a 256-symbol alphabet (a normal 8-bit byte), we are not limited by computing power or memory constraints on either side, but we are able to send only 7 different symbols instead of 256? Base64/32/16 are no solution here. Then Base7 is the only possible output format.

Another example, what if the amount of data transmitted is a concern for a channel? Base64, as it has been shown, increases the data by 33% no matter what is transmitted, always. Base94, for example, only increases the output by 22%.

It may seem that Base94 is not the limit. If the first 32 ASCII codes are control characters, and there are 256 codes in total, what stops you from using an alphabet of 256 - 32 = 224 symbols? There is a reason. Not all of the 224 ASCII codes are printable characters or have a standard representation. In general, only 7 bits (0..127) are standardized, and the rest (128..255) is used for the variety of locales, e.g. Koi8-R, Windows-1251, etc. This means that only 128 - 32 = 96 are available in the standardized range. In addition, the ASCII code 32 is the space character, and 127 doesn't have a visible character either. So 96 - 2 gives us the 94 printable characters that have the same association with their codes on most machines.

Solution

This solution is quite simple, but this simplicity also imposes a significant computational constraint. The entire input file can be treated as a big number with a base of 256. It could easily be a really big number, requiring thousands of bits. Then all we have to do is convert that big number to another base. That's it. And Python3 makes it even easier! Normally, conversions between different bases are done via an intermediate base10. The good news is that Python3 has built-in support for big number calculations (it is built into int). The int class has a method that reads any number of bytes and automatically represents them as a large Base10 number with a desired endian. So essentially all of this complexity can be implemented in just two lines of code, which is pretty amazing!

with open('inpit_file', 'rb') as f:

in_data = int.from_bytes(f.read(), 'big')

where in_data is our large Base10 number. This is only two lines, but this is where most of the math happens and most of the time is spent. So now convert it to any other base, as you'd normally do with normal small decimal numbers.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/canonical/index.html b/articles/canonical/index.html

index 20c0ca2d..5be3fc53 100644

--- a/articles/canonical/index.html

+++ b/articles/canonical/index.html

@@ -1,4 +1,4 @@

- How to redirect a static website on the Github Pages :: Vorakl's notes How to redirect a static website on the Github Pages

The use case for a Temporary Redirect and the Canonical Link Element

I run a few static websites for my private projects on the Github Pages. I'm absolutely happy with the service, as it supports custom domains, automatically redirects to HTTPS, and transparently installs SSL certificates (with automatic issuing via Let's Encrypt). It is very fast (thanks to Fastly's content delivery network) and is extremely reliable (I haven't had any issues for years). Taking into account the fact that I get all of this for free, it perfectly matches my needs at the moment. It has, however, one important limitation: because it serves static websites only, this means no query parameters, no dynamic content generated on the server side, no options for injecting any server-side configuration (e.g., .htaccess), and the only things I can push to the website's root directory are static assets (e.g., HTML, CSS, JS, JPEG, etc.). In general, this is not a big issue. There are a lot of the open source static site generators available, such as Jekyll, which is available by default the dashboard, and Pelican, which I prefer in most cases. Nevertheless, when you need to implement something that is traditionally solved on the server side, a whole new level of challenge begins.

For example, I recently had to change a custom domain name for one of my websites. Keeping the old one was ridiculously expensive, and I wasn't willing to continue wasting money. I found a cheaper alternative and immediately faced a bigger problem: all the search engines have the old name in their indexes. Updating indexes takes time, and until that happens, I would have to redirect all requests to the new location. Ideally, I would redirect each indexed resource to the equivalent on the new site, but at minimum, I needed to redirect requests to the new start page. I had access to the old domain name for enough time, and therefore, I could run the site separately on both domain names at the same time.

There is one proper solution to this situation that should be used whenever possible: Permanent redirect, or the 301 Moved Permanently status code, is the way to redirect pages implemented in the HTTP protocol. The only issue is that it's supposed to happen on the server side within a server response's HTTP header. But the only solution I could implement resides on a client side; that is, either HTML code or JavaScript. I didn't consider the JS variant because I didn't want to rely on the script's support in web browsers. Once I defined the task, I recalled a solution: the HTML <meta> tag <meta http-equiv> with the 'refresh' HTTP header. Although it can be used to ask browsers to reload a page or jump to another URL after a specified number of seconds, after some research, I learned it is more complicated than I thought with some interesting facts and details.

The solution

TL;DR (for anyone who isn't interested in all the details): In brief, this solution configures two repositories to serve as static websites with custom domain names. On the site with the old domain, I reconstructed the website's entire directory structure and put the following index.html in each of them (including the root):

<!DOCTYPE HTML>

+ How to redirect a static website on the Github Pages :: Vorakl's notes How to redirect a static website on the Github Pages

The use case for a Temporary Redirect and the Canonical Link Element

I run a few static websites for my private projects on the Github Pages. I'm absolutely happy with the service, as it supports custom domains, automatically redirects to HTTPS, and transparently installs SSL certificates (with automatic issuing via Let's Encrypt). It is very fast (thanks to Fastly's content delivery network) and is extremely reliable (I haven't had any issues for years). Taking into account the fact that I get all of this for free, it perfectly matches my needs at the moment. It has, however, one important limitation: because it serves static websites only, this means no query parameters, no dynamic content generated on the server side, no options for injecting any server-side configuration (e.g., .htaccess), and the only things I can push to the website's root directory are static assets (e.g., HTML, CSS, JS, JPEG, etc.). In general, this is not a big issue. There are a lot of the open source static site generators available, such as Jekyll, which is available by default the dashboard, and Pelican, which I prefer in most cases. Nevertheless, when you need to implement something that is traditionally solved on the server side, a whole new level of challenge begins.

For example, I recently had to change a custom domain name for one of my websites. Keeping the old one was ridiculously expensive, and I wasn't willing to continue wasting money. I found a cheaper alternative and immediately faced a bigger problem: all the search engines have the old name in their indexes. Updating indexes takes time, and until that happens, I would have to redirect all requests to the new location. Ideally, I would redirect each indexed resource to the equivalent on the new site, but at minimum, I needed to redirect requests to the new start page. I had access to the old domain name for enough time, and therefore, I could run the site separately on both domain names at the same time.

There is one proper solution to this situation that should be used whenever possible: Permanent redirect, or the 301 Moved Permanently status code, is the way to redirect pages implemented in the HTTP protocol. The only issue is that it's supposed to happen on the server side within a server response's HTTP header. But the only solution I could implement resides on a client side; that is, either HTML code or JavaScript. I didn't consider the JS variant because I didn't want to rely on the script's support in web browsers. Once I defined the task, I recalled a solution: the HTML <meta> tag <meta http-equiv> with the 'refresh' HTTP header. Although it can be used to ask browsers to reload a page or jump to another URL after a specified number of seconds, after some research, I learned it is more complicated than I thought with some interesting facts and details.

The solution

TL;DR (for anyone who isn't interested in all the details): In brief, this solution configures two repositories to serve as static websites with custom domain names. On the site with the old domain, I reconstructed the website's entire directory structure and put the following index.html in each of them (including the root):

<!DOCTYPE HTML>

<html lang="en">

<head>

<meta charset="utf-8">

diff --git a/articles/char-converter/index.html b/articles/char-converter/index.html

index 650b7c7f..0ec22c0b 100644

--- a/articles/char-converter/index.html

+++ b/articles/char-converter/index.html

@@ -1,4 +1,4 @@

- A converter of a character's case and Ascii codes :: Vorakl's notes A converter of a character's case and Ascii codes

An example of using the Constraint Programming for calculating multiple but linked results

The constraint programming paradigm is effectively applied for solving a group of problems which can be translated to variables and constraints or represented as a mathematic equation, and so related to the CSP. Using declarative programming style it describes a general model with certain properties. In contrast to the imperative style, it doesn't tell how to achieve something, but rather what to achieve. Instead of defining a set of instructions with only one obvious way for computing values, the constraint programming declares relationships between variables within constraints. A final model makes it possible to compute the values of variables regardless of direction or changes. Thus, any change of the value of one variable affects the whole system (all other variables) and to satisfy defined constraints it leads to recomputing the other values.

Let's take, for example, Pythagoras' theorem: a² + b² = c². The constraint is represented by this equation, which has three variables (a, b, and c), and each has a domain (non-negative). Using the imperative programming style, to compute any of these variables having other two, we would need to create three different functions (because each variable is computed by a different equation):

- c = √(a² + b²)

- a = √(c² - b²)

- b = √(c² - a²)

These functions satisfy the main constraint and to check domains, each function should validate the input. Moreover, at least one more function would be needed for choosing an appropriate function accordingly to provided variables. This is one of possible solutions:

def pythagoras(*, a=None, b=None, c=None):

+ A converter of a character's case and Ascii codes :: Vorakl's notes A converter of a character's case and Ascii codes

An example of using the Constraint Programming for calculating multiple but linked results

The constraint programming paradigm is effectively applied for solving a group of problems which can be translated to variables and constraints or represented as a mathematic equation, and so related to the CSP. Using declarative programming style it describes a general model with certain properties. In contrast to the imperative style, it doesn't tell how to achieve something, but rather what to achieve. Instead of defining a set of instructions with only one obvious way for computing values, the constraint programming declares relationships between variables within constraints. A final model makes it possible to compute the values of variables regardless of direction or changes. Thus, any change of the value of one variable affects the whole system (all other variables) and to satisfy defined constraints it leads to recomputing the other values.

Let's take, for example, Pythagoras' theorem: a² + b² = c². The constraint is represented by this equation, which has three variables (a, b, and c), and each has a domain (non-negative). Using the imperative programming style, to compute any of these variables having other two, we would need to create three different functions (because each variable is computed by a different equation):

- c = √(a² + b²)

- a = √(c² - b²)

- b = √(c² - a²)

These functions satisfy the main constraint and to check domains, each function should validate the input. Moreover, at least one more function would be needed for choosing an appropriate function accordingly to provided variables. This is one of possible solutions:

def pythagoras(*, a=None, b=None, c=None):

''' Computes a side of a right triangle '''

# Validate

diff --git a/articles/cs-vs-it/index.html b/articles/cs-vs-it/index.html

index 4cb88a70..02677418 100644

--- a/articles/cs-vs-it/index.html

+++ b/articles/cs-vs-it/index.html

@@ -1 +1 @@

- Computer Science vs Information Technology :: Vorakl's notes Computer Science vs Information Technology

Differences between two computer-related studies

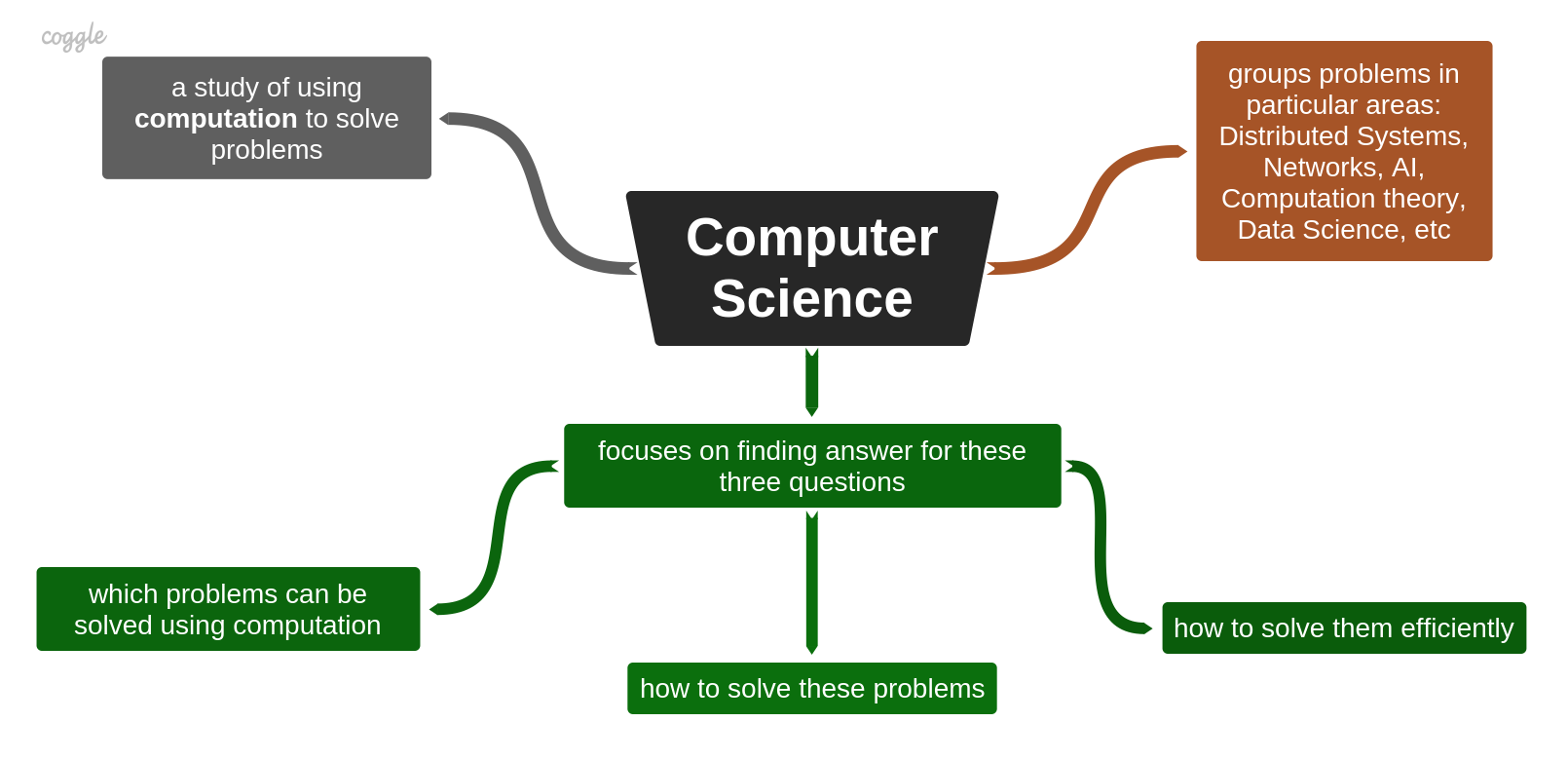

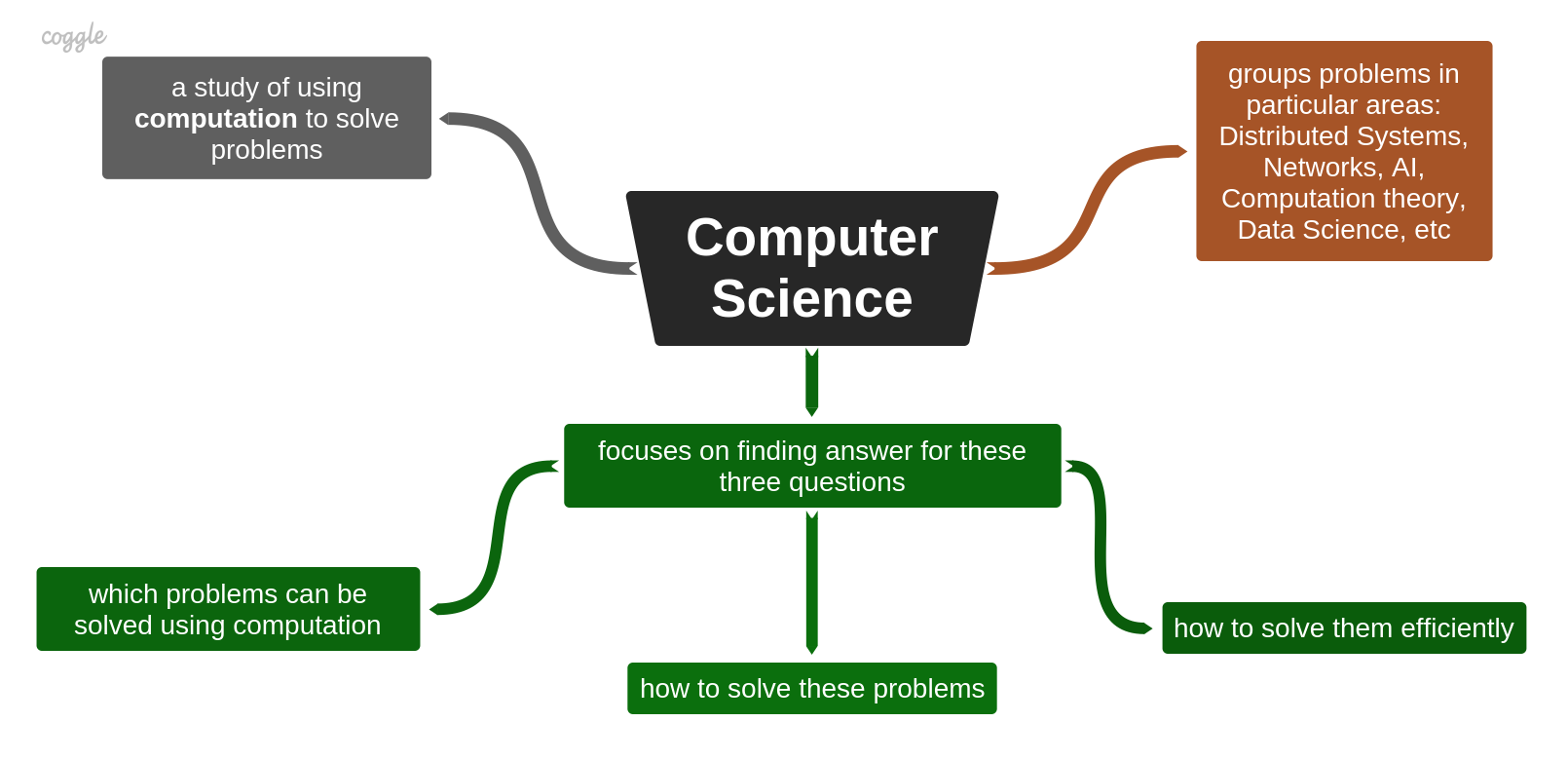

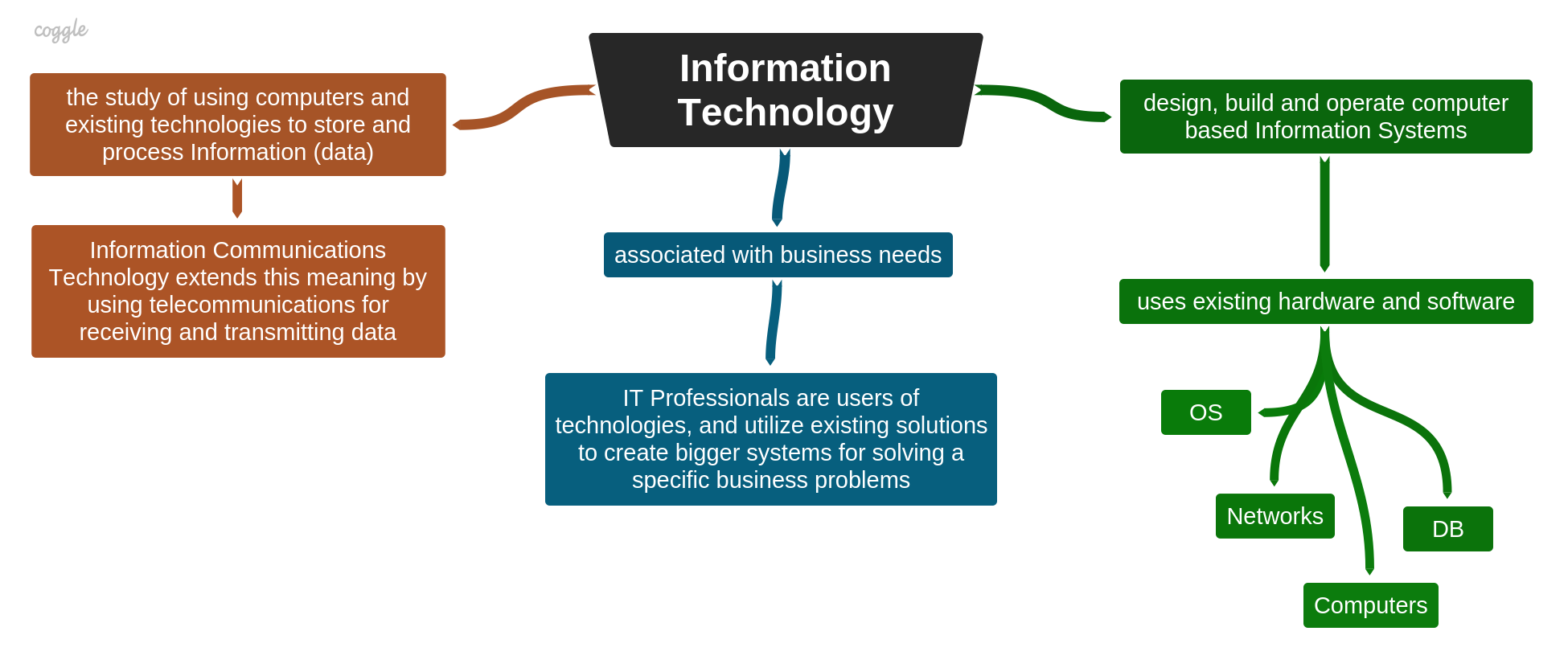

If you ever thought about getting a computer-related (graduated) education, you probably came across a variety of similar disciplines, more or less connected to each other, but grouped under two major fields of study: Computer Science (CS) and Information Technology (IT). The latest one sometimes comes in a broader meaning - Information Communications Technology (ICT), and Computer Science, in turn, is highly linked to Electrical Engineering. But what exactly makes them all different?

Briefly, Computer Science creates computer software technologies, Electrical Engineering creates hardware to run this software in an efficient way, while Information Technology uses them later to create Information Systems for storing, processing and transmitting data.

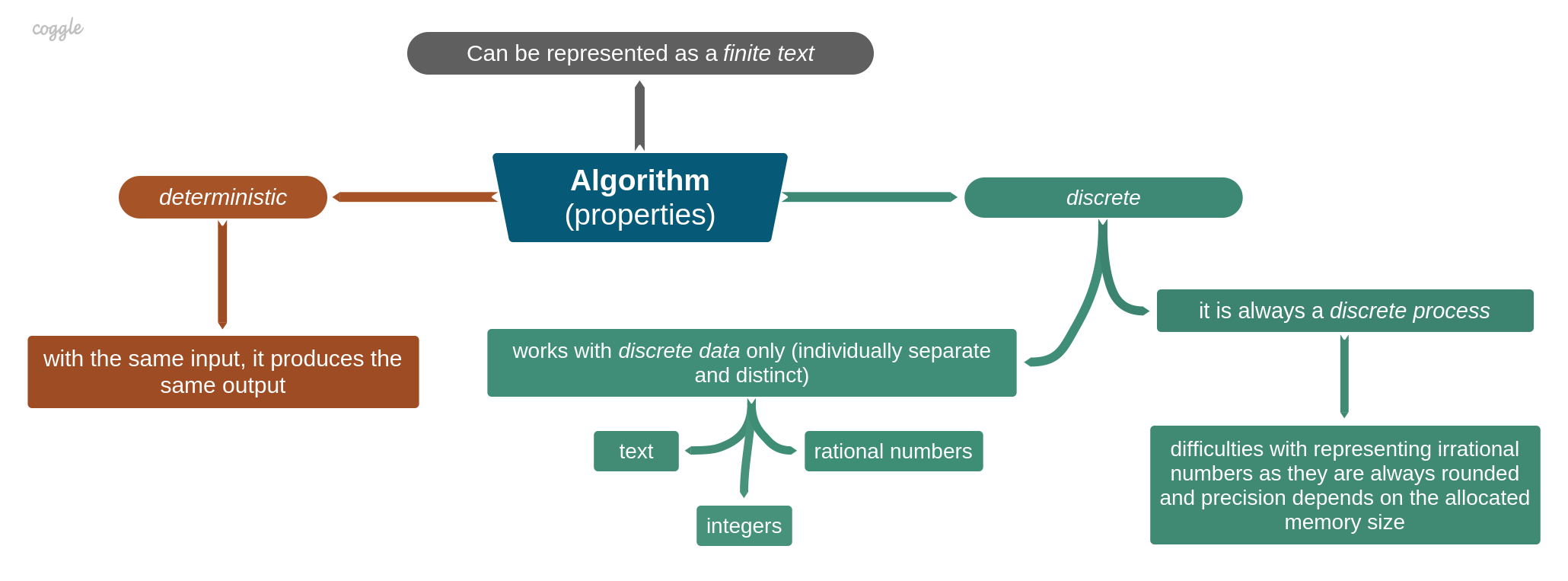

CS is a study of using computation and computer systems for solving real-world problems. Dealing mostly with software, the study includes the theory of computation and computer architecture, design, development, and application of software systems. The most common problems are organized in groups in particular areas, such as Distributed Systems, Artificial Intelligence, Data Science, Programming Languages and Compilers, Algorithms and Data Structures, etc. Summarizing, CS mainly focuses on finding answers to the following questions (by John DeNero, cs61a):

- which real-world problems can be solved using computation

- how to solve these problems

- how to solve them efficiently

The fact that CS is all about software, makes it tightly coupled to Electrical Engineering that deals with hardware and focuses on designing computer systems and electronic devices for running software in the most efficient way.

Unlike CS, IT is a study of using computers to design, build, and operate Information Systems which are used for storing and processing information (data). ICT extends it by applying telecommunications for receiving and transmitting data. It is crucial to notice, that IT apply existing technologies (e.g. hardware, operating systems, systems software, middleware applications, databases, networks) for creating Information Systems. Hence, IT professionals are users of technologies and utilize existing solutions (hardware and software) to create larger systems for solving a specific business need.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Computer Science vs Information Technology :: Vorakl's notes Computer Science vs Information Technology

Differences between two computer-related studies

If you ever thought about getting a computer-related (graduated) education, you probably came across a variety of similar disciplines, more or less connected to each other, but grouped under two major fields of study: Computer Science (CS) and Information Technology (IT). The latest one sometimes comes in a broader meaning - Information Communications Technology (ICT), and Computer Science, in turn, is highly linked to Electrical Engineering. But what exactly makes them all different?

Briefly, Computer Science creates computer software technologies, Electrical Engineering creates hardware to run this software in an efficient way, while Information Technology uses them later to create Information Systems for storing, processing and transmitting data.

CS is a study of using computation and computer systems for solving real-world problems. Dealing mostly with software, the study includes the theory of computation and computer architecture, design, development, and application of software systems. The most common problems are organized in groups in particular areas, such as Distributed Systems, Artificial Intelligence, Data Science, Programming Languages and Compilers, Algorithms and Data Structures, etc. Summarizing, CS mainly focuses on finding answers to the following questions (by John DeNero, cs61a):

- which real-world problems can be solved using computation

- how to solve these problems

- how to solve them efficiently

The fact that CS is all about software, makes it tightly coupled to Electrical Engineering that deals with hardware and focuses on designing computer systems and electronic devices for running software in the most efficient way.

Unlike CS, IT is a study of using computers to design, build, and operate Information Systems which are used for storing and processing information (data). ICT extends it by applying telecommunications for receiving and transmitting data. It is crucial to notice, that IT apply existing technologies (e.g. hardware, operating systems, systems software, middleware applications, databases, networks) for creating Information Systems. Hence, IT professionals are users of technologies and utilize existing solutions (hardware and software) to create larger systems for solving a specific business need.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/csp/index.html b/articles/csp/index.html

index 8ea4c1a4..06000625 100644

--- a/articles/csp/index.html

+++ b/articles/csp/index.html

@@ -1 +1 @@

- Constraint Satisfaction Problem (CSP) :: Vorakl's notes Constraint Satisfaction Problem (CSP)

A mathematical question that is defined by variables, domains, and constraints

Constraint Satisfaction Problem (CSP) is a class of problems that can be used to represent a large set of real-world problems. In particular, it is widely used in Artificial Intelligent (AI) as finding a solution for a formulated CSP may be used in decision making. There are a few adjacent areas in terms that for solving problems they all involve constraints: SAT (Boolean satisfiability problem), and the SMT (satisfiability modulo theories).

Generally speaking, the complexity of finding a solution for CSP is NP-Complete (takes exponential time), because a solution can be guessed and verified relatively fast (in polynomial time), and the SAT problem (NP-Hard) can be translated into a CSP problem. But, it also means, there is no known polynomial-time solution. Thus, the development of efficient algorithms and techniques for solving CSPs is crucial and appears as a subject in many scientific pieces of research.

The simplest kind of CSPs are defined by a set of discrete variables (e.g. X, Y), finite non-empty domains (e.g. 0<X<=4, Y<10), and a set of constraints (e.g. Y=X^2, X<>3) which involve some of the variables. There are distinguished two related terms: the Possible World (or the Complete Assignment) of a CSP is an assignment of values to all variables and the Model (or the Solution to a CSP) is a possible world that satisfies all the constraints.

Within the topic, there is a programming paradigm - Constraint Programming. It allows building a Constraint Based System where relations between variables are stated in a form of constraints. Hence, this defines certain specifics:

- the paradigm doesn't specify a sequence of steps to execute for finding a solution, but rather declares the solution's properties and desired result. This makes the paradigm a sort of Declarative Programming

- it's usually characterized by non-directional computation when to satisfy constraints, computations are propagated throughout a system accordingly to changed conditions or variables' values.

The example of using this paradigm can be seen in another my article "A converter of a character's case and Ascii codes".

A CSP can be effectively applied in a number of areas like mappings, assignments, planning and scheduling, games (e.g. sudoku), solving system of equations, etc. There are also a number of software frameworks which provide CSP solvers, like python-constraint and Google OR-Tools, just to name a few.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Constraint Satisfaction Problem (CSP) :: Vorakl's notes Constraint Satisfaction Problem (CSP)

A mathematical question that is defined by variables, domains, and constraints

Constraint Satisfaction Problem (CSP) is a class of problems that can be used to represent a large set of real-world problems. In particular, it is widely used in Artificial Intelligent (AI) as finding a solution for a formulated CSP may be used in decision making. There are a few adjacent areas in terms that for solving problems they all involve constraints: SAT (Boolean satisfiability problem), and the SMT (satisfiability modulo theories).

Generally speaking, the complexity of finding a solution for CSP is NP-Complete (takes exponential time), because a solution can be guessed and verified relatively fast (in polynomial time), and the SAT problem (NP-Hard) can be translated into a CSP problem. But, it also means, there is no known polynomial-time solution. Thus, the development of efficient algorithms and techniques for solving CSPs is crucial and appears as a subject in many scientific pieces of research.

The simplest kind of CSPs are defined by a set of discrete variables (e.g. X, Y), finite non-empty domains (e.g. 0<X<=4, Y<10), and a set of constraints (e.g. Y=X^2, X<>3) which involve some of the variables. There are distinguished two related terms: the Possible World (or the Complete Assignment) of a CSP is an assignment of values to all variables and the Model (or the Solution to a CSP) is a possible world that satisfies all the constraints.

Within the topic, there is a programming paradigm - Constraint Programming. It allows building a Constraint Based System where relations between variables are stated in a form of constraints. Hence, this defines certain specifics:

- the paradigm doesn't specify a sequence of steps to execute for finding a solution, but rather declares the solution's properties and desired result. This makes the paradigm a sort of Declarative Programming

- it's usually characterized by non-directional computation when to satisfy constraints, computations are propagated throughout a system accordingly to changed conditions or variables' values.

The example of using this paradigm can be seen in another my article "A converter of a character's case and Ascii codes".

A CSP can be effectively applied in a number of areas like mappings, assignments, planning and scheduling, games (e.g. sudoku), solving system of equations, etc. There are also a number of software frameworks which provide CSP solvers, like python-constraint and Google OR-Tools, just to name a few.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/data-structure/index.html b/articles/data-structure/index.html

index 4929b593..a74bd608 100644

--- a/articles/data-structure/index.html

+++ b/articles/data-structure/index.html

@@ -1 +1 @@

- Organizing Unstructured Data :: Vorakl's notes Organizing Unstructured Data

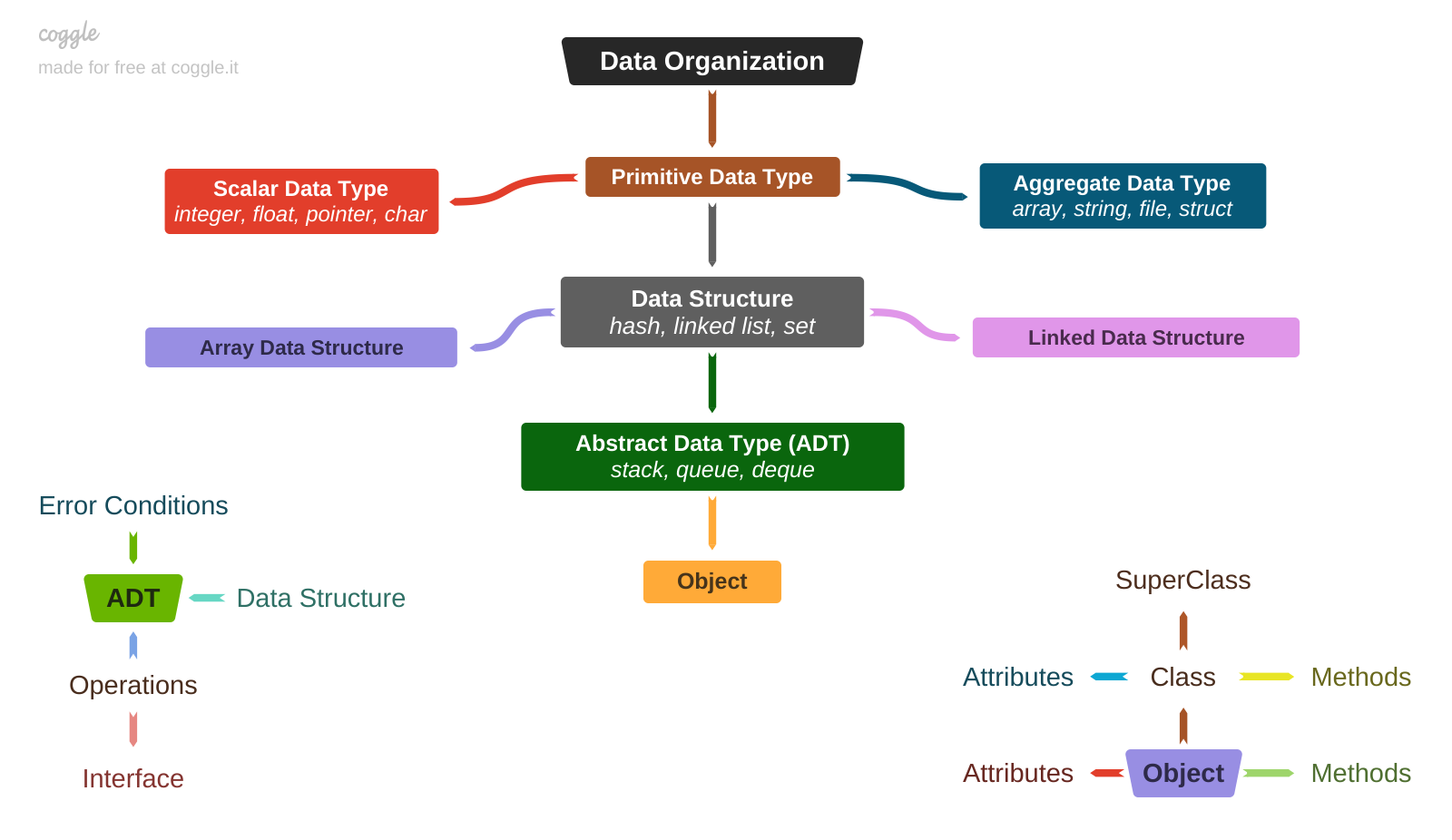

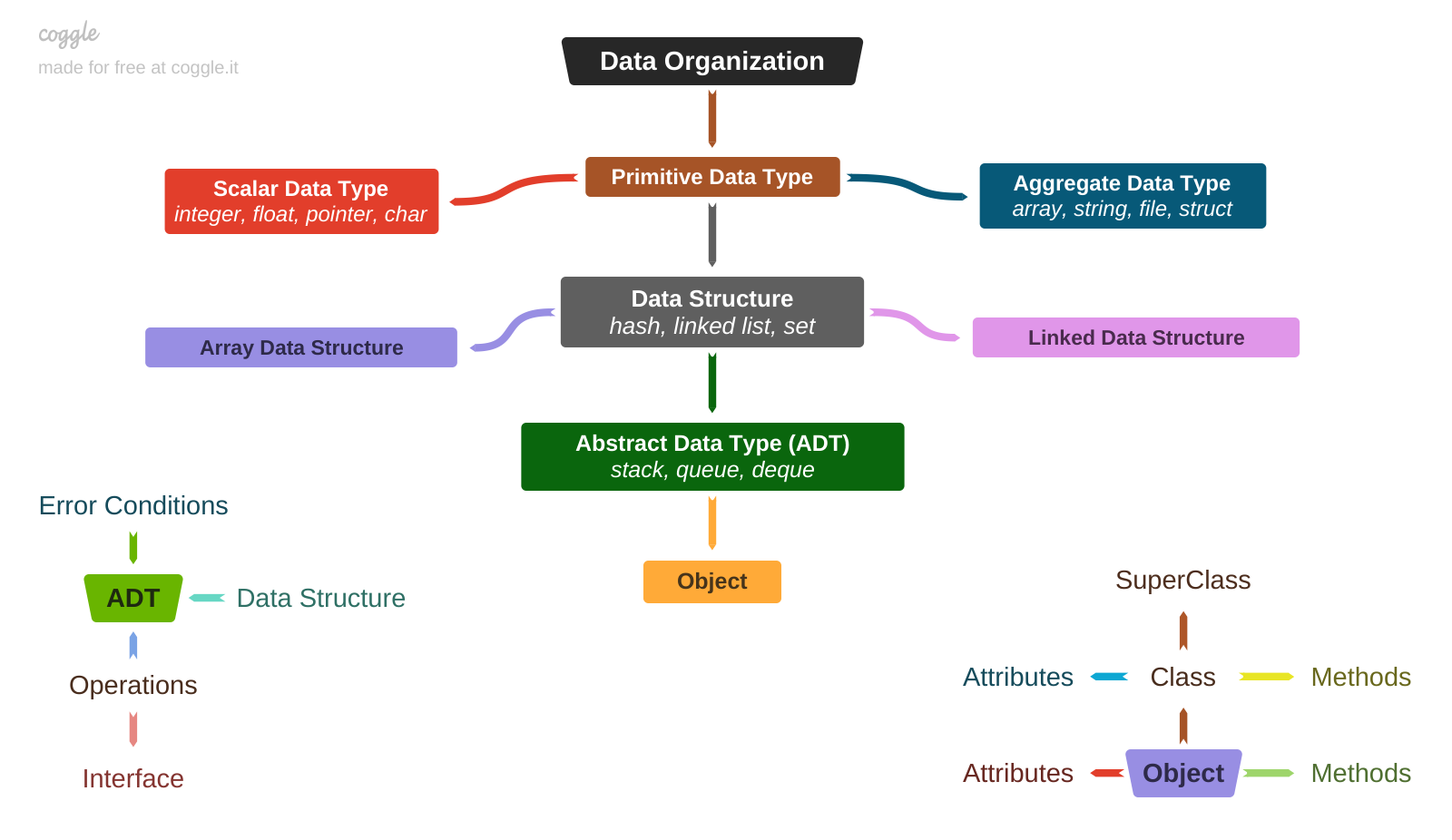

Managing data complexity using types, structures, ADTs, and objects

Topics

The main, if not the only, purpose of a computer is to compute information. It doesn't always have to be a computation of mathematical formulas. In general, it is a transformation of one piece of information into another. Computers only work with information that can be represented as discrete data. The input and output of a computer engine are always natural numbers or text (a sequence of symbols from a dictionary that correspond to certain natural numbers).

As long as data is unstructured, it's hard to make some sense of it. But once data is given a structured form, it becomes meaningful and suitable for further transformation.

Type

The simplest form of data organization is Type. In general, a data type defines a set of values with certain properties. It usually defines a size in bytes. A primitive data type is an ordered set of bytes. When a variable of a primitive data type has only one value (holds only one piece of information), it's called a scalar and a type - scalar data type. Well-known examples are integer, float, pointer, and char. A collection of primitive (scalar) data types is called an aggregate data type, and it allows multiple values to be stored. This can be a homogeneous collection, where all elements are of the same type, such as an array, a string, or a file. Or it can be heterogeneous, where elements are of different types, such as a structure or a class. The main property is an ordered set of bytes. The internal organization is simple, straightforward, and all actions (e.g. reading or modifying) are performed directly on the data, according to a hardware architecture that defines the byte order in memory (little-/big-endian).

Data Structure

The next level of data abstraction is called Data Structure. It brings more complexity, but also more flexibility to make the right choice between access speed, ability to grow, modification speed, etc. Internally, it's represented by a collection of the scalar or aggregate data types. The main focus is on the details of the internal organization and a set of rules to control that organization. There are two types of data structures that result from a difference in the memory allocation of the underlying elements:

- Array Data Structures (static), based on physically contiguous elements in memory, with no gaps between them;

- Linked Data Structures (dynamic), based on elements, dynamically allocated in memory and linked in a linear structure using pointers (usually, one or two)

Well-known examples are linked list, hash (dictionary), set, list. These data structures are defined only by their physical organization in memory and a set of rules for data modifications that are performed directly. All internal implementation details are open. The actions performed on the data structures (add, remove, update, etc.) and the ways in which they are used can vary.

Abstract Data Type (ADT)

A higher level of data abstraction is represented by an Abstract Data Type (ADT), which shifts the focus from "how to store data" to "how to work with data". An ADT represents a logical organization, defined mainly by a list of predefined operations (functions) for manipulating data and controlling its consistency. Internally, data can be stored in any data structure or combination thereof. However, these internals are hidden and should not be directly accessible. All interactions with data are done through an interface (operations exposed to users). Most of ADTs share a common set of primitive operations, such as

- create - a constructor of a new instance

- destroy - a destructor of an existing instance

- add, get - the set-get functions for adding and removing elements of an instance

- is_empty, size - useful functions for managing existing data in an instance

The most common examples of ADTs are stack and queue. Both of these ADTs can be implemented using either array or linked data structures, and both have specific rules for adding and removing elements. All of these specifics are abstracted as functions, which in turn, perform appropriate actions on internal data. Dividing an ADT into operations and data structures creates an abstraction barrier that allows you to maintain a solid interface with the flexibility to change internals without side effects on the code using that ADT.

Object

A more comprehensive way of abstracting data is represented by Objects. An object can be thought of as a container for a piece of data that has certain properties. Similar to the ADT, this data is not directly accessible (known as encapsulation or isolation), but instead each object has a set of tightly bound methods that can be applied to operate on its data to produce an expected behavior for that object (known as polymorphism). All such methods are really just functions collected under a class. However, they become methods when called to operate on a particular object. Methods can also be inherited from another class, which is called a superclass. Unlike an ADT, an object doesn't represent a particular type of data, but rather a set of attributes, and it behaves as it should when its methods are invoked. Attributes are nothing more than variables of any type (including ADTs). Formally speaking, classes act as specifications of all of the object's attributes and the methods that can be invoked to deal with those attributes.

The Object-Oriented Programming (OOP) paradigm uses objects as the central elements of a program design. At program runtime, each object exists as an instance of a class. The class, in turn, plays a dual role: it defines the behavior (through a set of methods) of all objects instantiated from it, and it declares a prototype of data that will carry some state within the object once it's instantiated. As long as the state is isolated (incapsulated) in the objects, access to that state is organized by communication between the objects via message passing. It's usually implemented by calling a method of an object, which is equivalent to "passing" a message to that object.

This behavior is completely different from the Structured Programming Paradigm, which instead of maintaining a collection of interacting objects with an an embedded state, relies on dividing of a project's code into a sequence of mostly independent tasks (functions) that operate with an externally (to them) stored state.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Organizing Unstructured Data :: Vorakl's notes Organizing Unstructured Data

Managing data complexity using types, structures, ADTs, and objects

Topics

The main, if not the only, purpose of a computer is to compute information. It doesn't always have to be a computation of mathematical formulas. In general, it is a transformation of one piece of information into another. Computers only work with information that can be represented as discrete data. The input and output of a computer engine are always natural numbers or text (a sequence of symbols from a dictionary that correspond to certain natural numbers).

As long as data is unstructured, it's hard to make some sense of it. But once data is given a structured form, it becomes meaningful and suitable for further transformation.

Type

The simplest form of data organization is Type. In general, a data type defines a set of values with certain properties. It usually defines a size in bytes. A primitive data type is an ordered set of bytes. When a variable of a primitive data type has only one value (holds only one piece of information), it's called a scalar and a type - scalar data type. Well-known examples are integer, float, pointer, and char. A collection of primitive (scalar) data types is called an aggregate data type, and it allows multiple values to be stored. This can be a homogeneous collection, where all elements are of the same type, such as an array, a string, or a file. Or it can be heterogeneous, where elements are of different types, such as a structure or a class. The main property is an ordered set of bytes. The internal organization is simple, straightforward, and all actions (e.g. reading or modifying) are performed directly on the data, according to a hardware architecture that defines the byte order in memory (little-/big-endian).

Data Structure

The next level of data abstraction is called Data Structure. It brings more complexity, but also more flexibility to make the right choice between access speed, ability to grow, modification speed, etc. Internally, it's represented by a collection of the scalar or aggregate data types. The main focus is on the details of the internal organization and a set of rules to control that organization. There are two types of data structures that result from a difference in the memory allocation of the underlying elements:

- Array Data Structures (static), based on physically contiguous elements in memory, with no gaps between them;

- Linked Data Structures (dynamic), based on elements, dynamically allocated in memory and linked in a linear structure using pointers (usually, one or two)

Well-known examples are linked list, hash (dictionary), set, list. These data structures are defined only by their physical organization in memory and a set of rules for data modifications that are performed directly. All internal implementation details are open. The actions performed on the data structures (add, remove, update, etc.) and the ways in which they are used can vary.

Abstract Data Type (ADT)

A higher level of data abstraction is represented by an Abstract Data Type (ADT), which shifts the focus from "how to store data" to "how to work with data". An ADT represents a logical organization, defined mainly by a list of predefined operations (functions) for manipulating data and controlling its consistency. Internally, data can be stored in any data structure or combination thereof. However, these internals are hidden and should not be directly accessible. All interactions with data are done through an interface (operations exposed to users). Most of ADTs share a common set of primitive operations, such as

- create - a constructor of a new instance

- destroy - a destructor of an existing instance

- add, get - the set-get functions for adding and removing elements of an instance

- is_empty, size - useful functions for managing existing data in an instance

The most common examples of ADTs are stack and queue. Both of these ADTs can be implemented using either array or linked data structures, and both have specific rules for adding and removing elements. All of these specifics are abstracted as functions, which in turn, perform appropriate actions on internal data. Dividing an ADT into operations and data structures creates an abstraction barrier that allows you to maintain a solid interface with the flexibility to change internals without side effects on the code using that ADT.

Object

A more comprehensive way of abstracting data is represented by Objects. An object can be thought of as a container for a piece of data that has certain properties. Similar to the ADT, this data is not directly accessible (known as encapsulation or isolation), but instead each object has a set of tightly bound methods that can be applied to operate on its data to produce an expected behavior for that object (known as polymorphism). All such methods are really just functions collected under a class. However, they become methods when called to operate on a particular object. Methods can also be inherited from another class, which is called a superclass. Unlike an ADT, an object doesn't represent a particular type of data, but rather a set of attributes, and it behaves as it should when its methods are invoked. Attributes are nothing more than variables of any type (including ADTs). Formally speaking, classes act as specifications of all of the object's attributes and the methods that can be invoked to deal with those attributes.

The Object-Oriented Programming (OOP) paradigm uses objects as the central elements of a program design. At program runtime, each object exists as an instance of a class. The class, in turn, plays a dual role: it defines the behavior (through a set of methods) of all objects instantiated from it, and it declares a prototype of data that will carry some state within the object once it's instantiated. As long as the state is isolated (incapsulated) in the objects, access to that state is organized by communication between the objects via message passing. It's usually implemented by calling a method of an object, which is equivalent to "passing" a message to that object.

This behavior is completely different from the Structured Programming Paradigm, which instead of maintaining a collection of interacting objects with an an embedded state, relies on dividing of a project's code into a sequence of mostly independent tasks (functions) that operate with an externally (to them) stored state.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/dreyfus/index.html b/articles/dreyfus/index.html

index 066b4e9f..b07769a6 100644

--- a/articles/dreyfus/index.html

+++ b/articles/dreyfus/index.html

@@ -1 +1 @@

- Dreyfus model of skill acquisition :: Vorakl's notes Dreyfus model of skill acquisition

The power of the human mind over the reasoning machines

There is a special term to describe the intelligence of computers that actually mimics human intelligence only to a certain extent. It is called Artificial Intelligence (AI) to emphasize its synthetic nature. Going back to 1980, the U.S. military had been supporting AI research for more than 20 years, and it was time to ask why almost all efforts to build AI systems capable of "providing expert medical advice, exhibiting common sense, and functioning autonomously in critical military situations" had failed.

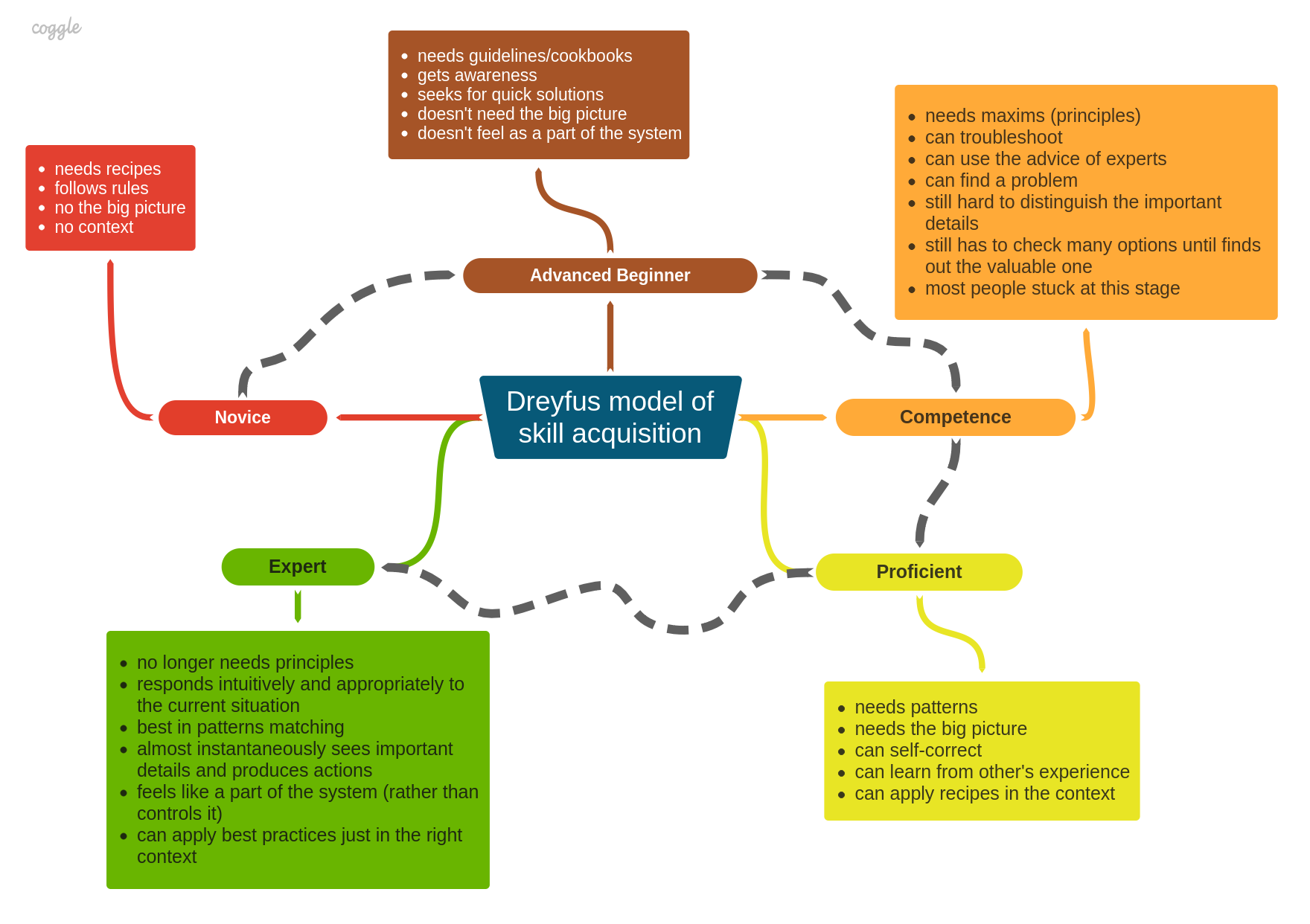

In February 1980, the Dreyfus brothers (Stuart E. and Hubert L.) presented their report on the research conducted at the University of California, Berkeley with the support of the US Air Force Office of Scientific Research - "A Five-Stage Model of the Mental Activities Involved in Directed Skill Acquisition". This model shows how humans acquire new skills through instruction and experience. After the publication, they continued to work on the model and with some modifications and extensions, the results were published in their book "Mind over Machine. The Power of Human Intuition and Expertise in the Era of the Computer" in 1986. As the brothers said in the book, "our intention is more modest, but more fundamental": "what we can reasonably expect from computer intelligence".

A process of skill acquisition typically goes through five developmental stages (novice, advanced beginner, competent, proficient, expert). Each stage characterizes a particular behavior and mental response to a situation. A successful transition from one stage to another is associated with the appropriate transformation of four mental functions: recall, recognition, decision, awareness. Understanding the stages of development is essential for any skill training procedure to facilitate the process of acquiring new skills and advancing to the next stage. For human beings, the entire journey from novice to expert usually takes an average of 10 years. Or, more realistically, 10,000 hours of learning and practice. Some research has also shown that there are only up to 5% of experts on the planet, regardless of their field of expertise.

The report also pointed out that "as the student becomes more skilled, he depends less on abstract principles and more on concrete experience" and that only "skill in its minimal form is produced by following abstract formal rules". Thus, the higher levels of performance depend on "everyday, concrete, experience in problem solving". By gaining experience, a student is able to start from scratch by following rules of action in context-free situations. Then, after gaining some experience, a student is able to use guidelines for responding to situational aspects. Further practice leads to being able to use maxims (principles) to determine an appropriate action. All of these three transformations correspond to the first three stages and always represent some kind of analytical decision-making process that is needed to "connect his understanding of the general situation with a specific action".

The significant change occurs at Stage 4, when the number of "experienced situations is so vast that normally each specific situation immediately dictates an intuitively appropriate action". The key point here is the shift "from analytical thinking to intuitive response". The highest level of expertise with masterful performance occurs only when the expert "no longer needs principles" and is able to "move almost instantaneously into the production of the appropriate perspective and its associated action". This is one of the most important observations: experts perform beyond the rules, and their performance degrades significantly when they are constrained by any kind of formal rules or processes. In 2008 the Pragmatic Bookshelf published an excellent book "Pragmatic Thinking and Learning" by Andy Hunt. It gives a lot of insight into the human brain and how it works, a number of tips on how to learn more and faster, including a detailed review of the Dreyfus model (ch.2). I also created mind maps for the entire book.

Understanding the difference between the human mind and how a reasoning machine "thinks" helps to set realistic expectations for the development of artificial intelligence systems. In trying to define what computers should do, it is first necessary to be clear about what computers can do. In this regard, the computer is an analytical engine, so it can apply rules and make logical inferences. Of course, it does this with extreme speed, high accuracy, and remarkable reproducibility, but it still follows a certain logic. It turns out that precisely this crucial difference between a reasoning machine, which is perhaps perpetually limited, and the intuitive expertise of the human mind, which cannot be reduced to rules, seems to be a good starting point for finding the balance between humans and computers.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Dreyfus model of skill acquisition :: Vorakl's notes Dreyfus model of skill acquisition

The power of the human mind over the reasoning machines

There is a special term to describe the intelligence of computers that actually mimics human intelligence only to a certain extent. It is called Artificial Intelligence (AI) to emphasize its synthetic nature. Going back to 1980, the U.S. military had been supporting AI research for more than 20 years, and it was time to ask why almost all efforts to build AI systems capable of "providing expert medical advice, exhibiting common sense, and functioning autonomously in critical military situations" had failed.

In February 1980, the Dreyfus brothers (Stuart E. and Hubert L.) presented their report on the research conducted at the University of California, Berkeley with the support of the US Air Force Office of Scientific Research - "A Five-Stage Model of the Mental Activities Involved in Directed Skill Acquisition". This model shows how humans acquire new skills through instruction and experience. After the publication, they continued to work on the model and with some modifications and extensions, the results were published in their book "Mind over Machine. The Power of Human Intuition and Expertise in the Era of the Computer" in 1986. As the brothers said in the book, "our intention is more modest, but more fundamental": "what we can reasonably expect from computer intelligence".

A process of skill acquisition typically goes through five developmental stages (novice, advanced beginner, competent, proficient, expert). Each stage characterizes a particular behavior and mental response to a situation. A successful transition from one stage to another is associated with the appropriate transformation of four mental functions: recall, recognition, decision, awareness. Understanding the stages of development is essential for any skill training procedure to facilitate the process of acquiring new skills and advancing to the next stage. For human beings, the entire journey from novice to expert usually takes an average of 10 years. Or, more realistically, 10,000 hours of learning and practice. Some research has also shown that there are only up to 5% of experts on the planet, regardless of their field of expertise.

The report also pointed out that "as the student becomes more skilled, he depends less on abstract principles and more on concrete experience" and that only "skill in its minimal form is produced by following abstract formal rules". Thus, the higher levels of performance depend on "everyday, concrete, experience in problem solving". By gaining experience, a student is able to start from scratch by following rules of action in context-free situations. Then, after gaining some experience, a student is able to use guidelines for responding to situational aspects. Further practice leads to being able to use maxims (principles) to determine an appropriate action. All of these three transformations correspond to the first three stages and always represent some kind of analytical decision-making process that is needed to "connect his understanding of the general situation with a specific action".

The significant change occurs at Stage 4, when the number of "experienced situations is so vast that normally each specific situation immediately dictates an intuitively appropriate action". The key point here is the shift "from analytical thinking to intuitive response". The highest level of expertise with masterful performance occurs only when the expert "no longer needs principles" and is able to "move almost instantaneously into the production of the appropriate perspective and its associated action". This is one of the most important observations: experts perform beyond the rules, and their performance degrades significantly when they are constrained by any kind of formal rules or processes. In 2008 the Pragmatic Bookshelf published an excellent book "Pragmatic Thinking and Learning" by Andy Hunt. It gives a lot of insight into the human brain and how it works, a number of tips on how to learn more and faster, including a detailed review of the Dreyfus model (ch.2). I also created mind maps for the entire book.

Understanding the difference between the human mind and how a reasoning machine "thinks" helps to set realistic expectations for the development of artificial intelligence systems. In trying to define what computers should do, it is first necessary to be clear about what computers can do. In this regard, the computer is an analytical engine, so it can apply rules and make logical inferences. Of course, it does this with extreme speed, high accuracy, and remarkable reproducibility, but it still follows a certain logic. It turns out that precisely this crucial difference between a reasoning machine, which is perhaps perpetually limited, and the intuitive expertise of the human mind, which cannot be reduced to rules, seems to be a good starting point for finding the balance between humans and computers.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/engineering/index.html b/articles/engineering/index.html

index 7453fadf..b8d0b02c 100644

--- a/articles/engineering/index.html

+++ b/articles/engineering/index.html

@@ -1 +1 @@

- Who is an engineer :: Vorakl's notes Who is an engineer

What's the crucial difference between engineers and scientists

With the coming of the Industrial Age (approx. 1760-1950), an agricultural society transitioned to an economy, based primarily on massive industrial production. It was the time of the rise of specialized educational centers, where people could get deep knowledge in many different fields of science and became either scientists or engineers.

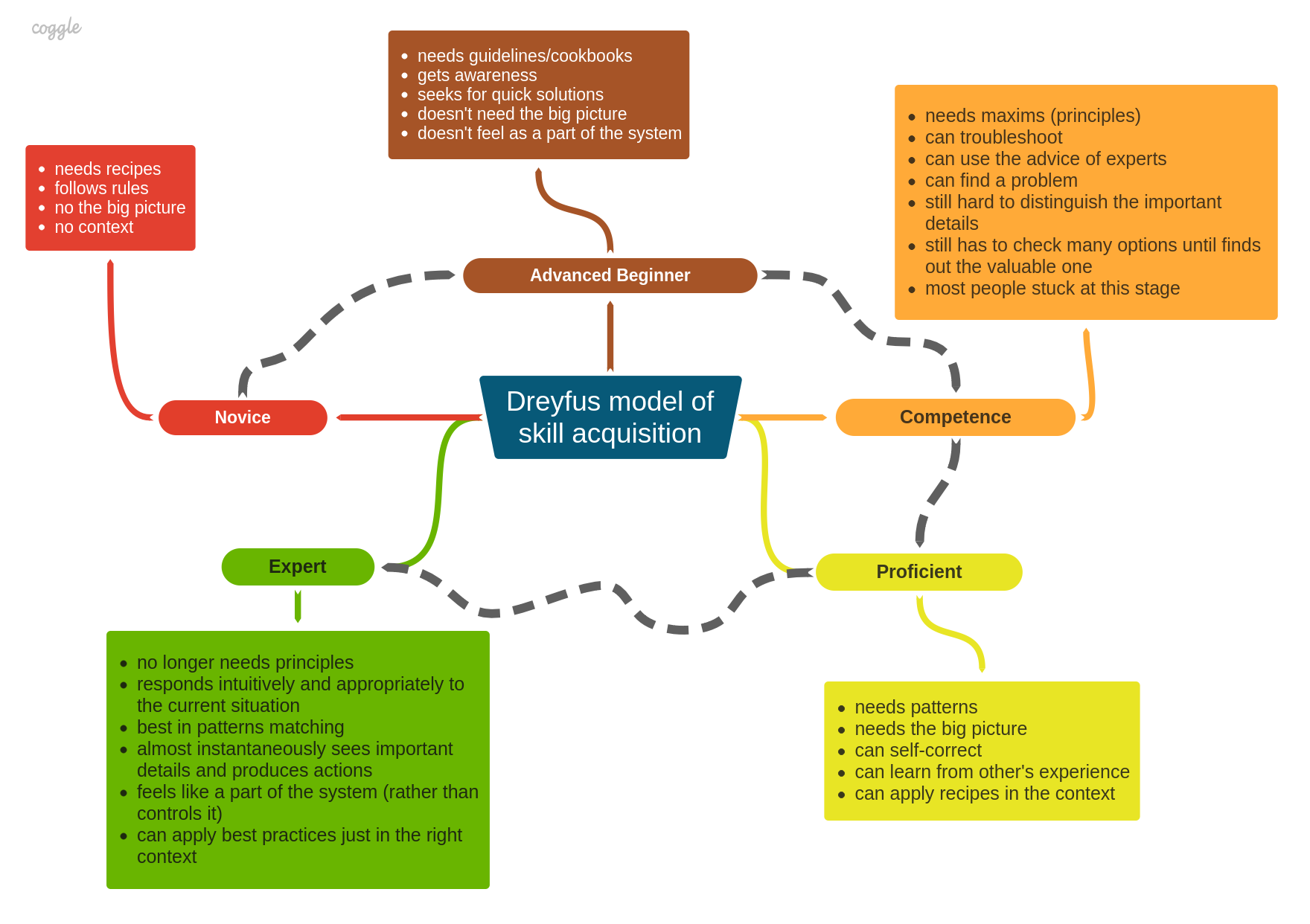

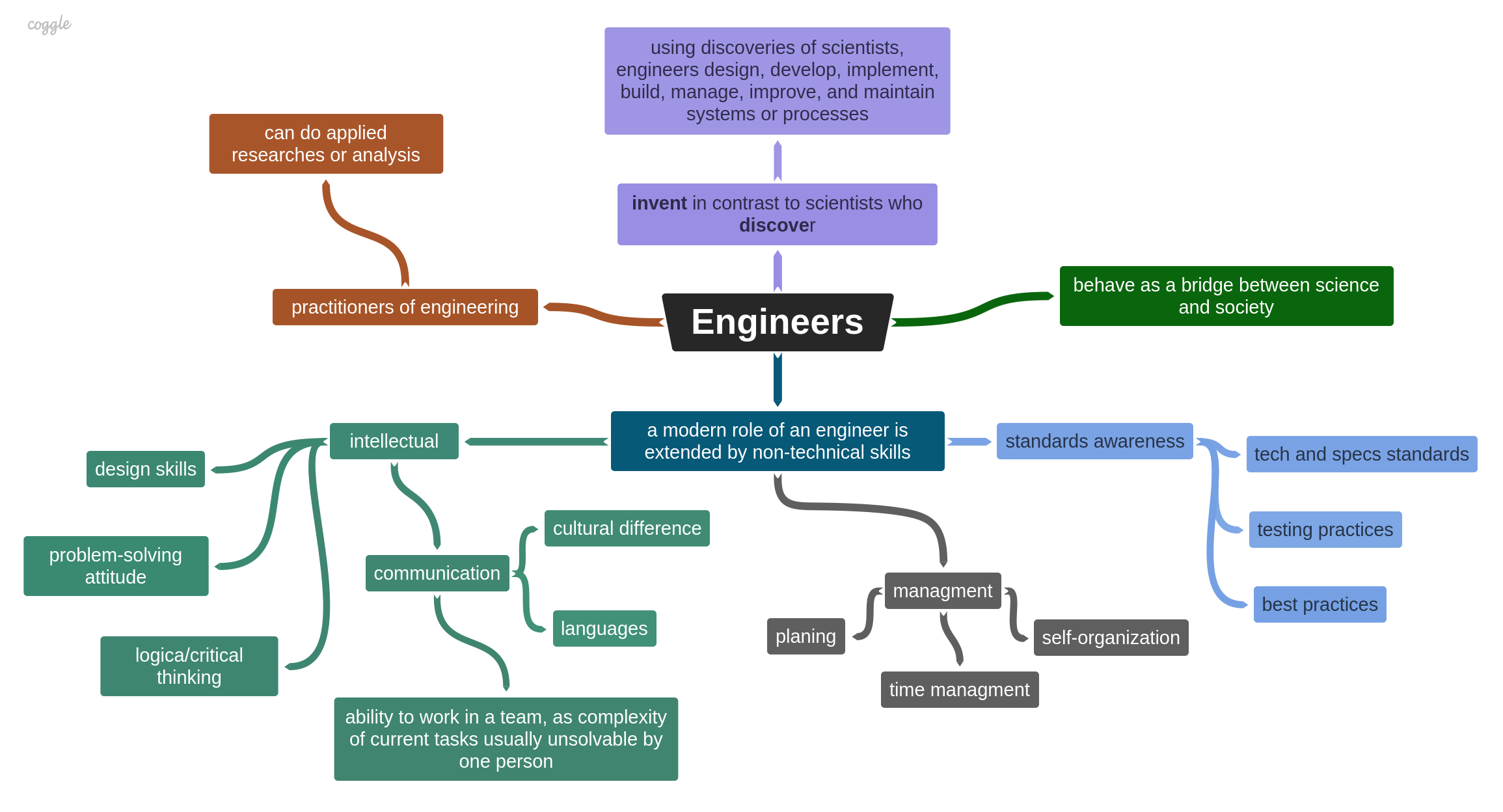

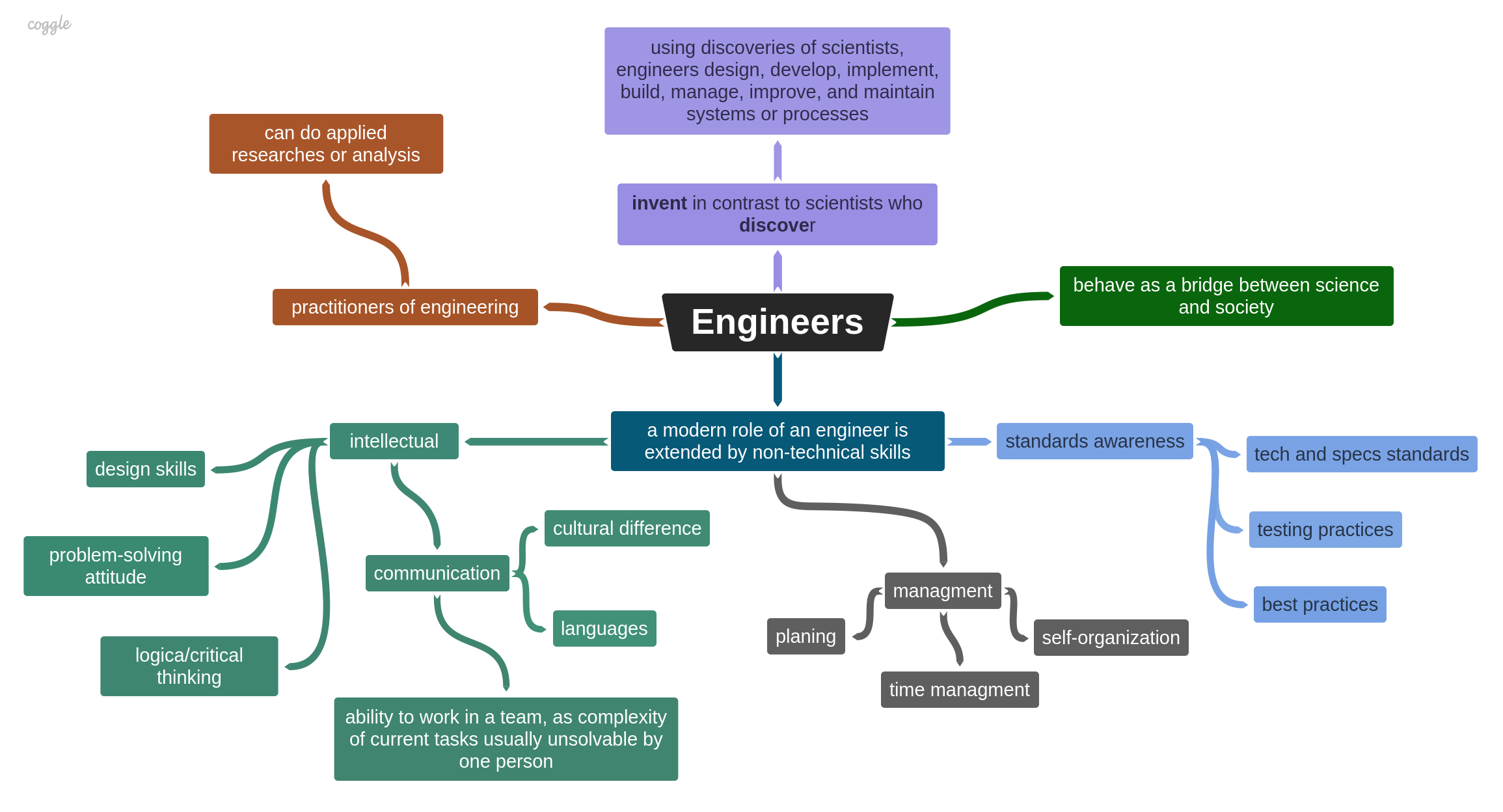

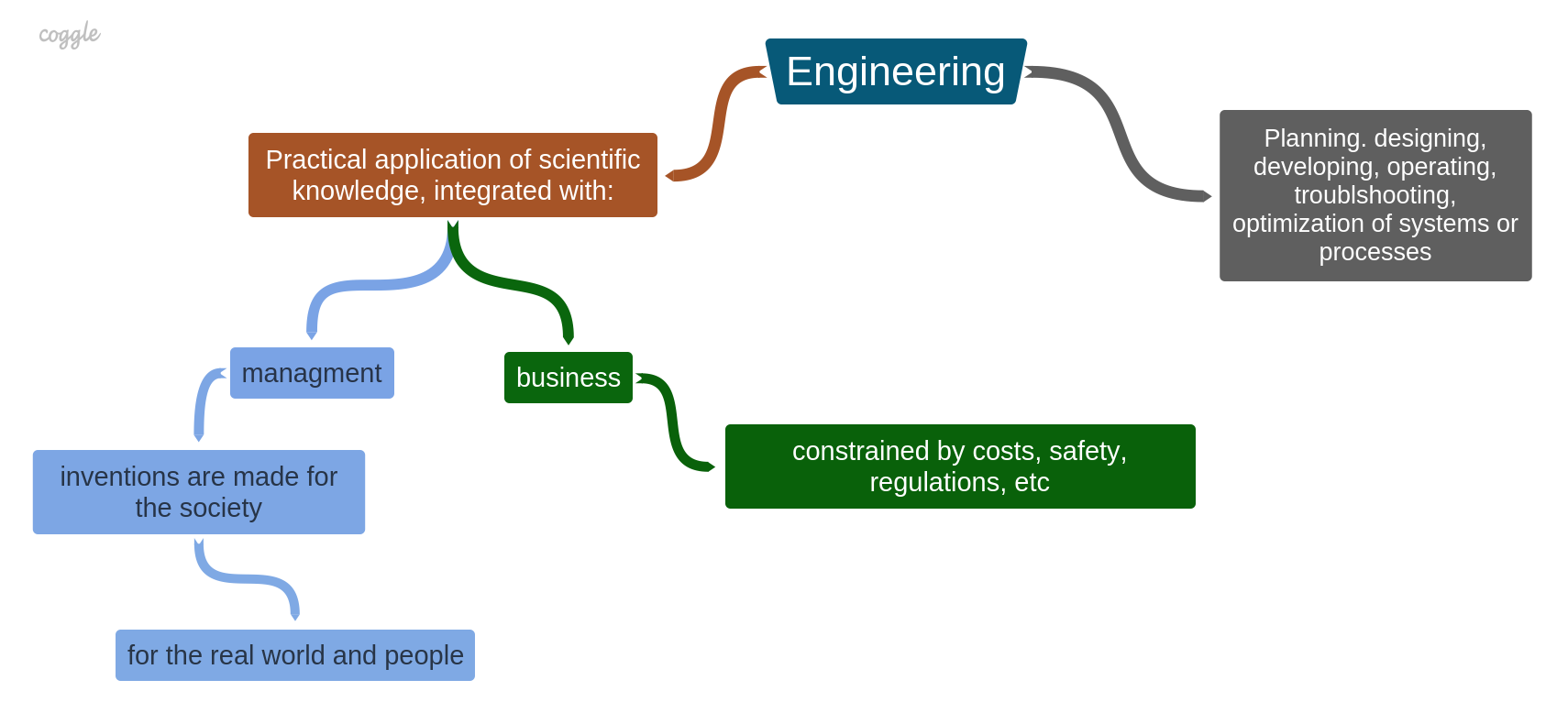

Briefly, the main difference between scientists and engineers is that scientists discover, but engineers invent. That is Engineers, using discoveries of scientists, invent systems, devices, processes, which they design, develop, implement, build, manage, maintain, and improve as different stages of the Engineering process. Engineering is a practical application of scientific knowledge, integrated with business and management. In other words, engineers act as a bridge between science and society by doing inventions for the real world and people.

In the modern time of the Information Age, the role of an engineer has been extended by non-technical skills, as a result of the globalization and spreading of trade relationships across the globe. These are skills such as intellectual (communication, foreign languages, critical thinking), management (time management, self-organization, planning), and standards awareness (tech certifications, best practices).

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Who is an engineer :: Vorakl's notes Who is an engineer

What's the crucial difference between engineers and scientists

With the coming of the Industrial Age (approx. 1760-1950), an agricultural society transitioned to an economy, based primarily on massive industrial production. It was the time of the rise of specialized educational centers, where people could get deep knowledge in many different fields of science and became either scientists or engineers.

Briefly, the main difference between scientists and engineers is that scientists discover, but engineers invent. That is Engineers, using discoveries of scientists, invent systems, devices, processes, which they design, develop, implement, build, manage, maintain, and improve as different stages of the Engineering process. Engineering is a practical application of scientific knowledge, integrated with business and management. In other words, engineers act as a bridge between science and society by doing inventions for the real world and people.

In the modern time of the Information Age, the role of an engineer has been extended by non-technical skills, as a result of the globalization and spreading of trade relationships across the globe. These are skills such as intellectual (communication, foreign languages, critical thinking), management (time management, self-organization, planning), and standards awareness (tech certifications, best practices).

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/goto/index.html b/articles/goto/index.html

index 841226d7..81e143c0 100644

--- a/articles/goto/index.html

+++ b/articles/goto/index.html

@@ -1 +1 @@

- Structured Programming Paradigm :: Vorakl's notes Structured Programming Paradigm

What can cause too much use of "goto statements"

There was a time when computer programs were so long and unstructured that only a few people could logically navigate the source code of huge software projects. With low-level programming languages, programmers used various equivalents of "goto" statements for conditional branching, which often resulted in decreased readability and difficulty maintaining logical context, especially when jumping too far into another subroutine.

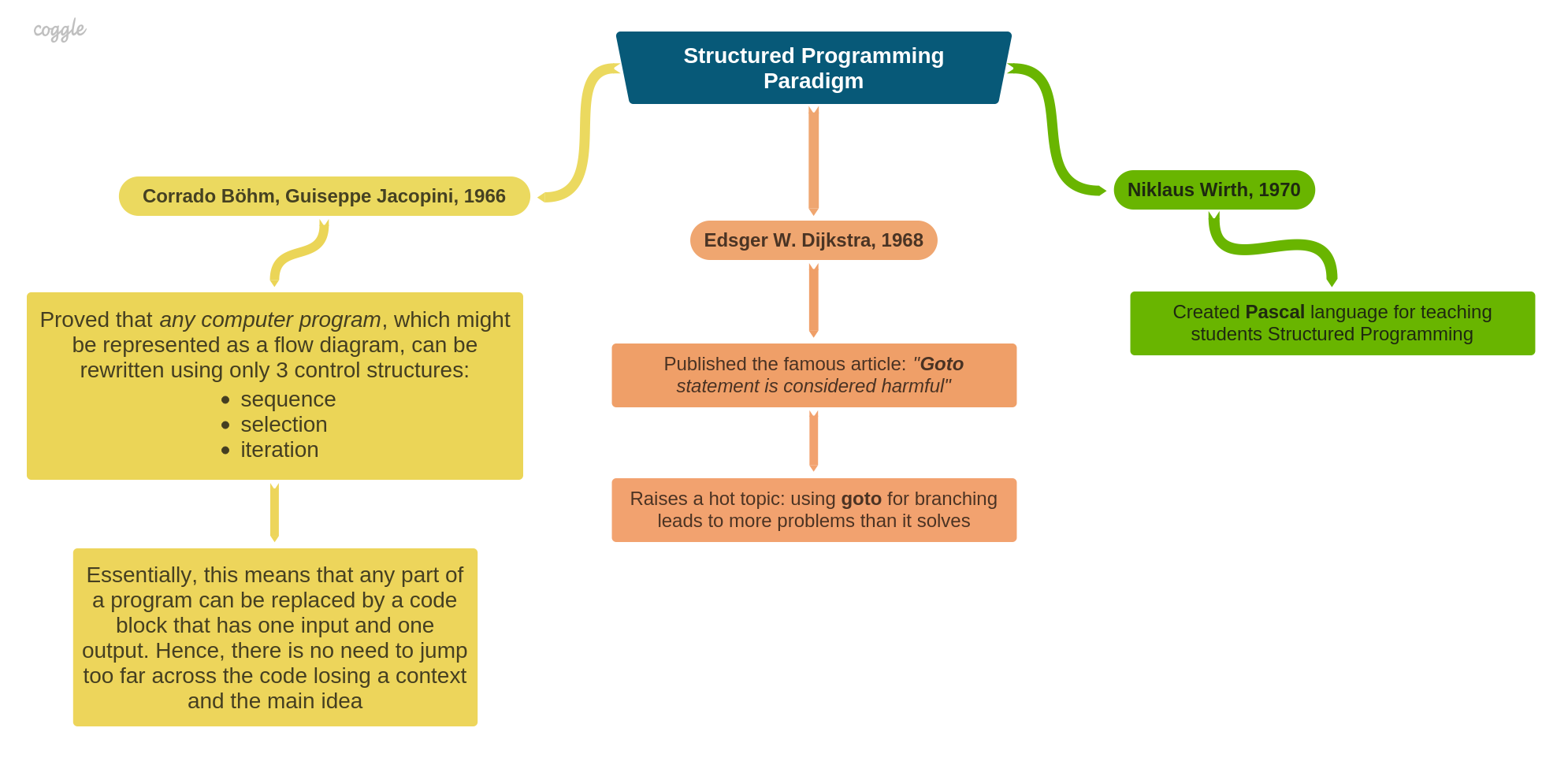

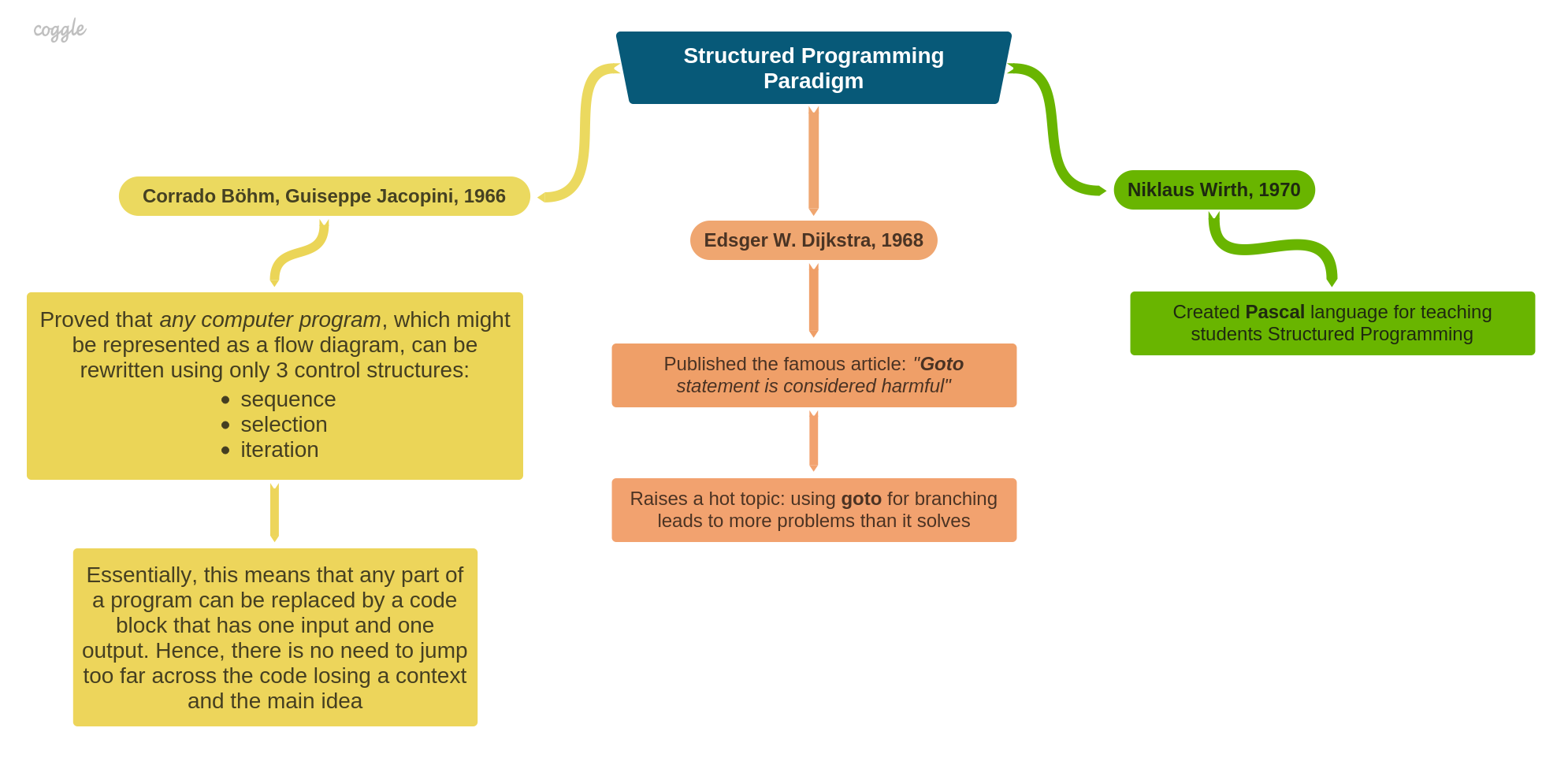

A few things happened on the way to a solution that eventually appeared in the form of the Structured Programming Paradigm. In 1966, Corrado Böhm and Guiseppe Jacopini proved a theorem that any computer program that can be represented as a flowchart can be rewritten using only 3 control structures (sequence, selection, and iteration).

In 1968, Edsger W. Dijkstra published the influential article "Go To Statement Considered Harmful", in which he pointed out that using too many goto statements would make computer programs harder to read and understand. However, his intention was unfortunately misunderstood and misused by the almost complete abandonment of the use of "goto" in high-level programming languages, even at the cost of less readable and vague code.

As a result of his work on improving ALGOL, Niklaus Wirth designed a new imperative programming language, Pascal, which was released in 1970. It has been widely used for teaching structured programming design to students for several decades since.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

+ Structured Programming Paradigm :: Vorakl's notes Structured Programming Paradigm

What can cause too much use of "goto statements"

There was a time when computer programs were so long and unstructured that only a few people could logically navigate the source code of huge software projects. With low-level programming languages, programmers used various equivalents of "goto" statements for conditional branching, which often resulted in decreased readability and difficulty maintaining logical context, especially when jumping too far into another subroutine.

A few things happened on the way to a solution that eventually appeared in the form of the Structured Programming Paradigm. In 1966, Corrado Böhm and Guiseppe Jacopini proved a theorem that any computer program that can be represented as a flowchart can be rewritten using only 3 control structures (sequence, selection, and iteration).

In 1968, Edsger W. Dijkstra published the influential article "Go To Statement Considered Harmful", in which he pointed out that using too many goto statements would make computer programs harder to read and understand. However, his intention was unfortunately misunderstood and misused by the almost complete abandonment of the use of "goto" in high-level programming languages, even at the cost of less readable and vague code.

As a result of his work on improving ALGOL, Niklaus Wirth designed a new imperative programming language, Pascal, which was released in 1970. It has been widely used for teaching structured programming design to students for several decades since.

This is my personal blog. All ideas, opinions, examples, and other information that can be found here are my own and belong entirely to me. This is the result of my personal efforts and activities at my free time. It doesn't relate to any professional work I've done and doesn't have correlations with any companies I worked for, I'm currently working, or will work in the future.

\ No newline at end of file

diff --git a/articles/index.html b/articles/index.html

index 9d4b891d..349419f5 100644

--- a/articles/index.html

+++ b/articles/index.html

@@ -1 +1 @@

- Archive :: Vorakl's notes

\ No newline at end of file

+ Archive :: Vorakl's notes

\ No newline at end of file

diff --git a/articles/learning/index.html b/articles/learning/index.html

index 36f0b30e..1803fed4 100644

--- a/articles/learning/index.html

+++ b/articles/learning/index.html

@@ -1 +1 @@

- My notes for the "Pragmatic Thinking and Learning" book :: Vorakl's notes My notes for the "Pragmatic Thinking and Learning" book

Notes in the form of mindmaps