Architecture

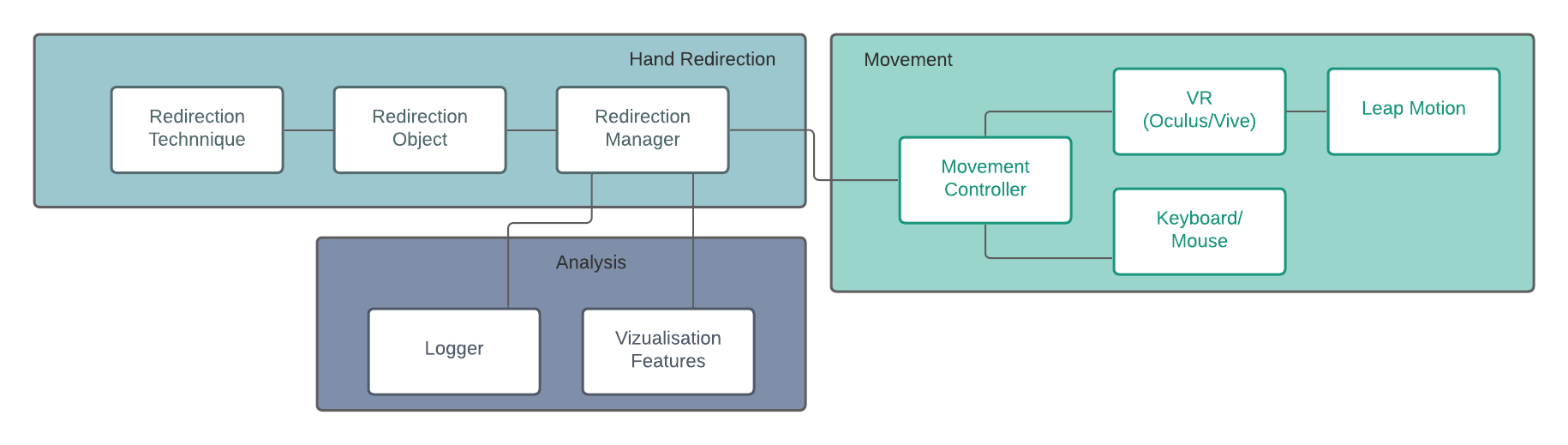

HaRT can be divided into three main components:

- Hand Redirection Component: includes the implementation of different Hand Redirection algorithms as well as the required classes, data structures, and Hand Redirection management logic.
- Movement Component: integrates common VR SDKs and implements a script to use mouse and keyboard to move the real hand when no VR system is available.
- Analysis Component: contains various visualization features and logging functionality.

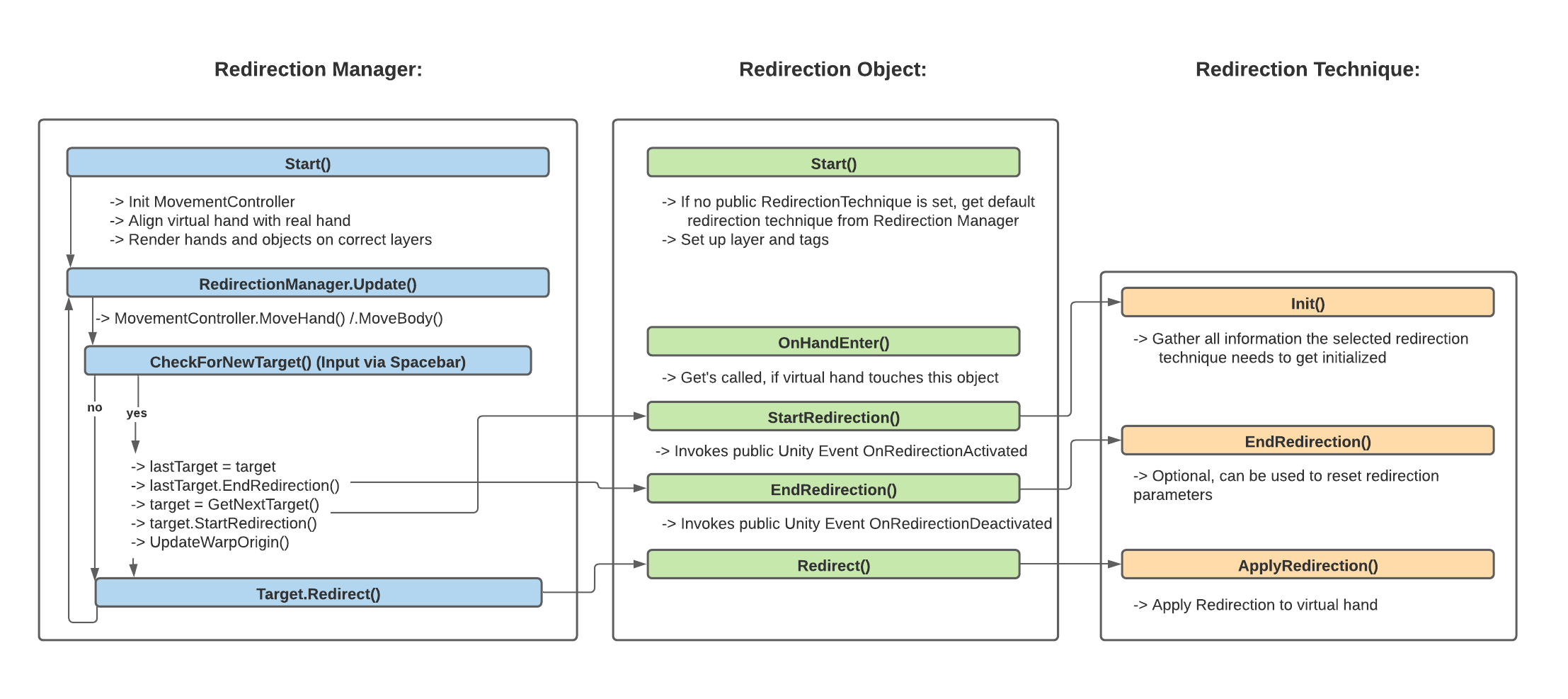

The Hand Redirection Component contains all the main functionality and logic required to deploy or test hand redirection. Its core is the Redirection Manager class, which controls all processes. The Redirection Object class represents a virtual object that can be interacted with, and a Redirection Technique holds the logic for a specific hand redirection algorithm. A detailed sequence diagram that describes the flow of redirection can be found below.

The Redirection Manager is the heart of the toolkit. Central parameters and settings are configured through the Unity Inspector interface (e.g., defining which objects in the scene belong to the real world and which to the virtual world).

The Redirection Manager also starts and terminates each redirection. A default redirection technique, which is used if no technique is set for a specific virtual object, can be set here as well. To run custom application code in response to a redirection, event callbacks can be registered; they are triggered, for example, at the start and end of a redirection and when the virtual hand reaches the virtual target.
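The manager behaviour described above (falling back to a default technique, and firing callbacks at the start, at the end, and when the target is reached) can be sketched in a language-neutral way. The Python below is only an illustration and not the toolkit's Unity/C# API: `RedirectionManager`, `VirtualObject`, `on`, `notify_target_reached`, and the event names are hypothetical. It also assumes that only one redirection is active at a time, which the text above does not state.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical names throughout; this mirrors the concepts, not the real C# classes.


@dataclass
class VirtualObject:
    name: str
    technique: Optional[str] = None  # None means "use the manager's default"


class RedirectionManager:
    def __init__(self, default_technique: str) -> None:
        self.default_technique = default_technique
        self.active: Optional[VirtualObject] = None
        self._callbacks: Dict[str, List[Callable[[VirtualObject], None]]] = {
            "start": [],
            "end": [],
            "target_reached": [],
        }

    def on(self, event: str, callback: Callable[[VirtualObject], None]) -> None:
        """Register a callback for 'start', 'end', or 'target_reached'."""
        self._callbacks[event].append(callback)

    def _emit(self, event: str, obj: VirtualObject) -> None:
        for callback in self._callbacks[event]:
            callback(obj)

    def technique_for(self, obj: VirtualObject) -> str:
        """Use the object's own technique if set, otherwise the default."""
        return obj.technique or self.default_technique

    def start(self, obj: VirtualObject) -> str:
        """Start redirecting towards obj and return the technique that is used."""
        if self.active is not None:  # assumption: one active redirection at a time
            self.end()
        self.active = obj
        self._emit("start", obj)
        return self.technique_for(obj)

    def notify_target_reached(self) -> None:
        if self.active is not None:
            self._emit("target_reached", self.active)

    def end(self) -> None:
        if self.active is not None:
            obj, self.active = self.active, None
            self._emit("end", obj)


events = []
manager = RedirectionManager(default_technique="default-technique")
for event_name in ("start", "target_reached", "end"):
    # Bind event_name as a default argument so each callback keeps its own value.
    manager.on(event_name, lambda obj, e=event_name: events.append((e, obj.name)))

cube = VirtualObject("cube")  # no technique set, so the default applies
lever = VirtualObject("lever", technique="custom-technique")

print(manager.start(cube))   # technique chosen for the cube
manager.notify_target_reached()
print(manager.start(lever))  # ends the cube redirection first
manager.end()
print(events)
```

Keeping the fallback in a single `technique_for` method means application code never has to check whether an object carries its own technique.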

Each mapping of a virtual to a real object is represented by a Redirection Object script. This script is attached to the GameObject that represents the virtual object of the redirection. It holds a set of references to Virtual-To-Real Connection objects in the scene, each representing an individual mapping of a physical location to a virtual location.
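As a rough illustration of this data structure, the following Python sketch models a redirection object that owns several virtual-to-real connections. It is not the toolkit's C# script: the class and field names (`RedirectionObject`, `VirtualToRealConnection`, `real_position`, `virtual_position`, `offset`) are hypothetical, and positions are simplified to plain 3-tuples instead of Unity transforms.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class VirtualToRealConnection:
    """One mapping of a physical location to a virtual location (hypothetical)."""
    real_position: Vec3
    virtual_position: Vec3

    def offset(self) -> Vec3:
        # Vector from the physical location to the virtual location.
        return tuple(v - r for v, r in zip(self.virtual_position, self.real_position))


@dataclass
class RedirectionObject:
    """A virtual object together with its virtual-to-real connections (hypothetical)."""
    name: str
    connections: List[VirtualToRealConnection] = field(default_factory=list)

    def add_connection(self, real: Vec3, virtual: Vec3) -> VirtualToRealConnection:
        connection = VirtualToRealConnection(real, virtual)
        self.connections.append(connection)
        return connection


# One physical prop location mapped to two different virtual locations.
button = RedirectionObject("button")
button.add_connection(real=(0.0, 0.0, 0.5), virtual=(0.25, 0.0, 0.5))
button.add_connection(real=(0.0, 0.0, 0.5), virtual=(-0.25, 0.0, 0.5))

for connection in button.connections:
    print(connection.offset())
```

Storing each mapping as its own record lets a single virtual object refer to several physical locations, or several virtual locations share one physical prop, without changing the object itself.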

The Hand Redirection Techniques are implemented as individual scripts. For more information on the internal inheritance structure, see the corresponding wiki page about Redirection Techniques.
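To give a feel for what "one script per technique" with a shared base type can look like, here is a minimal Python sketch. It does not reproduce the toolkit's actual inheritance structure or any of its shipped algorithms: the base class `RedirectionTechnique`, its method `virtual_hand`, and the two subclasses are hypothetical, and `LinearOffset` is just a generic interpolation chosen for illustration, in which the virtual hand is shifted by a growing fraction of the real-to-virtual target offset as the real hand approaches the real target.

```python
import math
from abc import ABC, abstractmethod
from typing import Tuple

Vec3 = Tuple[float, float, float]


class RedirectionTechnique(ABC):
    """Hypothetical base type: maps the tracked real hand to a virtual hand position."""

    @abstractmethod
    def virtual_hand(self, real_hand: Vec3, origin: Vec3,
                     real_target: Vec3, virtual_target: Vec3) -> Vec3:
        ...


class NoRedirection(RedirectionTechnique):
    """Identity mapping: the virtual hand follows the real hand exactly."""

    def virtual_hand(self, real_hand, origin, real_target, virtual_target):
        return real_hand


class LinearOffset(RedirectionTechnique):
    """Illustrative only: blend in the target offset linearly with reach progress."""

    def virtual_hand(self, real_hand, origin, real_target, virtual_target):
        total = math.dist(origin, real_target)
        if total == 0.0:
            progress = 1.0
        else:
            remaining = math.dist(real_hand, real_target)
            # Clamp to [0, 1] so moving away from the target never inverts the offset.
            progress = min(1.0, max(0.0, 1.0 - remaining / total))
        return tuple(
            h + progress * (v - r)
            for h, r, v in zip(real_hand, real_target, virtual_target)
        )


origin = (0.0, 0.0, 0.0)
real_target = (0.0, 0.0, 1.0)
virtual_target = (0.5, 0.0, 1.0)

technique: RedirectionTechnique = LinearOffset()
for real_hand in (origin, (0.0, 0.0, 0.5), real_target):
    print(real_hand, technique.virtual_hand(real_hand, origin, real_target, virtual_target))
```

Because every technique exposes the same method, calling code can swap one technique for another without any other change.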

The Movement Component handles the user's motion input. The toolkit is designed to work with the widely used SteamVR platform, supporting HTC Vive and Oculus Rift. Additionally, the user's hands can be tracked with Leap Motion.

The toolkit can also simulate user movements using only a mouse and keyboard as input devices. This simulation is practical for quick testing or while developing novel Hand Redirection concepts. The manual movement input overview can be found below.

The Analysis Component implements additional functionality that aims to lower the barrier to understanding, evaluating, and debugging hand redirection techniques and scenes. The toolkit's logging functionality is described in the Logging section of the wiki, which also provides a detailed overview of all Visualization Features.