Commit

Add integrations documentation (#256)

Add 3 documentations for VSCode, GitHub Action and SIEM.

Corentin Mors

authored

Jun 10, 2024

1 parent

06671cc

commit da7f452

Showing

13 changed files

with

353 additions

and

64 deletions.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,12 @@ | ||

| import { Card, Cards } from 'nextra/components'; | ||

|

|

||

| # Integrations | ||

|

|

||

| We support several integrations with popular services and tools. | ||

| Explore the integrations below to learn how to improve your development workflows. | ||

|

|

||

| <Cards> | ||

| <Card title="🐙 GitHub Action" href="/integrations/github-action" /> | ||

| <Card title="💻 VS Code Extension" href="/integrations/vscode" /> | ||

| <Card title="🗂️ Logs to SIEM" href="/integrations/siem" /> | ||

| </Cards> |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,5 @@ | ||

| { | ||

| "github-action": "GitHub Action", | ||

| "vscode": "Visual Studio Code", | ||

| "siem": "SIEM" | ||

| } |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,49 @@ | ||

| import { Steps } from 'nextra/components'; | ||

|

|

||

| # GitHub Action for Dashlane | ||

|

|

||

| This CI/CD GitHub Action lets developers inject secrets from their Dashlane vault into their GitHub workflows. | ||

|

|

||

| <Steps> | ||

| ### Register your device locally | ||

|

|

||

| ```sh | ||

| dcli devices register "action-name" | ||

| ``` | ||

|

|

||

| For more details, refer to the Dashlane CLI documentation: https://dashlane.github.io/dashlane-cli | ||

|

|

||

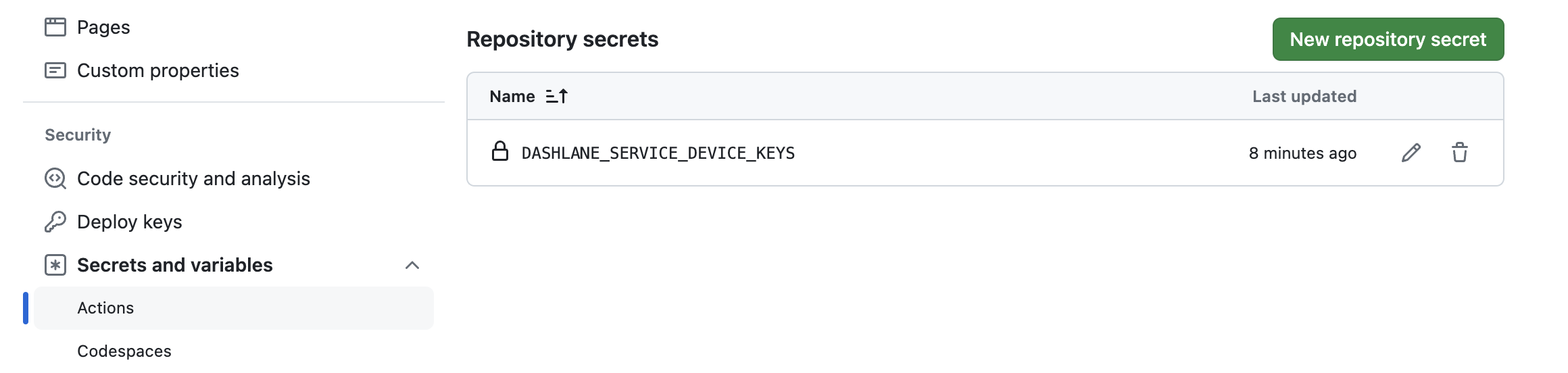

| ### Set GitHub Action environment secrets | ||

|

|

||

| Set the environment variable prompted by the previous step in your GitHub repository's secrets and variables. | ||

|

|

||

|  | ||

|

|

||

| ### Create a job to load your secrets | ||

|

|

||

| Set the same environment variables in your pipeline, along with the IDs of the secrets you want to read from Dashlane. Each ID starts with `dl://`. | ||

|

|

||

| ```yml | ||

| steps: | ||

| - uses: actions/checkout@v2 | ||

| - name: Load secrets | ||

| id: load_secrets | ||

| uses: ./ # Dashlane/github-action@<version> | ||

| env: | ||

| ACTION_SECRET_PASSWORD: dl://918E3113-CA48-4642-8FAF-CE832BDED6BE/password | ||

| ACTION_SECRET_NOTE: dl://918E3113-CA48-4642-8FAF-CE832BDED6BE/note | ||

| DASHLANE_SERVICE_DEVICE_KEYS: ${{ secrets.DASHLANE_SERVICE_DEVICE_KEYS }} | ||

| ``` | ||

| ### Retrieve your secrets in the next steps | ||

| Read your secrets in any subsequent step of your pipeline using `GITHUB_OUTPUT`. | ||

|

|

||

| ```yml | ||

| - name: test secret values | ||

| env: | ||

| ACTION_SECRET_PASSWORD: ${{ steps.load_secrets.outputs.ACTION_SECRET_PASSWORD }} | ||

| ACTION_SECRET_NOTE: ${{ steps.load_secrets.outputs.ACTION_SECRET_NOTE }} | ||

| ``` | ||

|

|

||

| </Steps> |
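
The page above says later steps read secrets through `GITHUB_OUTPUT`. As a rough illustration of that general GitHub Actions mechanism (not necessarily how the Dashlane action is implemented), a step can append `name=value` lines to the file named by `$GITHUB_OUTPUT`, and later steps then see them as `steps.<id>.outputs.<name>`. The `set_output` helper and the placeholder value are assumptions made for this sketch.

```shell
#!/bin/sh
# Outside GitHub Actions GITHUB_OUTPUT is unset, so fall back to a temporary file.
: "${GITHUB_OUTPUT:=$(mktemp)}"

# Hypothetical helper: append one single-line "name=value" pair to the step output file.
# Multi-line values need GitHub's delimiter syntax, which this sketch does not cover.
set_output() {
  printf '%s=%s\n' "$1" "$2" >> "$GITHUB_OUTPUT"
}

# Placeholder value only; a real step would write the secret it resolved.
set_output ACTION_SECRET_NOTE "placeholder-value"
```

With a step `id` of `load_secrets`, a value written this way would be available as `steps.load_secrets.outputs.ACTION_SECRET_NOTE`, which matches the expressions used in the workflow snippet above.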

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,205 @@ | ||

| # Send your audit logs to a SIEM | ||

|

|

||

| **Read full documentation on how to use the Dashlane CLI to send your audit logs to a SIEM, here: https://github.com/Dashlane/dashlane-audit-logs** | ||

|

|

||

| --- | ||

|

|

||

| This project allows you to retrieve your Dashlane audit logs and send them to the SIEM or storage solution of your choice, using FluentBit. At the moment, we provide out-of-the-box configurations for the following solutions: | ||

|

|

||

| - Azure Log Analytics workspace | ||

| - Azure blob storage | ||

| - Splunk | ||

| - Elasticsearch | ||

|

|

||

| This list is not exhaustive, as other destinations can be used. You can find the list of supported platforms on FluentBit's website: https://docs.fluentbit.io/manual/pipeline/outputs | ||

|

|

||

| ## Prerequisites | ||

|

|

||

| In order to manage the Dashlane audit logs of your business account, you need to generate the credentials that will be used to pull the logs. The procedure can be found here: https://dashlane.github.io/dashlane-cli/business | ||

|

|

||

| ## How does it work? | ||

|

|

||

| The provided Docker image uses the Dashlane CLI to pull the audit logs and send them to your SIEM of choice. By default, when running the image in a container, the logs from DAY-1 will be retrieved, and new logs will be pulled every thirty minutes. To handle the logs, we included FluentBit with this basic configuration file: | ||

|

|

||

| ``` | ||

| [INPUT] | ||

| Name stdin | ||

| Tag dashlane | ||

| [OUTPUT] | ||

| Name stdout | ||

| Match * | ||

| Format json_lines | ||

| ``` | ||

|

|

||

| To send the logs to a new destination, extend this configuration file template with an **OUTPUT** section, as described in the following sections. To use your custom configuration file, override the **DASHLANE_CLI_FLUENTBIT_CONF** environment variable and set it to the path of your configuration file. The method to pass your file will depend on the platform you use to run the image. | ||

|

|

||

| ## Accessing the logs | ||

|

|

||

| The first step to retrieve the audit logs is to run the custom image we provide, which can be found here: https://hub.docker.com/r/dashlane/audit-logs | ||

|

|

||

| This image can run on the platform of your choice. To run a simple test, you can deploy it with Docker as follows: | ||

|

|

||

| ### Environment variables | ||

|

|

||

| `DASHLANE_CLI_FLUENTBIT_CONF` | ||

|

|

||

| - Path of the FluentBit configuration file | ||

| - Defaults to `/opt/fluent-bit.conf` | ||

|

|

||

| `DASHLANE_CLI_RUN_DELAY` | ||

|

|

||

| - Delay between each log pull | ||

| - Defaults to `60` seconds | ||

|

|

||

| `DASHLANE_TEAM_DEVICE_KEYS` | ||

|

|

||

| - Secret key to authenticate against Dashlane servers as the team | ||

| - [Documentation to generate the credentials](https://dashlane.github.io/dashlane-cli/business) | ||

|

|

||

| ### Running in Docker | ||

|

|

||

| ``` | ||

| docker pull dashlane/audit-logs | ||

| docker run -e DASHLANE_TEAM_DEVICE_KEYS=XXX -it dashlane/audit-logs:latest | ||

| ``` | ||

|

|

||

| Running these commands creates a simple container that pulls your business audit logs every minute and prints them to the container's stdout. | ||
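
To run the same container with a custom FluentBit configuration, one option with Docker is to bind-mount the file and point `DASHLANE_CLI_FLUENTBIT_CONF` at the mounted path. This is only a sketch: the in-container path `/opt/custom/fluent-bit.conf`, the local file name, and the `docker_cmd` helper are assumptions, and the command is printed rather than executed so it can be reviewed first.

```shell
#!/bin/sh
# Assumed in-container location for the custom file; any readable path should do.
mount_path="/opt/custom/fluent-bit.conf"
# Assumed local file, expected to sit in the current directory.
local_conf="$(pwd)/fluent-bit.conf"

# Hypothetical helper: print the docker command rather than running it.
# Note: the printed form loses quoting, so paths containing spaces need re-quoting.
docker_cmd() {
  echo docker run \
    -e DASHLANE_TEAM_DEVICE_KEYS=XXX \
    -e "DASHLANE_CLI_FLUENTBIT_CONF=$mount_path" \
    -v "$local_conf:$mount_path:ro" \
    -it dashlane/audit-logs:latest
}

docker_cmd
```

Copying the printed command into a terminal, with `XXX` replaced by real device keys, starts the container. The `:ro` suffix mounts the file read-only, which seems a reasonable default for a configuration file.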

|

|

||

| ### Kubernetes | ||

|

|

||

| The repository provides a Helm chart to deploy the service on Kubernetes. | ||

|

|

||

| ```bash | ||

| helm install dashlane-audit-logs dashlane-audit-logs/ | ||

| ``` | ||

|

|

||

| Example configurations are provided in `example/`. | ||

|

|

||

| ## SIEM configuration | ||

|

|

||

| ### Azure Log Analytics workspace | ||

|

|

||

| To send your Dashlane audit logs to an Azure Log Analytics Workspace, you can use the template provided in the dashlane-audit-logs repository. The template creates a container instance that automatically pulls and runs the Dashlane Docker image and sends the logs to a **ContainerInstanceLog_CL** table in the Log Analytics Workspace of your choice. Before deploying the template, you will have to provide: | ||

|

|

||

| - The location where you want your container to run (ex: "West Europe") | ||

| - Your Dashlane credentials | ||

| - Your Log Analytics Workspace ID and Shared Key | ||

|

|

||

| > **Click on the button to start the deployment** | ||

| > | ||

| > [Deploy to Azure](https://portal.azure.com/#create/Microsoft.Template/uri/https%3A%2F%2Fraw.githubusercontent.com%2FDashlane%2Fdashlane-audit-logs%2Fmain%2FAzureTemplates%2FLog%20Analytics%20Workspace%2Fazuredeploy.json) | ||

| ### Azure blob storage | ||

|

|

||

| If you want to send your logs to an Azure storage account, you can use the deployment template we provide in the dashlane-audit-logs repository, which will: | ||

|

|

||

| - Create a storage account and a file share to upload a custom FluentBit configuration file | ||

| - Create a container instance running the Docker image with your custom file | ||

|

|

||

| You will need: | ||

|

|

||

| - Your Dashlane credentials | ||

| - A custom FluentBit configuration file | ||

|

|

||

| > **Click on the button to start the deployment** | ||

| > | ||

| > [Deploy to Azure](https://portal.azure.com/#create/Microsoft.Template/uri/https%3A%2F%2Fraw.githubusercontent.com%2FDashlane%2Fdashlane-audit-logs%2Fmain%2FAzureTemplates%2FBlob%20storage%2Fazuredeploy.json) | ||

| Once your container is deployed, copy the following configuration into a file called "fluent-bit.conf". | ||

|

|

||

| ``` | ||

| [INPUT] | ||

| Name stdin | ||

| Tag dashlane | ||

| [OUTPUT] | ||

| Name stdout | ||

| Match * | ||

| Format json_lines | ||

| [OUTPUT] | ||

| name azure_blob | ||

| match * | ||

| account_name ${STORAGE_ACCOUNT_NAME} | ||

| shared_key ${ACCESS_KEY} | ||

| container_name audit-logs | ||

| auto_create_container on | ||

| tls on | ||

| blob_type blockblob | ||

| ``` | ||

|

|

||

| Then upload it to the storage account you just created. In the Azure Portal, go to **Storage accounts**, select the one you just created, go to **File shares**, select **fluentbit-configuration** and upload your configuration file. | ||

|

|

||

| > The "blob_type" setting tells FluentBit to create a blob for every log entry on the storage account, which makes the logs easier to manipulate in any later post-processing. | ||

| > The configuration provided above is meant to work out of the box, but can be customized to suit your needs. You can refer to FluentBit's documentation to see all available options: https://docs.fluentbit.io/manual/pipeline/outputs/azure_blob | ||

| ### Splunk | ||

|

|

||

| If you want to send your logs to Splunk, you need to create a HEC (HTTP Event Collector) on your Splunk instance. As an example, we will show here how to create one on a Splunk Cloud instance. | ||

|

|

||

| 1- On the Splunk console, go to **"Settings / Data input"** and click on **Add New** in the **HTTP Event Collector** line. | ||

|

|

||

| 2- Give your collector a name and click **Next** | ||

|

|

||

| 3- In the **Input settings** tab, keep the options as they are and click on **Next** | ||

|

|

||

| 4- In the **Review tab**, click on **Submit**. You should see a page indicating that the collector has been created. | ||

|

|

||

| > The token provided will be used to authenticate and send the logs to your Splunk instance. | ||

| You can test it by running the following command: | ||

|

|

||

| ``` | ||

| curl -k https://$SPLUNK_URL.com:8088/services/collector/event -H "Authorization: Splunk $SPLUNK_TOKEN" -d '{"event": "Dashlane test"}' | ||

| ``` | ||

|

|

||

| If everything is working, you should receive the following response: | ||

|

|

||

| ``` | ||

| {"text":"Success","code":0} | ||

| ``` | ||

|

|

||

| Finally, to send your Dashlane logs to Splunk, you need to customize your FluentBit configuration file by adding the relevant Splunk configuration: | ||

|

|

||

| ``` | ||

| [OUTPUT] | ||

| Name splunk | ||

| Match * | ||

| Host splunk-instance.com | ||

| Port 8088 | ||

| TLS On | ||

| splunk_token ${SPLUNK_TOKEN} | ||

| ``` | ||

|

|

||

| Here, you just need to change the host parameter to your own and pass your Splunk token as an environment variable to the container. | ||

| Once the data is sent, you can query it by going to the **"Apps/Search and reporting"** menu in the console and typing this basic query in the search bar: | ||

|

|

||

| ``` | ||

| index=* sourcetype=* | ||

| ``` | ||

|

|

||

| You should now be able to access your Dashlane audit logs. | ||
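
The HEC test described above can be scripted so that the response is checked rather than read by eye. A minimal sketch, assuming `SPLUNK_URL` and `SPLUNK_TOKEN` are exported as in the `curl` example; `hec_ok` is a hypothetical helper that only looks for `"code":0` in the response body.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a HEC response body reports code 0.
hec_ok() {
  printf '%s' "$1" | grep -Eq '"code"[[:space:]]*:[[:space:]]*0([^0-9]|$)'
}

# Only call Splunk when both variables are set, so the sketch is safe to run anywhere.
if [ -n "${SPLUNK_URL:-}" ] && [ -n "${SPLUNK_TOKEN:-}" ]; then
  response=$(curl -sk "https://$SPLUNK_URL.com:8088/services/collector/event" \
    -H "Authorization: Splunk $SPLUNK_TOKEN" \
    -d '{"event": "Dashlane test"}')
  if hec_ok "$response"; then
    echo "HEC reachable"
  else
    echo "HEC check failed: $response" >&2
  fi
fi
```

Matching on the `code` field instead of the whole body keeps the check tolerant of changes in field order or whitespace.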

|

|

||

| ### Elasticsearch | ||

|

|

||

| Work in progress | ||

|

|

||

| Output configuration for Elasticsearch | ||

|

|

||

| ``` | ||

| [OUTPUT] | ||

| Name es | ||

| Match * | ||

| Host host | ||

| Port 443 | ||

| tls on | ||

| HTTP_User user | ||

| HTTP_Passwd pwd | ||

| Suppress_Type_Name On | ||

| ``` | ||

|

|

||

| ## Notes | ||

|

|

||

| All configurations are provided as is and designed to work out of the box. If you want to customize them, you can consult the FluentBit documentation: https://docs.fluentbit.io/manual/pipeline/outputs |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,16 @@ | ||

| # VS Code Extension for Dashlane | ||

|

|

||

| The Dashlane VS Code extension helps integrate your favorite IDE with the Dashlane password manager. | ||

|

|

||

| You can download it from the [VS Code Marketplace](https://marketplace.visualstudio.com/items?itemName=Dashlane.dashlane-vscode). | ||

|

|

||

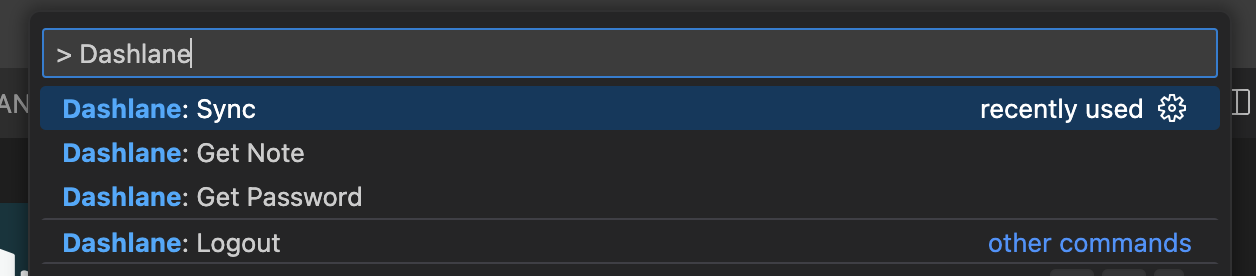

| ## Features | ||

|

|

||

|  | ||

|

|

||

| ## Extension Settings | ||

|

|

||

| This extension contributes the following settings: | ||

|

|

||

| - `dashlane-vscode.shell`: The shell path or a login shell to override the default shell of the Dashlane CLI process (see Node `child_process.spawn()` for more detail). | ||

| - `dashlane-vscode.cli`: The path to the Dashlane-CLI binary (resolvable from `$PATH`). |
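
To find a candidate value for `dashlane-vscode.cli`, it may help to check what the shell resolves from `$PATH`. This sketch assumes the binary is installed as `dcli`, the name used in the `dcli devices register` command on the GitHub Action page; `resolve_cli` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print the resolved path of a command, or nothing if it is not on PATH.
resolve_cli() {
  command -v "$1" 2>/dev/null
}

cli_path=$(resolve_cli dcli) || cli_path=""
if [ -n "$cli_path" ]; then
  echo "Candidate value for dashlane-vscode.cli: $cli_path"
else
  echo "dcli was not found on PATH"
fi
```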

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,8 +1,8 @@ | ||

| { | ||

| "index": "Get Started", | ||

| "authentication": "Authentication", | ||

| "devices": "Managing your Devices", | ||

| "vault": "Accessing your Vault", | ||

| "secrets": "Load secrets", | ||

| "backup": "Backup your local Vault" | ||

| "backup": "Backup your local Vault", | ||

| "logout": "Logout" | ||

| } |
