
Virtualization

Context Part Two: Virtualization

This chapter gives a brief overview of virtualization, Continuous Integration (CI), Infrastructure as Code (IaC) and Docker:

- An introduction to virtualization: what it is, its benefits and the ways to accomplish it;
- What microservices are and why this model is spreading so quickly;
- What Docker is and where it sits in the virtualization context;
- Infrastructure as Code and Continuous Integration, with particular reference to Docker technology.

Virtualization

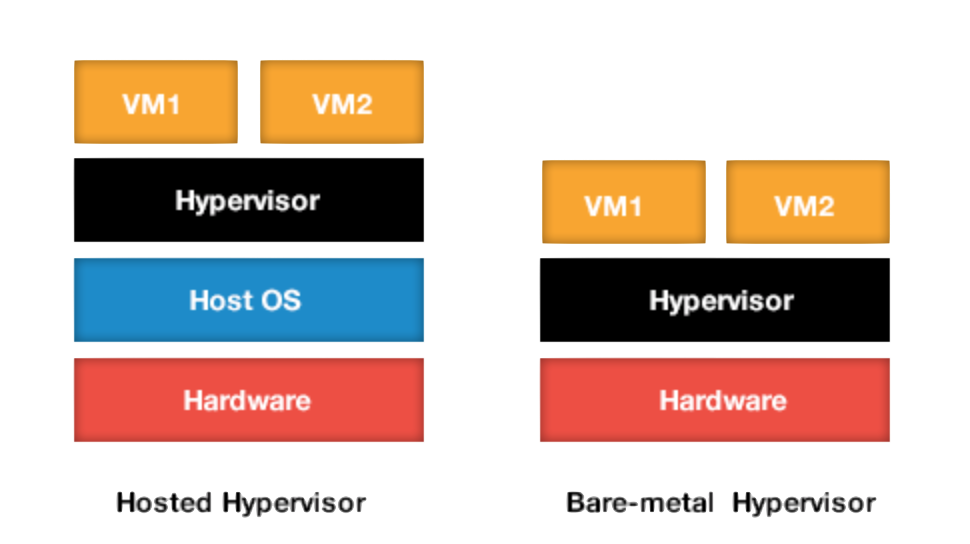

Virtualization is a technology that allows you to create multiple simulated environments or dedicated resources from a single, physical hardware system [@redhat]. It allows multiple operating systems to run on a single computer by giving each OS the illusion that it is running on real hardware. Virtualization brings several benefits, such as resource optimization, load balancing, high availability and isolation between environments. There are many ways to implement it; the two principal ones are Bare-Metal Virtualization and Hosted Virtualization. This classification depends on the type of hypervisor (or Virtual Machine Monitor, VMM), the component that manages the virtual machines. In bare-metal virtualization the hypervisor runs directly on the hardware, without the support of an underlying OS: it offers all the management functions (creating, configuring and destroying virtual machines) and provides a hardware abstraction that lets each VM behave as if it had its own instance of the physical hardware. In hosted virtualization an operating system (the host operating system) runs a special process, the hypervisor process, which provides all the VMM functionality.

This classification can be extended according to the technique used to virtualize. In Full Virtualization the hypervisor traps, translates and executes privileged instructions on the fly: the guest operating system does not need to be modified and behaves as if it were running on the host's hardware. A completely different approach is Paravirtualization. Here the guest is aware that it is virtualized: instead of relying on traps, the hypervisor exposes a set of hypercalls that the guest uses to request privileged, sensitive operations, so the guest kernel must be modified to issue them. Since this modification depends on the specific hypervisor vendor, one proposed solution is to modify only the lowest kernel layer, the one that interfaces with the hypervisor or hardware, so that it exposes a generic Virtual Machine Interface (VMI); in this way no further kernel modifications are required if the hypervisor changes or is removed.
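The difference between the two techniques is where the privileged operation is intercepted. The following Python sketch is a toy model only: the opcode names and the `Hypervisor` class are hypothetical, and real VMMs rely on CPU traps or binary translation rather than Python lists. The unmodified guest is intercepted by the VMM without knowing it, while the paravirtualized guest calls the VMM explicitly.

```python
"""Toy model: how privileged guest instructions reach the hypervisor.

Assumptions: opcode names and the Hypervisor class are hypothetical."""

PRIVILEGED = {"HLT", "OUT", "LOAD_CR3"}  # hypothetical privileged opcodes


class Hypervisor:
    def __init__(self):
        self.log = []  # every privileged operation the VMM handled

    def trap_and_emulate(self, guest, instr):
        # Full virtualization: the unmodified guest tried to execute a
        # privileged instruction; the VMM intercepts and emulates it.
        self.log.append(f"trap:{guest}:{instr}")

    def hypercall(self, guest, op):
        # Paravirtualization: the modified guest kernel asks the VMM explicitly.
        self.log.append(f"hypercall:{guest}:{op}")


def run_full_virt_guest(vmm, name, program):
    for instr in program:
        if instr in PRIVILEGED:
            vmm.trap_and_emulate(name, instr)  # guest is unaware of the trap
        # non-privileged instructions run directly on the CPU (not modeled)


def run_paravirt_guest(vmm, name, program):
    for instr in program:
        if instr in PRIVILEGED:
            vmm.hypercall(name, instr)  # the guest kernel was patched to do this
```

Both guests end up handled by the VMM; what changes is whether the guest kernel had to be modified to get there.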

Microservices

Microservices are an architectural pattern for building modular and scalable applications by decomposing their functionality into a set of loosely coupled, collaborating services [@richardsonMicroservices]. The growing complexity of applications made the monolithic approach unsatisfactory in terms of scalability and maintainability. To address scalability, various three-tier patterns were developed: a thin client is served by a business logic layer, which in turn provides an abstraction over the data layer, and server replication solves the scalability problem. However, this does not solve the maintainability problem of the business logic layer: as its functions grow more complex, the application code becomes unmanageable. These approaches also make collaboration hard, because a developer who has to edit one business logic feature needs to know the whole business logic layer. A new architectural approach was therefore developed, Service Oriented Architecture (SOA), in which the logic is divided into subsets of functionality and each subset is developed separately.

Microservices are a “thin” evolution of this approach applied to a single application: while SOA is used to offer services to many applications, a microservice architecture is used within a single application, which is developed by splitting each functionality into a separate module. Microservices are a thin service-oriented approach because they:

- Use a simple communication protocol (such as RESTful APIs);
- Use a simple data exchange format (JSON).
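A single microservice exposing one capability over REST with JSON payloads can be sketched with the Python standard library alone. This is an illustration under assumptions: the `/products/<id>` route, the in-memory `PRODUCTS` data and the helper names are hypothetical, and a production service would normally use a web framework.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical data owned exclusively by this one service.
PRODUCTS = {"1": {"id": "1", "name": "keyboard", "price": 25.0}}


class CatalogHandler(BaseHTTPRequestHandler):
    """One small service: GET /products/<id> returns a JSON document."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "products" and parts[1] in PRODUCTS:
            self._send_json(200, PRODUCTS[parts[1]])
        else:
            self._send_json(404, {"error": "not found"})

    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the example quiet
        pass


def start_service(port=0):
    """Serve on localhost in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), CatalogHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_product(base_url, product_id):
    """What a collaborating service would do: call the API, decode the JSON."""
    with urllib.request.urlopen(f"{base_url}/products/{product_id}") as resp:
        return json.loads(resp.read())
```

Another service only needs the URL and the JSON shape to collaborate with this one; it never shares code or a database with it, which is what keeps the services loosely coupled.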

The main advantages of microservice architectures are:

- Modularity, thanks to functional decomposition;
- Scalability;
- Support for agile development, for versioning and for build, test and deployment environments;
- Easier continuous delivery and team collaboration.

These advantages explain the current spread of microservice-based architectures.

IaC and CI

Infrastructure as code (IaC) is the process of managing and provisioning computer data centers through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools [@WittigIAC].

This recent approach is a productive way to manage growing cloud computing platforms and modern technologies, such as web frameworks and utility computing, that lead to scalability problems. In the past, a new server application required finding and configuring hardware and then deploying the application, an expensive and lengthy process. With IaC, a new application can be deployed simply by writing a file.
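The core idea of IaC, comparing a machine-readable definition with the current state and deriving the actions needed to converge, can be sketched in a few lines of Python. This is a minimal sketch under assumptions: the JSON definition format, the `Server` fields and the `plan`/`apply` names are hypothetical and do not reproduce any specific IaC tool.

```python
import json
from dataclasses import dataclass

# Hypothetical machine-readable definition file (desired state).
DEFINITION = """
{"servers": [
  {"name": "web", "image": "nginx:1.25", "cpus": 2},
  {"name": "db",  "image": "mysql:8.0",  "cpus": 4}
]}
"""


@dataclass(frozen=True)
class Server:
    name: str
    image: str
    cpus: int


def load_definition(text):
    """Parse the definition into {name: Server}."""
    return {s["name"]: Server(**s) for s in json.loads(text)["servers"]}


def plan(desired, actual):
    """List the actions that turn `actual` into `desired`."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", spec))
        elif actual[name] != spec:
            actions.append(("update", spec))
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("destroy", actual[name]))
    return actions


def apply(actions, actual):
    """Return the new state; a real tool would call a provider API here."""
    state = dict(actual)
    for verb, spec in actions:
        if verb == "destroy":
            del state[spec.name]
        else:
            state[spec.name] = spec
    return state
```

Because `plan` compares desired and actual state, running it again after `apply` yields no actions. This idempotence is what makes it safe to re-run the same definition file on every change.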

The main characteristics of IaC are [@fowlerIAC]:

- Self-documentation: readable, precise code documents systems and processes; if necessary, human-readable documentation can be generated automatically from the code;
- Continuous availability of services, through techniques such as Blue Green Deployment;
- Higher change frequency: making changes less difficult reduces errors, so large, infrequent batches of changes are replaced by small, frequent ones.
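Blue Green Deployment keeps two identical environments and switches traffic between them only after the new release is verified. The following Python sketch models just the switching logic; the `BlueGreenRouter` class and the `health_check` callback are hypothetical, and a real setup would switch a load balancer or DNS record instead of a Python attribute.

```python
class BlueGreenRouter:
    """Toy router in front of two identical environments, blue and green."""

    def __init__(self, blue_version):
        self.envs = {"blue": blue_version, "green": None}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version, health_check):
        """Install on the idle side; switch traffic only if it is healthy."""
        target = self.idle
        previous = self.envs[target]
        self.envs[target] = version
        if not health_check(version):
            self.envs[target] = previous  # users never saw the bad release
            return False
        self.live = target  # the switch itself is a single, atomic step
        return True

    def rollback(self):
        """The previous release is still deployed on the idle side."""
        if self.envs[self.idle] is None:
            raise RuntimeError("nothing to roll back to")
        self.live = self.idle

    def serve(self):
        return self.envs[self.live]
```

The service stays available throughout: the live side is never modified during a deployment, and rolling back is just switching back.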

IaC brings many advantages. It makes server replication easy, because the configuration is shared, and it reduces the risk of conflicts between different server configurations. It also makes changes easier, by putting the configuration under version control, and fully supports scalable architectures. It is a natural fit for microservices.

Continuous Integration is a development practice in which different teams work concurrently and integrate their work several times a day, with each integration verified by an automated build [@fowlerCI]. CI integrates the components of an application more frequently than traditional approaches, using tools that support compiling, testing and building. In CI there is a main repository shared by all the teams, and the typical steps a contributor performs are:

- Check out a copy of the current source code locally;
- Make changes to the production code and to the self-testing code, in order to check for errors;
- Run an automated build locally: compile, link and run the tests;
- Pull the updates that others committed to the mainline in the meantime;
- Resolve any conflicts with the current version of the source code;
- Commit the changes, which triggers an integration build and tests on the mainline code.
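The local part of these steps is essentially a pipeline that stops at the first failure, so a broken change never reaches the mainline. A minimal Python sketch, assuming the project uses `git` and hypothetical `make build` / `make test` targets; `python_step` is a hypothetical helper that builds portable stand-in steps for trying the runner without a real project.

```python
import subprocess
import sys

# Hypothetical contributor steps; `make build` and `make test` are assumed targets.
CONTRIBUTOR_STEPS = [
    ("update", ["git", "pull", "--rebase"]),  # bring in others' commits
    ("build", ["make", "build"]),             # compile and link
    ("test", ["make", "test"]),               # self-testing code
    ("push", ["git", "push"]),                # triggers the integration build
]


def python_step(name, code):
    """Stand-in step that runs a Python snippet (portable for demos)."""
    return name, [sys.executable, "-c", code]


def run_pipeline(steps):
    """Run (name, argv) steps in order and stop at the first failing one.

    Returns (ok, log) where log lists (name, exit_code) for executed steps."""
    log = []
    for name, argv in steps:
        result = subprocess.run(argv, capture_output=True, text=True)
        log.append((name, result.returncode))
        if result.returncode != 0:
            return False, log
    return True, log
```

A CI server runs the same kind of pipeline again on the mainline after each push. That second run is what verifies the integration rather than the individual change.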

This approach relies on a source code management system with version control. Everyone has to synchronize with the source repository and know these tools in order to contribute. Besides source code, other types of files can be stored, such as IDE configuration files, build files and test code. A testing methodology often used with CI is Continuous Integration Testing (CIT), a modern methodology that brings the development and testing phases closer together, supporting QA and development in order to meet modern business needs. With increasing business competition and budget constraints, new ways to improve business performance are needed, such as agile development processes, continuous integration and extreme programming. CIT enables unit and functional testing during the development phase by combining testing and development tools and by running performance, functional and non-functional tests daily. Its main advantages are fewer defects, because they are detected early in the development process, and lower total testing time and cost. With CIT, application testing starts at the beginning of the development phase. Source and test code live in a single core repository shared by developers and testers, so the distinction between these two roles is no longer clear-cut.

Why Docker?

From the Docker documentation [@whatIsDocker]:

Docker is the world's leading software container platform. Developers use Docker to eliminate “works on my machine” problems when collaborating on code with co-workers. Operators use Docker to run and manage apps side-by-side in isolated containers to get better compute density. Enterprises use Docker to build agile software delivery pipelines to ship new features faster, more securely and with confidence for both Linux and Windows Server apps.

Docker therefore addresses three problems:

- Collaboration: with elements such as registries, Docker Hub and containers, Docker images (the “templates” of the services) can be shared, and containers make it possible to build shared, collaborative systems.
- Isolation: Docker provides many options that let users fully configure how containers use the resources of the host machine on which the Docker Engine runs.
- Continuous integration of secure applications and agile software development: any type of service can run inside lightweight “boxes” called containers, which can be continuously updated by changing their image.

Docker helps developers automate the repetitive task of setting up and configuring development environments. It also helps enterprise environments by supporting both traditional and microservice architectures and by enabling agile development of cloud-ready, secure apps at optimal cost. Docker provides a unified framework for all apps and accelerates delivery by automating deployment pipelines. It also interoperates with development platforms such as GitHub, and supports cloud computing and clustering through Docker Cloud. Other advanced features enable load balancing and replication of containers over overlay networks (see Docker Swarm and Docker Machine).

Docker Overview

Docker has a client-server architecture [@dockerOverview]. The Docker client talks to the Docker daemon, which builds, runs and distributes Docker containers. Client and daemon communicate through a REST API, over UNIX sockets or a network interface.
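Because the daemon speaks plain HTTP, any HTTP client can act as a Docker client. On Linux the daemon listens by default on the UNIX socket `/var/run/docker.sock`. The Python sketch below sends a `GET` request over that socket using only the standard library. `UnixHTTPConnection` and `engine_get` are illustrative names, not part of any Docker SDK, and access to the socket usually requires root or membership in the `docker` group.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10.0):
        super().__init__("localhost", timeout=timeout)  # used only for Host:
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path="/var/run/docker.sock"):
    """GET a Docker Engine API endpoint and decode its JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"{path}: HTTP {resp.status}: {body[:200]!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With a running daemon, `engine_get("/version")` returns the daemon's version information, and `engine_get("/containers/json")` lists running containers, the same data `docker ps` shows. The `docker` CLI is itself a client of this API.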

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use; images can be downloaded with the docker pull command. An image is a read-only template with instructions for creating a Docker container. Images follow an inheritance pattern: an image can be based on another image, with additional customization. To create your own image you write a Dockerfile, a file with a simple syntax that defines the steps needed to build the image. Each instruction in the Dockerfile creates a layer in the image, so an image can also be seen as a stack of layers. Once the image is built, it can be pushed to a registry so that other users can use it.

A container is a runnable, isolated instance of an image. Docker is written in Go.
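The relationship between Dockerfile instructions, layers, image inheritance and containers can be modeled in a few lines. This Python sketch is a simplification under stated assumptions. Here a layer id is a hash of the parent layer id plus the instruction text, whereas real Docker digests are computed over layer contents. Every instruction gets a layer for simplicity. `build`, `Image` and `Container` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    name: str
    layers: tuple  # layer ids, base layer first; read-only once built


@dataclass
class Container:
    image: Image                                  # shared, read-only layers
    writable: dict = field(default_factory=dict)  # per-container top layer


def layer_id(parent_id, instruction):
    # Simplification: id depends only on the parent layer and the instruction.
    return hashlib.sha256(f"{parent_id}|{instruction}".encode()).hexdigest()[:12]


def build(name, instructions, base=None):
    """Each instruction adds one layer on top of the base image's layers."""
    layers = list(base.layers) if base else []
    for instruction in instructions:
        parent = layers[-1] if layers else ""
        layers.append(layer_id(parent, instruction))
    return Image(name, tuple(layers))
```

A child image reuses its parent's layers unchanged, and rebuilding identical instructions yields identical ids, which is the intuition behind layer sharing and the build cache. Two containers started from the same image share all image layers and differ only in their own writable layer.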

Docker and Virtualization

Docker builds on a Linux kernel feature developed by Google called **cgroups** (control groups), which provides resource limiting, prioritization, accounting and control for groups of processes, i.e. collections of processes that are bound by the same criteria and associated with a set of parameters or limits. Each subsystem (memory, CPU, ...) has an independent hierarchy that starts from a root node; each node is a group of processes sharing the same resources. Docker also uses namespaces to provide the isolated workspace called a container. Namespaces limit what a process can see, and each process belongs to exactly one namespace of each type. When you run a container, Docker creates a set of namespaces for it, providing a layer of isolation for each aspect of the container: pid for process isolation, net for network interfaces, ipc for access to IPC resources, and so on.
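On Linux, both mechanisms are visible through `/proc`. Each entry in `/proc/<pid>/ns/` is a link whose target identifies a namespace, for example `net:[4026531992]`. Each line of `/proc/<pid>/cgroup` has the form `hierarchy-ID:controller-list:cgroup-path`, as documented in cgroups(7). The Python sketch below reads both. It is Linux-only, and the helper names are hypothetical.

```python
import os


def parse_ns_link(target):
    """Parse a /proc/<pid>/ns/* link target such as 'net:[4026531992]'."""
    kind, _, inode = target.partition(":")
    return kind, int(inode.strip("[]"))


def namespaces(pid="self"):
    """Map namespace entry name -> namespace inode for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            result[entry] = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))[1]
        except OSError:
            continue  # e.g. a link we are not allowed to read
    return result


def parse_cgroup_line(line):
    """Parse 'hierarchy-ID:controller-list:cgroup-path' (see cgroups(7))."""
    hierarchy, controllers, path = line.rstrip("\n").split(":", 2)
    return int(hierarchy), [c for c in controllers.split(",") if c], path


def cgroups(pid="self"):
    """List the cgroups a process belongs to (one line per hierarchy)."""
    with open(f"/proc/{pid}/cgroup") as f:
        return [parse_cgroup_line(line) for line in f if line.strip()]
```

Running `namespaces()` on the host and again inside a container typically shows different inode numbers for entries such as `pid` and `net`: the container's processes live in their own namespaces. With cgroup v2 there is a single line starting with `0::`, and the controller list is empty.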

Docker Engine uses UnionFS and its variants to provide the building blocks for containers. Union file systems operate by creating layers, which makes them very lightweight and fast. Docker Engine combines namespaces, cgroups and UnionFS into a wrapper called a container format. The default container format is libcontainer, a native Go implementation for creating containers with namespaces, cgroups, capabilities and filesystem access controls. These kernel features should not be confused with KVM, which is the Linux kernel's hypervisor for running full virtual machines. IBM experiments published in 2014 showed that Docker performance is comparable to native performance and equal to or better than KVM.
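The union idea can be illustrated with a toy model. Reads search from the top layer down, writes go only to a writable upper layer (copy-on-write), and deletions of lower-layer files are recorded as "whiteout" markers instead of touching the read-only layers. This Python sketch is hypothetical and models files as dictionary entries. It is not how overlayfs or AUFS are implemented, only the lookup semantics they provide.

```python
class UnionView:
    """Toy union mount: read-only lower layers plus one writable upper layer."""

    _WHITEOUT = object()  # marker hiding a file that exists in a lower layer

    def __init__(self, *lower_layers):
        # lower_layers: dicts of path -> content, top-most layer first
        self.upper = {}
        self.lower = list(lower_layers)

    def read(self, path):
        for layer in [self.upper, *self.lower]:
            if path in layer:
                if layer[path] is self._WHITEOUT:
                    raise FileNotFoundError(path)
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, content):
        self.upper[path] = content  # copy-on-write: lower layers never change

    def delete(self, path):
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.upper[path] = self._WHITEOUT
```

Since the image layers are never modified, many containers can share them and each container only pays for its own changes. This is what makes container creation lightweight and fast.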

Docker and CI

A typical use case for Docker is Continuous Integration [@dockerCI]. Docker integrates with CI tools such as Jenkins and GitHub: developers submit code to GitHub, which automatically triggers a build in Jenkins. When the process completes, the updated image can be pushed to the registry and downloaded by users, so Docker also enables Continuous Delivery. This saves time on build and setup, lets developers run tests in parallel and automate them, and removes inconsistencies between environments, because all the code runs on shared container images.
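The build, test and publish flow that a CI job (for example a Jenkins job) runs for each commit can be expressed as three Docker CLI invocations. The Python sketch below generates and optionally runs them. The image name, tag, registry host and test command are hypothetical. `dry_run=True` only prints the commands, so the sketch can be tried without a Docker daemon.

```python
import subprocess


def ci_commands(image, tag, test_cmd, registry=None):
    """Build the image, run the test suite inside it, then publish it."""
    ref = f"{registry}/{image}:{tag}" if registry else f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, "."],      # same image everywhere
        ["docker", "run", "--rm", ref, *test_cmd],  # tests run in the image
        ["docker", "push", ref],                  # delivery via the registry
    ]


def run_ci(commands, dry_run=True):
    """Execute the commands in order; check=True stops on the first failure."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)
```

For example, `run_ci(ci_commands("dsp/webapp", "1.0", ["pytest", "-q"], registry="registry.example.com"))` prints the three commands. Because the pushed image is exactly the one that passed the tests, users who pull it get the tested artifact.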

Why Docker-Compose?

Docker Compose lets you define an infrastructure by writing a single file in YAML syntax (docker-compose.yml) [@dockerCompose], so an IaC process can be set up using the docker-compose syntax. Here is an example in which we run 5 containers, as shown in the image.

Docker Compose accepts the same options that can be used with the docker CLI when a container is started, and uses YAML syntax to describe network infrastructures. The docker-compose.yml file describing the infrastructure is placed in a root directory. When the user runs the docker-compose up command, a shared network is created and all the containers defined in docker-compose.yml are started.
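To make the structure of a docker-compose.yml concrete, the Python sketch below emits one for a hypothetical five-service lab. It produces top-level `services` and `networks` keys, and each service has an `image` plus optional `ports`, `environment` and `networks` lists. The service names, images, password and networks are illustrative assumptions, not the lab from the original example. In practice the YAML is usually written by hand or produced with a YAML library; the tiny emitter only supports the fields used here.

```python
def compose_yaml(services, networks):
    """Emit a minimal docker-compose.yml for the fields used in this sketch."""
    lines = ["services:"]
    for name, spec in services.items():
        lines.append(f"  {name}:")
        lines.append(f"    image: {spec['image']}")
        for key in ("ports", "environment", "networks"):
            if spec.get(key):
                lines.append(f"    {key}:")
                lines += [f'      - "{item}"' for item in spec[key]]
    if networks:
        lines.append("networks:")
        lines += [f"  {net}: {{}}" for net in networks]  # default driver
    return "\n".join(lines) + "\n"


# Hypothetical lab: one public web server, four services on internal networks.
LAB = {
    "web": {"image": "nginx:alpine", "ports": ["8080:80"], "networks": ["frontend"]},
    "app": {"image": "httpd:2.4", "networks": ["frontend", "backend"]},
    "db": {"image": "mysql:8.0", "environment": ["MYSQL_ROOT_PASSWORD=example"],
           "networks": ["backend"]},
    "cache": {"image": "redis:7", "networks": ["backend"]},
    "adminer": {"image": "adminer", "networks": ["backend"]},
}
```

Writing `compose_yaml(LAB, ["frontend", "backend"])` to docker-compose.yml and running `docker-compose up` in that directory would start all five containers and attach each one only to the networks listed for it.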

Common use cases for Docker Compose are development environments, automated testing environments (test environments for a test suite can easily be created and destroyed) and single-host deployments (the Docker documentation describes best practices for using Compose in production). Docker Security Playground uses Docker Compose to create vulnerable network infrastructures.
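For the testing use case, a test suite can bring a Compose stack up before the tests and always tear it down afterwards, even when a test fails. A minimal Python sketch, assuming Compose v2 (`docker compose`, where `up --wait` waits for services to be running or healthy). With the older `docker-compose` binary the command prefix differs. The `run` parameter is a hypothetical injection point that lets the sketch be exercised without Docker.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def compose_environment(project_dir, run=subprocess.run):
    """Start the Compose stack in project_dir; always remove it afterwards."""
    base = ["docker", "compose", "--project-directory", project_dir]
    try:
        run([*base, "up", "-d", "--wait"], check=True)
        yield
    finally:
        # --volumes also drops named volumes, so every test run starts clean
        run([*base, "down", "--volumes"], check=True)
```

Usage: `with compose_environment("labs/sqli"): run_tests()`. The `finally` clause guarantees the environment is destroyed, so repeated runs never inherit state from a previous one.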

Docker-Compose and IaC

Docker Compose lets you create and configure infrastructures through code; as seen above, this approach is called IaC. Docker Compose implements IaC, but its simple syntax does not provide advanced IaC features such as post-start actions or status checks. For example, once a MySQL server is running you might want to verify that it works properly, then create a new user and a database through code. The YAML syntax only describes how to create the infrastructure; it cannot dynamically run actions inside containers. You could build custom images for this, but that is inefficient for parametric actions such as adding a user or creating a database. Alternatively, a bash script can use the docker-compose exec command to execute commands inside containers. Docker Security Playground introduces a Docker image standard, the Docker Image Wrapper, to automate the process of sending commands to containers.
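The exec-based approach can be sketched as follows, written in Python rather than bash for consistency with the other examples. The script waits until MySQL answers `mysqladmin ping`, then creates a database and a user through `mysql -e`. It assumes Compose v2 (`docker compose exec`, where `-T` disables pseudo-TTY allocation so it works from scripts). The service name, credentials and function names are hypothetical. This is not the Docker Image Wrapper convention.

```python
import subprocess
import time


def exec_argv(service, *command):
    """argv for `docker compose exec`; -T disables TTY allocation for scripts."""
    return ["docker", "compose", "exec", "-T", service, *command]


def mysql_bootstrap_commands(service, root_pw, database, user, user_pw):
    # Values are interpolated into SQL unescaped: acceptable for a trusted
    # lab definition, NOT for untrusted input.
    sql = (
        f"CREATE DATABASE IF NOT EXISTS `{database}`; "
        f"CREATE USER IF NOT EXISTS '{user}'@'%' IDENTIFIED BY '{user_pw}'; "
        f"GRANT ALL PRIVILEGES ON `{database}`.* TO '{user}'@'%';"
    )
    ping = exec_argv(service, "mysqladmin", "ping", "-uroot", f"-p{root_pw}", "--silent")
    create = exec_argv(service, "mysql", "-uroot", f"-p{root_pw}", "-e", sql)
    return ping, create


def bootstrap(service, root_pw, database, user, user_pw,
              attempts=30, delay=2.0, run=subprocess.run, sleep=time.sleep):
    """Wait until MySQL answers, then create the database and the user."""
    ping, create = mysql_bootstrap_commands(service, root_pw, database, user, user_pw)
    for _ in range(attempts):
        if run(ping, check=False).returncode == 0:
            break
        sleep(delay)
    else:
        raise TimeoutError(f"{service}: MySQL not ready after {attempts} attempts")
    run(create, check=True)
```

Run after `docker compose up -d`, for example as `bootstrap("db", "example", "shop", "alice", "secret")`. The readiness loop matters because a container being "up" does not mean the server inside it is accepting connections yet.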

Docker + Docker-Compose = a CI for an IaC

By combining Docker Compose and the Docker CLI, it is possible to implement a CI process for IaC. With docker-compose you can create, configure and deploy a service-based network, or a virtual network environment with its own networks, routers, client and server machines. Each service or machine is a container created from an image. With docker commands you can easily update the core images of all the containers in the virtual network and publish them on a public or private registry, which makes the build, test and run process easy to automate. In this way it is possible to create advanced network infrastructures that are updated simply by updating their core images and that can be shared by multiple stakeholders, in order to provide efficient, scalable and robust services. Docker Security Playground uses all these aspects to create virtual vulnerable network infrastructures for penetration testing that users can easily share, update and use. The Docker Hub registry is used to share the vulnerable images used in the Docker labs, and each docker-compose.yml file is a vulnerable network lab that lets users learn about one aspect of network security. The application uses a convention called Docker Image Wrapper to automate post-start operations in containers (creating users, creating databases, adding new pages to web servers).

What Docker cannot offer to Docker Security Playground?

Despite all the advantages Docker offers as a flexible virtualization environment, some network simulation features that we need are missing. For vulnerable services Docker is the best virtualization environment, because it can fully reproduce a vulnerable service, such as a web server vulnerable to SQL injection or an old OpenSSL version affected by Heartbleed. Docker is also a great platform for reproducing user-process vulnerabilities such as buffer overflows. The main problems come from its dependency on Linux: Docker cannot reproduce Windows environments, because its whole architecture is based on the Linux kernel (this is not completely true, since Docker has native support on recent Windows versions such as Windows 10 Pro and Windows Server 2016, but simulating vulnerable Windows services has not been studied in this work). Low-level kernel vulnerabilities cannot be implemented either: containers share the host kernel, so you cannot change the kernel of a single container to an older version in order to analyze known kernel vulnerabilities. A possible solution is to implement mixed labs, in which Docker containers communicate with vulnerable host-based virtual machines (created with VMware or VirtualBox). In this way all types of vulnerabilities can be implemented, including Windows and low-level kernel vulnerabilities (depending on the depth of virtualization the HVM offers).

What Now?