Agentic systems, whether designed for tool use or for reasoning, are fundamentally built on prompts. Yet prompts themselves follow a linear, sequential pattern and cannot self-optimize. Real agentic training comes from the way an agent learns, adapts, and collaborates in dynamic environments.

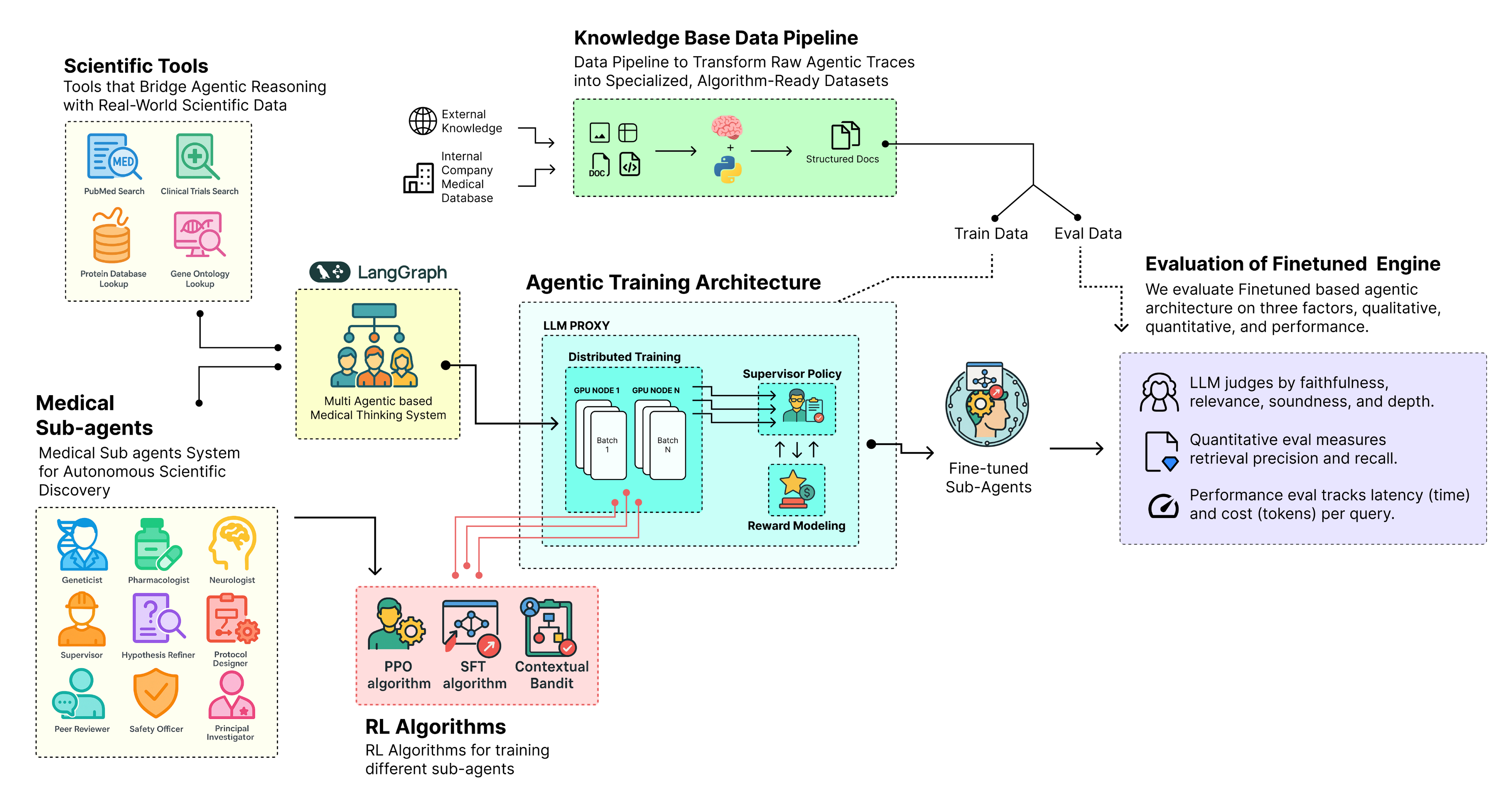

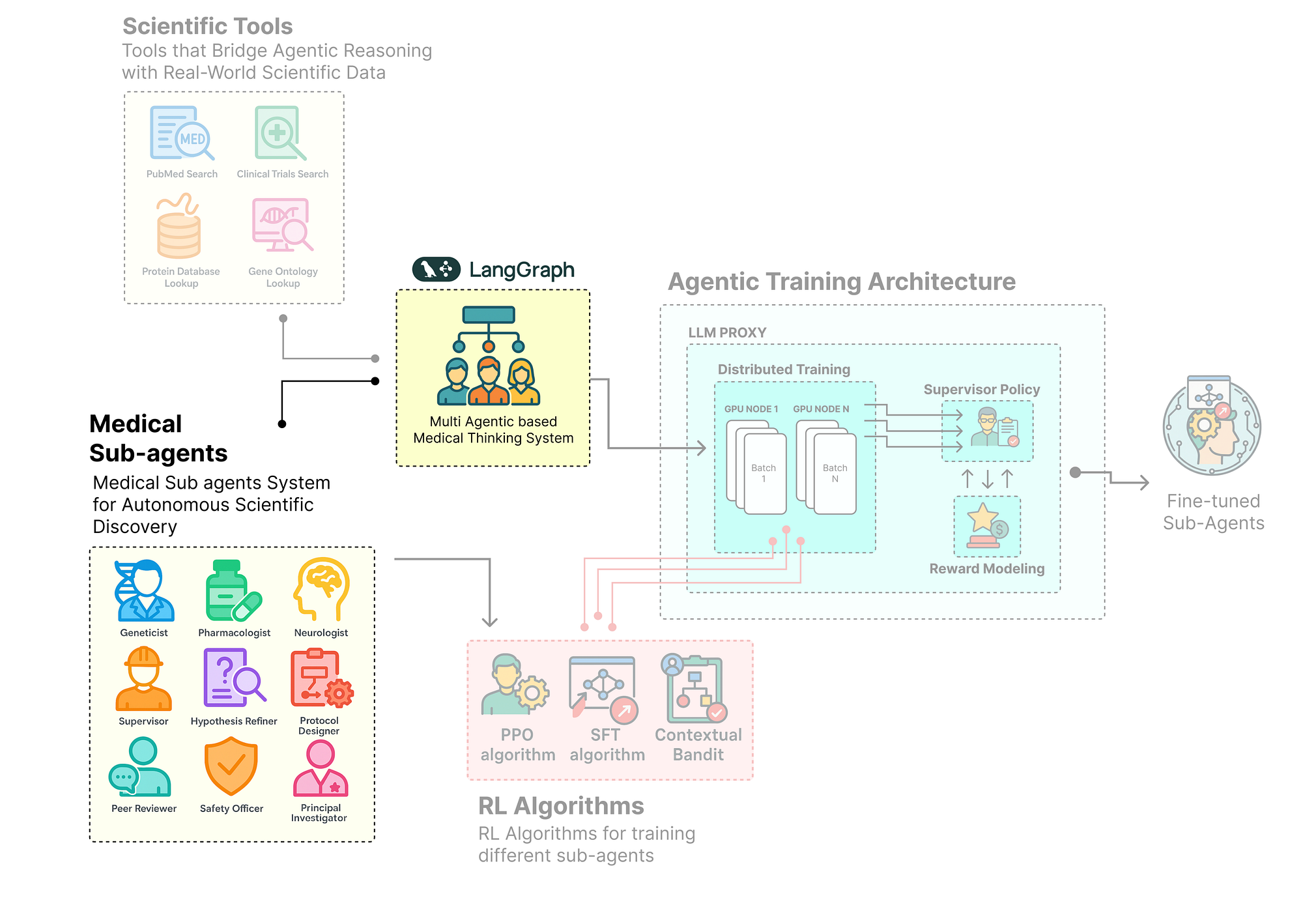

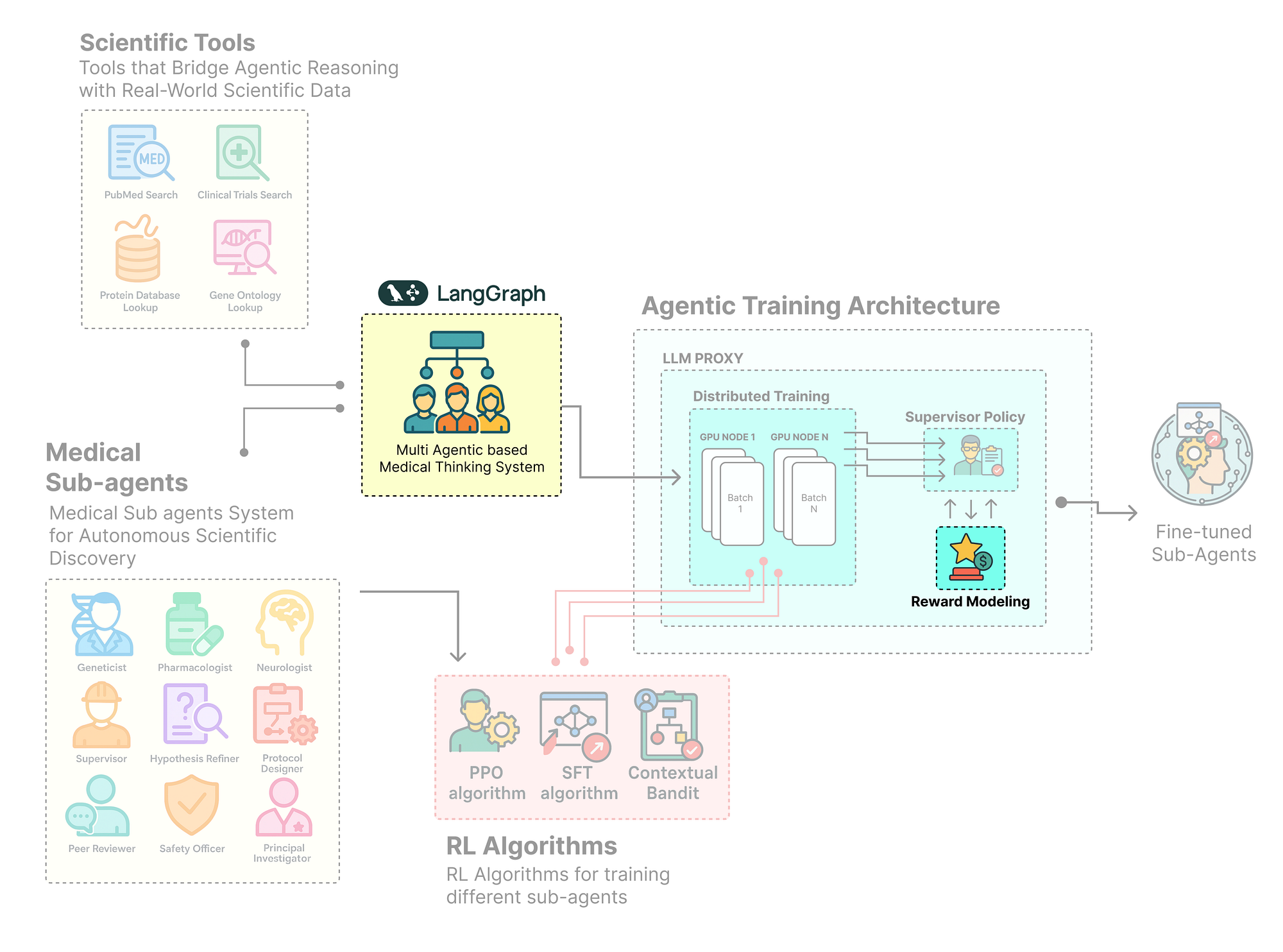

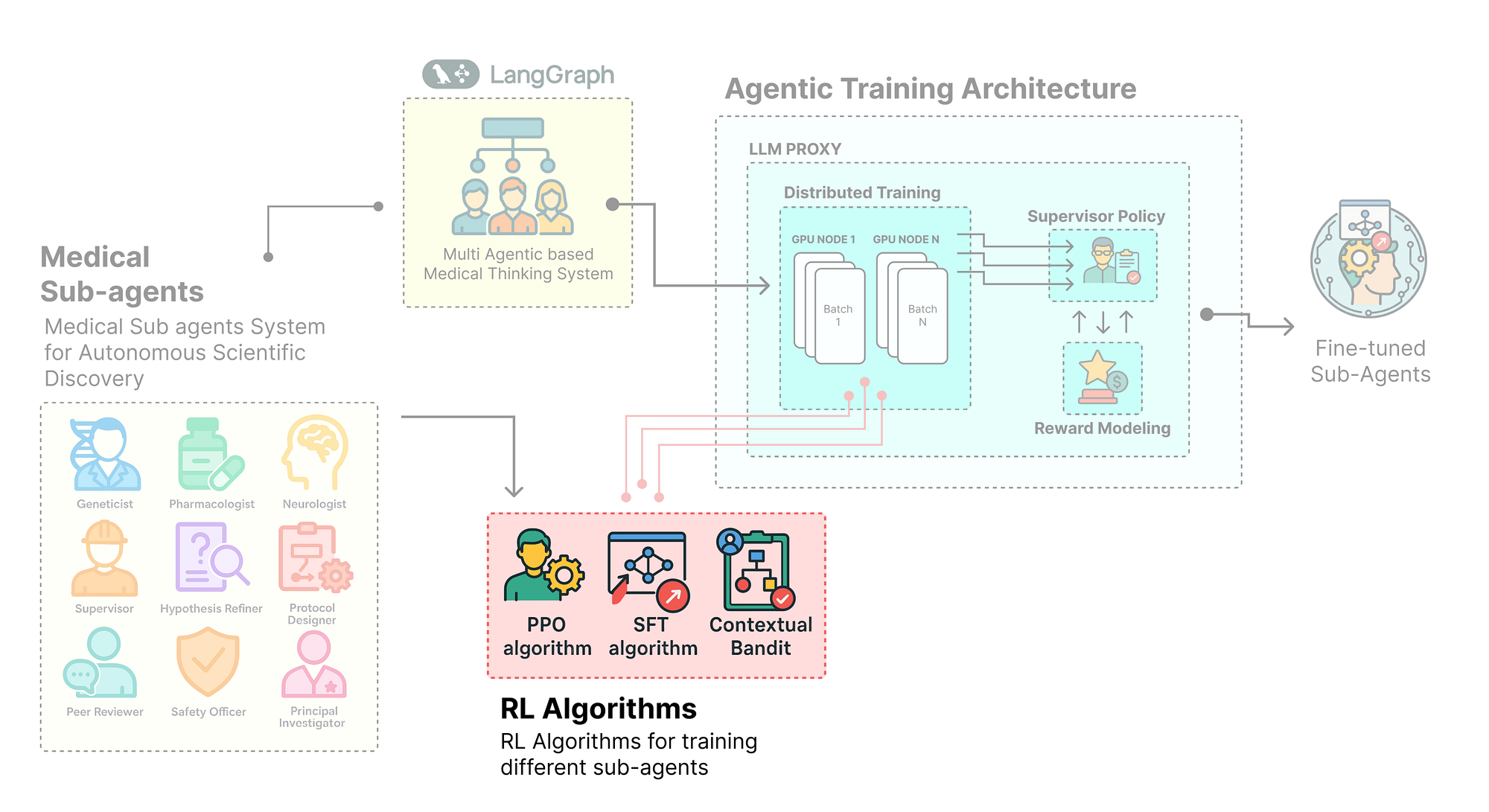

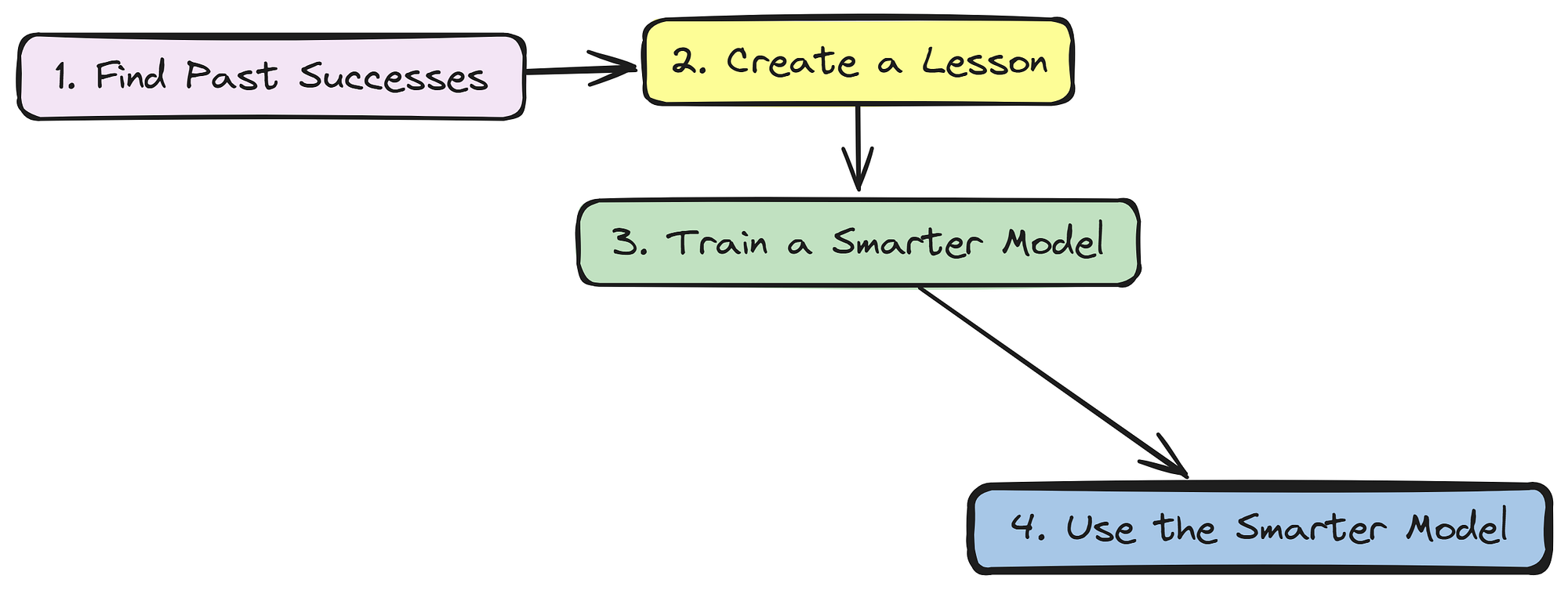

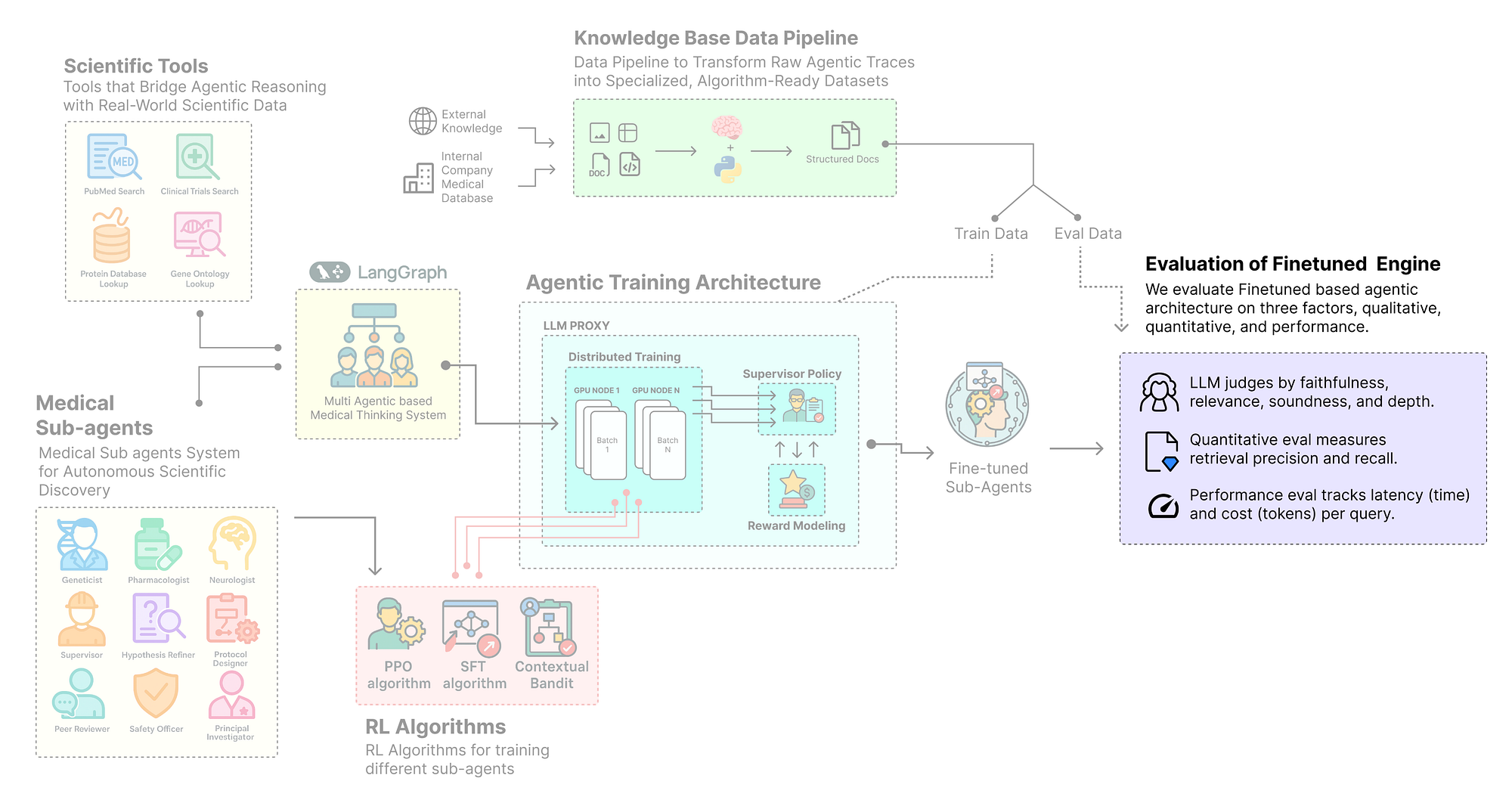

In an agentic architecture each sub-agent has a different purpose, and that means a single algorithm won’t work for all. To make them more effective, we need a complete training architecture that connects reasoning, reward, and real-time feedback. A typical training architecture for an agentic system involves several interconnected components …

- First, we define the training foundation by setting up the environment, initializing agent states, and aligning their objectives with the system goals.

- Next, we build the distributed training pipeline where multiple agents can interact, learn in parallel, and exchange knowledge through shared memory or logs.

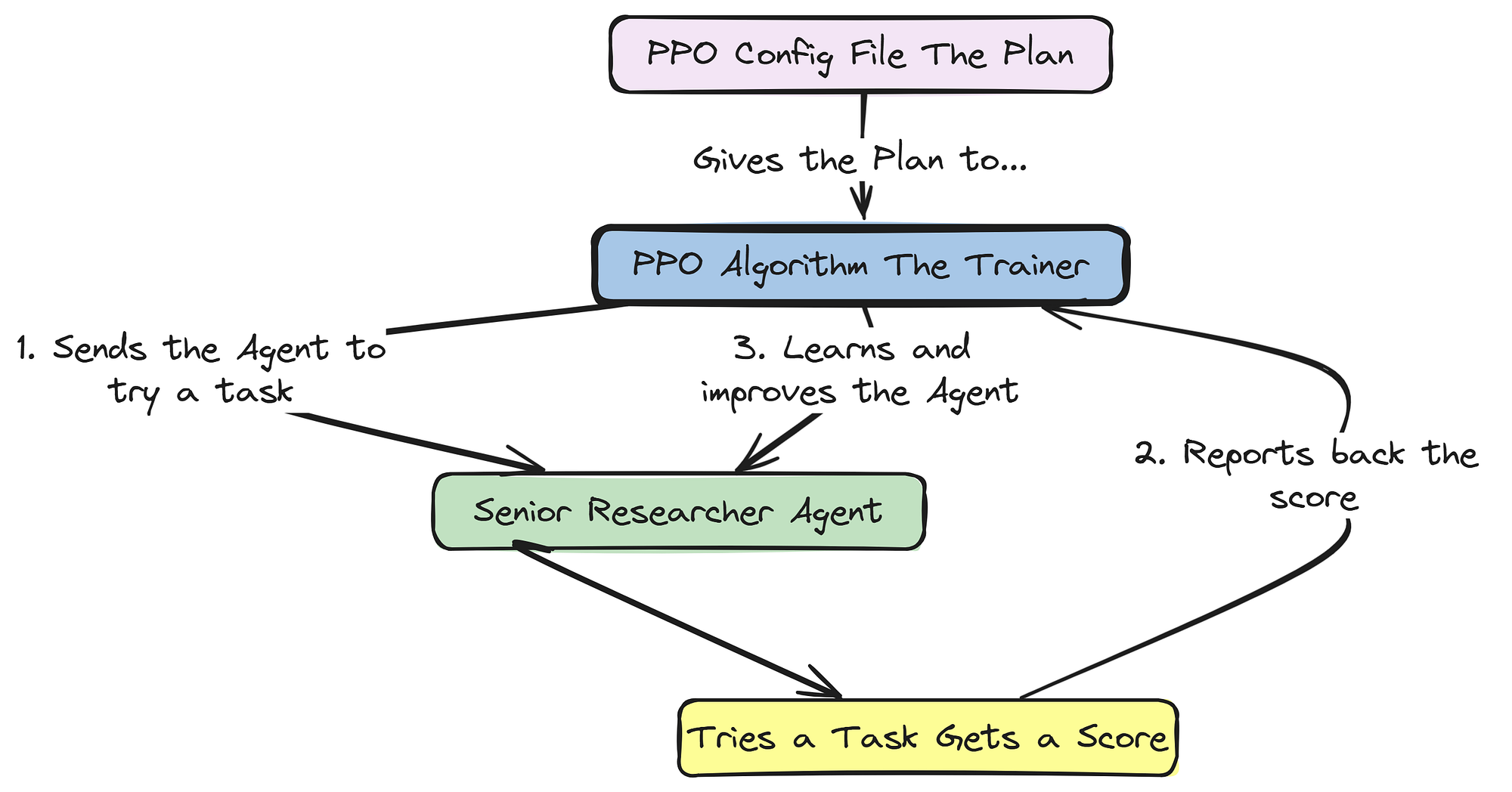

- We add the reinforcement learning layer that powers self-improvement using algorithms like SFT for beginners, PPO for advanced optimization, and contextual bandits for adaptive decision making.

- We connect observability and monitoring tools such as tracing hooks and logging adapters to capture every interaction and learning step in real time.

- We design a dynamic reward system that allows agents to receive feedback based on their performance, alignment, and contribution to the overall task.

- We create a multi phase training loop where agents progress through different stages, from supervised fine tuning to full reinforcement based adaptation.

- Finally, we evaluate and refine the architecture by analyzing reward curves, performance metrics, and qualitative behavior across all agent roles.

In this blog we are going to …

build a complete multi-agentic system combining reasoning, collaboration, and reinforcement learning (RL) to enable agents to adapt and improve through real-time feedback and rewards.

- Creating the Foundation for an Research Lab

- Designing Our Society of Scientists (LangGraph)

- Creating the RL Based Training Architecture

- Implementing Three RL Algorithms

- Building the 3 Phases based Training Loop

- Performance Evaluation and Analysis

- How Our RL Training Logic Works

When we start building a production-grade AI system we don’t instantly start with the algorithm, but with a proper foundation of the entire system. This initial setup is important every choice we make here from the libraries we install to the data we source, will determine the reliability and reproducibility of our final trained agent.

So, in this section this is what we are going to do:

- We are going to install all core libraries and specialized dependencies required for our hierarchical training setup.

- Then we we are going to perform configurtion of our API keys, avoiding hardcoded values, and connect our LangSmith project for observability.

- After configurtion, we are going to download and process the PubMedQA dataset to build a high-quality corpus for our agents.

- We are also going to design the central AgentState, the shared memory that enables collaboration and reasoning.

- Then going to equip our agents with essential tools such as mock databases, live web search and more for external interaction.

First, we need to set up our Python environment. Instead of a simple pip install, we are going to use uv, because it’s is not a fast and modern package manager, to ensure our environment is both quick to set up and highly reproducible suitable for production environment.

We are also installing specific extras for agent-lightning verl for our PPO algorithm and apo (Asynchronous Policy Optimization) and unsloth for efficient SFT which are important for our advanced hierarchical training strategy.

print("Updating and installing system packages...")

# We first update the system's package list and install 'uv' and 'graphviz'.

# 'graphviz' is a system dependency required by LangGraph to visualize our agentic workflows.

!apt-get update -qq && apt-get install -y -qq uv graphviz

print("\nInstalling packages...\n")

# Here, we use 'uv' to install our Python dependencies.

# We install the '[verl,apo]' extras for Agent-Lightning to get the necessary components for PPO and other advanced RL algorithms.

# '[unsloth[pt231]]' provides a highly optimized framework for Supervised Fine-Tuning, which we'll use for our Junior Researchers.

!uv pip install -q -U "langchain" "langgraph" "langchain_openai" "tavily-python" "agentlightning[verl,apo]" "unsloth[pt231]" "pandas" "scikit-learn" "rich" "wandb" "datasets" "pyarrow"

print("Successfully installed all required packages.")Let’ start the installation process …

#### OUTPUT ####

Updating and installing system packages...

...

Installing packages...

Resolved 178 packages in 3.12s

...

+ agentlightning==0.2.2

+ langchain==0.2.5

+ langgraph==0.1.5

+ unsloth==2024.5

+ verl==0.6.0

...

Successfully installed all required packages.By installing graphviz, we have enabled the visualization capabilities of LangGraph, which will be invaluable for debugging our complex agent society later.

More importantly, the installation of agentlightning with the verl and unsloth extras gives us the specific, high-performance training backends we need for our hierarchical strategy.

We now have a stable and complete foundation to build upon. We can now start pre-processing the training data.

Every machine learning system requires training data or at least some initial observations to begin self-learning.

Our agents cannot reason in isolation they need access to rich, domain-specific information.

A static, hardcoded list of facts would be overly simplistic. To build a realistic and challenging research environment, we will draw our knowledge base from the PubMedQA dataset, specifically utilizing its labeled subset, pqa_l.

It contains real biomedical questions, the original scientific abstracts that provide the necessary context, and a final ‘yes/no/maybe’ answer determined by human experts. This structure provides not only a rich source of information for our agents to search but also a ground truth that we can use to calculate a reward for our reinforcement learning loop.

First, let’s define a simple TypedDict to structure each task. This ensures our data is clean and consistent throughout the pipeline.

from typing import List, TypedDict

# A TypedDict provides a clean, structured way to represent each research task.

# This makes our code more readable and less prone to errors from using plain dictionaries.

class ResearchTask(TypedDict):

id: str # The unique PubMed ID for the article

goal: str # The research question our agent must investigate

context: str # The full scientific abstract providing the necessary evidence

expected_decision: str # The ground truth answer ('yes', 'no', or 'maybe')We are basically creating a ResearchTask blueprint using TypedDict. This isn't just a plain dictionary, it's a contract that enforces a specific structure for our data. Every task will now consistently have an id, goal, context, and expected_decision. This strict typing is a best practice that prevents bugs down the line, ensuring that every component of our system knows exactly what kind of data to expect.

With our data structure defined, we can now write a function to download the dataset from the Hugging Face Hub, process it into our ResearchTask format, and split it into training and validation sets. A separate validation set is crucial for objectively evaluating our agent's performance after training.

from datasets import load_dataset

import pandas as pd

def load_and_prepare_dataset() -> tuple[List[ResearchTask], List[ResearchTask]]:

"""

Downloads, processes, and splits the PubMedQA dataset into training and validation sets.

"""

print("Downloading and preparing PubMedQA dataset...")

# Load the 'pqa_l' (labeled) subset of the PubMedQA dataset.

dataset = load_dataset("pubmed_qa", "pqa_l", trust_remote_code=True)

# Convert the training split to a pandas DataFrame for easier manipulation.

df = dataset['train'].to_pandas()

# This list will hold our structured ResearchTask objects.

research_tasks = []

# Iterate through each row of the DataFrame to create our tasks.

for _, row in df.iterrows():

# The 'CONTEXTS' field is a list of strings; we join them into a single block of text.

context_str = " ".join(row['CONTEXTS'])

# Create a ResearchTask dictionary with the cleaned and structured data.

task = ResearchTask(

id=str(row['PUBMED_ID']),

goal=row['QUESTION'],

context=context_str,

expected_decision=row['final_decision']

)

research_tasks.append(task)

# We perform a simple 80/20 split for our training and validation sets.

train_size = int(0.8 * len(research_tasks))

train_set = research_tasks[:train_size]

val_set = research_tasks[train_size:]

print(f"Dataset downloaded and processed. Total samples: {len(research_tasks)}")

print(f"Train dataset size: {len(train_set)} | Validation dataset size: {len(val_set)}")

return train_set, val_set

# Let's execute the function.

train_dataset, val_dataset = load_and_prepare_dataset()The load_and_prepare_dataset function we just coded is our data ingestion pipeline. It automates the entire process of sourcing our knowledge base: it connects to the Hugging Face Hub, downloads the raw data, and most importantly transforms it from a generic DataFrame into a clean list of our custom ResearchTask objects.

The 80/20 split is a standard machine learning practice, it gives us a large set of data to train on (

train_set) and a separate, unseen set (val_set) to later test how well our agent has generalized its knowledge.

Now that the data is loaded, it’s always a good practice to visually inspect a sample. This helps us confirm that our parsing logic worked correctly and gives us a feel for the kind of challenges our agents will face. We’ll write a small utility function to display a few examples in a clean, readable table.

from rich.console import Console

from rich.table import Table

console = Console()

def display_dataset_sample(dataset: List[ResearchTask], sample_size=5):

"""

Displays a sample of the dataset in a rich, formatted table.

"""

# Create a table for display using the 'rich' library for better readability.

table = Table(title="PubMedQA Research Goals Dataset (Sample)")

table.add_column("ID", style="cyan")

table.add_column("Research Goal (Question)", style="magenta")

table.add_column("Expected Decision", style="green")

# Populate the table with the first few items from the dataset.

for item in dataset[:sample_size]:

table.add_row(item['id'], item['goal'], item['expected_decision'])

console.print(table)

display_dataset_sample(train_dataset)This display_dataset_sample function is our sanity check. By using the rich library to create a formatted table, we can quickly and clearly verify the structure of our loaded data. It's much more effective than just printing raw dictionaries. Seeing the data laid out like this confirms that our load_and_prepare_dataset function correctly extracted the ID, goal, and expected_decision for each task.

Let’s look at the output of the above function we have just coded.

#### OUTPUT ####

Downloading and preparing PubMedQA dataset...

Dataset downloaded and processed. Total samples: 1000

Train dataset size: 800 | Validation dataset size: 200

--- Sample 0 ---

ID: 11843333

Goal: Do all cases of ulcerative colitis in childhood need colectomy?

Expected Decision: yes

Context (first 200 chars): A retrospective review of 135 children with ulcerative colitis was performed to determin ...sample_data (Created by Fareed Khan)

We have transformed the raw PubMedQA data into a clean, structured list of ResearchTask objects, split into training and validation sets. Each row in this table represents a complete research challenge that we can feed into our agent's rollout method.

The Research Goal will serve as the initial prompt, and the Expected Decision will be the ground truth for calculating our final reward signal. Our agents now have a world-class, realistic knowledge base to learn from.

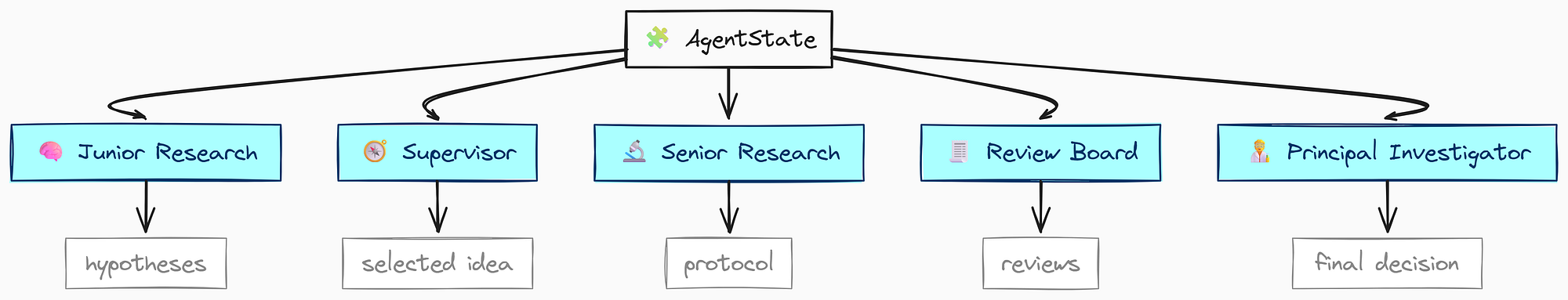

With our data sourced and structured, we now need to design the “nervous system” of our agent society. This is the shared memory, or state, that will allow our diverse group of agents to collaborate, pass information, and build upon each other’s work. In LangGraph, this shared memory is managed by a central state object.

A simple dictionary would be too fragile for a complex system like ours. Instead, we will architect a nested, hierarchical

AgentStateusing Python'sTypedDict.

This approach provides a machine-readable blueprint for our agent entire cognitive process. Each field in our state will represent a distinct stage of the research workflow, from the initial hypotheses generated by the Junior Researchers to the final, peer-reviewed protocol.

Here’s what we are going to do:

- Define Sub-States: We will create smaller

TypedDictclasses for specific artifacts likeJuniorResearch,Protocol, andReviewDecision. - Architect the Master State: Assemble these sub-states into the main

AgentState, which will hold all information for a single research run. - Enable ReAct Logic: adding a

senderfield, a crucial component that allows us to build robust ReAct-style loops where tool results are routed back to the correct agent.

First, let’s define the data structure for the output from our Junior Researchers. This make sure that every hypothesis they generate is consistently formatted.

from typing import List, TypedDict, Literal

from langchain_core.messages import BaseMessage

# This defines the structure for a single hypothesis from a Junior Researcher.

# It captures the core idea, the evidence found, and which agent proposed it.

class JuniorResearch(TypedDict):

hypothesis: str

supporting_papers: List[str]

agent_name: str # To track which junior researcher proposed itWe are basically creating a blueprint for what a “hypothesis submission” looks like. The JuniorResearch class uses TypedDict to enforce that every submission must contain a hypothesis string, a list of supporting_papers, and the agent_name. This structure is important for the Supervisor agent, as it guarantees it will receive a consistent set of proposals to evaluate, each with clear attribution.

Next, we will define the structure for the experimental protocol. This is the primary output of our Senior Researcher, and it needs to be detailed and actionable.

# This defines the structure for the final experimental protocol.

# It's a detailed, actionable plan.

class Protocol(TypedDict):

title: str

steps: List[str]

safety_concerns: str

budget_usd: floatThe Protocol class formalizes the key components of a scientific experiment. By requiring a title, a list of steps, a safety_concerns section, and a budget_usd, we are instructing our Senior Researcher agent to think through the practical details of its proposal.

This structured output is far more valuable than a simple block of text and will be the basis for our final reward calculation.

Now, let’s create the structure for the feedback from our Review Board. This is crucial for our revision loop, as it needs to be both clear and machine-readable.

# This defines the structured feedback from our review agents.

# It forces a clear decision, a severity level, and constructive feedback.

class ReviewDecision(TypedDict):

decision: Literal['APPROVE', 'REVISE']

critique_severity: Literal['CRITICAL', 'MAJOR', 'MINOR']

feedback: strHere, we have designed the ReviewDecision class to capture the nuanced output of a critique. The use of Literal is a key piece of engineering:

- It forces the review agents to make a discrete choice (

APPROVEorREVISE) - To classify the severity of their feedback (

CRITICAL,MAJOR, orMINOR).

This way we are allowing our LangGraph router to decide whether to send the protocol back for a major rewrite or a minor tweak.

Finally, we can assemble these smaller structures into our main AgentState. This will be the single, comprehensive object that tracks everything that happens during a research run.

from typing import Annotated

# This is the master state dictionary that will be passed between all nodes in our LangGraph.

class AgentState(TypedDict):

# The 'messages' field accumulates the conversation history.

# The 'lambda x, y: x + y' tells LangGraph how to merge this field: by appending new messages.

messages: Annotated[List[BaseMessage], lambda x, y: x + y]

research_goal: str # The initial high-level goal from our dataset.

sender: str # Crucial for ReAct: tracks which agent last acted, so tool results can be sent back to it.

turn_count: int # A counter to prevent infinite loops in our graph.

# Junior Researcher Team's output (accumulates from parallel runs)

initial_hypotheses: List[JuniorResearch]

# Supervisor's choice

selected_hypothesis: JuniorResearch

supervisor_justification: str

# Senior Researcher Team's output

refined_hypothesis: str

experimental_protocol: Protocol

# Review Board's output

peer_review: ReviewDecision

safety_review: ReviewDecision

# Principal Investigator's final decision

final_protocol: Protocol

final_decision: Literal['GO', 'NO-GO']

final_rationale: str

# The final evaluation score from our reward function

final_evaluation: dictWe have now successfully defined the entire cognitive architecture of our agent society.

The flow of information is clear: initial_hypotheses are generated, one is chosen as the selected_hypothesis, it's refined into an experimental_protocol, it undergoes peer_review and safety_review, and results in a final_decision.

The sender field is particularly important.

In a ReAct (Reason-Act) loop, an agent decides to use a tool. After the tool runs, the system needs to know which agent to return the result to.

By updating the sender field every time an agent acts, we create a clear return address, enabling this complex, back-and-forth reasoning pattern. With this state defined, our graph now has a solid memory structure to work with.

Our agents now have a sophisticated memory (AgentState), but to perform their research, they need access to the outside world or in a more technical term (theexternal knowledgebase).

An agent without tools is just a conversationalist, an agent with tools becomes a powerful actor capable of gathering real-time, domain-specific information.

In this section, we will build a ScientificToolkit for our agent society. This toolkit will provide a set of specialized functions that our agents can call to perform essential research tasks.

Here is what we are going to do:

- Integrate Live Web Search: We will use the

TavilySearchResultstool to give our agents the ability to search PubMed and ClinicalTrials.gov for the latest scientific literature. - Simulate Internal Databases: We will create mock databases for proteins and gene ontologies to simulate how an agent would query proprietary, internal knowledge bases.

- Decorate with

@tool: Using LangChain@tooldecorator to make these Python functions discoverable and callable by our LLM-powered agents. - Test a Tool: Then to perform a quick test call on one of our new tools to ensure everything is wired up correctly.

First, let’s define the class that will house all our tools. Grouping them in a class is good practice for organization and state management (like managing API clients).

from langchain_core.tools import tool

from langchain_community.tools.tavily_search import TavilySearchResults

class ScientificToolkit:

def __init__(self):

# Initialize the Tavily search client, configured to return the top 5 results.

self.tavily = TavilySearchResults(max_results=5)

# This is a mock database simulating an internal resource for protein information.

self.mock_protein_db = {

"amyloid-beta": "A key protein involved in the formation of amyloid plaques in Alzheimer's.",

"tau": "A protein that forms neurofibrillary tangles inside neurons in Alzheimer's.",

"apoe4": "A genetic risk factor for Alzheimer's disease, affecting lipid metabolism in the brain.",

"trem2": "A receptor on microglia that, when mutated, increases Alzheimer's risk.",

"glp-1": "Glucagon-like peptide-1, a hormone involved in insulin regulation with potential neuroprotective effects."

}

# This is a second mock database, this time for gene functions.

self.mock_go_db = {

"apoe4": "A major genetic risk factor for Alzheimer's disease, involved in lipid transport and amyloid-beta clearance.",

"trem2": "Associated with microglial function, immune response, and phagocytosis of amyloid-beta."

}We have now set up the foundation for our ScientificToolkit. Let’s quickly understand it …

- The

__init__method initializes our live web search tool (Tavily) - Setting up two simple Python dictionaries (

mock_protein_db,mock_go_db) to simulate internal, proprietary databases. - This mix of live and mock tools is a realistic representation of a real-world enterprise environment where agents need to access both public and private data sources.

Now, let’s define the actual tool methods. Each method will be a specific capability we want to grant our agents. We will start with the PubMed search tool.

@tool

def pubmed_search(self, query: str) -> str:

"""Searches PubMed for biomedical literature. Use highly specific keywords related to genes, proteins, and disease mechanisms."""

console.print(f"--- TOOL: PubMed Search, Query: {query} ---")

# We prepend 'site:pubmed.ncbi.nlm.nih.gov' to the query to restrict the search to PubMed.

return self.tavily.invoke(f"site:pubmed.ncbi.nlm.nih.gov {query}")So, we first defines our first tool, pubmed_search. The @tool decorator from LangChain is making things easier for us, it automatically converts this Python function into a structured tool that an LLM can understand and decide to call.

Next, we are going to create a similar tool for searching clinical trials.

@tool

def clinical_trials_search(self, query: str) -> str:

"""Searches for information on clinical trials related to specific drugs or therapies."""

console.print(f"--- TOOL: Clinical Trials Search, Query: {query} ---")

# This tool is focused on ClinicalTrials.gov to find information about ongoing or completed studies.

return self.tavily.invoke(f"site:clinicaltrials.gov {query}")This clinical_trials_search tool is another example of a specialized, live-data tool. By restricting the search to clinicaltrials.gov, we provide our agents with a focused way to find information about drug development pipelines and therapeutic interventions, which is a different type of information from what is typically found in PubMed abstracts.

Now, let’s implement the tools that interact with our mock internal databases.

@tool

def protein_database_lookup(self, protein_name: str) -> str:

"""Looks up information about a specific protein in our mock database."""

console.print(f"--- TOOL: Protein DB Lookup, Protein: {protein_name} ---")

# This simulates a fast lookup in a proprietary, internal database of protein information.

return self.mock_protein_db.get(protein_name.lower(), "Protein not found.")

@tool

def gene_ontology_lookup(self, gene_symbol: str) -> str:

"""Looks up the function and pathways associated with a specific gene symbol in the Gene Ontology database."""

console.print(f"--- TOOL: Gene Ontology Lookup, Gene: {gene_symbol.upper()} ---")

# This simulates a query to another specialized internal database, this time for gene functions.

result = self.mock_go_db.get(gene_symbol.lower(), f"Gene '{gene_symbol}' not found in ontology database.")

console.print(f"Gene '{gene_symbol.upper()}' lookup result: {result}")

return resultThese two functions, protein_database_lookup and gene_ontology_lookup, demonstrate how to integrate agents with internal or proprietary data sources.

Even though we are using simple dictionaries for this demo, in a real system, these functions could contain the logic to connect to a SQL database, a private API, or a specialized bioinformatics library (Private database for hospitals).

Finally, let’s instantiate our toolkit and consolidate all the tool functions into a single list that we can easily pass to our agent runners.

# Instantiate our toolkit class.

toolkit = ScientificToolkit()

# Create a list that holds all the tool functions we've defined.

all_tools = [toolkit.pubmed_search, toolkit.clinical_trials_search, toolkit.protein_database_lookup, toolkit.gene_ontology_lookup]

print("Scientific Toolkit with live data tools defined successfully.")

# Test the new gene_ontology_lookup tool to confirm it's working.

toolkit.gene_ontology_lookup.invoke("APOE4")Let’s run this code and see what the output of our toolkit looks like …

#### OUTPUT ####

Scientific Toolkit with live data tools defined successfully.

--- TOOL: Gene Ontology Lookup, Gene: APOE4 ---

Gene 'APOE4' lookup result: A major genetic risk factor for Alzheimers disease, involved in lipid transport and amyloid-beta clearance.We can see that the output confirms our ScientificToolkit has been successfully instantiated and that our new gene_ontology_lookup tool is working correctly.

The all_tools list is now a complete, portable set of capabilities that we can bind to any of our agents. This way we are actively seeking out and integrating information from multiple sources to our agentic system, transforming them from simple reasoners into active researchers.

With our foundational components in place the secure environment, the dataset, the hierarchical AgentState, and the powerful ScientificToolkit we are now ready to build the agents themselves.

This is where we move from defining data structures to engineering the cognitive entities that will perform the research or in simple terms we will be building the core compoents of our multi-agentic system.

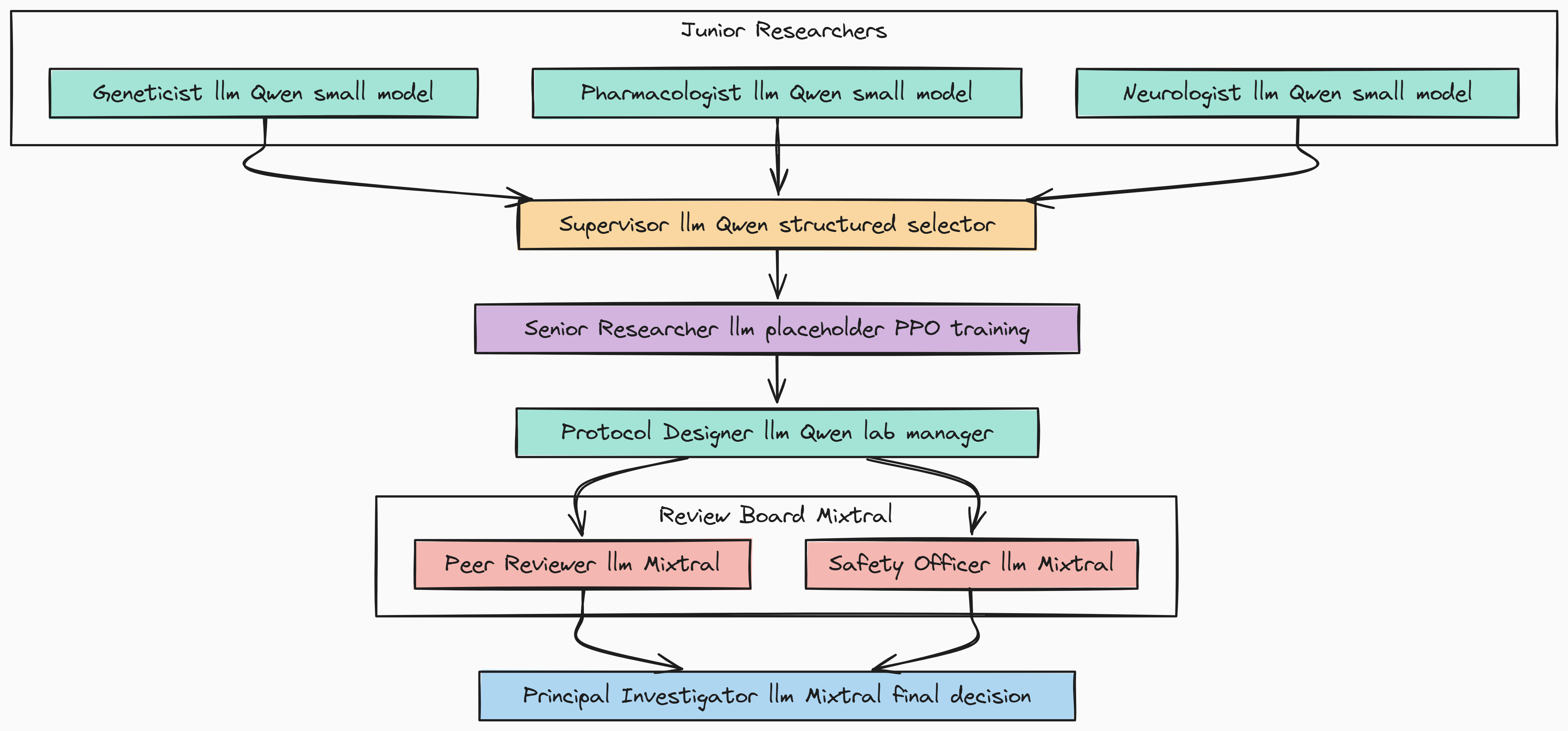

In this section, we are going to use LangGraph to design and orchestrate our multi-agent society.

To mimic a real workflow, We will create a team of specialists, each with a specific role and powered by a strategically chosen open-source model.

Here’s what we are going to do:

- Assign Roles and Models: Defining the “personas” for each of our AI scientists and assign different open-source models to them based on the complexity of their tasks.

- Create Agent Runners: Creating a factory function that takes a model, a prompt, and a set of tools, and produces a runnable agent executor.

- Architect the StateGraph: We will use

LangGraphto wire these agents together, implementing advanced ReAct logic and a multi-level revision loop to create a robust, cyclical workflow. - Visualize the Architecture: Generate a workflow of our final graph to get a clear, intuitive picture of our agent society cognitive architecture.

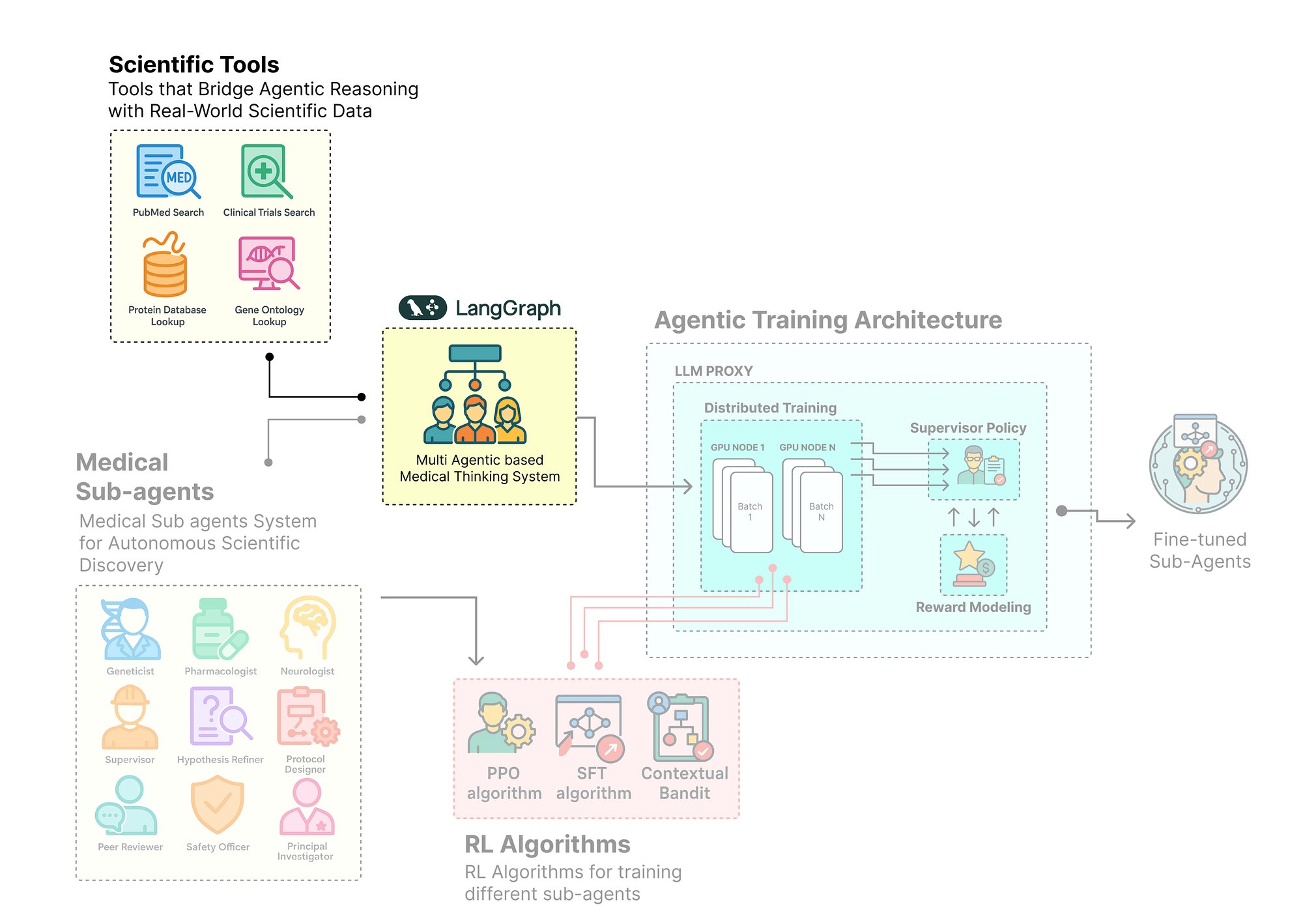

A key principle of advanced agentic design is that not all tasks are created equal. Using a single, massive model for every job is inefficient and costly. Instead, we will strategically assign different open-source models from the Hugging Face Hub to different roles within our research team.

This “right model for the right job” approach is a cornerstone of building production-grade, cost-effective agentic systems.

We need to define the LLM configurations. We will use a small, fast model for the creative brainstorming of our Junior Researchers, a placeholder for the more powerful model that we will fine-tune with PPO for our Senior Researcher, and a highly capable mixture-of-experts model for the critical review tasks.

import os

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# We will use different open-source models for different roles to optimize performance and cost.

# The 'openai_api_base' will be dynamically set by the LLMProxy during training,

# pointing to a local server (like Ollama or vLLM) instead of OpenAI's API.

junior_researcher_llm = ChatOpenAI(

model="Qwen/Qwen2-1.5B-Instruct", # A small, fast model for creative, parallel brainstorming.

temperature=0.7,

openai_api_base="http://localhost:11434/v1", # Assuming an Ollama server is running locally.

openai_api_key="ollama"

)

supervisor_llm = ChatOpenAI(

model="Qwen/Qwen2-1.5B-Instruct", # The same small model is sufficient for the structured selection task.

temperature=0.0,

openai_api_base="http://localhost:11434/v1",

openai_api_key="ollama"

)

# This is a special placeholder. During training, the VERL algorithm will serve the Llama-3 model

# under this logical name via the Agent-Lightning LLMProxy.

senior_researcher_llm = ChatOpenAI(

model="senior_researcher_llm", # A logical name, not a real model endpoint initially.

temperature=0.1,

openai_api_base="http://placeholder-will-be-replaced:8000/v1",

openai_api_key="dummy_key"

)

# For the critical review and final decision stages, we use a more powerful model.

review_board_llm = ChatOpenAI(

model="mistralai/Mixtral-8x7B-Instruct-v0.1", # A powerful Mixture-of-Experts model for nuanced evaluation.

temperature=0.0,

openai_api_base="http://localhost:11434/v1",

openai_api_key="ollama"

)

print("Agent personas and open-source LLM configurations are defined.")Make sure you have pulled the respective and serving them through ollma/vllm.

We have now defined our research team “hardware”.

- By assigning

Qwen2-1.5Bto the junior roles, we enable fast, parallel, and low-cost ideation. - The

senior_researcher_llmis now explicitly a logical placeholder, this is a key concept for training.Agent-Lightningwill intercept calls to this model name and route them to our PPO-trained model, allowing us to update its policy without affecting the rest of the system. - Finally, using a powerful

Mixtralmodel for the review board ensures that the critique and evaluation steps are performed with the highest level of scrutiny.

Next, we need a standardized way to combine a model, a system prompt, and a set of tools into a runnable agent. We’ll create a simple factory function for this.

def create_agent_runner(llm, system_prompt, tools):

"""A factory function to create a runnable agent executor."""

# The prompt consists of a system message, and a placeholder for the conversation history.

prompt = ChatPromptTemplate.from_messages([

("system", system_prompt),

MessagesPlaceholder(variable_name="messages"),

])

# We bind the tools to the LLM, making them available for the agent to call.

return prompt | llm.bind_tools(tools)This create_agent_runner function is a small but important piece here. It standardizes how we build our agents. By creating a reusable "factory", we ensure that every agent in our system is constructed consistently, taking a specific system_prompt that defines its persona, an llm that provides its reasoning power, and a list of tools it can use. This makes our main graph-building code cleaner and easier to manage.

Finally, we’ll define the specific system prompts for each role in our agent society. These prompts are the “software” that runs on our LLM “hardware”, guiding each agent’s behavior and defining its specific responsibilities and output format.

# This is holding the detailed system prompts for each agent role.

prompts = {

"Geneticist": "You are a geneticist specializing in Alzheimer's. Propose a hypothesis related to genetic factors. Use tools to find supporting evidence. Respond with a JSON object: {'hypothesis': str, 'supporting_papers': List[str]}.",

"Pharmacologist": "You are a pharmacologist. Propose a drug target hypothesis. Use tools to find clinical trial data. Respond with a JSON object: {'hypothesis': str, 'supporting_papers': List[str]}.",

"Neurologist": "You are a clinical neurologist. Propose a systems-level neurobiology hypothesis. Use tools to find papers on brain pathways. Respond with a JSON object: {'hypothesis': str, 'supporting_papers': List[str]}.",

"Supervisor": "You are a research supervisor. Review the hypotheses and select the most promising one. Justify your choice based on novelty, feasibility, and impact. Return a JSON object: {'selected_hypothesis_index': int, 'justification': str}.",

"HypothesisRefiner": "You are a senior scientist. Deepen the selected hypothesis with more literature review, refining it into a specific, testable statement. Return a JSON object: {'refined_hypothesis': str}.",

"ProtocolDesigner": "You are a lab manager. Design a detailed, step-by-step experimental protocol to test the refined hypothesis. Be specific about methods, materials, and controls. Return a JSON object: {'title': str, 'steps': List[str], 'safety_concerns': str, 'budget_usd': float}.",

"PeerReviewer": "You are a critical peer reviewer. Find flaws in the protocol. Be constructive but rigorous. Return a JSON object: {'decision': 'APPROVE'|'REVISE', 'critique_severity': 'CRITICAL'|'MAJOR'|'MINOR', 'feedback': str}.",

"SafetyOfficer": "You are a lab safety officer. Review the protocol for safety, regulatory, and ethical concerns. Be thorough. Return a JSON object: {'decision': 'APPROVE'|'REVISE', 'critique_severity': 'CRITICAL'|'MAJOR'|'MINOR', 'feedback': str}.", # Note: I corrected a typo from 'safety_review' to 'feedback' for consistency

"PrincipalInvestigator": "You are the Principal Investigator. Synthesize the protocol and reviews into a final document. Make the final GO/NO-GO decision and provide a comprehensive rationale. Return a JSON object: {'final_protocol': Protocol, 'final_decision': 'GO'|'NO-GO', 'final_rationale': str}."

}We have now fully defined our cast of AI scientists. Each agent has been assigned a specific persona through its prompt, a reasoning engine through its llm, and a set of capabilities through the tools.

A key detail in these prompts is the instruction to respond with a specific JSON object. This structured output is essential for reliably updating our hierarchical AgentState as the workflow progresses from one agent to the next. Our workforce is now ready to be assembled into a functioning team.

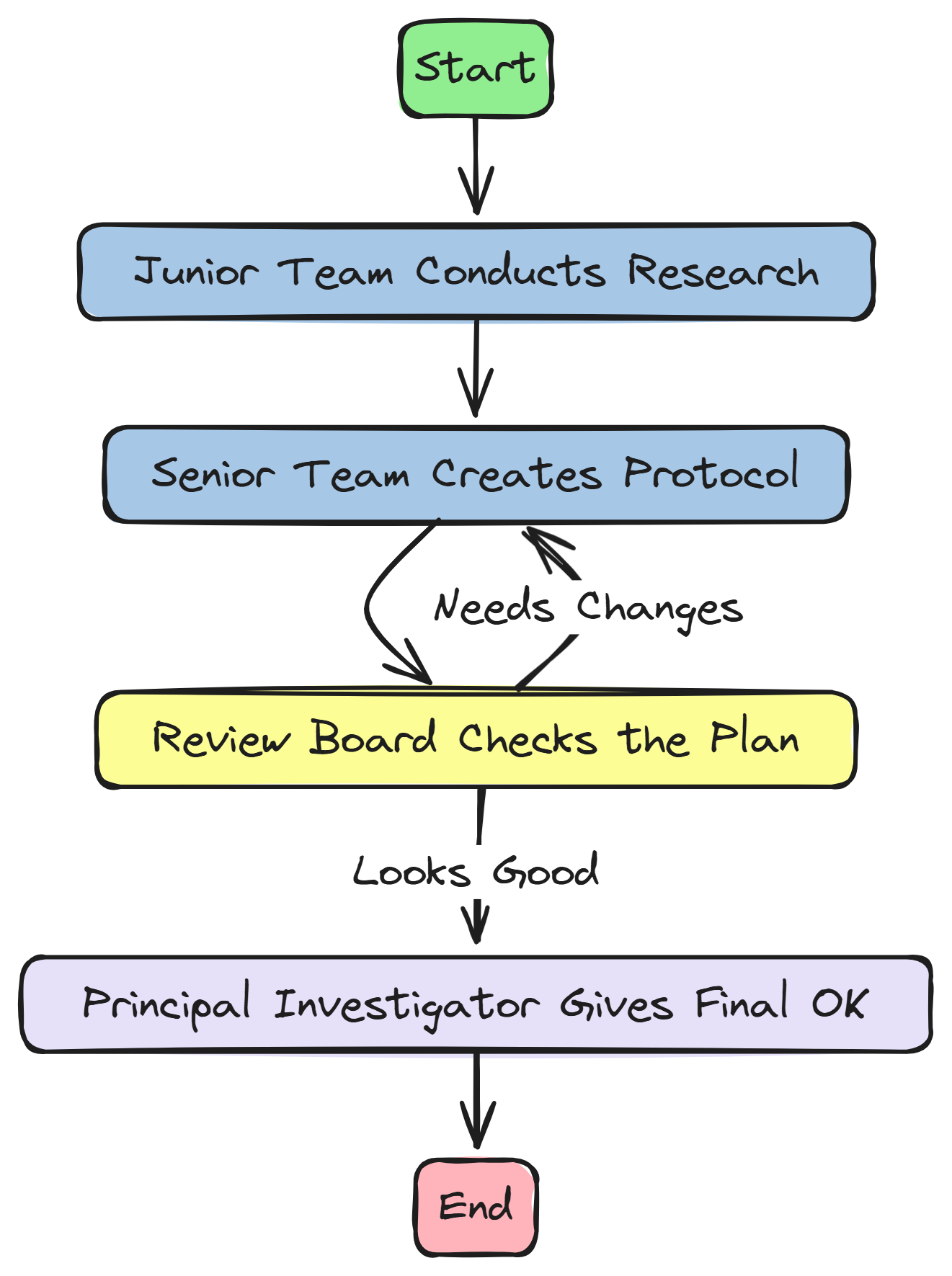

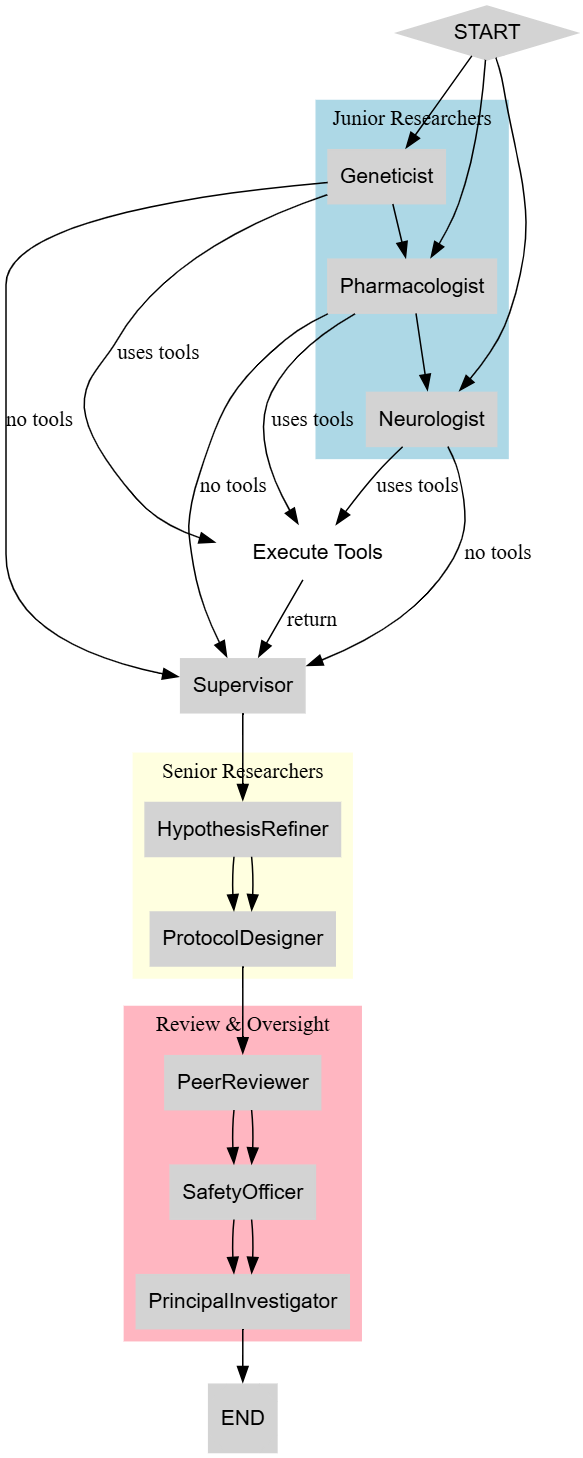

Now that we have defined our team of specialist agents, we need to build the laboratory where they will collaborate. This is the job of LangGraph. We will now assemble our agents into a functioning, cyclical workflow, creating a StateGraph that defines the flow of information and control between each member of our research team.

This will not be a simple, linear pipeline …

To mimic a real research process, we need to implement sophisticated logic, including feedback loops for revision and a robust mechanism for tool use.

In this section we are going to do the following …

- Build the Agent Nodes: Creating a factory function to wrap each of our agent runners into a

LangGraphnode that correctly updates ourAgentState. - Implement ReAct-style Tool Use: Defining a conditional edge and a router that ensures after any agent uses a tool, the result is returned directly to that same agent for processing.

- Engineer a Multi-Level Revision Loop: Designing an intelligent conditional edge that routes the workflow differently based on the severity of the feedback from our Review Board, enabling both minor tweaks and major rethinks.

- Compile and Visualize the Graph: Finally, we will compile the complete

StateGraphand generate a visualization to get a clear picture of our agent's cognitive architecture.

First, we need a way to create a graph node from one of our agent runners. We will create a helper function that takes an agent’s name and its runnable executor, and returns a function that can be added as a node to our graph. This node function will handle updating the turn_count and the sender field in our AgentState.

from langgraph.graph import StateGraph, START, END

from langgraph.prebuilt import ToolNode

from langchain_core.messages import HumanMessage, BaseMessage

import json

MAX_TURNS = 15 # A safeguard to prevent our graph from getting stuck in an infinite loop.

# This is a helper function, a "factory" that creates a node function for a specific agent.

def create_agent_node(agent_name: str, agent_runner):

"""Creates a LangGraph node function for a given agent runner."""

def agent_node(state: AgentState) -> dict:

# Print a console message to trace the graph's execution path.

console.print(f"--- Node: {agent_name} (Turn {state['turn_count']}) ---")

# Increment the turn count as a safety measure.

state['turn_count'] += 1

# Invoke the agent runner with the current state.

result = agent_runner.invoke(state)

# We need to handle the structured JSON output from our review agents specifically.

if agent_name in ["PeerReviewer", "SafetyOfficer"]:

try:

# The agent's output is a JSON string in the 'content' of the AIMessage.

content = json.loads(result.content)

# We update the correct field in our AgentState based on which reviewer ran.

if agent_name == "PeerReviewer":

state['peer_review'] = content

else:

state['safety_review'] = content # The key here was 'safety_review', not 'feedback'.

except (json.JSONDecodeError, TypeError):

# If parsing fails, we log an error but don't crash the graph.

console.print(f"[bold red]Error parsing JSON from {agent_name}: {result.content}[/bold red]")

# We update the 'messages' list and crucially, set the 'sender' field for ReAct routing.

return {"messages": [result], "sender": agent_name}

return agent_nodeThe create_agent_node function is our standardized wrapper for every agent in the system.

- It ensures that every time an agent runs, we log its activity, increment our safety counter (

turn_count), and most importantly update thesenderfield in the state. - This last step is the key to our ReAct logic. it leaves a "breadcrumb" so our graph knows who just acted. The special handling for the review agents ensures their structured JSON feedback is correctly parsed and placed into the appropriate fields (

peer_reviewandsafety_review) in ourAgentState.

Now, let’s define the conditional logic for our ReAct loop. This function will check the last message in the state. If it contains tool calls, it directs the graph to the ToolNode. If not, it signals that the agent's reasoning for this step is complete.

def tools_condition(state: AgentState) -> str:

"""A conditional edge that checks for tool calls and the turn count."""

# Examine the most recent message in the state.

last_message = state['messages'][-1]

# If the message has no tool calls, the agent's turn is done.

if not hasattr(last_message, 'tool_calls') or not last_message.tool_calls:

return "end"

# If we've exceeded our maximum number of turns, we also end to prevent loops.

if state['turn_count'] >= MAX_TURNS:

console.print("[bold yellow]Max turns reached. Ending graph.[/bold yellow]")

return "end"

# Otherwise, there are tools to be executed.

return "tools"The tools_condition function is the decision-maker for our ReAct loop. It acts as a gatekeeper after every agent's turn. Its logic is simple but powerful:

- It inspects the last message and checks for the presence of

tool_calls. If found, it returns the string"tools", signaling toLangGraphto route the state to our tool execution node. - If no tool calls are present, or if our safety

MAX_TURNSlimit is reached, it returns"end", allowing the workflow to proceed.

Next, we need a router that directs the workflow after a tool has been executed. This is where our sender field becomes critical.

# This router function will route the workflow back to the agent that originally called the tool.

def route_after_tools(state: AgentState) -> str:

"""A router that sends the workflow back to the agent that initiated the tool call."""

# Get the name of the last agent that acted from the 'sender' field in the state.

sender = state.get("sender")

console.print(f"--- Routing back to: {sender} after tool execution ---")

if not sender:

# If for some reason the sender is not set, we end the graph as a fallback.

return END

# The returned string must match the name of a node in our graph.

return senderThis route_after_tools function is the second half of our ReAct implementation. It is a conditional edge that simply reads the sender value from the AgentState left by our create_agent_node function and returns it. LangGraph will then use this string to route the state, now containing the tool's output, directly back to the agent that requested it. This allows the agent to see the result of its action and continue its reasoning process.

Now for our most important piece of routing logic, the multi-level revision loop after the review stage.

def route_after_review(state: AgentState) -> Literal["PrincipalInvestigator", "HypothesisRefiner", "ProtocolDesigner"]:

"""

An intelligent router that determines the next step based on the severity of review feedback.

"""

peer_review = state.get("peer_review", {})

safety_review = state.get("safety_review", {})

# Extract the decision and severity from both reviews, with safe defaults.

peer_severity = peer_review.get("critique_severity", "MINOR")

safety_severity = safety_review.get("critique_severity", "MINOR")

# If our safety counter is maxed out, we must proceed to the PI, regardless of feedback.

if state['turn_count'] >= MAX_TURNS:

console.print("[bold yellow]Max turns reached during review. Proceeding to PI.[/bold yellow]")

return "PrincipalInvestigator"

# If EITHER review has a 'CRITICAL' severity, the fundamental hypothesis is flawed.

# We route all the way back to the HypothesisRefiner for a major rethink.

if peer_severity == 'CRITICAL' or safety_severity == 'CRITICAL':

console.print("--- Review requires CRITICAL revision, routing back to HypothesisRefiner. ---")

state['messages'].append(HumanMessage(content="Critical feedback received. The core hypothesis needs rethinking."))

return "HypothesisRefiner"

# If EITHER review has a 'MAJOR' severity (but no critical ones), the protocol itself is flawed.

# We route back to the ProtocolDesigner for a significant revision.

if peer_severity == 'MAJOR' or safety_severity == 'MAJOR':

console.print("--- Review requires MAJOR revision, routing back to ProtocolDesigner. ---")

state['messages'].append(HumanMessage(content="Major feedback received. The protocol needs significant revision."))

return "ProtocolDesigner"

# If there are only MINOR revisions or everything is approved, the protocol is fundamentally sound.

# We can proceed to the PrincipalInvestigator for the final decision.

console.print("--- Reviews complete, routing to PrincipalInvestigator. ---")

return "PrincipalInvestigator"This function is the most important component of our iterative refinement process.

- It inspects the

critique_severityfrom both thepeer_reviewandsafety_reviewin theAgentState. This allows it to make a nuanced, hierarchical routing decision: Critical feedback triggers a loop all the way back to the beginning of the senior research phase (HypothesisRefiner). - Major feedback triggers a smaller loop back to the

ProtocolDesigner, and Minor or approved reviews allow the process to move forward. This multi-level feedback loop is a powerful pattern that mimics how real-world projects are revised.

Finally, we can bring all these pieces together in a builder function that constructs and compiles our complete StateGraph.

def build_graph() -> StateGraph:

workflow = StateGraph(AgentState)

# Instantiate all our agent runners using the factory function.

agent_runners = {

"Geneticist": create_agent_runner(junior_researcher_llm, prompts["Geneticist"], all_tools),

"Pharmacologist": create_agent_runner(junior_researcher_llm, prompts["Pharmacologist"], all_tools),

"Neurologist": create_agent_runner(junior_researcher_llm, prompts["Neurologist"], all_tools),

"Supervisor": create_agent_runner(supervisor_llm, prompts["Supervisor"], []),

"HypothesisRefiner": create_agent_runner(senior_researcher_llm, prompts["HypothesisRefiner"], all_tools),

"ProtocolDesigner": create_agent_runner(senior_researcher_llm, prompts["ProtocolDesigner"], all_tools),

"PeerReviewer": create_agent_runner(review_board_llm, prompts["PeerReviewer"], []),

"SafetyOfficer": create_agent_runner(review_board_llm, prompts["SafetyOfficer"], []),

"PrincipalInvestigator": create_agent_runner(review_board_llm, prompts["PrincipalInvestigator"], [])

}

# Add all the agent nodes and the single tool execution node to the graph.

for name, runner in agent_runners.items():

workflow.add_node(name, create_agent_node(name, runner))

workflow.add_node("execute_tools", ToolNode(all_tools))

# ---- Define the graph's control flow using edges ----

# The graph starts by running the three Junior Researchers in parallel.

workflow.add_edge(START, "Geneticist")

workflow.add_edge(START, "Pharmacologist")

workflow.add_edge(START, "Neurologist")

# For each agent that can use tools, we add the ReAct conditional edge.

for agent_name in ["Geneticist", "Pharmacologist", "Neurologist", "HypothesisRefiner", "ProtocolDesigner"]:

# After the agent runs, check for tool calls.

workflow.add_conditional_edges(

agent_name,

tools_condition,

{

"tools": "execute_tools", # If tools are called, go to the tool node.

"end": "Supervisor" if agent_name in ["Geneticist", "Pharmacologist", "Neurologist"] else "ProtocolDesigner" if agent_name == "HypothesisRefiner" else "PeerReviewer" # If no tools, proceed to the next logical step.

}

)

# After tools are executed, route back to the agent that called them.

workflow.add_conditional_edges("execute_tools", route_after_tools)

# Define the main linear flow of the research pipeline.

workflow.add_edge("Supervisor", "HypothesisRefiner")

workflow.add_edge("PeerReviewer", "SafetyOfficer")

# After the SafetyOfficer, use our intelligent review router.

workflow.add_conditional_edges("SafetyOfficer", route_after_review)

# The PrincipalInvestigator is the final step before the graph ends.

workflow.add_edge("PrincipalInvestigator", END)

return workflow

# Build the graph and compile it into a runnable object.

research_graph_builder = build_graph()

research_graph = research_graph_builder.compile()

print("LangGraph StateGraph builder is defined and compiled.")

# We can also visualize our compiled graph to see the final architecture.

try:

from IPython.display import Image, display

png_image = research_graph.get_graph().draw_png()

display(Image(png_image))

except Exception as e:

print(f"Could not visualize graph: {e}. Please ensure pygraphviz and graphviz are installed.")The build_graph function has assembled all our components which are nodes, edges, and routers into a complete, runnable StateGraph.

We can clearly see the parallel start with the Junior Researchers, the ReAct loops where agents can call tools and get results back, and the sophisticated multi-level feedback loops in the review stage.

We can now start building the training architecture of our agentic system. let’s do that.

We have successfully designed and assembled our society of agents into a complex LangGraph workflow. However, a static workflow, no matter how sophisticated, cannot learn or improve. To enable learning, we need to bridge the gap between our LangGraph orchestration and a training framework. This is the role of Agent-Lightning.

In this section, we will create the two crucial components that form this bridge: the LitAgent and the reward function. These will transform our static graph into a dynamic, trainable system.

Here’s what we are going to do:

- Encapsulate the Workflow: We will create a

MedicalResearchAgentclass that inherits fromagl.LitAgent, wrapping our entireLangGraphinside itsrolloutmethod. - Enable Targeted Training: We’ll engineer the

rolloutmethod to dynamically inject the model-under-training into only the specific nodes we want to improve (the Senior Researchers), a powerful pattern for surgical policy updates. - Design a Nuanced Reward System: We will build a multi-faceted

protocol_evaluatorfunction that acts as an LLM-as-a-Judge, scoring the agent's final output on multiple criteria like feasibility, impact, and groundedness. - Create a Weighted Reward: We will implement a function to combine these multiple scores into a single, weighted reward signal that will guide our reinforcement learning algorithm.

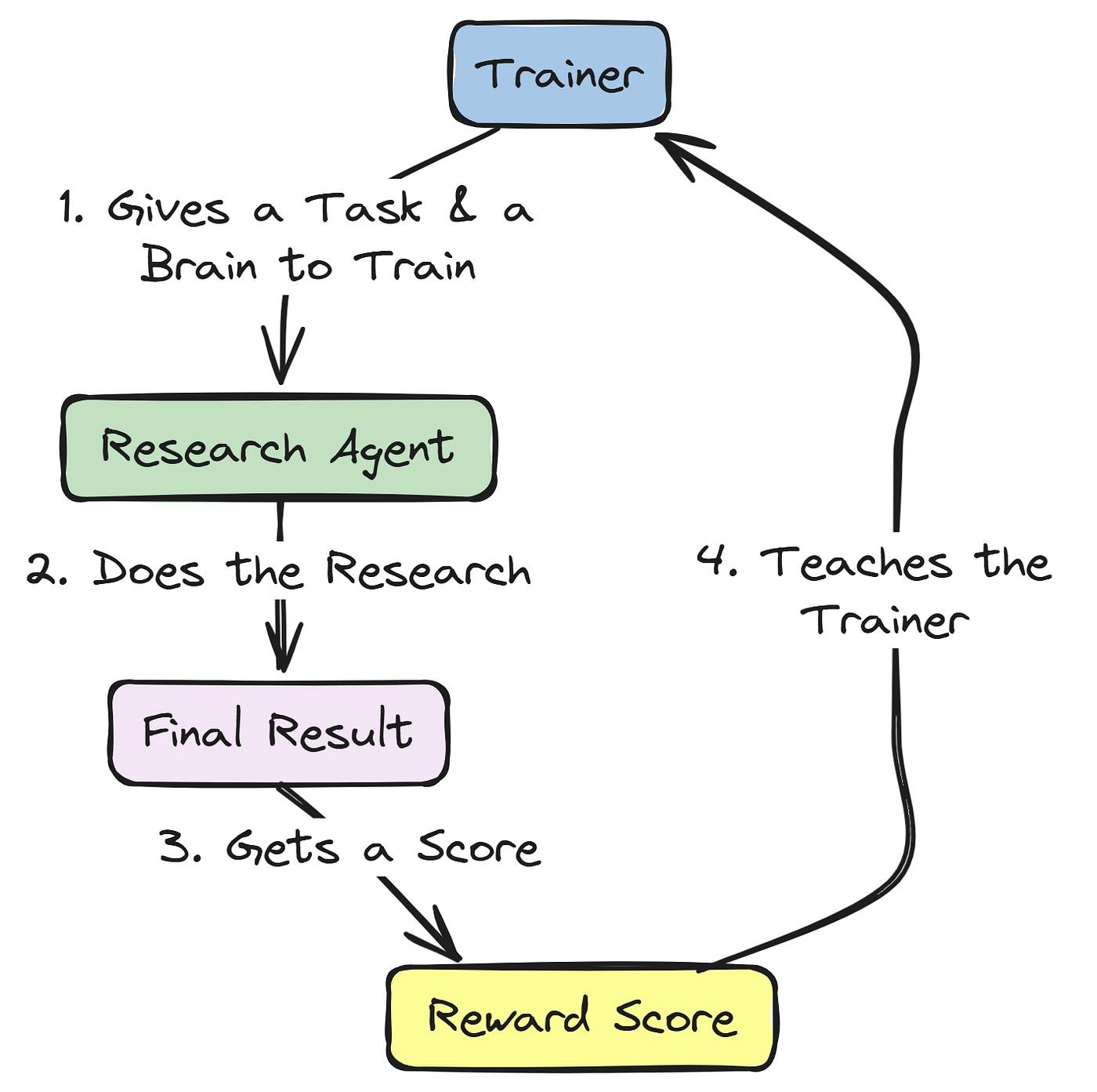

The first step in making our system trainable is to encapsulate our LangGraph workflow within an agl.LitAgent. The LitAgent is the fundamental, trainable unit in the Agent-Lightning ecosystem. Its primary job is to define a rollout method, which is a single, end-to-end execution of our agent on a given task.

We will create a class, MedicalResearchAgent, that inherits from agl.LitAgent. This class will hold our compiled LangGraph and our reward function. Its rollout method will be the heart of the training loop: it will take a research goal from our dataset, execute the full graph, and then use the reward function to score the final outcome.

A key piece of engineering here is how we handle the model-under-training.

Instead of the graph using a fixed set of models, the rollout method will dynamically bind the LLM endpoint provided by the Agent-Lightning trainer to the specific agent nodes we want to train (our Senior Researchers). This allows for targeted, surgical fine-tuning of a specific agent's policy within the larger multi-agent system.

Let’s start by defining our MedicalResearchAgent class.

import agentlightning as agl

from typing import Any, cast

class MedicalResearchAgent(agl.LitAgent):

def __init__(self, graph, reward_func):

# The LitAgent must be initialized with the compiled graph and the reward function.

super().__init__()

self.graph = graph

self.reward_func = reward_func

def rollout(self, task: ResearchTask, resources: agl.NamedResources, rollout: agl.Rollout) -> None:

# This method defines a single, end-to-end run of our agent.

console.print(f"\n[bold green]-- Starting Rollout {rollout.rollout_id} for Task: {task['id']} --[/bold green]")

# The 'senior_researcher_llm' resource is our model-under-training, served by the VERL algorithm via the LLMProxy.

llm_resource = cast(agl.LLM, resources['senior_researcher_llm'])

# The trainer's tracer provides a LangChain callback handler, which is crucial for deep observability in LangSmith.

langchain_callback_handler = self.trainer.tracer.get_langchain_handler()

# Here we dynamically bind the LLM endpoint from the training resources to the specific

# agent runners we want to train. This is the key to targeted policy optimization.

llm_with_endpoint = senior_researcher_llm.with_config({

"openai_api_base": llm_resource.endpoint,

"openai_api_key": llm_resource.api_key or "dummy-key"

})

# We create fresh agent runners for this specific rollout, using the updated LLM binding.

hypothesis_refiner_agent_trained = create_agent_runner(llm_with_endpoint, prompts["HypothesisRefiner"], all_tools)

protocol_designer_agent_trained = create_agent_runner(llm_with_endpoint, prompts["ProtocolDesigner"], all_tools)

# We get a mutable copy of the graph to temporarily update the nodes for this rollout.

graph_with_trained_model = self.graph.copy()

# We replace the functions for the 'HypothesisRefiner' and 'ProtocolDesigner' nodes with our newly created, trainable runners.

graph_with_trained_model.nodes["HypothesisRefiner"]['func'] = create_agent_node("HypothesisRefiner", hypothesis_refiner_agent_trained)

graph_with_trained_model.nodes["ProtocolDesigner"]['func'] = create_agent_node("ProtocolDesigner", protocol_designer_agent_trained)

# Compile the modified graph into a runnable for this specific rollout.

runnable_graph = graph_with_trained_model.compile()

# Prepare the initial state for the graph execution.

initial_state = {"research_goal": task['goal'], "messages": [HumanMessage(content=task['goal'])], "turn_count": 0, "initial_hypotheses": []}

# Configure the run to use our LangSmith callback handler.

config = {"callbacks": [langchain_callback_handler]} if langchain_callback_handler else {}

try:

# Execute the full LangGraph workflow from start to finish.

final_state = runnable_graph.invoke(initial_state, config=config)

# Extract the final protocol from the graph's terminal state.

final_protocol = final_state.get('final_protocol')

# If a protocol was successfully generated, we calculate its reward.

if final_protocol:

console.print("--- Final Protocol Generated by Agent ---")

console.print(final_protocol)

# Call our multi-faceted reward function to get a dictionary of scores.

reward_scores = self.reward_func(final_protocol, task['context'])

# Convert the scores into a single weighted reward value.

final_reward = get_weighted_reward(reward_scores)

else:

# Assign a reward of 0.0 for failed or incomplete rollouts.

final_reward = 0.0

# Emit the final reward. Agent-Lightning captures this value and uses it for the RL update step.

agl.emit_reward(final_reward)

console.print(f"[bold green]-- Rollout {rollout.rollout_id} Finished with Final Reward: {final_reward:.2f} --[/bold green]")

# The method returns None because the results (reward and traces) are emitted via agl.emit_* calls.

return NoneThe MedicalResearchAgent class is now our core trainable unit. It connects the complex, multi-step logic of our LangGraph with the Agent-Lightning training loop.

- The most important concept here is the dynamic binding of the

senior_researcher_llm. Notice that we don't modify the original graph. - For each

rollout, we create a temporary, modified copy of the graph where only the Senior Researcher nodes are pointed to the model-under-training.

In this approach our PPO algorithm updates will only influence the policy of the Senior Researchers, teaching them how to refine hypotheses and design protocols better, while the other agents (Junior Researchers, Review Board, etc.) continue to use their stable, pre-defined models. This allows for targeted, efficient training in a complex and heterogeneous multi-agent system.

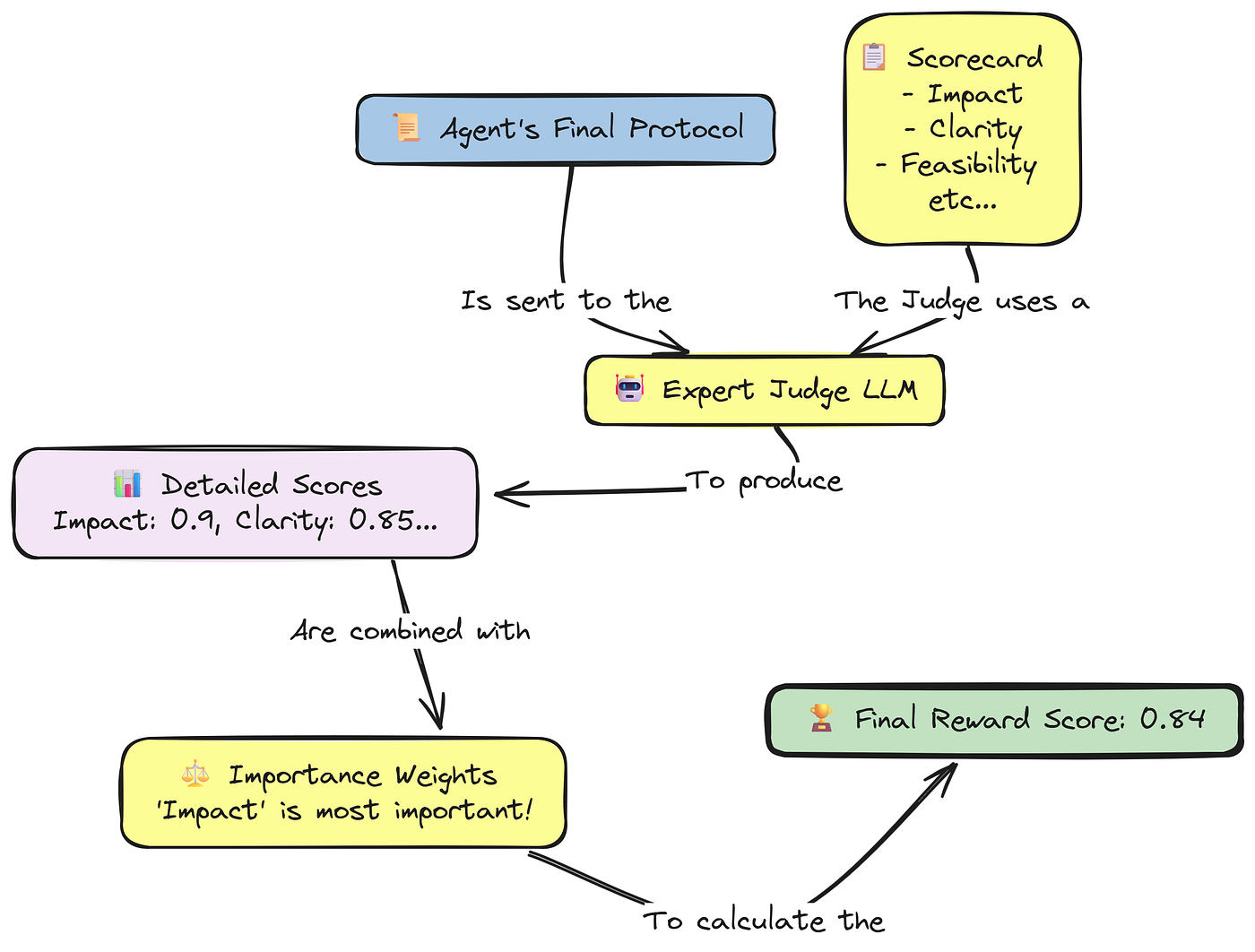

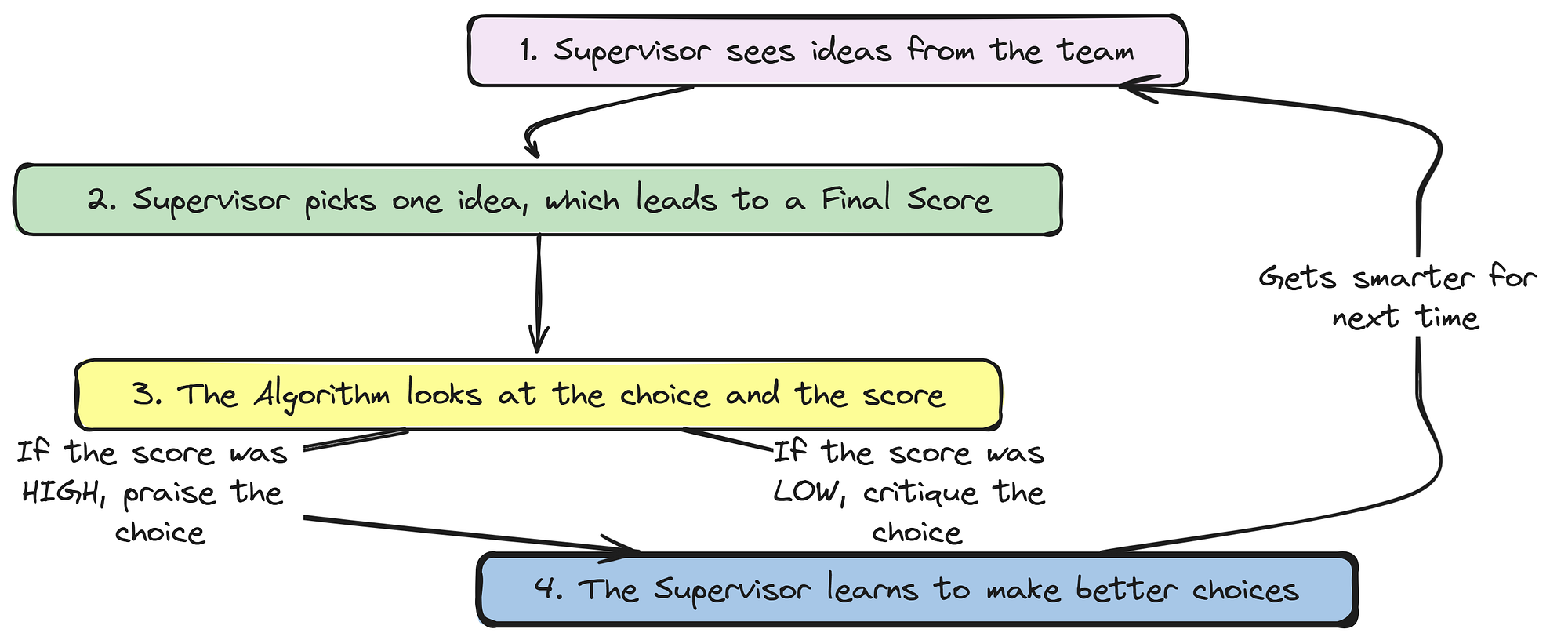

An RL agent is only as good as the reward signal it learns from. For a task as nuanced as scientific research, a simple binary reward (e.g., success=1, fail=0) is insufficient.

It wouldn’t teach the agent the difference between a mediocre protocol and a brilliant one.

To provide a rich, informative learning signal, we will design a reward system. We will build a protocol_evaluator function that acts as an LLM-as-a-Judge.

This "judge" will be a powerful model that evaluates the agent's final generated protocol from multiple, distinct angles, providing a structured dictionary of scores.

Here’s what we are going to do:

- Define Evaluation Criteria: We will create a Pydantic model,

EvaluationOutput, that defines the specific criteria our judge will use, including novelty, feasibility, impact, clarity, and, crucially,groundednessagainst the source context. - Build the Evaluator Function: Then implementing the

protocol_evaluatorfunction, which formats a detailed prompt for our judge LLM and parses its structured response. - Create a Weighted Reward: Defining a

get_weighted_rewardfunction that takes the dictionary of scores from our evaluator and combines them into a single, floating-point reward value, allowing us to prioritize certain criteria (like impact) over others.

First, let’s define the Pydantic schema for our evaluation. This schema acts as a strict “rubric” for our LLM judge, ensuring its feedback is consistent and machine-readable.

from langchain_core.pydantic_v1 import BaseModel, Field

# This Pydantic model defines the "scorecard" for our LLM-as-a-Judge.

class EvaluationOutput(BaseModel):

novelty: float = Field(description="Score 0-1 for originality and innovation beyond the provided context.")

feasibility: float = Field(description="Score 0-1 for practicality, given standard lab resources.")

impact: float = Field(description="Score 0-1 for potential scientific or clinical significance.")

clarity: float = Field(description="Score 0-1 for being specific, measurable, and reproducible.")

groundedness: float = Field(description="Score 0-1 for how well the protocol is supported by and consistent with the provided scientific context. Penalize any claims not supported by the context.")

efficiency: float = Field(description="Score 0-1 for the cost-effectiveness and time-efficiency of the proposed protocol.")We have now created the EvaluationOutput schema, which is the formal rubric for our reward system. By defining these specific, well-described fields, we are providing clear instructions to our evaluator LLM.

The inclusion of groundedness is particularly important, as it will teach our PPO agent to avoid hallucinating or making claims that are not supported by the literature it has reviewed. The new efficiency metric further enriches the learning signal, pushing the agent to consider practical constraints.

Now, let’s build the main protocol_evaluator function that will use this schema.

def protocol_evaluator(protocol: Protocol, context: str) -> dict:

"""

Acts as an LLM-as-a-Judge to score a protocol against multiple criteria.

"""

console.print("--- Running Protocol Evaluator (Reward Function) ---")

# The prompt for our LLM judge is detailed, asking it to act as an expert panel.

evaluator_prompt = ChatPromptTemplate.from_messages([

("system", "You are an expert panel of senior scientists. Evaluate the following experimental protocol on a scale of 0.0 to 1.0 for each of the specified criteria. Be critical and justify your scores briefly."),

# We provide both the original scientific context and the agent's generated protocol.

("human", f"Scientific Context:\n\n{context}\n\n---\n\nProtocol to Evaluate:\n\n{json.dumps(protocol, indent=2)}")

])

# We use our powerful review_board_llm and instruct it to format its output according to our EvaluationOutput schema.

evaluator_llm = review_board_llm.with_structured_output(EvaluationOutput)

try:

# Invoke the evaluator chain.

evaluation = evaluator_llm.invoke(evaluator_prompt.format_messages())

# The output is a Pydantic object, which we can easily convert to a dictionary.

scores = evaluation.dict()

console.print(f"Generated Scores: {scores}")

return scores

except Exception as e:

# If the LLM fails to generate a valid evaluation, we return a default low score to penalize the failure.

console.print(f"[bold red]Error in protocol evaluation: {e}. Returning zero scores.[/bold red]")

return {"novelty": 0.1, "feasibility": 0.1, "impact": 0.1, "clarity": 0.1, "groundedness": 0.1, "efficiency": 0.1}The protocol_evaluator function is our automated quality assurance step.

- It takes the agent's final

protocoland the originalcontextfrom the dataset. - It then presents both to our powerful

review_board_llm, instructing it to act as an expert panel and return a structuredEvaluationOutput. - The

try...exceptblock is a crucial piece of production-grade engineering, it ensures that even if the evaluation LLM fails or produces malformed output, our training loop won't crash. Instead, the agent receives a low reward, correctly penalizing the failed rollout.

Finally, our RL algorithm needs a single floating-point number for its update step. The following function takes the dictionary of scores and collapses it into a single weighted average.

def get_weighted_reward(scores: dict) -> float:

"""

Calculates a single weighted reward score from a dictionary of metric scores.

"""

# These weights allow us to prioritize certain aspects of a "good" protocol.

# Here, we're saying 'impact' is the most important factor, and 'efficiency' is a nice-to-have.

weights = {

"novelty": 0.1,

"feasibility": 0.2,

"impact": 0.3,

"clarity": 0.15,

"groundedness": 0.2,

"efficiency": 0.05

}

# Calculate the weighted sum of scores. If a score is missing from the input dictionary, it defaults to 0.

weighted_sum = sum(scores.get(key, 0) * weight for key, weight in weights.items())

return weighted_sumWe can now test this reward system and let’s observe how it is working …

print("Multi-faceted and weighted reward function defined.")

# Let's test the full reward pipeline with a sample protocol.

test_protocol = {"title": "Test Protocol", "steps": ["1. Do this.", "2. Do that."], "safety_concerns": "Handle with care.", "budget_usd": 50000.0}

test_context = "Recent studies suggest a link between gut microbiota and neuroinflammation in Alzheimer's disease."

test_scores = protocol_evaluator(test_protocol, test_context)

final_test_reward = get_weighted_reward(test_scores)

print(f"Weighted Final Reward: {final_test_reward:.2f}")

#### OUTPUT ####

Multi-faceted and weighted reward function defined.

--- Running Protocol Evaluator (Reward Function) ---

Generated Scores: {'novelty': 0.8, 'feasibility': 0.7, 'impact': 0.9, 'clarity': 0.85, 'groundedness': 0.95, 'efficiency': 0.9}

Weighted Final Reward: 0.84The get_weighted_reward function is the final step in our reward calculation. By assigning different weights to each criterion, we can fine-tune the learning signal to match our specific research goals.

- For example, by giving

impactthe highest weight (0.3), we are explicitly telling our RL algorithm to prioritize protocols that have the potential for significant scientific breakthroughs. - The successful test run confirms that our entire reward pipeline from evaluation to weighting is working correctly.

We now have a reward signal to guide our agent training.

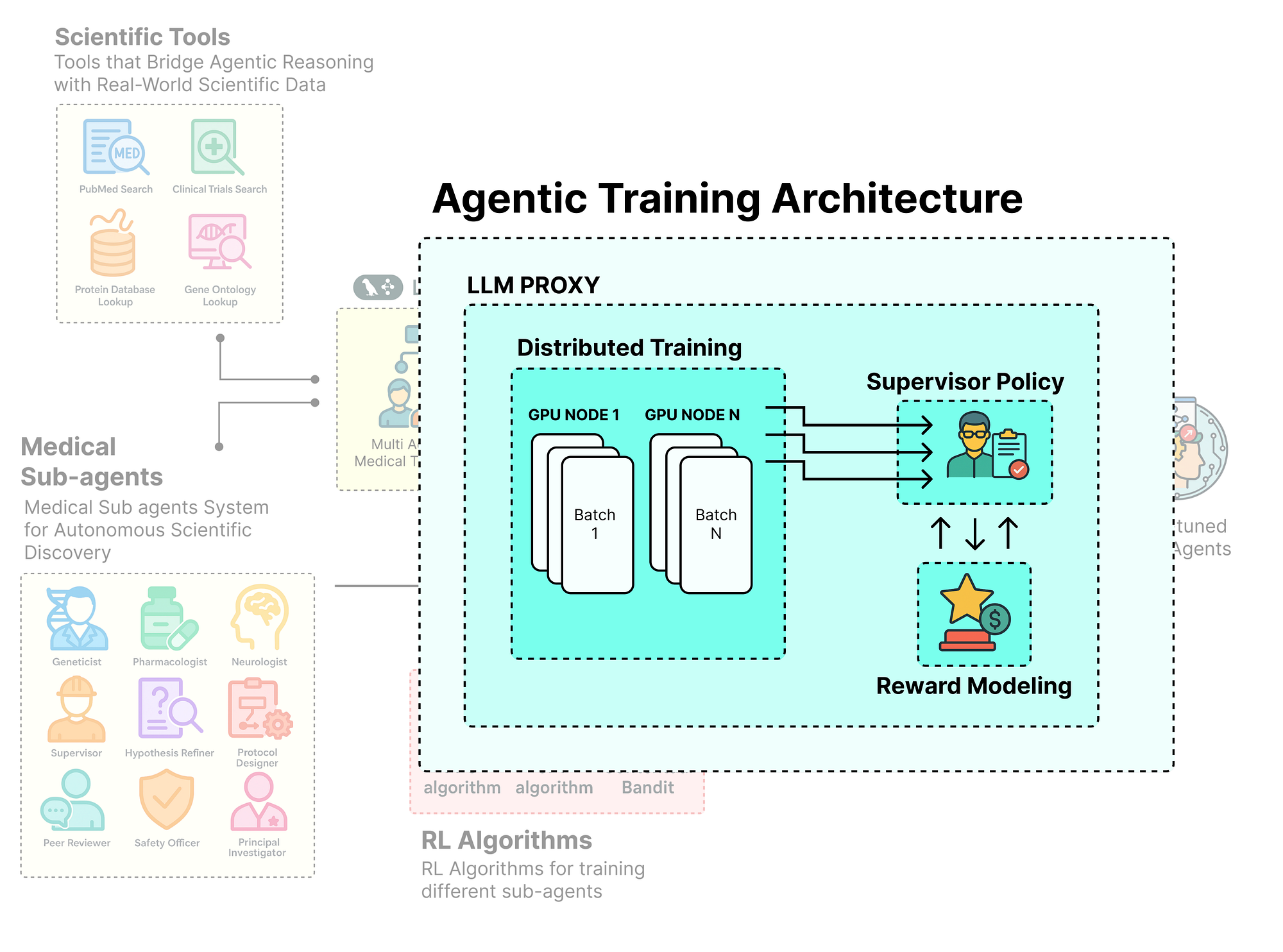

We have now designed our agent society with LangGraph and engineered a reward system. The next logical step is to build the industrial-grade infrastructure that will allow us to train these agents efficiently and at scale. This is where Agent-Lightning advanced features come into play.

A simple, single-process training loop is insufficient for a complex, multi-agent system that makes numerous LLM calls.

We need a distributed architecture that can run multiple agent “rollouts” in parallel while managing a central training algorithm.

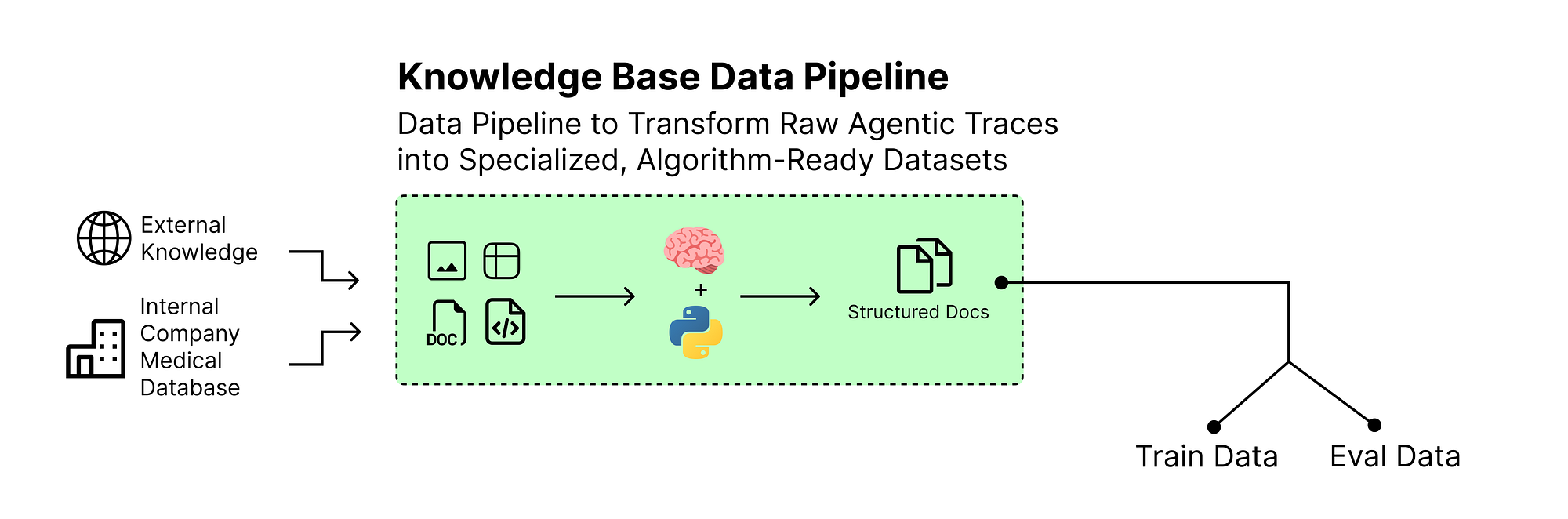

In this section, we will configure the core components of our Agent-Lightning training infrastructure:

- Enable Parallelism: We will configure the

ClientServerExecutionStrategyto run our agent rollouts in multiple, parallel processes, dramatically speeding up data collection. - Manage Multiple Models: Setting up the

LLMProxyto act as a central hub, intelligently routing requests for different models to different backends, including our model-under-training. - Create a Hierarchical Data Pipeline: Designing a custom

HierarchicalTraceAdapterthat can process a single, complex agent trace and generate distinct datasets formatted for each of our different training algorithms (SFT, PPO, and Contextual Bandit). - Implement Real-time Monitoring: We will build a custom

WandbLoggingHookto log our agent's performance to Weights & Biases in real-time, giving us a live view of the learning process.

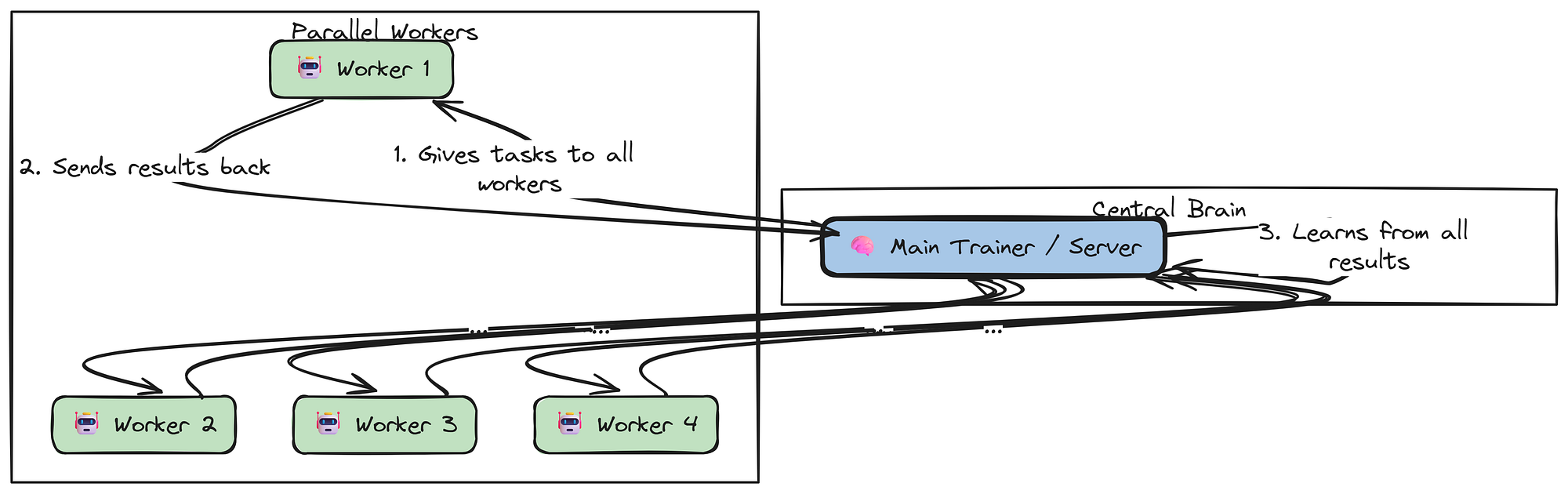

To perform cour training, we need to collect experience from our agent as quickly as possible. Running one rollout at a time would be a major bottleneck. Instead, we will configure our Trainer to use the ClientServerExecutionStrategy.

This strategy creates a distributed training architecture. The main process will run the core training algorithm (like PPO) and a LightningStoreServer to manage data.

It will then spawn multiple, separate runner processes. Each runner will act as a client, connecting to the server to get tasks and then executing our MedicalResearchAgent's rollout method in parallel. This allows us to gather large amounts of training data simultaneously, which is essential for efficient reinforcement learning.

We will define a simple configuration dictionary to specify this strategy and the number of parallel runners we want to use.

import agentlightning as agl

# We'll configure our system to run 4 agent rollouts in parallel.

num_runners = 4

# This dictionary defines the execution strategy for the Agent-Lightning Trainer.

strategy_config = {

"type": "cs", # 'cs' is the shorthand for ClientServerExecutionStrategy.

"n_runners": num_runners, # The number of parallel worker processes to spawn.

"server_port": 48000 # We specify a high port to avoid potential conflicts with other services.

}

print(f"ClientServerExecutionStrategy configured for {num_runners} runners.")We have now defined the blueprint for our distributed training infrastructure. The strategy_config dictionary is a simple but powerful declaration.

When we pass this to our agl.Trainer, it will automatically handle all the complexity of setting up the multi-process architecture, including inter-process communication and data synchronization. This allows us to scale up our data collection efforts by simply increasing n_runners, without having to change our core agent or algorithm code.

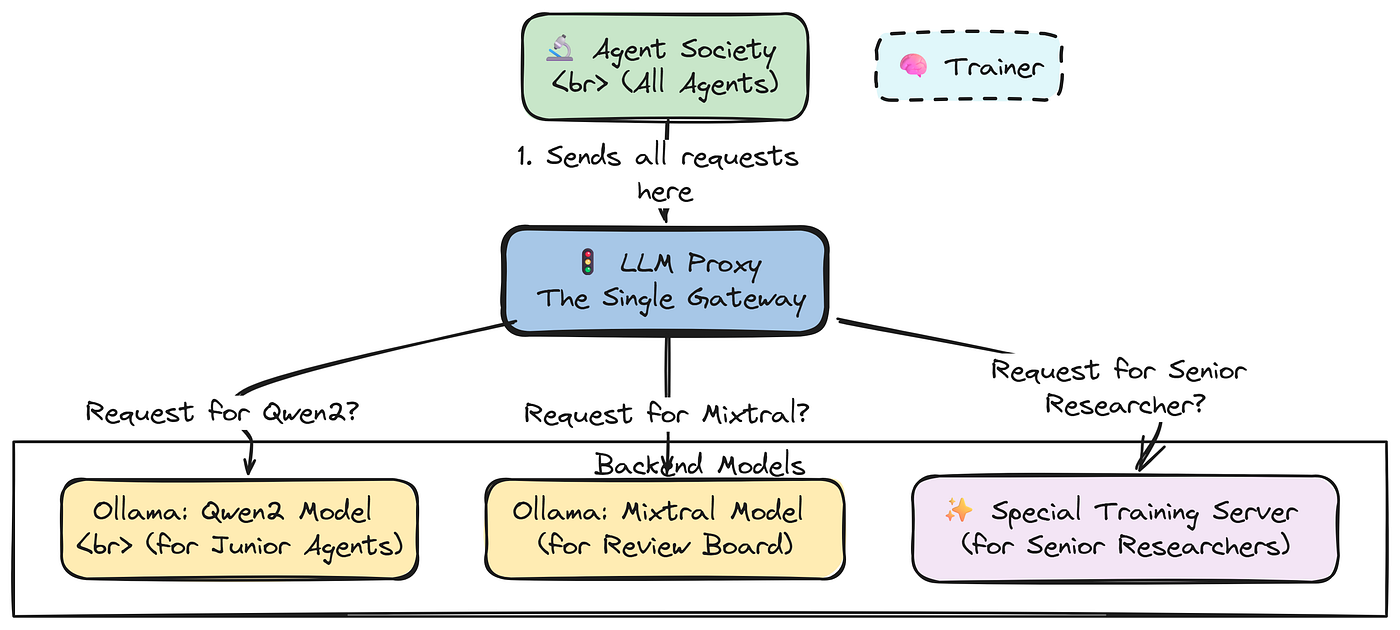

Our agent society is heterogeneous, it uses different models for different roles. Managing these multiple model endpoints can be complex, especially when one of them is a model-under-training that is being served dynamically.

The

LLMProxyfromAgent-Lightningis the perfect solution to this problem.

It acts as a single gateway for all LLM calls. Our LitAgent will send all its requests to the proxy's address. The proxy then intelligently routes each request to the correct backend model based on the model_name specified in the call.

This is particularly powerful for our training setup:

- The

VERL(PPO) algorithm will be able to automatically update the proxy's configuration, redirecting calls for"senior_researcher_llm"to its own dynamically served vLLM instance. - Meanwhile, requests for other models (like

Qwen2orMixtral) will be routed to a different backend, such as a local Ollama server.

Let’s define the configuration for our LLMProxy.

# The 'model_list' defines the routing rules for the LLMProxy.

llm_proxy_config = {

"port": 48001, # The port the LLMProxy itself will listen on.

"model_list": [

# Rule 1: For Junior Researchers and the Supervisor.

# Any request for this model name will be forwarded to a local Ollama server running Qwen2.

{

"model_name": "Qwen/Qwen2-1.5B-Instruct",

"litellm_params": {"model": "ollama/qwen2:1.5b"}

},

# Rule 2: For our Senior Researcher (the model-under-training).

# Initially, it might point to a baseline model. During training, the VERL algorithm

# will automatically update this entry to point to its own vLLM server.

{

"model_name": "senior_researcher_llm",

"litellm_params": {"model": "ollama/llama3"} # An initial fallback.

},

# Rule 3: For the powerful Review Board.

# Requests for this model will be routed to a local Ollama server running Mixtral.

{

"model_name": "mistralai/Mixtral-8x7B-Instruct-v0.1",

"litellm_params": {"model": "ollama/mixtral"}

}

]

}The llm_proxy_config dictionary is the routing table for our entire multi-agent system.

- It decouples the logical model names used by our agents (e.g.,

"senior_researcher_llm") from the physical model backends (e.g., a specific Ollama endpoint or a dynamic vLLM server). - It allows us to swap out backend models, redirect traffic for A/B testing, or, in our case, dynamically update the endpoint for our model-under-training, all without ever having to change the agent's core code.

- The

LLMProxyprovides a single point of control and observability for all model interactions in our system.

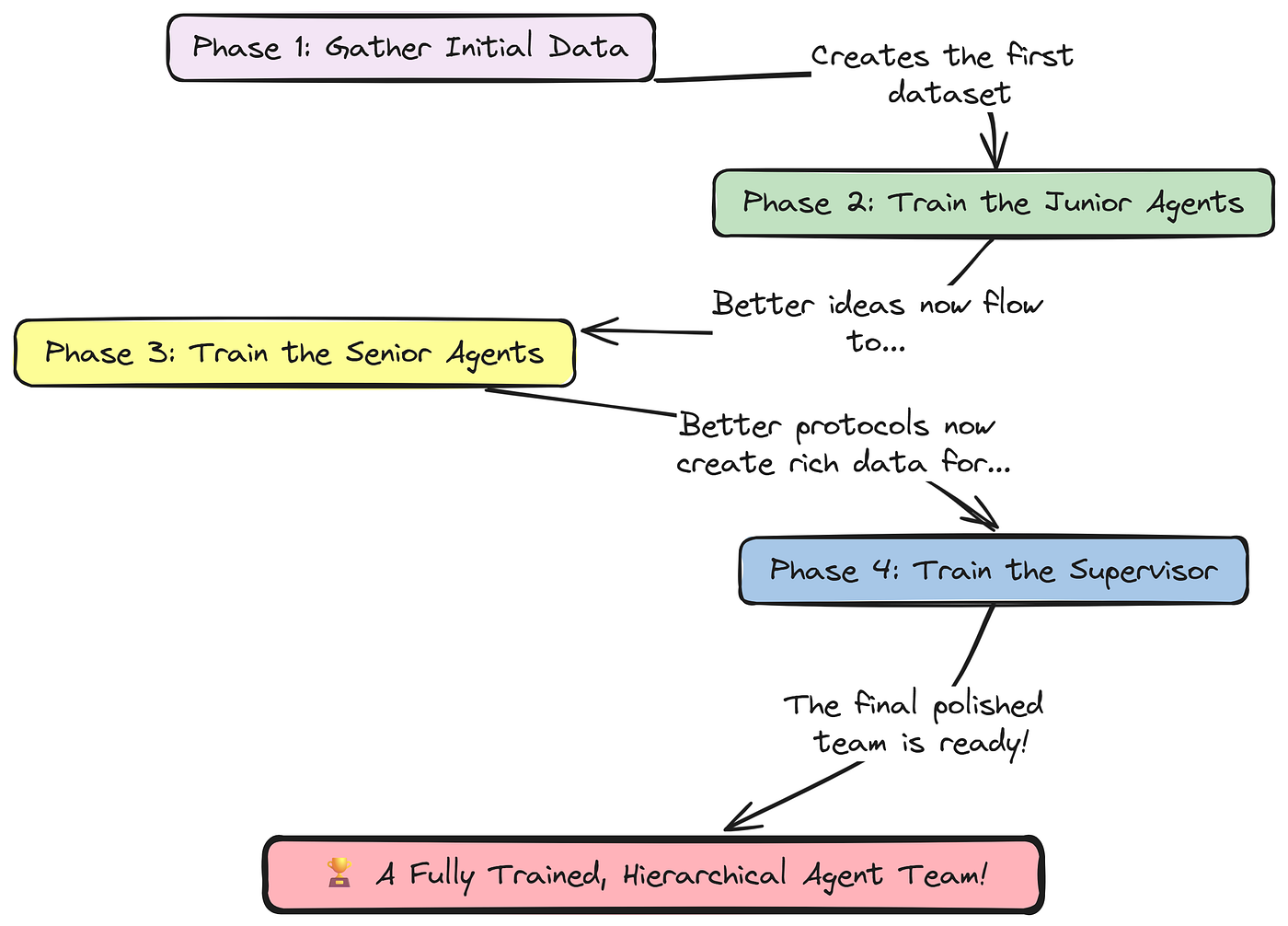

Our hierarchical training strategy presents a unique data processing challenge. We have a single, complex LangGraph trace for each rollout, but we need to feed data to three different training algorithms, each expecting a different format:

- SFT Algorithm: Needs conversational data (a list of messages) from the Junior Researchers.

- PPO Algorithm: Needs RL triplets (

state,action,reward) from the Senior Researchers. - Contextual Bandit Algorithm: Needs a single (

context,action,reward) tuple from the Supervisor's decision.

To solve this, we will build a custom, sophisticated Trace Adapter. An adapter in Agent-Lightning is a class that transforms the raw trace data (a list of spans from LangSmith) into the specific format required by a training algorithm.

Our

HierarchicalTraceAdapterwill be a multi-headed data processor, capable of producing all three required data formats from a single source trace.

We will create a new class that inherits from agl.TracerTraceToTriplet and add new methods to it, one for each of our target data formats. This demonstrates the powerful flexibility of Agent-Lightning's data pipeline.

Let’s define the HierarchicalTraceAdapter class.

from agentlightning.adapter import TraceToMessages

class HierarchicalTraceAdapter(agl.TracerTraceToTriplet):

def __init__(self, *args, **kwargs):

# We initialize the parent class for PPO triplet generation.

super().__init__(*args, **kwargs)

# We also create an instance of a standard adapter for SFT message generation.

self.message_adapter = TraceToMessages()

def adapt_for_sft(self, source: List[agl.Span]) -> List[dict]:

"""Adapts traces for Supervised Fine-Tuning by filtering for junior researchers and converting to messages."""

# Define the names of the nodes corresponding to our Junior Researcher agents.

junior_agent_names = ["Geneticist", "Pharmacologist", "Neurologist"]

# Filter the raw trace to get only the spans generated by these agents.

# LangSmith conveniently adds a 'name' field for LangGraph nodes in the span attributes.

junior_spans = [s for s in source if s.attributes.get('name') in junior_agent_names]

console.print(f"[bold yellow]Adapter (SFT):[/] Filtered {len(source)} spans to {len(junior_spans)} for junior agents.")

if not junior_spans:

return []

# Use the standard message adapter to convert these filtered spans into a conversational dataset.

return self.message_adapter.adapt(junior_spans)

def adapt_for_ppo(self, source: List[agl.Span]) -> List[agl.Triplet]:

"""Adapts traces for PPO by filtering for senior researchers and converting to triplets."""

# Define the names of the nodes for our Senior Researcher agents.

senior_agent_names = ["HypothesisRefiner", "ProtocolDesigner"]

# We configure the parent class's filter to only match these agent names.

self.agent_match = '|'.join(senior_agent_names)

# Now, when we call the parent's 'adapt' method, it will automatically filter and process only the relevant spans.

ppo_triplets = super().adapt(source)

console.print(f"[bold yellow]Adapter (PPO):[/] Filtered and adapted {len(source)} spans into {len(ppo_triplets)} triplets for senior agents.")

return ppo_triplets

def adapt_for_bandit(self, source: List[agl.Span]) -> List[tuple[list[str], int, float]]:

"""Adapts a completed rollout trace for the contextual bandit algorithm."""

# First, find the final reward for the entire rollout.

final_reward = agl.find_final_reward(source)

if final_reward is None:

return []

# Next, find the specific span where the Supervisor agent made its decision.

supervisor_span = next((s for s in source if s.attributes.get('name') == 'Supervisor'), None)

if not supervisor_span:

return []

# Then, we need to reconstruct the 'context' - the list of hypotheses the supervisor had to choose from.

junior_spans = [s for s in source if s.attributes.get('name') in ["Geneticist", "Pharmacologist", "Neurologist"]]

contexts = []

# We sort by start time to ensure the order of hypotheses is correct.

for span in sorted(junior_spans, key=lambda s: s.start_time):

try:

# In LangGraph, the agent's final JSON output is in the 'messages' attribute of the state.

output_message = span.attributes.get('output.messages')

if output_message and isinstance(output_message, list):

# The actual content is a JSON string within the AIMessage's content field.

content_str = output_message[-1].get('content', '{}')

hypothesis_data = json.loads(content_str)

contexts.append(hypothesis_data.get('hypothesis', ''))

except (json.JSONDecodeError, KeyError, IndexError):

continue

if not contexts:

return []

# Finally, extract the 'action' - the index of the hypothesis the supervisor chose.

try:

output_message = supervisor_span.attributes.get('output.messages')

if output_message and isinstance(output_message, list):

content_str = output_message[-1].get('content', '{}')

supervisor_output = json.loads(content_str)

chosen_index = supervisor_output.get('selected_hypothesis_index')

if chosen_index is not None and 0 <= chosen_index < len(contexts):

console.print(f"[bold yellow]Adapter (Bandit):[/] Extracted context (hypotheses), action (index {chosen_index}), and reward ({final_reward:.2f}).")

# Return the single data point for the bandit algorithm.

return [(contexts, chosen_index, final_reward)]

except (json.JSONDecodeError, KeyError, IndexError):

pass

return []

# Instantiate our custom adapter.

custom_adapter = HierarchicalTraceAdapter()The HierarchicalTraceAdapter is a testament to the flexibility of the Agent-Lightning data pipeline. We have created a single, powerful data processing class that serves the needs of our entire hierarchical training strategy.

- The

adapt_for_sftmethod acts as a filter, surgically extracting only the conversational turns involving our Junior Researchers and formatting them perfectly for fine-tuning. - The

adapt_for_ppomethod leverages the power of the parentTracerTraceToTripletclass, but cleverly configures it on the fly to only process spans from our Senior Researchers. - The

adapt_for_banditmethod is the most complex, it performs a forensic analysis of the entire trace, reconstructing the supervisor's decision-making moment by finding the available choices (thecontexts), the chosenaction, and the finalreward.

This adapter is the linchpin of our training architecture. It allows us to maintain a single, unified agent workflow (LangGraph) and a single data source (LangSmith traces), while still being able to apply specialized, targeted training algorithms to different components of that workflow.

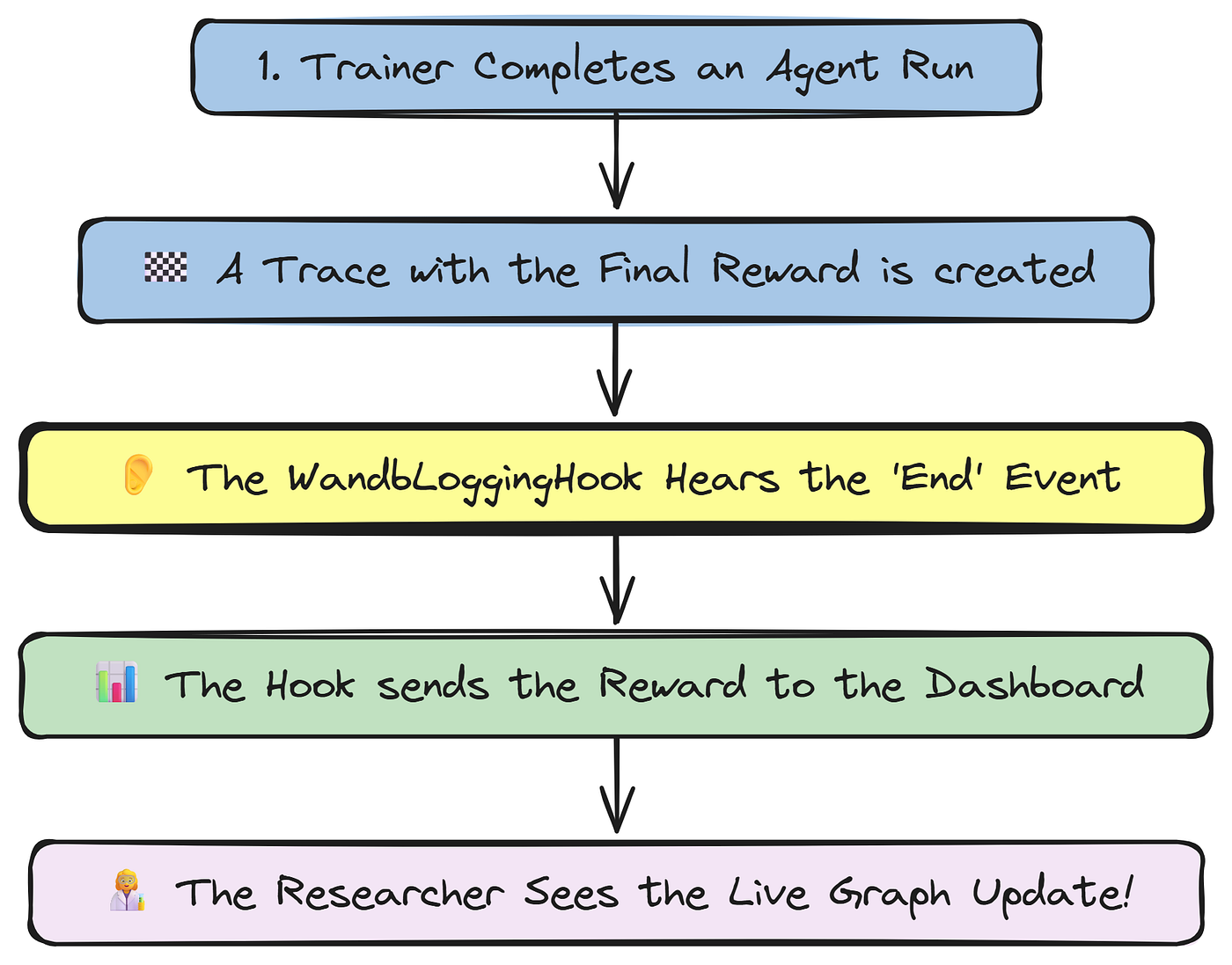

Effective training requires more than just running an algorithm; it requires real-time observability.

We need to be able to “see” our agent performance as it learns, rollout by rollout.

While LangSmith gives us deep, forensic detail on individual traces, we also need a high-level, aggregate view of our training progress.

To achieve this, we will create a custom Hook. A Hook in Agent-Lightning is a powerful mechanism that allows you to inject custom logic at various points in the training lifecycle (e.g., on_rollout_start, on_trace_end).

We will build a WandbLoggingHook that listens for the on_trace_end event. As soon as a rollout is complete and its trace is available, this hook will trigger.

It will extract the final reward from the trace and log this single, crucial metric to a Weights & Biases (W&B) project. This will give us a live, streaming plot of our agent's reward, providing an immediate and intuitive visualization of its learning curve.

Let’s define our custom hook class.

import wandb

class WandbLoggingHook(agl.Hook):

def __init__(self, project_name: str):

# We initialize the W&B run once, when the hook is created.

self.run_initialized = False

if os.environ.get("WANDB_API_KEY"):

try:

wandb.init(project=project_name, resume="allow", id=wandb.util.generate_id())

self.run_initialized = True

except Exception as e:

print(f"Failed to initialize W&B: {e}")

else:

print("W&B API Key not found. Hook will be inactive.")

async def on_trace_end(self, *, rollout: agl.Rollout, tracer: agl.Tracer, **kwargs):

"""

This method is automatically called by the Trainer at the end of every rollout.

"""

# If W&B wasn't initialized, we do nothing.

if not self.run_initialized: return

# Use a helper function to find the final reward value from the list of spans in the trace.

final_reward_value = agl.find_final_reward(tracer.get_last_trace())

# If a reward was found, log it to W&B.

if final_reward_value is not None: