Customers can virtually try on shirts, sweaters, coats, and pants by using their smartphone camera. The virtual try-on technology allows users to swipe through various colorways and patterns, ensuring that the particular article of clothing they choose is right for them.

Nowadays, people purchase most of their items online and are spending more on them, especially on fashion items, since browsing different styles and categories of clothes takes only a few mouse clicks. Despite the convenience of online shopping, customers tend to worry about how a fashion item shown on a website would actually fit them. There is therefore strong demand for a quick and simple virtual try-on solution. With recent progress in virtual try-on technologies, people can have a better online shopping experience by accurately envisioning themselves wearing clothes from online catalogs. Virtual try-on is also in demand in physical stores: with try-on technology built into a mobile application, customers can save the time they would otherwise spend in the fitting room.

• The intent of our project is to let users experience dressing virtually by using Artificial Intelligence, reducing the cost and time required to try on clothes.

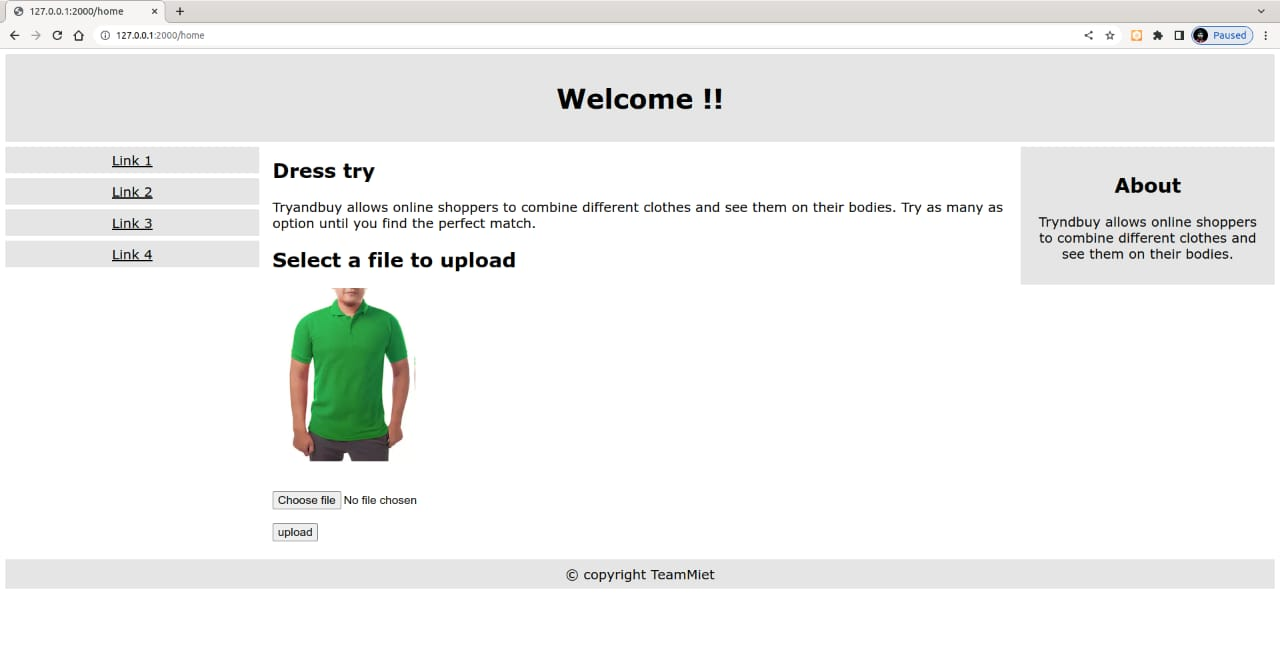

• Our application will be a simple, user-friendly web-based application.

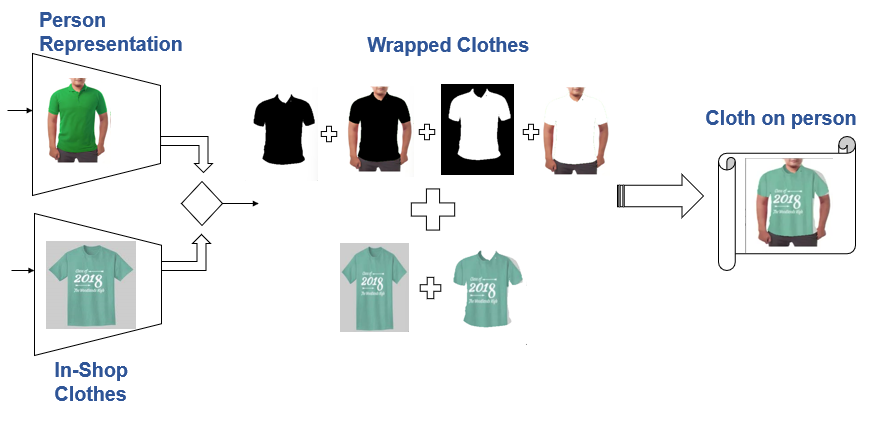

• The application starts with the user uploading a picture of themselves as the Model together with the Targeted Clothes. Our model then performs Pose Detection and Clothes Masking, and finally outputs the Model wearing the Targeted Clothes.
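The last stage of this flow, where the masked clothing region of the person image is replaced by the target garment, can be sketched in a few lines. This is only an illustration under stated assumptions, not the project's actual model: images are tiny grids of grayscale values, the clothing mask is supplied by hand rather than predicted by pose detection and clothes masking, and the function name `composite_garment` is hypothetical.

```python
def composite_garment(person, garment, clothing_mask):
    """Replace masked pixels of `person` with pixels from `garment`.

    All three arguments are equally sized 2D lists. `clothing_mask`
    holds 1 where the garment should appear and 0 elsewhere. In a real
    system the mask would come from the pose-detection and
    clothes-masking stages; here it is given directly (assumption).
    """
    height, width = len(person), len(person[0])
    if len(garment) != height or len(clothing_mask) != height:
        raise ValueError("all inputs must have the same height")

    output = []
    for y in range(height):
        if len(garment[y]) != width or len(clothing_mask[y]) != width:
            raise ValueError("all inputs must have the same width")
        # Keep the person pixel unless the mask marks this position.
        row = [
            garment[y][x] if clothing_mask[y][x] else person[y][x]
            for x in range(width)
        ]
        output.append(row)
    return output


# Toy 3x3 example: 10 = person pixel, 200 = garment pixel.
person = [[10, 10, 10] for _ in range(3)]
garment = [[200, 200, 200] for _ in range(3)]
mask = [
    [0, 0, 0],
    [1, 1, 1],
    [0, 1, 0],
]
result = composite_garment(person, garment, mask)
for row in result:
    print(row)
```

The function returns a new grid and leaves the uploaded person image unchanged, so the same photo can be reused when the user picks a different garment.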

OBJECTIVE

• To give a virtual view of the targeted clothing.

• To get an instant view of clothes on ourselves.

• To reduce the time and effort required to try on dresses.

METHODOLOGY

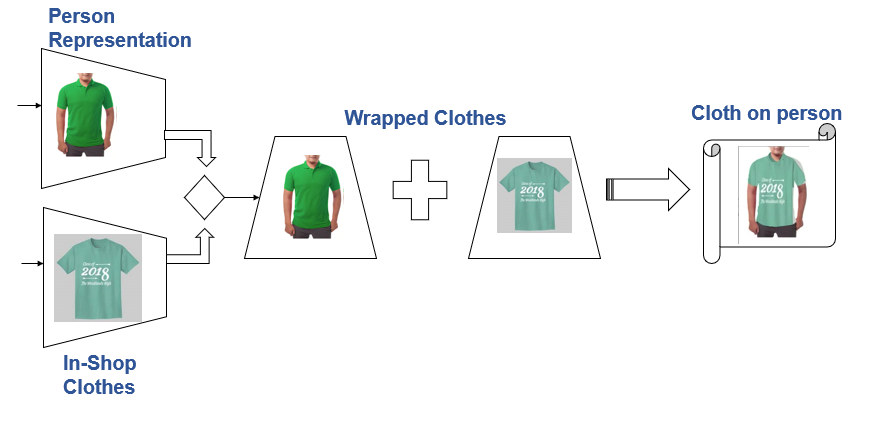

• The person representation and shop clothes are warped using the OpenCV library for Python. OpenCV-Python is a library of Python bindings designed to solve computer vision problems.

• Creating the webpage using HTML and CSS.

• Selecting the clothes of the user's choice.

• The output for the selected clothing is shown on the screen.

• An application with a user interface is developed to test the performance in practice. The user interface allows the user to choose a dress and fashion kit.
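One common way to fit a shop garment to a person image is an affine warp that maps three anchor points on the garment (for example two shoulder points and a waist point) onto the matching points found on the person. The sketch below is an assumption about how such a step could be set up, not the project's implementation. It is written in plain Python so the arithmetic is visible; the helper names `solve_affine` and `apply_affine` and the sample coordinates are hypothetical. With OpenCV-Python, `cv2.getAffineTransform` and `cv2.warpAffine` perform the equivalent computation on whole images.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve_affine(src, dst):
    """Return the 2x3 affine matrix mapping three `src` points to `dst`.

    Each output coordinate is modeled as a*x + b*y + c, and the three
    unknowns per row are solved with Cramer's rule.
    """
    base = [[float(x), float(y), 1.0] for x, y in src]
    det = _det3(base)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")

    matrix = []
    for axis in (0, 1):  # 0 solves the x' row, 1 solves the y' row
        targets = [float(point[axis]) for point in dst]
        coeffs = []
        for col in range(3):
            replaced = [row[:] for row in base]
            for i in range(3):
                replaced[i][col] = targets[i]
            coeffs.append(_det3(replaced) / det)
        matrix.append(coeffs)
    return matrix


def apply_affine(matrix, point):
    """Map a single (x, y) point through a 2x3 affine matrix."""
    x, y = point
    return tuple(row[0] * x + row[1] * y + row[2] for row in matrix)


# Hypothetical anchor points: left shoulder, right shoulder, waist.
garment_points = [(0, 0), (100, 0), (50, 120)]
person_points = [(40, 60), (140, 60), (90, 240)]

matrix = solve_affine(garment_points, person_points)
print(matrix)
print(apply_affine(matrix, (50, 60)))
```

An affine warp can only translate, scale, rotate and shear the garment. Following the folds of a body in a different pose would need a more flexible deformation, which this sketch does not attempt.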

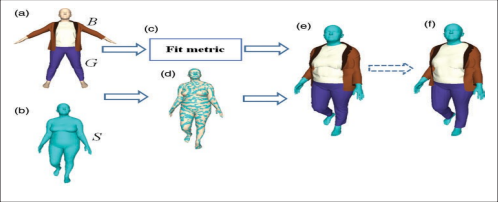

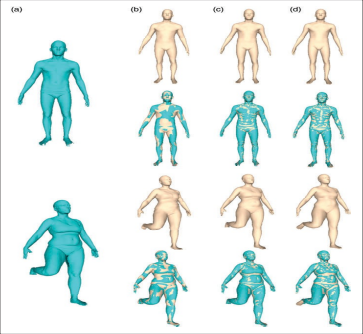

Figure 1. Overview of the proposed method: (a) given reference garments G fitted on a reference body model B; (b) input the target body model S; (c) novel clothing fit representation that accounts for the interaction between garments and the body; (d) deform the body model B to fit S via the proposed automatic approach; (e) a simple yet efficient method is proposed to redress garments Gr onto the target body S; (f) surface refinement using cloth simulation.

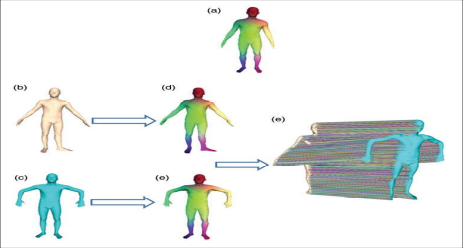

Figure 2. Method overview of 3D-CODED: (a) high-resolution template; (b) and (c) source body and target body, respectively; (d) and (e) deformed templates for (b) and (c); (f) dense correspondences between (b) and (c), visualized by drawing lines between corresponding points.

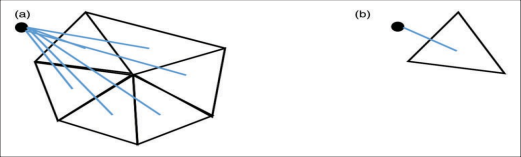

Figure 3. Fit representation: (a) our method; (b) method from Hu et al.

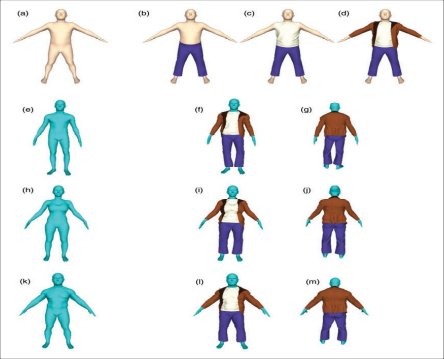

Figure 4. Redressing multi-layer garments onto bodies with the same posture: (a) a reference body; (b), (c) and (d) show each layer of clothing fitted on the reference body; (e), (h) and (k) are bodies with the same pose; the rest are the redressing results using our method.

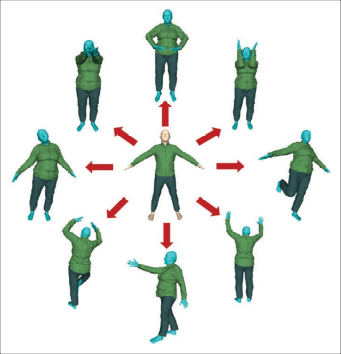

Figure 5. Redressing garments onto the same body in varying postures.

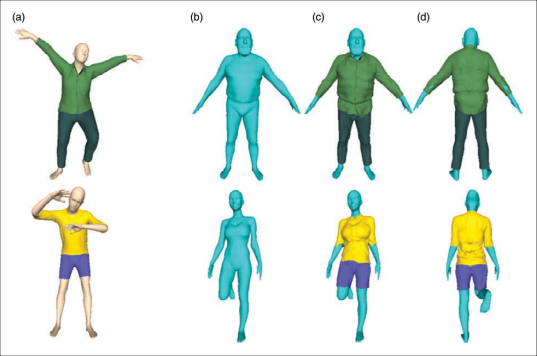

Figure 6. Redressing garments onto differently posed bodies from a non-standard reference body: (a) reference garments and bodies with non-standard poses; (b) target bodies; (c) and (d) are the garments redressed onto (b).

Figure 7. Results for wearable items including eyeglasses, a watch, shoes, a hat and a necklace, and even for hair.

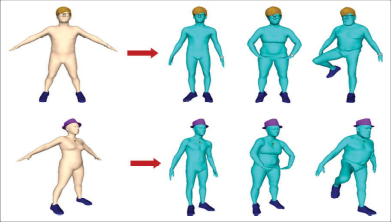

Figure 8. Comparison of body fitting methods: (a) target bodies (in blue); (b) results of Groueix et al.; (c) results of our method; (d) results of Sumner et al. The deformed templates are rendered in flesh color.

CURRENTLY WORKING:

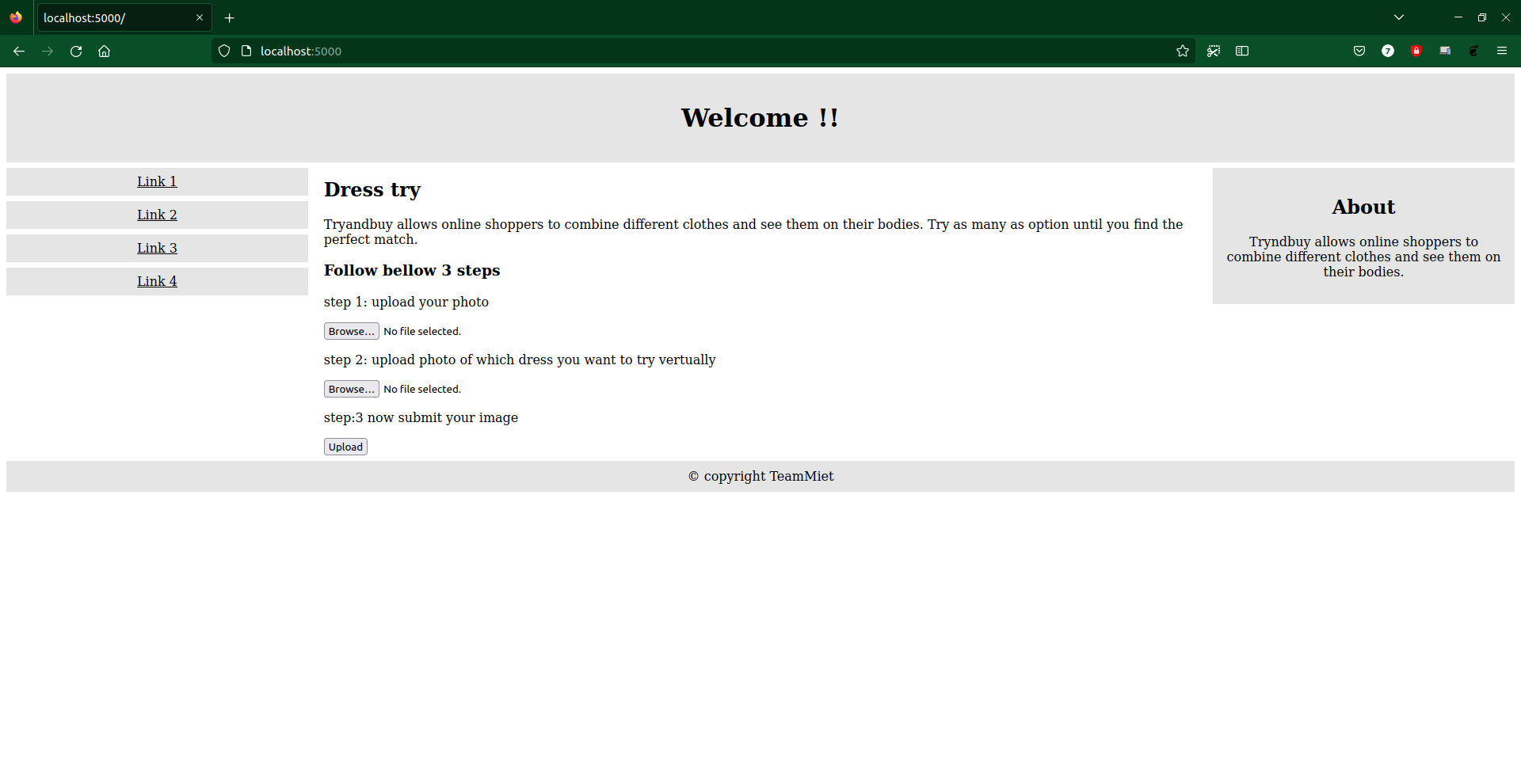

index.html page:

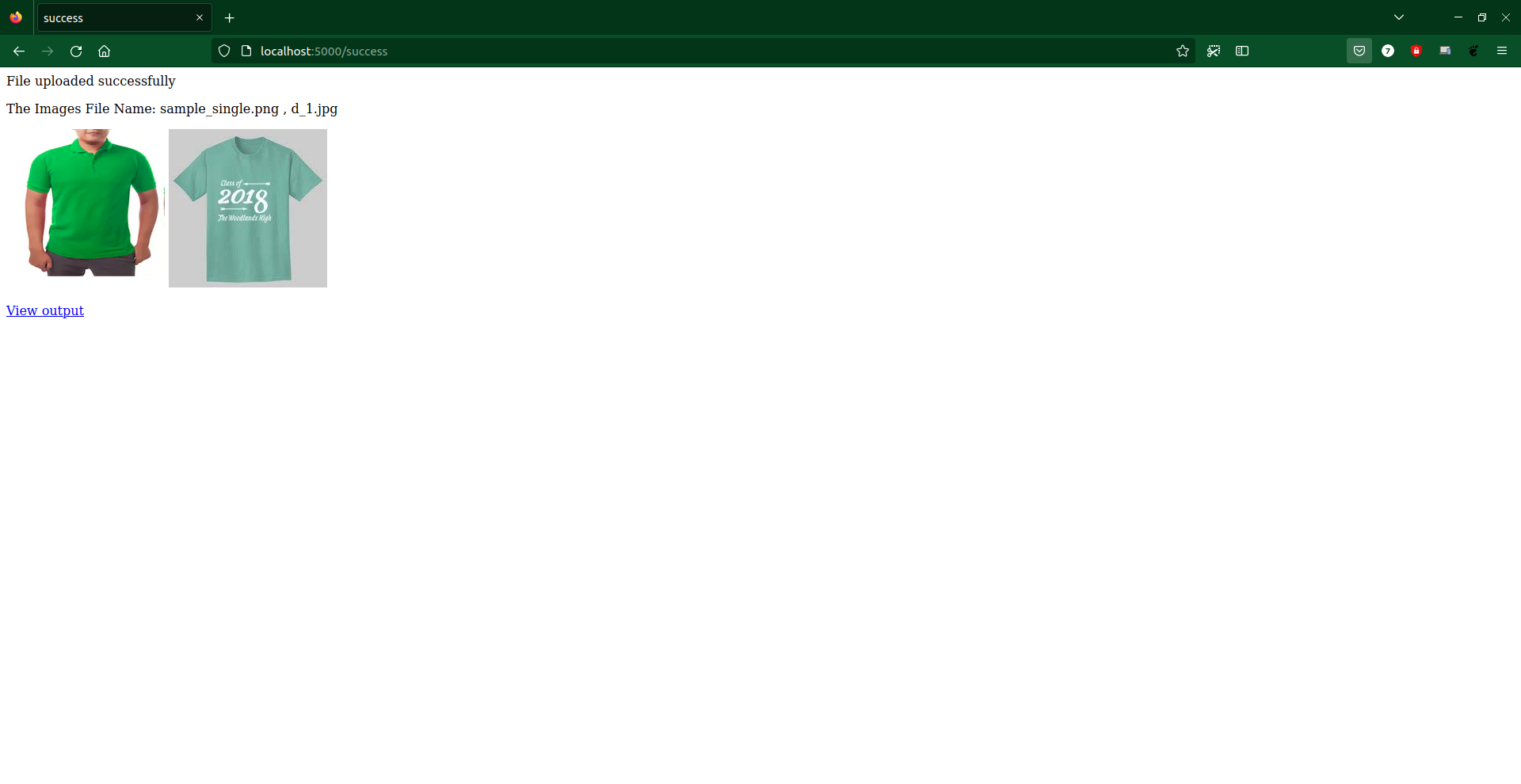

success.html page:

output.html page:

Home:

Output:

FUTURE SCOPE:

This application can be easily implemented in various situations. We can add new features as and when we require them. There is flexibility in all the modules.

1. The project currently works for clothes. It can be extended to include male garments as well.

2. The project can be improved by adding an automatic size recommendation feature for the user.

3. It also opens a window of opportunity for AI-based innovations in the future, and new approaches are already being designed to solve the open issues.

4. Another important consideration is to take the capabilities of the technology into account when choosing a suitable use case.

5. We propose a novel fully automatic method to redress 3D wearable items including garments, shoes, eyeglasses, necklaces, hats, watches and even hair, from a reference body mesh to a target body mesh.

6. To the best of our knowledge, this is the first generic method for virtual try-on of wearable items.

7. We propose a novel pipeline for automatically deforming a template body mesh to fit a target body mesh.

8. Extensive experiments show that the proposed method can be used to redress a variety of wearable items. The geometry of the redressed items depends on the shape and posture of the target body. The main limitation of our current work is that it fails on dresses. This is because our fit representation cannot assign a garment vertex to both legs, which results in the cloth tearing. Future work will address this issue.