
AlphaTablets: A Generic Plane Representation for 3D Planar Reconstruction from Monocular Videos

Yuze He, Wang Zhao, Shaohui Liu, Yubin Hu, Yushi Bai, Yu-Hui Wen, Yong-Jin Liu

NeurIPS 2024

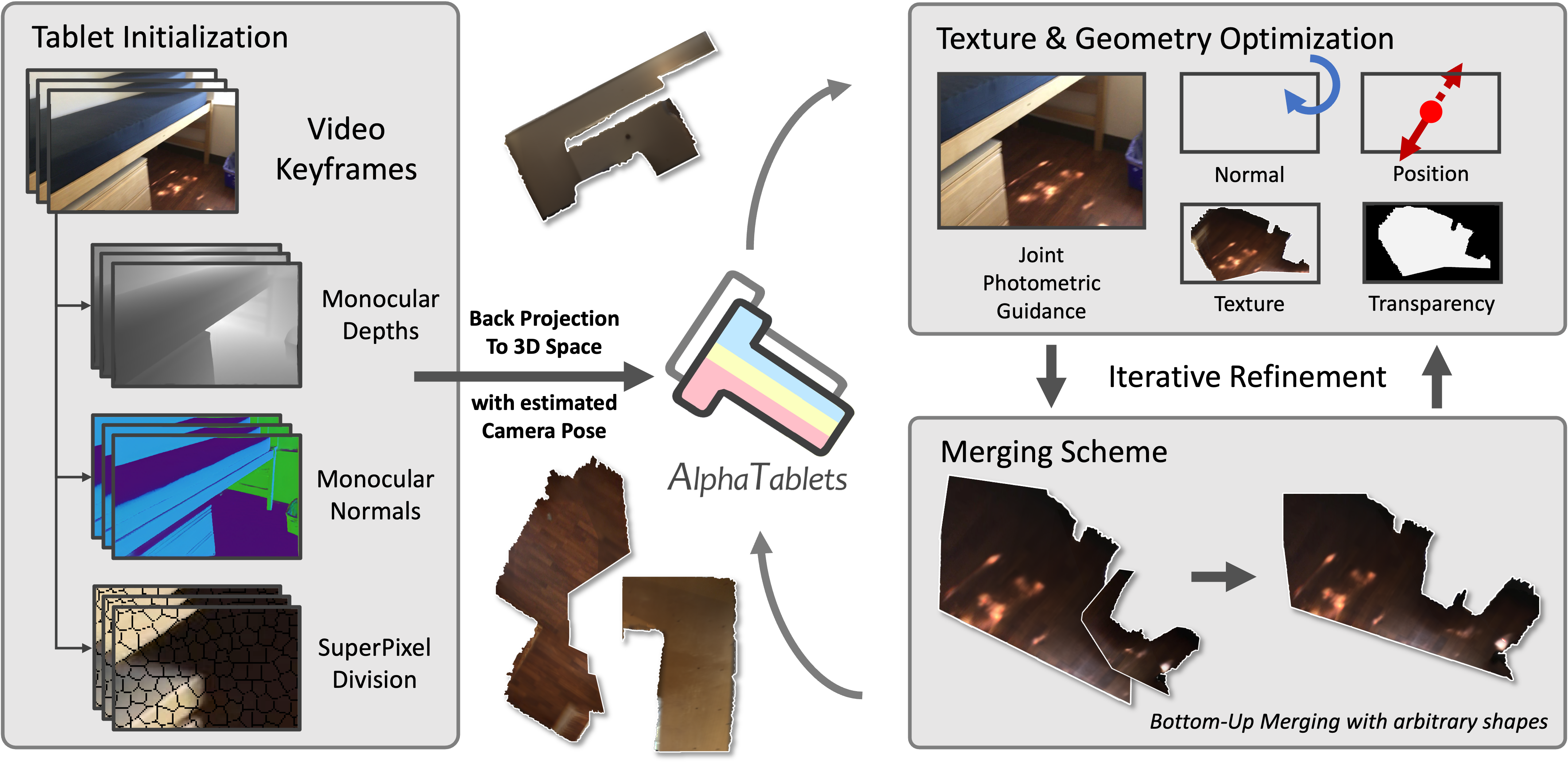

AlphaTablets is a novel and generic representation of 3D planes that features continuous 3D surfaces and precise boundary delineation. By representing 3D planes as rectangles with alpha channels, AlphaTablets combines the advantages of current 2D and 3D plane representations, enabling accurate, consistent, and flexible modeling of 3D planes.
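To make "a rectangle with an alpha channel" concrete, here is a minimal NumPy sketch of a single tablet. The class name `Tablet`, its fields (a center, two orthonormal in-plane axes, half-extents, and an alpha grid) and the nearest-neighbor alpha lookup are hypothetical choices for illustration, not the repository's actual data structure; a differentiable implementation would more likely interpolate the alpha texture bilinearly.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Tablet:
    """Hypothetical AlphaTablet: a 3D rectangle carrying an alpha texture."""
    center: np.ndarray  # (3,) rectangle center in world coordinates
    u_axis: np.ndarray  # (3,) unit vector along the rectangle's width
    v_axis: np.ndarray  # (3,) unit vector along the height, orthogonal to u_axis
    half_w: float       # half-width along u_axis
    half_h: float       # half-height along v_axis
    alpha: np.ndarray   # (H, W) opacity texture with values in [0, 1]

    @property
    def normal(self) -> np.ndarray:
        # The plane normal follows from the two in-plane axes.
        n = np.cross(self.u_axis, self.v_axis)
        return n / np.linalg.norm(n)

    def local_coords(self, p: np.ndarray) -> tuple[float, float]:
        # Project a 3D point onto the tablet's in-plane (u, v) coordinates.
        d = p - self.center
        return float(d @ self.u_axis), float(d @ self.v_axis)

    def alpha_at(self, p: np.ndarray) -> float:
        """Opacity at point p (assumed to lie on the plane); 0 outside the rectangle."""
        u, v = self.local_coords(p)
        if abs(u) > self.half_w or abs(v) > self.half_h:
            return 0.0
        h, w = self.alpha.shape
        # Map u in [-half_w, half_w] to a column and v to a row (nearest neighbor).
        col = min(int((u + self.half_w) / (2 * self.half_w) * w), w - 1)
        row = min(int((v + self.half_h) / (2 * self.half_h) * h), h - 1)
        return float(self.alpha[row, col])
```

The alpha texture is what lets a plain rectangle express an irregular plane boundary: pixels outside the true planar region simply carry zero opacity.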

We propose a novel bottom-up pipeline for 3D planar reconstruction from monocular videos. Starting with 2D superpixels and geometric cues from pre-trained models, we initialize 3D planes as AlphaTablets and optimize them via differentiable rendering. An effective merging scheme is introduced to facilitate the growth and refinement of AlphaTablets. Through iterative optimization and merging, we reconstruct complete and accurate 3D planes with solid surfaces and clear boundaries.
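The pipeline above has two geometric ingredients: lifting a 2D superpixel into a 3D plane using predicted depth, and rendering overlapping tablets with alpha blending. The sketch below illustrates both under stated assumptions: a pinhole camera with intrinsics `fx, fy, cx, cy`, a least-squares plane fit via SVD, and standard front-to-back alpha compositing along one ray. The function names and these specific techniques are assumptions for illustration; the actual AlphaTablets initialization and renderer may differ. It is written in NumPy for readability; the same arithmetic on PyTorch tensors would be differentiable.

```python
import numpy as np


def backproject(us, vs, depth, fx, fy, cx, cy):
    """Lift pixel coordinates with per-pixel depth into camera-space 3D points (pinhole model)."""
    x = (us - cx) / fx * depth
    y = (vs - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


def fit_plane(points):
    """Least-squares plane through (N, 3) points; returns (centroid, unit normal)."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return centroid, normal / np.linalg.norm(normal)


def composite_depth(depths, alphas):
    """Front-to-back alpha compositing of the tablets hit by a single ray.

    depths, alphas: 1D arrays with one entry per tablet intersection.
    Returns the alpha-weighted depth sum and the accumulated opacity;
    dividing the first by the second gives the expected depth.
    """
    order = np.argsort(depths)  # nearest tablet first
    transmittance = 1.0
    depth_acc, opacity_acc = 0.0, 0.0
    for i in order:
        weight = transmittance * alphas[i]
        depth_acc += weight * depths[i]
        opacity_acc += weight
        transmittance *= 1.0 - alphas[i]
    return depth_acc, opacity_acc
```

For example, two half-transparent tablets at depths 1 and 3 give weights 0.5 and 0.25, so the nearer tablet dominates the rendered depth, which is the behavior that lets gradients flow mostly to the visible surface.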

Make sure to clone the repository along with its submodules:

```bash
git clone --recursive https://github.com/THU-LYJ-Lab/AlphaTablets
```

Set up a Python environment and install the required packages:

```bash
conda create -n alphatablets python=3.9
conda activate alphatablets

# Install PyTorch based on your machine configuration
pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu118

# Install other dependencies
# Note: the mmcv package also requires CUDA. To avoid potential errors, set the CUDA_HOME environment variable and install a CUDA-compatible build of the library.
# Example: python -m pip install mmcv==2.2.0 -f https://download.openmmlab.com/mmcv/dist/cu118/torch2.1/index.html
pip install -r requirements.txt
```

Download Omnidata pretrained weights:

- File: `omnidata_dpt_normal_v2.ckpt`
- Link: Download Here

Place the file in the directory `./recon/third_party/omnidata/omnidata_tools/torch/pretrained_models`.

Download Metric3D pretrained weights:

- File: `metric_depth_vit_giant2_800k.pth`
- Link: Download Here

Place the file in the directory `./recon/third_party/metric3d/weight`.
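Before running the demos, it can help to confirm that the environment matches the pinned versions and that both checkpoints are where the instructions place them. The script below is a hypothetical helper, not part of the repository: it only relies on the `torch==2.1.2` / `cu118` install and the two checkpoint paths given above, and it imports `torch` lazily so the checkpoint check still works when PyTorch is missing.

```python
import importlib
from pathlib import Path

# Checkpoint locations from the instructions above, relative to the repository root.
EXPECTED_WEIGHTS = [
    Path("recon/third_party/omnidata/omnidata_tools/torch/pretrained_models/omnidata_dpt_normal_v2.ckpt"),
    Path("recon/third_party/metric3d/weight/metric_depth_vit_giant2_800k.pth"),
]


def missing_weights(repo_root="."):
    """Return the expected checkpoint paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [str(p) for p in EXPECTED_WEIGHTS if not (root / p).is_file()]


def torch_report(expected_torch="2.1.2", expected_cuda="11.8"):
    """Describe mismatches between the installed PyTorch build and the pinned versions."""
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return ["torch is not installed"]
    problems = []
    # torch.__version__ may carry a local suffix such as "+cu118".
    if not torch.__version__.startswith(expected_torch):
        problems.append(f"torch {torch.__version__} != {expected_torch}")
    if torch.version.cuda != expected_cuda:
        problems.append(f"CUDA build {torch.version.cuda} != {expected_cuda}")
    if not torch.cuda.is_available():
        problems.append("CUDA is not available to PyTorch")
    return problems


if __name__ == "__main__":
    issues = torch_report() + [f"missing: {p}" for p in missing_weights()]
    print("\n".join(issues) if issues else "environment looks OK")
```

Run it from the repository root; an empty report means the pinned PyTorch build sees a GPU and both checkpoint files are in place.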

- Download the `scene0684_01` demo scene from here and extract it to `./data/`.
- Run the demo with the following command:

  ```bash
  python run.py --job scene0684_01
  ```

- Download the `office0` demo scene from here and extract it to `./data/`.
- Run the demo using the Replica configuration:

  ```bash
  python run.py --config configs/replica.yaml --job office0
  ```

- Out-of-Memory (OOM): Reduce `batch_size` if you encounter memory issues.
- Low Frame Rate Sequences: Increase `weight_decay`, or set it to `-1` for automatic decay. The default value is `0.9`, which works well for ScanNet and Replica; it can be raised further but should not exceed `1.0`.
- Scene Scaling Issues: If the scene scale differs significantly from real-world dimensions, adjust merging parameters such as `dist_thres` (the maximum allowable distance for tablet merging).
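The tips above reduce to a few parameter rules, sketched here as hypothetical helpers. `weight_decay` must be `-1` (automatic) or at most `1.0`; the positive lower bound is our assumption. Because `dist_thres` is a length, a scene stored at `s` times its real-world scale needs the threshold scaled by the same factor. The `can_merge` test (a normal-angle check plus a point-to-plane distance under `dist_thres`) is an assumed simplification of tablet merging, not the repository's actual criterion, and its `angle_thres_deg` parameter is hypothetical.

```python
import numpy as np


def check_weight_decay(weight_decay: float) -> float:
    """Validate weight_decay: -1 means automatic decay, otherwise at most 1.0."""
    if weight_decay == -1:
        return weight_decay
    # The positive lower bound is an assumption; the README only states the upper bound.
    if not 0.0 < weight_decay <= 1.0:
        raise ValueError(f"weight_decay must be -1 or in (0, 1.0], got {weight_decay}")
    return weight_decay


def scaled_dist_thres(dist_thres: float, scene_scale: float) -> float:
    """Rescale a metric distance threshold for a scene stored at scene_scale x real size."""
    return dist_thres * scene_scale


def can_merge(center_a, normal_a, center_b, normal_b, dist_thres, angle_thres_deg=10.0):
    """Hypothetical merge test for two tablets given their centers and unit normals."""
    normal_a = np.asarray(normal_a, dtype=float)
    normal_b = np.asarray(normal_b, dtype=float)
    # Normals of the same plane may point in opposite directions, so compare |cos|.
    cos_angle = abs(float(normal_a @ normal_b))
    if cos_angle < np.cos(np.deg2rad(angle_thres_deg)):
        return False
    # Symmetric point-to-plane distance between each center and the other tablet's plane.
    offset = np.asarray(center_b, dtype=float) - np.asarray(center_a, dtype=float)
    dist = max(abs(float(offset @ normal_a)), abs(float(offset @ normal_b)))
    return dist <= dist_thres
```

For instance, if a reconstruction comes out ten times larger than the real scene, a threshold tuned for metric scenes would be multiplied by ten with `scaled_dist_thres(dist_thres, 10.0)`; otherwise nearly coplanar tablets would be judged too far apart to merge.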

- Download and Extract ScanNet: Follow the instructions provided on the ScanNet website to download and extract the dataset.

- Prepare the Data: Use the data preparation scripts to parse the raw ScanNet data into a processed pickle format and generate ground-truth planes using code modified from PlaneRCNN and PlanarRecon.

  Run the following commands under the PlanarRecon environment:

  ```bash
  python tools/generate_gt.py --data_path PATH_TO_SCANNET --save_name planes_9/ --window_size 9 --n_proc 2 --n_gpu 1
  python tools/prepare_inst_gt_txt.py --val_list PATH_TO_SCANNET/scannetv2_val.txt --plane_mesh_path ./planes_9
  ```

- Process Scenes in the Validation Set: Run the following command for each scene in the validation set, replacing `scene????_??` with the specific scene name (train/val/test split information is available here):

  ```bash
  python run.py --job scene????_?? --input_dir PATH_TO_SCANNET/scene????_??
  ```

- Run the Test Script: Finally, execute the test script to evaluate the processed data:

  ```bash
  python test.py
  ```
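Rather than editing the per-scene `run.py` command by hand for every validation scene, the loop can be scripted. This is a hypothetical convenience script, not part of the repository: it assumes `scannetv2_val.txt` lists one scene name per line (the same file passed to `--val_list` above) and reuses only the `--job` and `--input_dir` flags shown in this README. With `dry_run=True` it only returns the commands it would run.

```python
import subprocess
import sys
from pathlib import Path


def read_scene_list(split_file):
    """Read scene names (one per line, e.g. scene0011_00), skipping blank lines."""
    lines = Path(split_file).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def build_command(scene, scannet_root):
    """Per-scene command: run.py --job <scene> --input_dir <root>/<scene>."""
    return [sys.executable, "run.py", "--job", scene,
            "--input_dir", str(Path(scannet_root) / scene)]


def process_split(scannet_root, split_name="scannetv2_val.txt", dry_run=True):
    """Run (or, with dry_run=True, only collect) run.py for every scene in the split."""
    scenes = read_scene_list(Path(scannet_root) / split_name)
    commands = [build_command(s, scannet_root) for s in scenes]
    if not dry_run:
        for cmd in commands:
            # check=True stops at the first failing scene so errors are not hidden.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Usage (hypothetical script name): python process_val.py PATH_TO_SCANNET
    cmds = process_split(sys.argv[1], dry_run=False)
    print(f"processed {len(cmds)} scenes")
```

Run it from the repository root so that `run.py` resolves, then execute `python test.py` once all scenes are processed.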

If you find our work useful, please kindly cite:

```bibtex
@article{he2024alphatablets,
  title={AlphaTablets: A Generic Plane Representation for 3D Planar Reconstruction from Monocular Videos},
  author={Yuze He and Wang Zhao and Shaohui Liu and Yubin Hu and Yushi Bai and Yu-Hui Wen and Yong-Jin Liu},
  journal={arXiv preprint arXiv:2411.19950},
  year={2024}
}
```

Some of the test code and the installation guide in this repo are borrowed from NeuralRecon, PlanarRecon, and ParticleSfM! We sincerely thank them all.