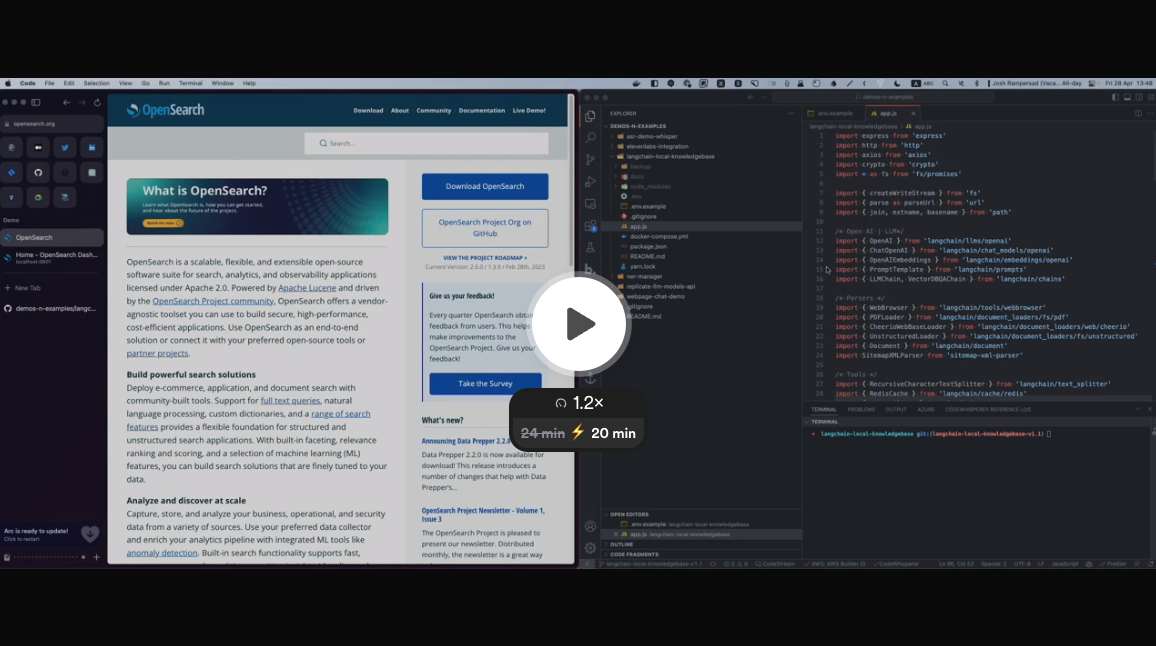

This code uses OpenAI GPT, LangChain, Redis (as a cache), OpenSearch and Unstructured to fetch content from URLs, sitemaps, PDFs, PowerPoint files, Notion documents and images, create embeddings (vectors) from it and save them in a local OpenSearch database. The resulting collections can then be used with GPT to answer questions.
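Before embedding, documents are split into overlapping chunks, controlled by the `chunkSize` and `chunkOverlap` parameters of `/api/add`. The sketch below illustrates the idea with a plain fixed-size character window. It is a simplification, not the splitter this project uses (LangChain's `RecursiveCharacterTextSplitter`, for example, prefers to break on separators such as blank lines and newlines), and `chunkText` is a hypothetical helper.

```javascript
// Hypothetical helper: split text into fixed-size, overlapping character windows.
// Simplified illustration only; it ignores paragraph and sentence boundaries.
function chunkText(text, chunkSize = 2000, chunkOverlap = 250) {
  if (chunkOverlap >= chunkSize) {
    // With overlap >= size the window would never advance.
    throw new Error("chunkOverlap must be smaller than chunkSize");
  }
  const step = chunkSize - chunkOverlap; // distance between window starts
  const chunks = [];
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once a window has reached the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}
```

For example, `chunkText("abcdefghij", 4, 1)` returns `["abcd", "defg", "ghij"]`: each chunk repeats the last character of the previous one, so text cut at a boundary still appears intact in at least one chunk's context.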

You need Node.js 18+ to run this code. You can download it here: https://nodejs.org/en/download/

First, copy `.env.example` to `.env` and set the required environment variables:

```sh
cp .env.example .env
```

To create the containers, install the required dependencies and launch the server, run:

```sh
yarn build
```

This should create the following containers:

- `redis` (cache)
- `unstructured` (handles images, PPT, text and Markdown)
- `opensearch` (search engine)
- `opensearch-dashboards` (search engine dashboard)

The OpenSearch dashboard can be accessed at http://localhost:5601.

Install dependencies and start the server (app.js)

The server will listen on the port specified in the .env file (default is 3000).
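A minimal sketch of how a script could work out the server's address. It assumes the port variable in `.env` is named `PORT` (check `.env.example` for the actual name) and that `.env` has already been loaded into `process.env`, for example with the dotenv package. `resolvePort` and `localBaseUrl` are hypothetical helpers.

```javascript
// Hypothetical helpers. ASSUMPTION: the port variable is called PORT.
const DEFAULT_PORT = 3000; // documented default

function resolvePort(env = process.env) {
  const raw = env.PORT;
  if (raw === undefined || raw.trim() === "") return DEFAULT_PORT;
  const port = Number(raw);
  // Fail loudly on values that cannot be a TCP port.
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT value: "${raw}"`);
  }
  return port;
}

function localBaseUrl(env = process.env) {
  return `http://localhost:${resolvePort(env)}`;
}
```

With `PORT` unset, `localBaseUrl()` returns `"http://localhost:3000"`.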

GET /api/health
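A minimal sketch of a health check from Node.js 18+, which ships a global `fetch`. `checkHealth` is a hypothetical helper; its optional `fetchImpl` parameter only exists so the function can be exercised without a running server.

```javascript
// Hypothetical helper: resolves to true when GET /api/health reports success.
async function checkHealth(baseUrl, fetchImpl = fetch) {
  try {
    const res = await fetchImpl(`${baseUrl}/api/health`);
    if (!res.ok) return false; // non-2xx status
    const body = await res.json();
    // Documented success payload: { "success": true, "message": "Server is healthy" }
    return body.success === true;
  } catch {
    return false; // network error (server not running) or non-JSON body
  }
}
```

For example: `checkHealth("http://localhost:3000").then(console.log)`.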

200 OK on success:

```json
{
  "success": true,
  "message": "Server is healthy"
}
```

GET /api/clearcache

200 OK on success:

```json
{
  "success": true,
  "message": "Cache cleared"
}
```

POST /api/add
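A minimal client sketch for this endpoint that applies the defaults listed in the request body below before sending. `buildAddRequest` and `addToCollection` are hypothetical helpers. The client-side checks (required fields, overlap smaller than chunk size) are assumptions about sensible input, not validation the server is known to perform; `sleep` is only sent when given, since its default and unit are not documented.

```javascript
// Hypothetical helper: build the POST /api/add body with the documented defaults.
function buildAddRequest({ url, collection, filter = null, limit = null,
  chunkSize = 2000, chunkOverlap = 250, sleep }) {
  if (!url || !collection) throw new Error("url and collection are required");
  if (chunkOverlap >= chunkSize) {
    throw new Error("chunkOverlap must be smaller than chunkSize");
  }
  const body = { url, collection, filter, limit, chunkSize, chunkOverlap };
  if (sleep !== undefined) body.sleep = sleep; // default and unit not documented
  return body;
}

// Hypothetical helper: send the request (global fetch in Node.js 18+).
async function addToCollection(baseUrl, options, fetchImpl = fetch) {
  const res = await fetchImpl(`${baseUrl}/api/add`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildAddRequest(options)),
  });
  if (!res.ok) throw new Error(`POST /api/add failed with status ${res.status}`);
  return res.json(); // documented: { "response": "added", "collection": "..." }
}
```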

Request body:

```jsonc
{
  "url": "https://www.example.com/sitemap.xml", // required: URL of the sitemap
  "collection": "collection_name", // required: name of the collection to populate
  "filter": "filter", // defaults to null; used to filter URLs by this string (e.g. "/blog/")
  "limit": 10, // defaults to null
  "chunkSize": 2000, // defaults to 2000
  "chunkOverlap": 250, // defaults to 250
  "sleep": 0 // for sitemaps, time to wait between URLs
}
```

200 OK on success:

```json
{
  "response": "added",
  "collection": "collection_name"
}
```

DELETE /api/collection
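A minimal sketch of deleting a collection. The collection name travels in a JSON request body, which `fetch` in Node.js 18+ allows for `DELETE`; note that RFC 9110 gives a `DELETE` body no generally defined semantics, so some proxies or HTTP clients may drop or reject it. `deleteCollection` is a hypothetical helper.

```javascript
// Hypothetical helper: DELETE /api/collection with the name in the JSON body.
async function deleteCollection(baseUrl, collection, fetchImpl = fetch) {
  if (!collection) throw new Error("collection is required");
  const res = await fetchImpl(`${baseUrl}/api/collection`, {
    method: "DELETE",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ collection }),
  });
  if (!res.ok) {
    throw new Error(`DELETE /api/collection failed with status ${res.status}`);
  }
  // Documented success payload: { "success": true, "message": "... has been deleted" }
  return res.json();
}
```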

Request body:

```jsonc
{
  "collection": "collection_name" // required: name of the collection to delete
}
```

200 OK on success:

```json
{
  "success": true,
  "message": "{collection_name} has been deleted"
}
```

POST /api/live
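A minimal sketch of asking a one-off question about a single web page, using the request fields documented below. `askLive` is a hypothetical helper. The range check on `temperature` assumes the value is passed through to the OpenAI API, which accepts 0 to 2; this README does not say how the server handles it.

```javascript
// Hypothetical helper: POST /api/live and return the answer text.
async function askLive(baseUrl, { url, question, temperature = 0 }, fetchImpl = fetch) {
  if (!url || !question) throw new Error("url and question are required");
  // ASSUMPTION: temperature is forwarded to OpenAI, whose documented range is 0 to 2.
  if (typeof temperature !== "number" || temperature < 0 || temperature > 2) {
    throw new Error("temperature must be a number between 0 and 2");
  }
  const res = await fetchImpl(`${baseUrl}/api/live`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ url, question, temperature }),
  });
  if (!res.ok) throw new Error(`POST /api/live failed with status ${res.status}`);
  const { response } = await res.json(); // documented: { "response": "..." }
  return response;
}
```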

Request body:

```jsonc
{
  "url": "https://www.example.com", // required: URL of the webpage
  "question": "Your question", // required: the question to ask
  "temperature": 0 // defaults to 0
}
```

200 OK on success:

```json
{
  "response": "response_text"
}
```

POST /api/question
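A minimal sketch of querying a collection and printing the answer together with its sources. `askCollection` and `formatAnswer` are hypothetical helpers; the defaults mirror those documented in the request body below, and any value the caller passes (for example `k: 5`) overrides them.

```javascript
// Documented defaults for POST /api/question.
const QUESTION_DEFAULTS = { model: "gpt-3.5-turbo", k: 3, temperature: 0, max_tokens: 400 };

// Hypothetical helper: send the question and return { response, sources }.
async function askCollection(baseUrl, { question, collection, ...overrides }, fetchImpl = fetch) {
  if (!question || !collection) throw new Error("question and collection are required");
  const body = { ...QUESTION_DEFAULTS, ...overrides, question, collection };
  const res = await fetchImpl(`${baseUrl}/api/question`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`POST /api/question failed with status ${res.status}`);
  return res.json(); // documented: { "response": "...", "sources": [...] }
}

// Hypothetical helper: answer text followed by a numbered list of sources.
function formatAnswer({ response, sources = [] }) {
  const lines = [response];
  sources.forEach((src, i) => lines.push(`[${i + 1}] ${src}`));
  return lines.join("\n");
}
```

For example: `askCollection("http://localhost:3000", { question: "What is X?", collection: "docs" }).then((r) => console.log(formatAnswer(r)))`.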

Request body:

```jsonc
{
  "question": "your question", // required: the question to ask
  "collection": "collection_name", // required: name of the collection to search
  "model": "model_name", // defaults to gpt-3.5-turbo
  "k": 3, // defaults to 3 (maximum number of search results to use)
  "temperature": 0, // defaults to 0
  "max_tokens": 400 // defaults to 400
}
```

200 OK on success:

```json
{
  "response": "response_text",
  "sources": ["source1", "source2"]
}
```

To make the app reachable from outside your machine on the port set in the `.env` file, you can use ngrok. Follow the steps below:

- Install ngrok: https://ngrok.com/download
- Run `ngrok http <port>` in your terminal (replace `<port>` with the port set in your `.env` file).
- Copy the ngrok URL generated by the command and use it in your Voiceflow Assistant API step.

This can be handy if you want to quickly test the API from an API step in your Voiceflow Assistant.