[IEEE TIM'24] CSBSR: Joint Learning of Blind Super-Resolution and Crack Segmentation for Realistic Degraded Images

Toyota Technological Institute

IEEE Transactions on Instrumentation and Measurement (TIM) 2024

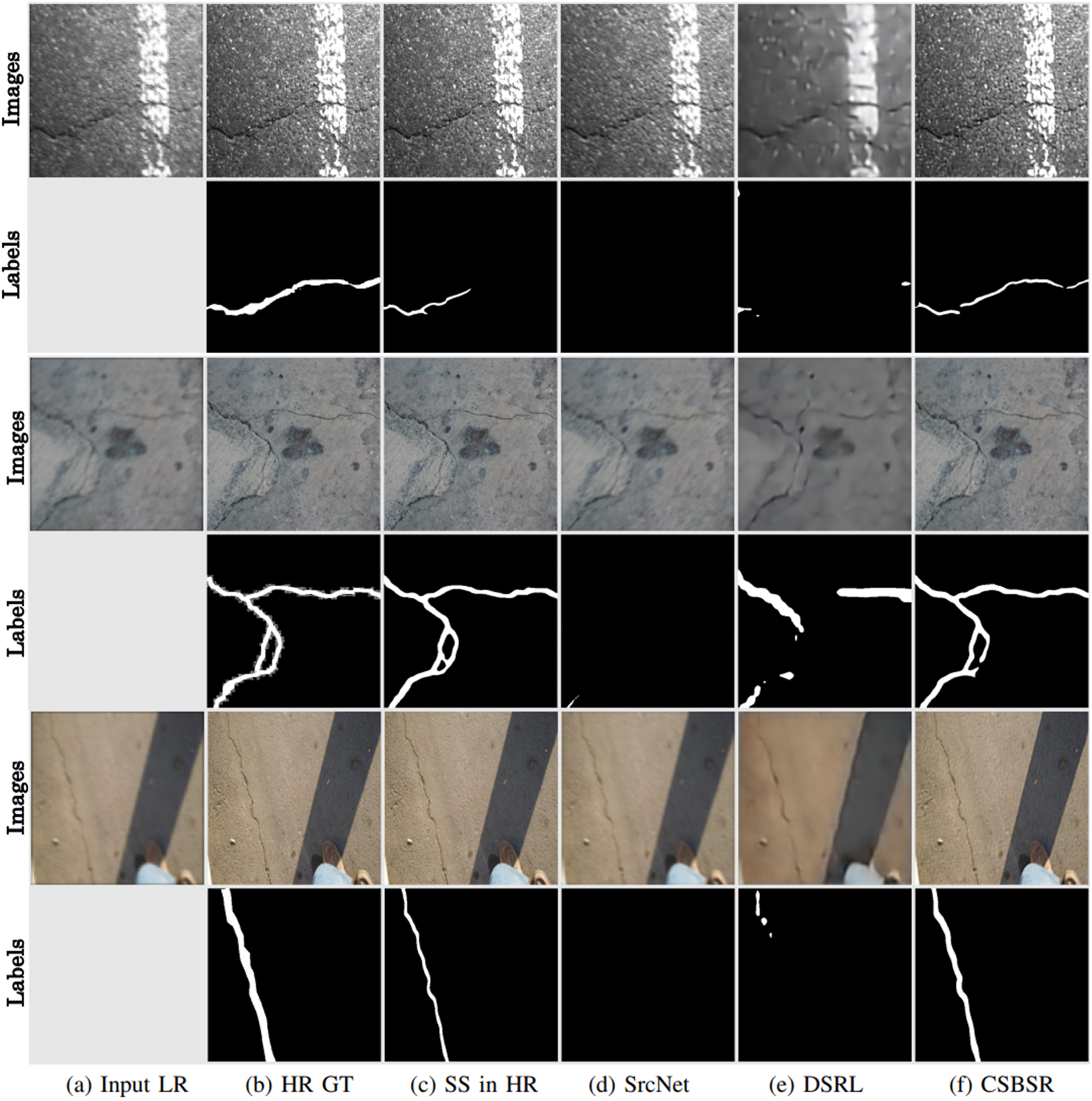

Challenging crack segmentation examples on synthetically degraded images (low resolution and anisotropic Gaussian blur). Our method, CSBSR (f), detects cracks in finer detail than previous methods (d) and (e). In several cases, it detects cracks as well as segmentation performed on the ground-truth (GT) high-resolution images (c), even though it infers from the degraded images.
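The synthetic degradation mentioned in the caption (anisotropic Gaussian blur combined with reduced resolution) can be illustrated with the minimal, dependency-free Python sketch below. It is not the repository's degradation pipeline: the kernel parameterization (two standard deviations plus a rotation angle), the replicate padding, the blur-then-subsample order, and the plain strided subsampling are all assumptions, and every function name is hypothetical.

```python
import math


def anisotropic_gaussian_kernel(size, sigma_x, sigma_y, theta):
    """Return a normalized size x size kernel as a list of rows.

    Assumed parameterization: covariance = R diag(sigma_x^2, sigma_y^2) R^T,
    where R is a rotation by theta radians.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    c, s = math.cos(theta), math.sin(theta)
    # Covariance entries [[a, b], [b, d]]
    a = c * c * sigma_x ** 2 + s * s * sigma_y ** 2
    b = c * s * (sigma_x ** 2 - sigma_y ** 2)
    d = s * s * sigma_x ** 2 + c * c * sigma_y ** 2
    det = a * d - b * b
    # Inverse covariance entries
    inv_a, inv_b, inv_d = d / det, -b / det, a / det
    r = size // 2
    kernel = []
    for y in range(-r, r + 1):
        row = []
        for x in range(-r, r + 1):
            q = inv_a * x * x + 2 * inv_b * x * y + inv_d * y * y
            row.append(math.exp(-0.5 * q))
        kernel.append(row)
    total = sum(sum(row) for row in kernel)
    # Normalize so the blur preserves overall brightness
    return [[v / total for v in row] for row in kernel]


def blur(img, kernel):
    """Filter a 2D list with replicate padding at the borders.

    This is correlation; it equals convolution here because the Gaussian
    kernel is point-symmetric.
    """
    h, w = len(img), len(img[0])
    r = len(kernel) // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for ki in range(-r, r + 1):
                for kj in range(-r, r + 1):
                    ii = min(max(i + ki, 0), h - 1)
                    jj = min(max(j + kj, 0), w - 1)
                    acc += kernel[ki + r][kj + r] * img[ii][jj]
            row.append(acc)
        out.append(row)
    return out


def degrade(img, kernel, scale):
    """Blur, then keep every `scale`-th pixel (assumed plain subsampling)."""
    blurred = blur(img, kernel)
    return [row[::scale] for row in blurred[::scale]]


if __name__ == "__main__":
    k = anisotropic_gaussian_kernel(7, sigma_x=2.0, sigma_y=0.5, theta=math.pi / 4)
    hr = [[float((i + j) % 2) for j in range(16)] for i in range(16)]
    lr = degrade(hr, k, scale=4)
    print(len(lr), len(lr[0]))  # 4 4
```

Randomizing `sigma_x`, `sigma_y`, and `theta` per image is one common way to simulate the "unknown blur" that a blind SR network must handle; the actual sampling ranges used for the paper are not given here.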

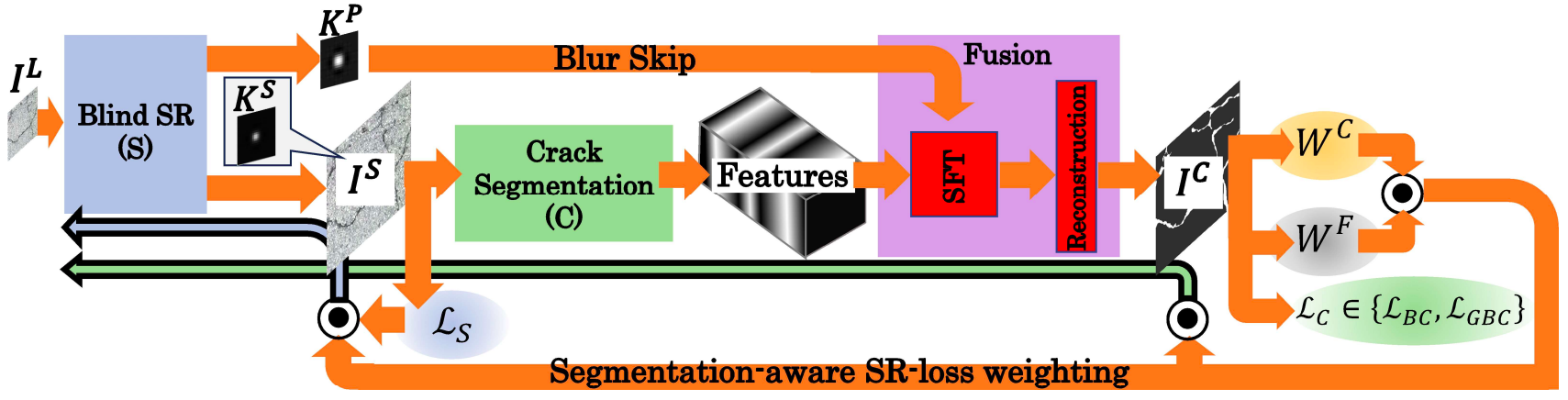

Proposed joint learning network with blind SR and segmentation.

This paper proposes crack segmentation augmented by super-resolution (SR) with deep neural networks. In the proposed method, an SR network is jointly trained with a binary segmentation network in an end-to-end manner. This joint learning allows the SR network to be optimized specifically to improve segmentation results. For realistic scenarios, the SR network is extended from non-blind to blind SR so that it can process low-resolution images degraded by unknown blurs. The joint network is further improved by two additional paths that we propose to encourage mutual optimization between SR and segmentation. Comparative experiments with state-of-the-art segmentation methods demonstrate the superiority of our joint learning, and various ablation studies confirm the effects of our contributions.
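The end-to-end joint training described above can be sketched as a single weighted objective whose segmentation term also depends on the SR output. The pure-Python sketch below assumes a convex combination (1 - β)·L_SR + β·L_seg with an L1 loss for SR and binary cross-entropy for segmentation; these loss choices, the exact way β enters, and all function names are hypothetical and may differ from the paper and from how the β values in the results table are defined.

```python
import math


def l1_loss(pred, target):
    """Mean absolute error between two flat lists of pixel values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def bce_loss(prob, mask, eps=1e-7):
    """Mean binary cross-entropy between crack probabilities and a 0/1 mask."""
    total = 0.0
    for p, m in zip(prob, mask):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= m * math.log(p) + (1 - m) * math.log(1.0 - p)
    return total / len(prob)


def joint_loss(sr, hr, seg_prob, gt_mask, beta):
    """Hypothetical joint objective: (1 - beta) * L_SR + beta * L_seg.

    sr/hr: super-resolved and ground-truth HR pixels (flat lists).
    seg_prob/gt_mask: crack probabilities predicted on the SR image, and the GT mask.
    """
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return (1.0 - beta) * l1_loss(sr, hr) + beta * bce_loss(seg_prob, gt_mask)


if __name__ == "__main__":
    hr = [0.2, 0.4, 0.6, 0.8]
    sr = [0.25, 0.35, 0.6, 0.9]
    gt = [0, 1, 1, 0]
    prob = [0.1, 0.8, 0.7, 0.2]
    print(joint_loss(sr, hr, prob, gt, beta=0.3))
```

In a real training loop these would be tensors in an autograd framework. Because the segmentation network consumes the SR output, the gradient of the β-weighted segmentation term also reaches the SR weights, which is what lets the SR network be optimized for segmentation.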

git clone git@github.com:Yuki-11/CSBSR.git

cd CSBSR

virtualenv env

source env/bin/activate

pip install -r requirement.txt

Due to a temporary issue with wandb, pin the version of protobuf:

pip install protobuf==3.20.1

Download the model weights from here and save them under weights/. The performance of the released models is as follows:

| Model | IoU<sub>max</sub> | AIU | HD95<sub>min</sub> | AHD95 | PSNR (dB) | SSIM | Link |
|---|---|---|---|---|---|---|---|
| CSBSR w/ PSPNet (β=0.3) | 0.573 | 0.552 | 20.92 | 22.52 | 28.75 | 0.703 | here |
| CSBSR w/ HRNet+OCR (β=0.9) | 0.553 | 0.534 | 17.54 | 20.29 | 27.66 | 0.668 | here |
| CSBSR w/ CrackFormer (β=0.9) | 0.469 | 0.443 | 39.37 | 56.59 | 25.93 | 0.571 | here |
| CSBSR w/ U-Net (β=0.3) | 0.530 | 0.506 | 26.33 | 27.24 | 28.68 | 0.702 | here |
| CSSR w/ PSPNet (β=0.7) | 0.557 | 0.539 | 21.20 | 24.74 | 28.35 | 0.656 | here |
| CSBSR w/ PSPNet (β=0.3)+wF (mF=1) | 0.573 | 0.551 | 18.73 | 21.7 | 28.73 | 0.702 | here |
| CSBSR w/ PSPNet (β=0.3)+wF (mF=1)+BlurSkip | 0.550 | 0.528 | 18.06 | 19.1 | 28.65 | 0.702 | here |
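The IoU-based columns and PSNR in the table can be illustrated with the simplified sketch below. It binarizes one predicted crack-probability map at many thresholds, reports the maximum IoU (IoU_max) and the mean IoU over thresholds (AIU), and computes PSNR for an SR output. The threshold grid (0.01 to 0.99 in steps of 0.01), the `>=` binarization rule, the empty-mask convention, and the per-image computation are assumptions; the sketch does not reproduce the repository's evaluation code or how scores are aggregated over the test set, and HD95/AHD95 are omitted.

```python
import math


def iou(pred_mask, gt_mask):
    """IoU of two flat 0/1 lists; 1.0 if both are empty (assumed convention)."""
    inter = sum(1 for p, g in zip(pred_mask, gt_mask) if p and g)
    union = sum(1 for p, g in zip(pred_mask, gt_mask) if p or g)
    return 1.0 if union == 0 else inter / union


def iou_max_and_aiu(prob, gt_mask, thresholds=None):
    """Return (IoU_max, AIU) for one probability map over a threshold grid."""
    if thresholds is None:
        # Assumed grid: 0.01, 0.02, ..., 0.99
        thresholds = [t / 100 for t in range(1, 100)]
    ious = [iou([1 if p >= t else 0 for p in prob], gt_mask) for t in thresholds]
    return max(ious), sum(ious) / len(ious)


def psnr(sr, hr, max_val=1.0):
    """PSNR in dB between two flat lists of pixel values in [0, max_val]."""
    mse = sum((a - b) ** 2 for a, b in zip(sr, hr)) / len(sr)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


if __name__ == "__main__":
    prob = [0.9, 0.8, 0.1, 0.2]
    gt = [1, 1, 0, 0]
    print(iou_max_and_aiu(prob, gt))
    print(psnr([0.0], [0.1]))
```

IoU_max rewards the single best threshold, while AIU also penalizes predictions whose quality depends heavily on the threshold choice, which is why both are reported.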

Download the crack segmentation dataset by khanhha from here and extract it under datasets/. For more details, refer to khanhha's repository.

Additionally, download our degraded test set from here and extract it under datasets/crack_segmentation_dataset. The final directory structure should look like this:

├── datasets
│   └── crack_segmentation_dataset
│       ├── images
│       ├── masks
│       ├── readme
│       ├── test
│       ├── test_blurred
│       └── train

Run the following command for testing:

python test.py weights/CSBSR_w_PSPNet_beta03 latest --sf_save_image

Run the following command for training:

python train.py --config_file config/config_csbsr_pspnet.yaml

To resume training from a checkpoint, use the following command:

python train.py --config_file output/CSBSR/model_compe/CSBSR_w_PSPNet_beta03/config.yaml --resume_iter <resume iteration>

Distributed under the Apache-2.0 License. Parts of the code are based on the reference implementations listed in the acknowledgements below, some of which are released under the MIT or Apache-2.0 license. Please see LICENSE for more information.

If you find our models useful, please consider citing our paper!

@article{kondo2024csbsr,
  author={Kondo, Yuki and Ukita, Norimichi},
  journal={IEEE Transactions on Instrumentation and Measurement},
  title={Joint Learning of Blind Super-Resolution and Crack Segmentation for Realistic Degraded Images},
  year={2024},
  volume={73},
  number={},
  pages={1-16},
}

This implementation builds on the following code. We sincerely thank the authors for releasing their code as open source.

- Lextal/pspnet-pytorch

- Dootmaan/DSRL

- openseg-group/openseg.pytorch

- LouisNUST/CrackFormer-II

- alterzero/DBPN-Pytorch

- Yuki-11/KBPN

- vinceecws/SegNet_PyTorch

- khanhha/crack_segmentation

- vacancy/Synchronized-BatchNorm-PyTorch

- XPixelGroup/BasicSR

- google-deepmind/surface-distance

- hubutui/DiceLoss-PyTorch

- JunMa11/SegLossOdyssey

- NVIDIA/DeepLearningExamples

- xingyizhou/CenterNet

- YutaroOgawa/pytorch_advanced

If you have any questions, feedback, or suggestions regarding this project, feel free to reach out:

- Email: yuki.kondo.ab@gmail.com