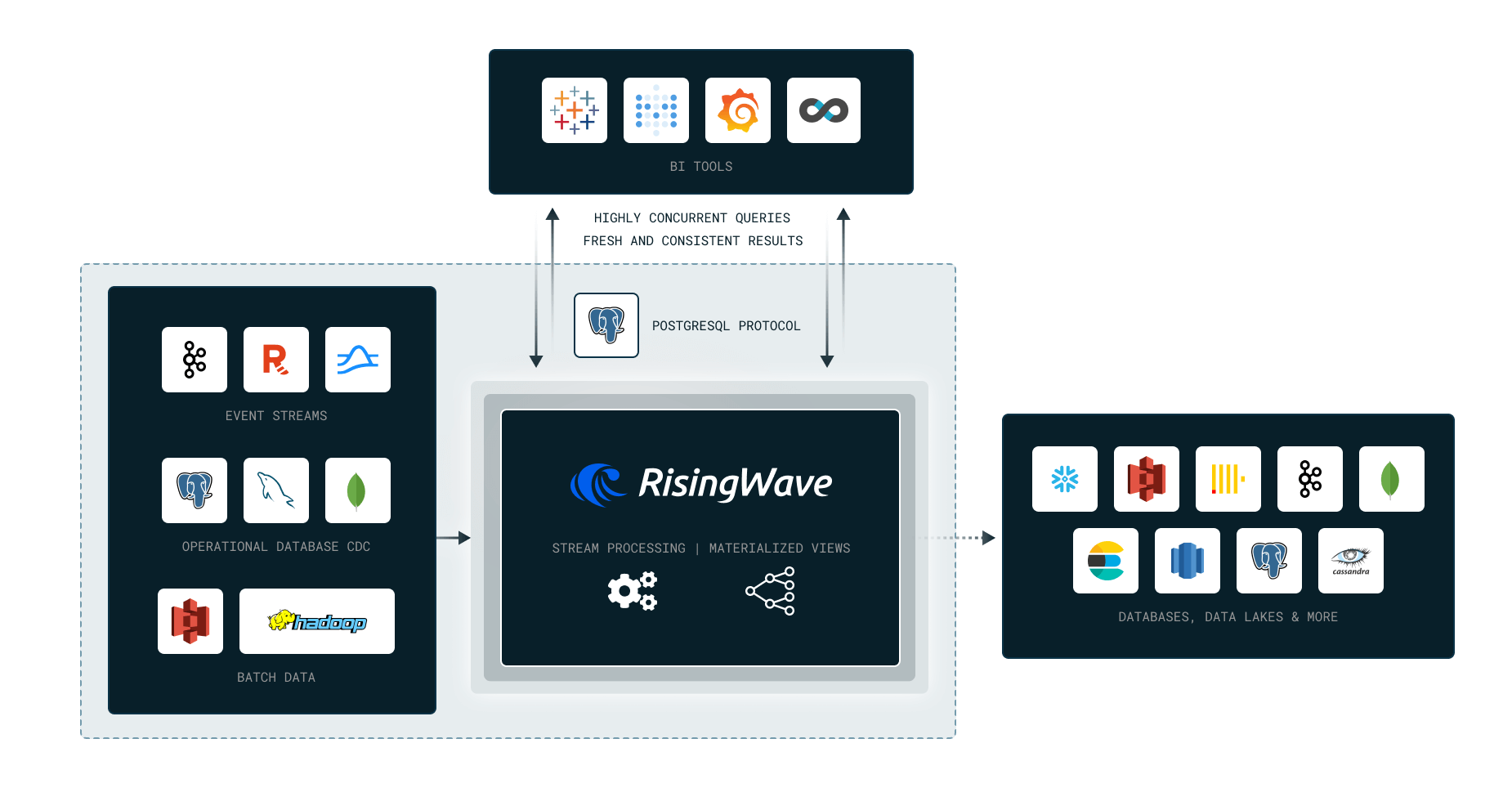

In this hands-on workshop, we’ll learn how to process real-time streaming data using SQL in RisingWave, an open-source SQL database for processing and managing streaming data. RisingWave’s user experience should feel familiar, as it’s fully wire-compatible with PostgreSQL.

We will use the NYC Taxi dataset, which contains information about taxi trips in New York City.

We’ll cover the following topics in this workshop:

- Why Stream Processing?

- Stateless computation (Filters, Projections)

- Stateful computation (Aggregations, Joins)

- Data Ingestion and Delivery
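To make the stateless/stateful distinction in the topic list concrete, here is a minimal Python sketch. It is not RisingWave code and not part of the workshop repository; the event fields (`zone`, `distance`, `fare`) and both function names are hypothetical and chosen only for illustration. A stateless operator handles each event on its own, while a stateful one has to remember something between events.

```python
from collections import defaultdict

# Hypothetical trip events; the field names are illustrative only
# and do not reflect the actual schema of the NYC Taxi dataset.
trips = [
    {"zone": "Midtown", "distance": 2.5, "fare": 12.0},
    {"zone": "Harlem", "distance": 0.4, "fare": 4.5},
    {"zone": "Midtown", "distance": 6.1, "fare": 24.0},
]


def long_trips(events, min_distance=1.0):
    """Stateless: each event is filtered and projected independently."""
    for event in events:
        if event["distance"] >= min_distance:  # filter
            # projection: keep only two of the three fields
            yield {"zone": event["zone"], "fare": event["fare"]}


def running_fare_by_zone(events):
    """Stateful: per-zone totals must be kept between events."""
    totals = defaultdict(float)
    for event in events:
        totals[event["zone"]] += event["fare"]
        yield dict(totals)  # emit a snapshot of the aggregate after every event


stateless_out = list(long_trips(trips))
stateful_out = list(running_fare_by_zone(trips))
print(stateless_out)
print(stateful_out[-1])
```

In a streaming database the same ideas are expressed in SQL (for example `WHERE` and column selection for the stateless part, `GROUP BY` and joins for the stateful part), with the system maintaining the state for you.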

RisingWave in 10 Minutes: https://tutorials.risingwave.com/docs/intro

- Docker and Docker Compose

- Python 3.7 or later

- pip and virtualenv for Python

- psql (I use PostgreSQL-14.9)

- Clone this repository:

git clone git@github.com:risingwavelabs/risingwave-data-talks-workshop-2024-03-04.git

cd risingwave-data-talks-workshop-2024-03-04

Or, if you prefer HTTPS:

git clone https://github.com/risingwavelabs/risingwave-data-talks-workshop-2024-03-04.git

cd risingwave-data-talks-workshop-2024-03-04

The NYC Taxi dataset is a public dataset that contains information about taxi trips in New York City. The dataset is available in Parquet format and can be downloaded from the NYC Taxi & Limousine Commission website.

We will be using the following files from the dataset:

- yellow_tripdata_2022-01.parquet

- taxi_zone.csv

For your convenience, these have already been downloaded and are available in the data directory.

The file seed_kafka.py contains the logic to process the data and populate RisingWave.

In this workshop, we will replace the timestamp fields in the trip_data with timestamps close to the current time. That's because yellow_tripdata_2022-01.parquet contains historical data from 2022, and we want to simulate processing real-time data.
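The idea of moving historical timestamps close to the current time can be sketched as follows. This is only an illustration of one possible approach, not the actual logic in seed_kafka.py: the function `shift_to_now` and the `pickup`/`dropoff` field names are hypothetical. The sketch shifts every timestamp by the same offset, so the most recent dropoff lands at "now" and trip durations are preserved.

```python
from datetime import datetime, timedelta


def shift_to_now(trips, now=None):
    """Shift all pickup/dropoff times so the latest dropoff equals `now`.

    Hypothetical helper: a constant offset keeps each trip's duration
    and the relative ordering of trips unchanged.
    """
    now = now or datetime.now()
    latest = max(trip["dropoff"] for trip in trips)
    offset = now - latest
    return [
        {**trip, "pickup": trip["pickup"] + offset, "dropoff": trip["dropoff"] + offset}
        for trip in trips
    ]


# Two made-up trips from January 2022 (illustrative values only).
historical = [
    {"pickup": datetime(2022, 1, 1, 0, 35), "dropoff": datetime(2022, 1, 1, 0, 53)},
    {"pickup": datetime(2022, 1, 1, 1, 10), "dropoff": datetime(2022, 1, 1, 1, 25)},
]

# A fixed "now" keeps the example reproducible; omit it to use the real clock.
fixed_now = datetime(2024, 3, 4, 12, 0)
shifted = shift_to_now(historical, now=fixed_now)
for trip in shifted:
    print(trip["pickup"], "->", trip["dropoff"])
```

Other strategies are possible, such as assigning each record the wall-clock time at which it is sent; check seed_kafka.py for what the workshop actually does.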

$ tree -L 1

.

├── README.md # This file

├── clickhouse-sql # SQL scripts for Clickhouse

├── commands.sh # Commands to operate the cluster

├── data # Data files (trip_data, taxi_zone)

├── docker # Contains docker compose files

├── requirements.txt # Python dependencies

├── risingwave-sql # SQL scripts for RisingWave (includes some homework files)

└── seed_kafka.py # Python script to seed Kafka

Before getting your hands dirty with the project, we will:

- Run some diagnostics.

- Start the RisingWave cluster.

- Set up our Python environment.

# Check version of psql

psql --version

source commands.sh

# Start the RW cluster

start-cluster

# Set up Python

python3 -m venv .venv

source .venv/bin/activate

pip install -r requirements.txt

commands.sh contains several commands to operate the cluster. You may reference it to see what commands are available.
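If you would like to script the diagnostics step, the following is a small sketch that checks whether the command-line tools this workshop relies on are available on your PATH. It is not part of the repository; the helper name `missing_tools` and the exact executable names in `required` are assumptions and may differ on your platform (for example, `python` instead of `python3`).

```python
import shutil


def missing_tools(tools):
    """Return the names in `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


# Executable names assumed from the prerequisites; adjust as needed.
required = ["docker", "python3", "pip", "psql"]

missing = missing_tools(required)
if missing:
    print("Missing prerequisites:", ", ".join(missing))
else:
    print("All prerequisites found on PATH.")
```

This only confirms that the executables exist; it does not check their versions, so running `psql --version` by hand is still worthwhile.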

Now you're ready to start on the workshop!