Julius AI is an advanced AI-powered platform that conducts complete technical interviews through real-time voice interaction, structured conversational assessment, and separate coding evaluation. The system orchestrates a deterministic interview state machine with autonomous agents, culminating in detailed scoring and hiring recommendations that combine both conversational and coding performance metrics.

Experience Julius AI in action:

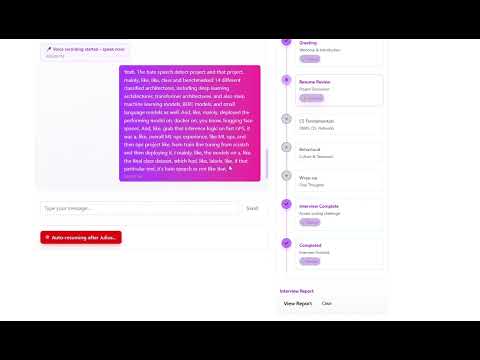

- 6-Stage Conversational Interview: Greeting → Resume Review → Computer Science → Behavioral Questions → Wrap-up → Completion

- Real-time Voice Interaction: Speech-to-text and text-to-speech powered by Deepgram and ElevenLabs

- Intelligent Conversational Scoring: Comprehensive evaluation of communication skills, technical knowledge, and behavioral competencies

- Resume-Driven Questions: AI analyzes uploaded resumes to generate targeted, experience-specific questions

- Adaptive Questioning: Difficulty and progression adjust based on candidate responses and knowledge demonstration

- AI-Generated Problem Sets: Curator agent creates 3 unique problems (easy/medium/hard) with starter templates

- Multi-Language Support: Java, Python, and C++ with syntax-highlighted code editors

- 90-Minute Timed Assessment: Auto-submission with comprehensive evaluation

- Automated Code Evaluation: Test case execution, correctness validation, and AI-powered code analysis

- Detailed Performance Metrics: Scores across correctness, optimization, readability, and algorithmic efficiency

- Dual-Assessment Scoring: Combines conversational interview performance with coding proficiency

- Comprehensive Reports: Strengths, weaknesses, improvement areas, and actionable recommendations

- Hiring Recommendations: Data-driven hire/no-hire decisions with detailed rationale

- Performance Analytics: Historical tracking and comparative analysis for recruiters

- Frontend: Next.js 14 with TypeScript, Tailwind CSS, and real-time WebSocket integration

- Backend: Node.js with WebSocket server for low-latency audio processing

- AI Integration: Groq API for conversational agents, OpenAI for advanced evaluation

- Voice Services: Deepgram (STT) and ElevenLabs (TTS) with AWS fallbacks

- Code Execution: OneCompiler API for secure, sandboxed code testing

- Data Persistence: MongoDB for authoritative interview records, Redis for session caching and performance optimization

- Overview & Architecture

- Interview Flow (6 Stages)

- Coding Challenge Platform

- Real-time Voice Processing

- Evaluation & Scoring System

- Technical Implementation

- Directory Structure

- API Endpoints

- Deployment & Operations

- Security & Compliance

Julius AI combines conversational AI assessment with practical coding evaluation. The platform maintains conversation history, adapts questions to candidate responses, and produces detailed evaluation reports.

```mermaid
flowchart TB
    %% Client Layer
    subgraph CLIENT["🖥️ Client Browser"]
        UI[Interview UI]
        MIC[Microphone Input]
        EDITOR[Coding Editor]
        PLAYER[Audio Player]
        UPLOADER[Resume Upload]
    end

    %% Core Processing
    subgraph CORE["🧠 Core Processing"]
        ORCHESTRATOR[Interview Orchestrator]
        UNIFIED_AGENT[Unified Interview Agent]
        SCORING_AGENT[Scoring Agent]
        RECOMMEND_AGENT[Recommendation Agent]
    end

    %% Coding Platform
    subgraph CODING["💻 Coding Platform"]
        CURATOR[Coding Curator Agent]
        EVALUATOR[Coding Evaluator Agent]
        EXECUTOR[Code Executor]
        TIMER[90-min Timer]
    end

    %% External Services
    subgraph SERVICES["🔧 External Services"]
        DEEPGRAM[Deepgram STT]
        ELEVENLABS[ElevenLabs TTS]
        GROQ[Groq API]
        ONECOMPILER[OneCompiler API]
    end

    %% Data Layer
    subgraph STORAGE["💾 Storage"]
        MONGODB[(MongoDB)]
        REDIS[(Redis Cache)]
    end

    %% Flow Connections
    CLIENT -->|WebSocket Events| ORCHESTRATOR
    ORCHESTRATOR -->|Route by Stage| UNIFIED_AGENT
    UNIFIED_AGENT -->|Structured Responses| ORCHESTRATOR
    ORCHESTRATOR -->|End Interview| SCORING_AGENT
    SCORING_AGENT -->|Conversational Report| RECOMMEND_AGENT
    CLIENT -->|Start Coding| CURATOR
    CURATOR -->|Problems + Timer| CLIENT
    CLIENT -->|Code Submission| EVALUATOR
    EVALUATOR -->|Evaluation Report| RECOMMEND_AGENT
    UNIFIED_AGENT -->|Prompts| GROQ
    SCORING_AGENT -->|Analysis| GROQ
    RECOMMEND_AGENT -->|Final Report| GROQ
    CURATOR -->|Problem Generation| GROQ
    EVALUATOR -->|Code Analysis| GROQ
    EVALUATOR -->|Test Execution| ONECOMPILER
    ORCHESTRATOR -->|Audio Synthesis| ELEVENLABS
    ELEVENLABS -->|Audio Response| CLIENT
    MIC -->|Audio Stream| DEEPGRAM
    DEEPGRAM -->|Transcripts| ORCHESTRATOR
    ORCHESTRATOR -->|Persist Records| MONGODB
    CURATOR -->|Cache Problems| REDIS
    RECOMMEND_AGENT -->|Final Report| MONGODB
```

The interview follows a structured progression through six conversational stages. Each stage is handled by the Unified Interview Agent, which adapts its questions to the candidate's responses.

- Greeting
  - Agent: Unified Interview Agent
  - Focus: Rapport building, role expectations, technical background
  - Duration: 3-4 targeted questions
  - Transition: Advances to resume review after establishing context

- Resume Review
  - Agent: Unified Interview Agent with resume analysis
  - Focus: Experience validation, project deep-dives, technical achievements
  - Features: AI extracts key details from the uploaded resume to generate specific questions
  - Transition: Moves to the computer science assessment

- Computer Science
  - Agent: Unified Interview Agent
  - Focus: Algorithms, data structures, system design, problem-solving
  - Adaptation: Difficulty scales with demonstrated knowledge
  - Transition: Advances to the behavioral assessment, or to an early wrap-up if knowledge gaps are identified

- Behavioral Questions
  - Agent: Unified Interview Agent
  - Focus: Teamwork, leadership, conflict resolution, communication
  - Methodology: STAR method (Situation, Task, Action, Result) for structured responses
  - Transition: Proceeds to the wrap-up

- Wrap-up
  - Agent: Unified Interview Agent
  - Focus: Overall experience, key takeaways, final questions
  - Transition: Completes the conversational interview

- Completion
  - Trigger: Scoring and recommendation generation
  - Output: Conversational performance report
  - Next Step: Access to the coding challenge platform
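The stage progression above behaves like a deterministic state machine: the same stage and the same signal always produce the same next stage. A minimal sketch, assuming hypothetical stage identifiers and two hypothetical agent signals (`advance` and `knowledge_gap`); it is an illustration, not the project's actual orchestrator code:

```typescript
// Hypothetical identifiers for the six stages described above.
type Stage =
  | "greeting"
  | "resume_review"
  | "computer_science"
  | "behavioral"
  | "wrap_up"
  | "completion";

// Hypothetical signals the agent could return to the orchestrator.
type StageSignal = "advance" | "knowledge_gap";

const STAGE_ORDER: readonly Stage[] = [
  "greeting",
  "resume_review",
  "computer_science",
  "behavioral",
  "wrap_up",
  "completion",
];

// Pure transition function: no I/O, so transitions are reproducible and testable.
function nextStage(current: Stage, signal: StageSignal): Stage {
  // Early exit: knowledge gaps in the CS stage skip behavioral questions.
  if (current === "computer_science" && signal === "knowledge_gap") {
    return "wrap_up";
  }
  // Any other non-advance signal keeps the interview in its current stage.
  if (signal !== "advance") {
    return current;
  }
  const index = STAGE_ORDER.indexOf(current);
  // "completion" is terminal and maps to itself.
  return STAGE_ORDER[Math.min(index + 1, STAGE_ORDER.length - 1)];
}

// Reaching "completion" is what triggers scoring and recommendation generation.
function isTerminal(stage: Stage): boolean {
  return stage === "completion";
}

// Walk the happy path from greeting to completion.
let currentStage: Stage = "greeting";
const visited: Stage[] = [currentStage];
while (!isTerminal(currentStage)) {
  currentStage = nextStage(currentStage, "advance");
  visited.push(currentStage);
}
console.log(visited.join(" -> "));
```

Keeping the transition logic free of side effects means persistence and scoring triggers can be handled by the caller after each transition.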

Following interview completion, candidates access a separate coding assessment with AI-generated challenges.

- Curator Agent: Generates 3 unique problems across difficulty levels

- Languages: Java, Python, C++ with appropriate starter templates

- Test Cases: Comprehensive input/output validation

- Caching: Redis-backed problem storage for consistent assessment

- Timer: 90-minute completion window with auto-submission

- Editor: Syntax-highlighted code editor with language switching

- Testing: Real-time test case execution and result display

- Submission: Manual submission, or automatic submission when the 90-minute timer expires

- Correctness: Test case pass/fail analysis (1-10 scale)

- Optimization: Time/space complexity assessment (1-10 scale)

- Readability: Code quality and maintainability scoring (1-10 scale)

- Feedback: AI-generated analysis with specific improvement suggestions
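The 90-minute window and the 1-10 correctness scale can be expressed as small pure functions. A minimal sketch, assuming a linear mapping from the test pass rate to the 1-10 scale; the mapping and all function names are hypothetical, since the actual scoring formula is not documented here:

```typescript
// The 90-minute completion window described above, in milliseconds.
const TIME_LIMIT_MS = 90 * 60 * 1000;

// Milliseconds left before auto-submission; never negative.
function remainingMs(startedAtMs: number, nowMs: number): number {
  return Math.max(0, startedAtMs + TIME_LIMIT_MS - nowMs);
}

// Auto-submit once the window has fully elapsed.
function shouldAutoSubmit(startedAtMs: number, nowMs: number): boolean {
  return remainingMs(startedAtMs, nowMs) === 0;
}

// Hypothetical mapping: 0 tests passing scores 1, all passing scores 10, linear in between.
function correctnessScore(passed: number, total: number): number {
  if (total <= 0) throw new Error("total must be positive");
  const ratio = Math.min(Math.max(passed / total, 0), 1);
  return Math.round(1 + ratio * 9);
}

function twoDigits(n: number): string {
  return (n < 10 ? "0" : "") + String(n);
}

// Format remaining time as mm:ss for a countdown display (rounds partial seconds up).
function formatRemaining(ms: number): string {
  const totalSeconds = Math.ceil(ms / 1000);
  const minutes = Math.floor(totalSeconds / 60);
  const seconds = totalSeconds % 60;
  return `${twoDigits(minutes)}:${twoDigits(seconds)}`;
}

// 30 minutes into the assessment, one hour remains: prints "60:00".
console.log(formatRemaining(remainingMs(0, 30 * 60 * 1000)));
// 7 of 10 tests passing: 1 + 0.7 * 9 = 7.3, rounded to 7.
console.log(correctnessScore(7, 10));
```

Computing the deadline from a stored start time, rather than counting down in the browser, keeps auto-submission correct even if the page is reloaded.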

- Service: Deepgram WebSocket streaming

- Features: Real-time transcription with interim results

- Integration: Direct WebSocket connection per session

- Fallback: AWS Transcribe for reliability

- Service: ElevenLabs neural voice synthesis

- Trigger: Agent response generation

- Format: Base64 audio buffer delivery

- Control: Playback synchronization with microphone management

- Speech Tracking: Active speech detection and silence timeouts

- Code Tracking: Keystroke debouncing and idle detection

- Invocation Rules: The LLM is triggered only when content is ready, such as after a speech pause or an editor idle period

- Audio Management: TTS playback blocking to prevent feedback loops
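The speech tracking, code tracking, and TTS blocking rules above combine into a single "should we call the LLM now?" decision. A minimal sketch, assuming hypothetical silence and idle thresholds and a hypothetical `TurnState` shape; the real thresholds and state fields are not documented here:

```typescript
// Hypothetical thresholds, chosen only for illustration.
const SPEECH_SILENCE_MS = 1500; // silence after the last final transcript
const CODE_IDLE_MS = 3000;      // editor idle time after the last keystroke

interface TurnState {
  ttsPlaying: boolean;              // agent audio is currently playing
  pendingTranscript: string;        // final transcript text not yet sent to the LLM
  lastSpeechAtMs: number | null;    // time of the last speech activity
  pendingCode: boolean;             // code changed since the last LLM call
  lastKeystrokeAtMs: number | null; // time of the last editor keystroke
}

function shouldInvokeLlm(s: TurnState, nowMs: number): boolean {
  // Block while TTS plays so the microphone does not feed the agent's own voice back.
  if (s.ttsPlaying) return false;

  // Speech is ready once there is text and the candidate has been silent long enough.
  const speechReady =
    s.pendingTranscript.trim().length > 0 &&
    s.lastSpeechAtMs !== null &&
    nowMs - s.lastSpeechAtMs >= SPEECH_SILENCE_MS;

  // Code is ready once it changed and typing has been idle long enough (debounce).
  const codeReady =
    s.pendingCode &&
    s.lastKeystrokeAtMs !== null &&
    nowMs - s.lastKeystrokeAtMs >= CODE_IDLE_MS;

  return speechReady || codeReady;
}
```

A caller would evaluate this on a short interval or on every incoming event, resetting `pendingTranscript` and `pendingCode` after each LLM call.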

- Scoring Agent: Analyzes interview performance across dimensions

- Metrics: Communication clarity, technical knowledge, problem-solving approach

- Output: Stage-wise breakdowns and overall performance score (1-100)

- Recommendation: Hire/No-hire with detailed rationale

- Evaluator Agent: Comprehensive code analysis

- Execution: Test case validation via OneCompiler API

- Analysis: AI-powered assessment of algorithm efficiency and code quality

- Reporting: Detailed feedback with strengths, weaknesses, and suggestions

- Recommendation Agent: Synthesizes all assessment data

- Integration: Combines conversational and coding performance

- Output: Comprehensive hiring recommendation with actionable insights

- Persistence: MongoDB storage for audit and analytics
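The Recommendation Agent combines a conversational score (1-100) with coding scores (1-10 per dimension). A minimal sketch of one way to put both on the same scale, assuming hypothetical equal weights and a hypothetical hire threshold of 70; the actual weighting is produced by the agents and is not documented here:

```typescript
interface CodingScores {
  correctness: number;  // 1-10
  optimization: number; // 1-10
  readability: number;  // 1-10
}

// Hypothetical weights and threshold, for illustration only.
const CONVERSATION_WEIGHT = 0.5;
const CODING_WEIGHT = 0.5;
const HIRE_THRESHOLD = 70;

// Average the three 1-10 coding dimensions and rescale to the 1-100 range.
function codingToPercent(s: CodingScores): number {
  const avg = (s.correctness + s.optimization + s.readability) / 3;
  return avg * 10;
}

// Weighted blend of both assessments, rounded to one decimal place.
function combinedScore(conversational: number, coding: CodingScores): number {
  const combined =
    CONVERSATION_WEIGHT * conversational + CODING_WEIGHT * codingToPercent(coding);
  return Math.round(combined * 10) / 10;
}

function recommend(score: number): "hire" | "no-hire" {
  return score >= HIRE_THRESHOLD ? "hire" : "no-hire";
}

// Conversational 80, coding average 8 (rescaled to 80): combined 80, "hire".
const score = combinedScore(80, { correctness: 8, optimization: 7, readability: 9 });
console.log(score, recommend(score));
```

A fixed numeric blend like this is easy to audit; in practice the rationale text from the agents would accompany the number rather than replace it.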

- Interview Orchestrator
  - Manages the interview state machine
  - Routes messages to the appropriate agents
  - Handles stage transitions and scoring triggers
  - Persists interview records to MongoDB

- Unified Interview Agent
  - Processes the conversational stages
  - Adapts questioning based on responses
  - Integrates resume analysis for targeted questions
  - Returns structured JSON responses

- Coding Curator Agent
  - Generates problem sets using the Groq API
  - Creates starter templates and test cases
  - Caches problems in Redis for performance

- Coding Evaluator Agent
  - Executes code against test cases
  - Performs AI analysis of solutions
  - Generates detailed evaluation reports
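Because the Unified Interview Agent returns structured JSON produced by an LLM, the orchestrator has to validate it before acting on it. A minimal sketch, assuming a hypothetical reply shape with `message` and `advanceStage` fields; the project's real schema is not documented here:

```typescript
// Hypothetical shape of the agent's structured reply.
interface AgentReply {
  message: string;       // text to speak to the candidate
  advanceStage: boolean; // whether the orchestrator should move to the next stage
}

// Type guard: confirms the parsed value actually has the expected fields and types.
function isAgentReply(value: unknown): value is AgentReply {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v.message === "string" && typeof v.advanceStage === "boolean";
}

// LLM output may wrap the JSON in prose or code fences, so take the outermost {...}.
function parseAgentReply(raw: string): AgentReply | null {
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start === -1 || end <= start) return null;
  try {
    const parsed: unknown = JSON.parse(raw.slice(start, end + 1));
    return isAgentReply(parsed) ? parsed : null;
  } catch {
    return null; // malformed JSON: the caller can retry or fall back to a safe reply
  }
}
```

Returning `null` instead of throwing lets the orchestrator keep the interview in its current stage when a reply cannot be trusted.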

Client to server events:

- start_transcription: Initialize audio streaming
- audio_chunk: Base64-encoded PCM audio data
- text_input: Fallback text messages
- code_input: Code submissions
- set_resume_path: Associate an uploaded resume with the session

Server to client events:

- partial_transcript: Real-time (interim) speech recognition results
- final_transcript: Completed speech recognition
- agent_response: AI agent replies
- audio_response: Synthesized speech audio
- stage_changed: Interview progression updates
- scoring_result: Performance evaluation
- recommendation_result: Final hiring recommendation
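The event names above map naturally onto TypeScript discriminated unions, which let the WebSocket server reject malformed frames before routing them. A minimal sketch: only the event names come from this README; every payload field (`audio`, `text`, `code`, `language`, `path`, `stage`, `report`) is a hypothetical assumption:

```typescript
type Language = "java" | "python" | "cpp";

// Client -> server messages (payload fields are hypothetical).
type ClientMessage =
  | { type: "start_transcription" }
  | { type: "audio_chunk"; audio: string } // base64-encoded PCM
  | { type: "text_input"; text: string }
  | { type: "code_input"; code: string; language: Language }
  | { type: "set_resume_path"; path: string };

// Server -> client messages (payload fields are hypothetical).
type ServerMessage =
  | { type: "partial_transcript" | "final_transcript"; text: string }
  | { type: "agent_response"; text: string }
  | { type: "audio_response"; audio: string }
  | { type: "stage_changed"; stage: string }
  | { type: "scoring_result" | "recommendation_result"; report: unknown };

function isLanguage(value: unknown): value is Language {
  return value === "java" || value === "python" || value === "cpp";
}

// Parse one WebSocket text frame; returns null for malformed or unknown messages.
function parseClientMessage(frame: string): ClientMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(frame);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const { type, audio, text, code, language, path } = data as Record<string, unknown>;
  switch (type) {
    case "start_transcription":
      return { type: "start_transcription" };
    case "audio_chunk":
      return typeof audio === "string" ? { type: "audio_chunk", audio } : null;
    case "text_input":
      return typeof text === "string" ? { type: "text_input", text } : null;
    case "code_input":
      return typeof code === "string" && isLanguage(language)
        ? { type: "code_input", code, language }
        : null;
    case "set_resume_path":
      return typeof path === "string" ? { type: "set_resume_path", path } : null;
    default:
      return null;
  }
}

// Outgoing messages are plain JSON text frames.
function serialize(message: ServerMessage): string {
  return JSON.stringify(message);
}
```

A `switch` on the `type` field keeps each handler strongly typed, and unknown event names fall through to `null` instead of reaching an agent.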

MongoDB (authoritative records):

- Interview step records
- Complete conversation history
- Scoring and recommendation reports
- User session data

Redis (caching and ephemeral state):

- Session conversation context
- Curated problem sets
- Ephemeral session flags
- Rate limiting and counters
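Redis-backed rate limiting is commonly implemented as a fixed-window counter: `INCR` a per-key counter and `EXPIRE` it when the window starts. A minimal in-memory sketch of that pattern, assuming a hypothetical limit, window size, and key format; unlike Redis, an in-memory map is not shared across server instances, so this only illustrates the logic:

```typescript
interface WindowEntry {
  count: number;     // requests seen in the current window
  resetAtMs: number; // when the window expires (the EXPIRE deadline in Redis)
}

class FixedWindowLimiter {
  private readonly counters = new Map<string, WindowEntry>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed, false once the key exceeds its limit.
  allow(key: string, nowMs: number): boolean {
    const entry = this.counters.get(key);
    if (entry === undefined || nowMs >= entry.resetAtMs) {
      // Equivalent to INCR on a missing or expired key followed by EXPIRE.
      this.counters.set(key, { count: 1, resetAtMs: nowMs + this.windowMs });
      return this.limit >= 1;
    }
    entry.count += 1;
    return entry.count <= this.limit;
  }
}

// Hypothetical policy: 3 code evaluations per session per minute.
const limiter = new FixedWindowLimiter(3, 60000);
const key = "rl:evaluate-coding:session-123"; // hypothetical key format
console.log([0, 1, 2, 3].map((i) => limiter.allow(key, i * 1000)));
```

Fixed windows are simple but allow short bursts at window boundaries; a sliding-window or token-bucket scheme smooths that out at the cost of more state.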

```
julius-ai/
├── app/                    # Next.js application
│   ├── api/                # API route handlers
│   │   ├── curate-coding/  # Problem generation endpoints
│   │   ├── evaluate-coding/ # Code evaluation API
│   │   ├── interview-stage/ # Interview progression
│   │   ├── upload-resume/  # Resume processing
│   │   └── sessions/       # Session management
│   ├── coding-test/        # Coding assessment UI
│   ├── components/         # Reusable React components
│   ├── interview/          # Main interview interface
│   └── page.tsx            # Landing page
├── core/                   # Runtime session management
│   ├── agent/              # Agent orchestration
│   ├── audio/              # Audio processing
│   ├── interview/          # Interview state models
│   ├── messaging/          # Message handling
│   └── server/             # WebSocket server
├── lib/                    # Domain services & integrations
│   ├── models/             # Data models & schemas
│   ├── prompts/            # AI agent prompt templates
│   ├── services/           # Business logic services
│   └── utils/              # Helper utilities
├── ws-server/              # WebSocket server for real-time audio
├── assests/                # Static assets and images
├── tests/                  # Unit and integration tests
└── uploads/                # User-uploaded files
```

Sessions and interview:

- POST /api/sessions: Create a new interview session
- GET /api/session-status: Check session state
- POST /api/interview-stage: Advance the interview stage

Coding assessment:

- GET /api/curate-coding: Generate coding problems
- POST /api/evaluate-coding: Submit and evaluate code
- GET /api/curate-coding/problem/[id]: Retrieve a cached problem

Resumes and user data:

- POST /api/upload-resume: Upload and process a resume
- GET /api/user/interviews: Retrieve user interview history

Recruiter:

- GET /api/recruiter/sessions: Access all sessions (recruiter view)
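The `[id]` segment in `/api/curate-coding/problem/[id]` follows the Next.js dynamic-route convention: a bracketed folder name matches any single path segment and exposes it as a named parameter. A simplified sketch of that matching rule, written for illustration only; it is not how Next.js implements routing internally, and `matchRoute` is a hypothetical helper:

```typescript
// Match a concrete pathname against a pattern with [param] segments.
// Returns the captured parameters, or null when the path does not match.
function matchRoute(pattern: string, pathname: string): Record<string, string> | null {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = pathname.split("/").filter(Boolean);
  if (patternParts.length !== pathParts.length) return null;

  const params: Record<string, string> = {};
  for (let i = 0; i < patternParts.length; i++) {
    const part = patternParts[i];
    const dynamic = /^\[(\w+)\]$/.exec(part);
    if (dynamic) {
      // A bracketed segment captures whatever single segment appears in the path.
      params[dynamic[1]] = decodeURIComponent(pathParts[i]);
    } else if (part !== pathParts[i]) {
      // Static segments must match exactly.
      return null;
    }
  }
  return params;
}

const params = matchRoute("/api/curate-coding/problem/[id]", "/api/curate-coding/problem/abc123");
console.log(params);
```

In the actual app, Next.js performs this matching and passes the captured `id` to the route handler, which would then look up the cached problem.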

```bash
# Install dependencies
npm install

# Configure environment variables
cp .env.local.example .env.local
# Add API keys for Deepgram, ElevenLabs, Groq, etc.

# Start development servers
npm run ws-server  # WebSocket server on port 8080
npm run dev        # Next.js app on port 3000
```

- WebSocket Server: Dedicated service for audio processing

- Next.js Application: Standard deployment with API routes

- Database: MongoDB cluster with connection pooling

- Cache: Redis instance with persistence

- Load Balancing: Sticky sessions for WebSocket connections

- Horizontal scaling with session affinity

- Deepgram concurrency monitoring

- Redis-backed session state sharing

- Comprehensive error logging and alerting
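Session affinity means every WebSocket frame for a given session reaches the same server instance. Load balancers often do this with cookies; another common approach is to hash the session ID onto the list of backends. A minimal sketch of hash-based affinity using 32-bit FNV-1a, assuming hypothetical backend names; this is an illustration of the technique, not the project's deployment configuration:

```typescript
// 32-bit FNV-1a over UTF-16 code units (adequate for ASCII session IDs).
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, mod 2^32
  }
  return hash >>> 0;
}

// Deterministically map a session to one backend.
function pickBackend(sessionId: string, backends: readonly string[]): string {
  if (backends.length === 0) throw new Error("no backends configured");
  return backends[fnv1a32(sessionId) % backends.length];
}

// Hypothetical WebSocket server instances.
const backends = ["ws-a:8080", "ws-b:8080", "ws-c:8080"];
console.log(pickBackend("session-42", backends));
```

Plain modulo hashing remaps most sessions when a backend is added or removed; consistent hashing limits that churn, and Redis-backed session state lets a reassigned session resume on a new instance.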

- Resume Handling: Secure file storage with access controls

- Session Data: Encrypted conversation history

- API Security: Key rotation and access monitoring

- PII Protection: Minimal data retention policies

- Environment-based secret management

- No hardcoded API keys in repository

- Regular credential rotation

- Restricted access to production secrets

- WebSocket connection validation

- Rate limiting on API endpoints

- Input sanitization and validation

- Secure code execution sandboxing
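The `set_resume_path` event carries a client-supplied path, which is a classic path-traversal risk. A minimal validation sketch using Node's built-in `path` module, assuming resumes live under the `uploads/` directory and that only `.pdf` and `.docx` files are accepted; both the directory resolution and the extension list are assumptions:

```typescript
import * as path from "node:path";

// Assumed location of uploaded resumes, resolved relative to the working directory.
const UPLOADS_DIR = path.resolve("uploads");

// Hypothetical allow-list of resume file types.
const ALLOWED_EXTENSIONS = [".pdf", ".docx"];

// Resolve a client-supplied path and reject anything that escapes UPLOADS_DIR.
function resolveResumePath(userPath: string): string | null {
  const resolved = path.resolve(UPLOADS_DIR, userPath);
  const rel = path.relative(UPLOADS_DIR, resolved);
  // Outside the directory, the relative path is "..", starts with "../",
  // or is absolute (e.g. a different drive on Windows).
  if (rel === "" || rel === ".." || rel.startsWith(".." + path.sep) || path.isAbsolute(rel)) {
    return null;
  }
  const ext = path.extname(resolved).toLowerCase();
  if (ALLOWED_EXTENSIONS.indexOf(ext) === -1) return null;
  return resolved;
}
```

Resolving first and then comparing against the base directory catches `../` sequences and absolute paths alike, which a simple substring check would miss.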

- Clone and Install

  ```bash
  git clone <repository-url>
  cd julius-ai
  npm install
  ```

- Environment Configuration

  ```bash
  cp .env.local.example .env.local
  # Configure required API keys
  ```

- Database Setup

  ```bash
  # Ensure MongoDB and Redis are running
  # Update connection strings in .env.local
  ```

- Start Services

  ```bash
  npm run ws-server  # Terminal 1
  npm run dev        # Terminal 2
  ```

- Access Application
  - Interview: http://localhost:3000/interview
  - Coding Test: http://localhost:3000/coding-test

- Follow TypeScript strict mode

- Maintain comprehensive test coverage

- Use conventional commit messages

- Document API changes

- ESLint configuration enforced

- Pre-commit hooks for validation

- Automated testing pipeline

- Performance monitoring

Julius AI is designed for comprehensive technical assessment, combining conversational intelligence with practical coding evaluation to provide recruiters with data-driven hiring insights.

For support or questions, please refer to the documentation or contact the development team.