This project was submitted as part of Udacity's Deep Reinforcement Learning Nanodegree and is a solution to the Unity ML-Agents "Reacher" environment.

The purpose of the project is to build and train a single agent that tries to maintain its position at the target location for as many time steps as possible.

The task is episodic, and each episode consists of 1000 steps. The environment provides a reward of +0.1 for each step in which the agent's hand is in the goal location, and zero otherwise. The environment is considered solved when the agent achieves an average score of at least 30.0 over 100 consecutive episodes.
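The success criterion can be checked with a trailing average over episode scores. The snippet below is a minimal sketch and is not taken from this repository; the function name `first_solved_episode` and its parameters are hypothetical, chosen only for illustration.

```python
from collections import deque


def first_solved_episode(scores, target=30.0, window=100):
    """Return the 1-based episode at which the mean of the last `window`
    scores first reaches `target`, or None if that never happens.

    Hypothetical helper: the name and signature are assumptions.
    """
    recent = deque(maxlen=window)  # keeps only the latest `window` scores
    for episode, score in enumerate(scores, start=1):
        recent.append(score)
        # Only judge the criterion once a full window of episodes exists.
        if len(recent) == window and sum(recent) / window >= target:
            return episode
    return None
```

A training loop could append each episode's total reward to a list and call this helper after every episode to decide when to stop.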

The observation space consists of 33 variables corresponding to the position, rotation, velocity, and angular velocity of the arm. Each action is a vector of four numbers, corresponding to the torque applied to two joints. Every entry in the action vector should be a floating-point number between -1 and 1.
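To illustrate the action constraints, here is a small sketch in plain Python that builds a random four-element action and clips an arbitrary action into the valid range. The names `STATE_SIZE`, `ACTION_SIZE`, `clip_action`, and `random_action` are hypothetical; the project code itself may well use NumPy (for example `np.clip`) instead.

```python
import random

STATE_SIZE = 33   # observation variables per step, as described above
ACTION_SIZE = 4   # torque values per action, as described above


def clip_action(action, low=-1.0, high=1.0):
    """Clamp every entry of a 4-element action into [low, high]."""
    if len(action) != ACTION_SIZE:
        raise ValueError("expected %d action values" % ACTION_SIZE)
    return [max(low, min(high, float(a))) for a in action]


def random_action(rng=random):
    """Sample a uniformly random action with entries in [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(ACTION_SIZE)]
```

Clipping matters mostly when exploration noise is added to the actor's output, since the noisy action can otherwise leave the valid range.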

The agent runs on Python 3.6 and PyTorch. The algorithm is described in the DDPG (Deep Deterministic Policy Gradient) paper.

The implementation details, hyperparameters, and results can be found in report.md.

The original git repo of this project is at: https://github.com/udacity/deep-reinforcement-learning/tree/master/p2_navigation

To set up a Python environment to run the code in this repository, follow the instructions below:

- Create (and activate) a new environment with Python 3.6.

  - Linux or Mac:

        conda create --name drlnd python=3.6
        source activate drlnd

  - Windows:

        conda create --name drlnd python=3.6
        conda activate drlnd

- Install PyTorch using conda:

      conda install pytorch torchvision cudatoolkit=10.2 -c pytorch

- Clone this git repo and install it:

      git clone git@github.com:drormeir/ReacherRL.git
      cd ReacherRL
      pip install .

- Download the environment from one of the links below. You only need to select the environment that matches your operating system:

  - Linux: click here

  - Mac OSX: click here

  - Windows (32-bit): click here

  - Windows (64-bit): click here

-

Create an IPython kernel for the

drlndenvironment.

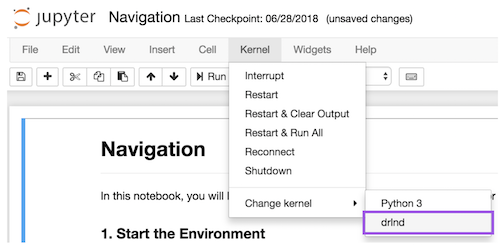

python -m ipykernel install --user --name drlnd --display-name "drlnd"- Before running code in a notebook, change the kernel to match the

drlndenvironment by using the drop-downKernelmenu.
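After completing the steps above, a quick sanity check from inside the `drlnd` environment can confirm that the expected packages are importable. This is only a sketch: the function name `check_setup` is hypothetical, and the default package list (`torch`, `numpy`, `unityagents`) is an assumption about what the notebook imports, not something stated in this README.

```python
import importlib.util
import sys


def check_setup(packages=("torch", "numpy", "unityagents")):
    """Report the running Python version and whether each package is found.

    The default package names are assumptions; adjust them to match the
    actual dependencies of the project.
    """
    report = {"python": "{}.{}".format(*sys.version_info[:2])}
    for name in packages:
        # find_spec returns None when a top-level package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in check_setup().items():
        print(key, value)
```

Any entry reported as `False` suggests that the corresponding install step did not complete in the active environment.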

The Jupyter notebook Continuous_Control.ipynb imports all necessary dependencies and the Python files of this project.

A detailed report describing the learning algorithm, along with ideas for future work, is in report.md.