|

Best Poster Award at the ACM International Conference on Multimedia Retrieval (ICMR) 2016 |

|---|

|

|

|

|

|

|

|---|---|---|---|---|---|

| Eva Mohedano | Amaia Salvador | Kevin McGuinness | Xavier Giro-i-Nieto | Noel O'Connor | Ferran Marques |

A joint collaboration between:

|

|

|

|

|

|---|---|---|---|---|

| Insight Centre for Data Analytics | Dublin City University (DCU) | Universitat Politecnica de Catalunya (UPC) | UPC ETSETB TelecomBCN | UPC Image Processing Group |

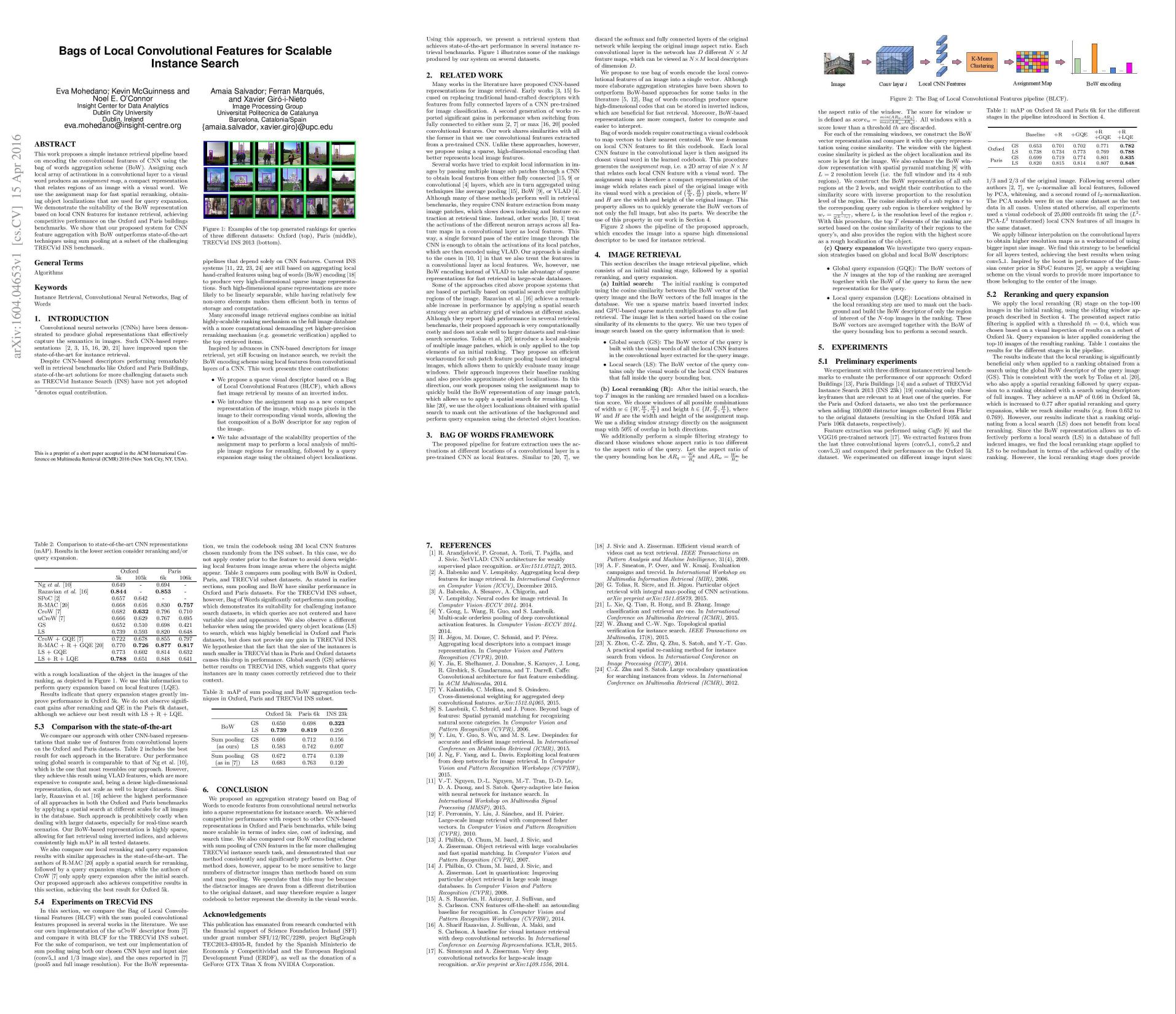

This work proposes a simple instance retrieval pipeline based on encoding the convolutional features of CNN using the bag of words aggregation scheme (BoW). Assigning each local array of activations in a convolutional layer to a visual word produces an assignment map, a compact representation that relates regions of an image with a visual word. We use the assignment map for fast spatial reranking, obtaining object localizations that are used for query expansion. We demonstrate the suitability of the BoW representation based on local CNN features for instance retrieval, achieving competitive performance on the Oxford and Paris buildings benchmarks. We show that our proposed system for CNN feature aggregation with BoW outperforms state-of-the-art techniques using sum pooling at a subset of the challenging TRECVid INS benchmark.

Find our paper at ACM Digital Library, arXiv and DCU Doras.

Please cite with the following Bibtex code:

@inproceedings{Mohedano:2016:BLC:2911996.2912061,

author = {Mohedano, Eva and McGuinness, Kevin and O'Connor, Noel E. and Salvador, Amaia and Marques, Ferran and Giro-i-Nieto, Xavier},

title = {Bags of Local Convolutional Features for Scalable Instance Search},

booktitle = {Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval},

series = {ICMR '16},

year = {2016},

isbn = {978-1-4503-4359-6},

location = {New York, New York, USA},

pages = {327--331},

numpages = {5},

url = {http://doi.acm.org/10.1145/2911996.2912061},

doi = {10.1145/2911996.2912061},

acmid = {2912061},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {bag of words, convolutional neural networks, instance retrieval},

}

2016-05-Seminar-AmaiaSalvador-DeepVision from Image Processing Group on Vimeo.

This talk also covers our paper "Faster R-CNN features for Instance Search" at CVPR 2016 Workshop on DeepVision.

<iframe src="//www.slideshare.net/slideshow/embed_code/key/lZzb4HdY6OEZ01" width="595" height="485" frameborder="0" marginwidth="0" marginheight="0" scrolling="no" style="border:1px solid #CCC; border-width:1px; margin-bottom:5px; max-width: 100%;" allowfullscreen> </iframe>These slides also cover our paper "Faster R-CNN features for Instance Search" at CVPR 2016 Workshop on DeepVision.

It contains scripts to build Bag of Visual Words based on local CNN features to perform instance search in three different datasets:

-

Oxford Buildings (and Oxford 105k).

-

Paris Buildings (and Paris 106k).

-

Trecvid_subset: Subset of 23.614 keyframes/~13.000video shots from TRECVid-INS dataset. Keyframes extracted uniformly at 1/4fps. Queries and groundtruth correspond to INS2013.

Python packages necessary specified in requirements.txt run:

pip install -r requirements.txt

It also needs:

-

caffe with python support

-

vlfeat library

- Once installed, modify 'kmeans.py' file, located in the vlfeat python package: (i.e /usr/local/lib/python2.7/dist-packages/vlfeat_ctypes-0.1.4-py2.7.egg/vlfeat/kmeans.py) for lib/kmeans.py of this repo.

-

invidx module. Follow instructions in 'lib/py=inverted-index'/

NOTE You can create a virtual enviroment to set the specific dependences of this project in an independent enviroment, without modifying your original python enviroment. Check how to create a virtual enviroment. Using --system-site-packages flag when creating the venv will copy all the packages from your python installation. This will prevent you of re-installing packages in the new virtual enviroment that you have already installed. This will allow you to install only the new packages.

bow_pipeline folder contain the main scripts. Parameters must be specified in the settings.json file located in the folder named as the dataset.

This script extracts features from a pre-trained CNN (by default the fully convolutional VGG-16 network. It computers descriptors from the specified layer/s Layer_output parameter in a levelDB dataset in featuresDB paramenter in the settings.json, with the format [featuresDB]/[layer]_db.

NOTE Features are stored as the original dictionary created by the Net class from caffe. For reading, you should use the class Local_Feature_ReaderDB located in bow_pipeline/reader.py. This class contains methods to extract local features in the format (n_samples, n_dimensions)/image for BoW encoding. It also performs SumPooling, generating (1, n_dimensions)/image. It also contains methods to interpolate feature maps when reading. For more info in, check bow_pipeline/reader.py script.

It performs the clustering of the local features. It is necessary to set the following parameters:

TRAIN_PCA=True -- If we want PCA/whitenning (default true)

TRAIN_CENTROIDS=True -- if we want to train centroids (default true)

l2norm=True -- if we want to perform l2-norm on the features (default true)

n_centers=25000 -- # of clusters

pca_dim=512 -- dimensions when doing PCA

It is necessary to specify the settings.json (different for each dataset, which contains paths for reading features/store models.

Once the visual vocabulary is build, we can compute the assignments based on the local features/image and we can index the dataset of images. It is necessary to set the following parameters (check the script):

settings file for the dataset

dim_input -- network input dimensions in string format

network="vgg16" -- string with network name to use

list_layers -- list with layer/s to use

new_dim -- tuple with the feature map dimension

It uses the inverted file generated and generates the rankigns for the queries. It computes the assignments for the query on-the-fly. It is necessary to set parameters as in Step 3, with the additional:

- masking = 3 (Kind of masking applied on query):

0== No maks;

1 == Only consider words from foreground;

2 == Only consider words from background [CHANGED];

3 == Apply inverse weight to the foreground object

- augmentation = [0] [TO REVIEW]

0 == No augmentation;

1 == 0+Flipped image;

2 == 0+Zoomed image (same size as input net, but just capturing center crop

of the image when it has been zoomed to the double size).

3 == 0+Flipped zoomed crop

- QUERY_EXPANSION [TO_ADD]

NOTE If using bow_pipeline/D_rankings_Pooling.py; Skip steps 2 and 3. The whole pipeline is based in sumpooled features (without inverted index and codebook generation).

.txt files are generated per query under datasetFolder/lists_[bow/pooling].

It computes mean average precission for rankings generated in Step 4.

-

evaluate_Oxf_Par for Paris and Oxford datasets

-

evaluate_trecvid for TRECVid subset.

NOTE It generate map.txt in bow_pipeline folder with results.