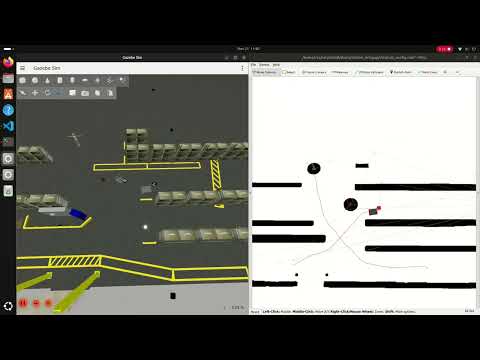

This simulation environment, based on the Ignition Gazebo simulator and ROS 2, resembles a Volvo Trucks warehouse and serves as a playground for rapid prototyping and testing of systems that rely on a multi-camera setup for perception, monitoring, localization or even navigation. This project is inspired by GPSS (Generic photo-based sensor system) that utilizes ceiling-mounted cameras, deep learning and computer vision algorithms, and very simple transport robots. [📹 GPSS demo]

- Ignition Gazebo

- Library of assets

- Real-World environment inspired design (camera position and warehouse layout)

- ROS 2 interfaces (Humble and Jazzy)

- Simple GPSS (Generic Photo-based Sensor System) navigation

- Multi-Robot localization and navigation using Nav2

- ArUco marker localization

- Bird's-Eye view projection

- Multi-Sensor Support (LiDAR, RGB camera, semantic segmentation, depth, etc.)

- Geofencing for safe zones and safe stop on collision

- Humanoid worker model

- Panda robotic arm

- Deep-learning-based human pose capture and replay

📹 Click the YouTube link below to view the SIMLAN demo video:

Installation [📹 Demo]

Ubuntu 24.04: use the instructions in dependencies.md#linux-dependencies to install Docker and ensure that your Linux user account has docker access.

Attention: Make sure to restart the computer (for the changes in group membership to take effect) before proceeding to the next step.
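As a quick sanity check after the restart, the sketch below tests whether the current user is in the `docker` group. The helper name `has_docker_group` is hypothetical and not part of this repository. It only inspects the group list reported by `id -nG` and does not contact the Docker daemon.

```shell
# Hypothetical helper (not part of SIMLAN): succeeds if the given
# space-separated group list contains the group "docker".
has_docker_group() {
  case " $1 " in
    *" docker "*) return 0 ;;
    *) return 1 ;;
  esac
}

# id -nG prints the names of all groups the current user belongs to.
if has_docker_group "$(id -nG)"; then
  echo "docker group: ok"
else
  echo "docker group: missing (add the user to the group, then restart)"
fi
```

If the group is reported as missing even though the user was added, the new membership has most likely not taken effect yet, which is what the restart above addresses.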

Windows 11: use the instructions in dependencies.md#windows-dependencies to install dependencies.

Production environment: follow the installation procedure used in .devcontainer/Dockerfile to install dependencies.

Development environment: To improve collaboration, we use VS Code and Docker, as explained in this instruction and the Docker files.

Install Visual Studio Code (VS Code) and open the project folder. VS Code will prompt you to install the required extension dependencies.

Make sure the Dev Containers extension is installed. Reopen the project in VS Code, and you will be prompted to rebuild the container. Accept the prompt; this process may take a few minutes.

Once VS Code is connected to Docker (as shown in the image below), open the terminal and run the following commands:

(If you do not see this, try building manually in VS Code by pressing Ctrl + Shift + P and selecting Dev Containers: Rebuild and Reopen in Container.)

The best place to learn about the various features, start different components, and understand the project structure is ./control.sh.

Attention: The following commands (using ./control.sh) are executed in a separate terminal tab inside VS Code.

To kill all relevant processes (related to Gazebo and ROS 2) and delete build files, recorded images, and rosbag files, use the following command:

./control.sh clean

To clean up and build the project:

./control.sh build

(Optionally, in VS Code you can click Terminal -> Run Task/Run Build Task or press Ctrl + Shift + B.)

GPSS controls (pallet trucks, aruco) [📹 Demo]

The cameras can detect ArUco markers on the floor and publish their locations to TF, both relative to the camera and as each marker's transform from the origin. The package ./camera_utility/aruco_localization contains the code for handling ArUco detection.

You can also use Nav2 to make a robot_agent (either a robot or a pallet_truck) navigate by itself to a goal position. You can find the code in simulation/pallet_truck/pallet_truck_navigation.

Run these three commands in separate terminals:

./control.sh gpss # spawn the simulation, robot_agents and GPSS ArUco detection

./control.sh nav # spawn map server, and separate nav2 stack in a separate namespace for each robot_agent

./control.sh send_goal # send navigation goals to nav2 stack for each robot_agent

camera_enabled_ids in config.sh specifies which cameras are enabled in the GPSS system for ArUco code detection and bird's-eye view.

./control.sh birdeye

RITA controls (humanoid, robotic arm) [📹 Demo]

To spawn a human worker, run the following commands:

./control.sh sim

./control.sh humanoid

We employ a deep neural network to learn the mapping between human pose and humanoid robot motion. The model takes 33 MediaPipe pose landmarks as input and predicts the corresponding robot joint positions. Read more in Humanoid Utilities.

Spawn the Panda arm inside SIMLAN and instruct it to pick and place a box with the following commands:

./control.sh panda

./control.sh plan_motion

./control.sh pick

Integration tests can be found inside of the integration_tests/test/ package. Running the tests helps maintain the project's quality. For more information about how the tests are set up, check out the package README. To run all tests, run the following command:

./control.sh build

./control.sh test

You can customize your scenarios in config.sh. There you can choose which world to run, set how many cameras are enabled, and edit humanoid-related properties. Modifying these variables is preferred over modifying the control.sh file.

In config.sh there is a variable headless_gazebo that you can set to "true" or "false". Setting it to "true" means Gazebo will run without a window, though the simulation will still run as normal. This can be useful when visualization is redundant. Setting it to "false" means the Gazebo window will be visible.
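The sketch below illustrates how a script could branch on this setting. Only the variable name headless_gazebo and its two values come from the text above. The helper `gazebo_mode` is hypothetical and does not reflect how control.sh actually consumes the variable.

```shell
# Hypothetical helper (not taken from control.sh): map the
# headless_gazebo value to a human-readable run mode.
gazebo_mode() {
  if [ "$1" = "true" ]; then
    echo "headless"
  else
    echo "gui"
  fi
}

# Value as it would be set in config.sh.
headless_gazebo="true"
echo "Gazebo mode: $(gazebo_mode "$headless_gazebo")"
```

Comparing against the literal string "true" keeps the check explicit, so any other value falls back to showing the window.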

In the config.sh script, you can adjust the world fidelity:

- default: contains the default world with the maximum number of objects

- medium: based on default, but boxes are removed

- light: based on medium, but shelves are removed

- empty: everything except the ground is removed
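As an illustration of the four levels, the following sketch validates a fidelity value and prints what it implies. The variable name `world_fidelity` and the helper `describe_world` are assumptions made for this example and are not taken from config.sh; check the script for the actual variable name.

```shell
# Hypothetical helper: describe a fidelity level listed above,
# or fail for a value that is not one of the four levels.
describe_world() {
  case "$1" in
    default) echo "all objects" ;;
    medium)  echo "default without boxes" ;;
    light)   echo "medium without shelves" ;;
    empty)   echo "ground only" ;;
    *)       echo "unknown fidelity: $1" >&2; return 1 ;;
  esac
}

# "world_fidelity" is an assumed name, not the real config.sh variable.
world_fidelity="light"
describe_world "$world_fidelity"
```

Rejecting unknown values early in this way would surface a typo in the configuration before the simulation starts.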

- gz_classic_humble branch contains code for Gazebo Classic (Gazebo 11), which has reached end-of-life (EOL).

- ign_humble branch contains code for ROS 2 Humble & Ignition Gazebo, an earlier version of this repository.

You can build the online documentation page or a PDF file by running scripts in resources/build-documentation.

- control.sh script is a shortcut to run different launch scripts; please also see these diagrams.

- config.sh contains information about which world is loaded, which cameras are active, and which robots are spawned and where.

- Marp Markdown Presentation

- Configuration Generation

- Bringup and launch files

- Pallet Truck Navigation Documentation

- Camera Utilities and notebooks: (Extrinsic/Intrinsic calibrations and Projection )

- Humanoid Utilities (pose2motion)

- CHANGELOG.md

- credits.md

- LICENSE (Apache 2)

- contributing.md

- ISSUES.md: known issues and advanced features

- simulation/: ROS 2 packages

- Simulation and Warehouse Specification (fidelity)

- Camera and Birdeye Configuration

- Building Gazebo models (Blender/Phobos)

- Objects Specifications

- Warehouse Specification

- Pallet truck bringup

- Aruco Localization Documentation

- Geofencing and Collision safe stop

- Visualize Real Data (requires data from Volvo)

- Humanoid bringup

- humanoid_robot simulation

- Humanoid Control

This work was carried out within these research projects:

- The SMILE IV project financed by Vinnova, FFI, Fordonsstrategisk forskning och innovation under the grant number 2023-00789.

- The EUREKA ITEA4 ArtWork - The smart and connected worker financed by Vinnova under the grant number 2023-00970.

| INFOTIV AB | Dyno-robotics | RISE Research Institutes of Sweden | CHALMERS | Volvo Group |
|---|---|---|---|---|

The SIMLAN project was started and is currently maintained by Hamid Ebadi. For a complete list of contributors, see the changelog.