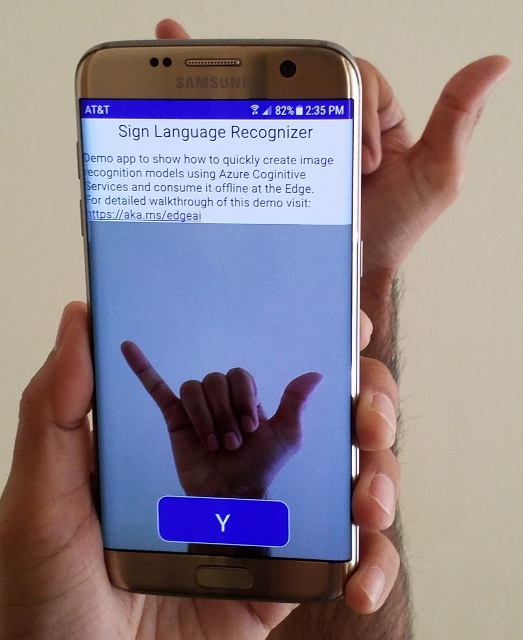

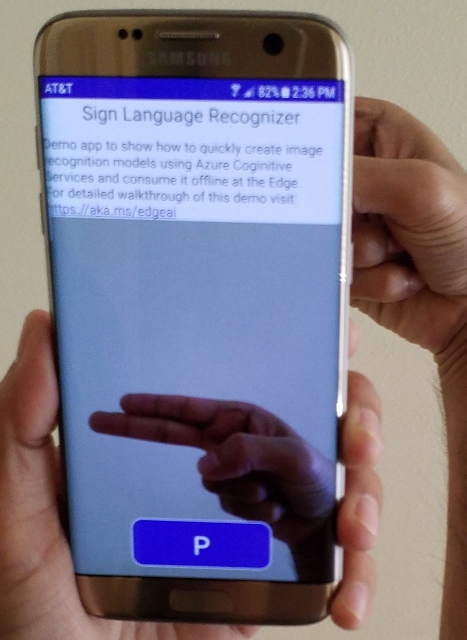

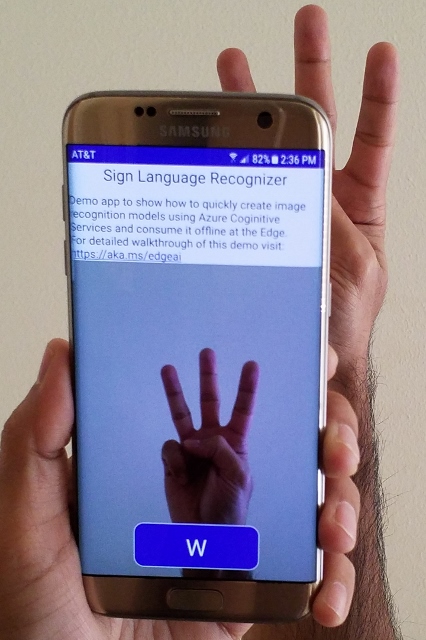

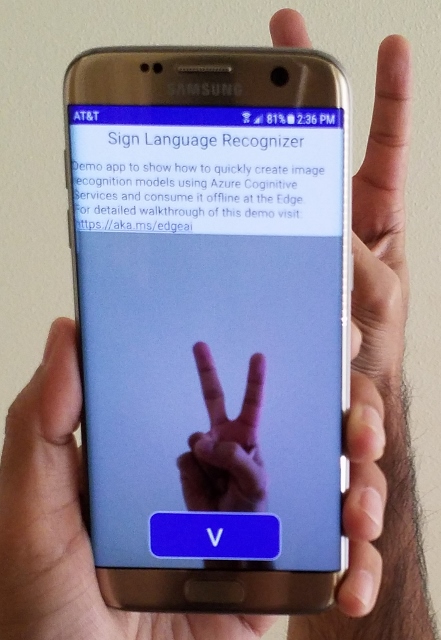

For this walkthrough we will use an Android phone or tablet as the Intelligent Edge device. The goal is to show how to quickly create image recognition models with Custom Vision Service and export them for offline use at the edge.

If you just want to test the app, you can download the APK file from here.

-

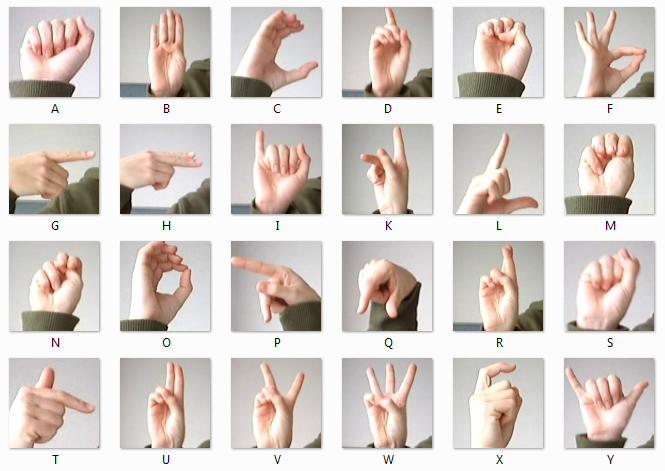

Download dataset.zip file and extract it

- Original dataset can be found here
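Before uploading anything, it can help to confirm that the extracted archive has the expected layout. The sketch below assumes the dataset extracts to one subfolder per letter (for example `dataset/A`), as the upload step later in this walkthrough implies. The function names and the list of image extensions are illustrative assumptions, not part of the dataset or of Custom Vision.

```python
from pathlib import Path

# Assumed image extensions; adjust to match the files in your copy of the dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def images_by_tag(dataset_dir):
    """Map each subfolder name (later used as the tag) to its sorted image files."""
    tags = {}
    for folder in sorted(Path(dataset_dir).iterdir()):
        if not folder.is_dir():
            continue  # ignore loose files next to the letter folders
        tags[folder.name] = sorted(
            p for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
        )
    return tags


def print_summary(dataset_dir):
    """Print one line per tag with the number of images found for it."""
    for tag, images in images_by_tag(dataset_dir).items():
        print(f"{tag}: {len(images)} images")
```

Calling `print_summary("dataset")` from the folder where you extracted the archive lists how many images each letter has, which makes an empty or missing folder easy to spot before you start tagging.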

-

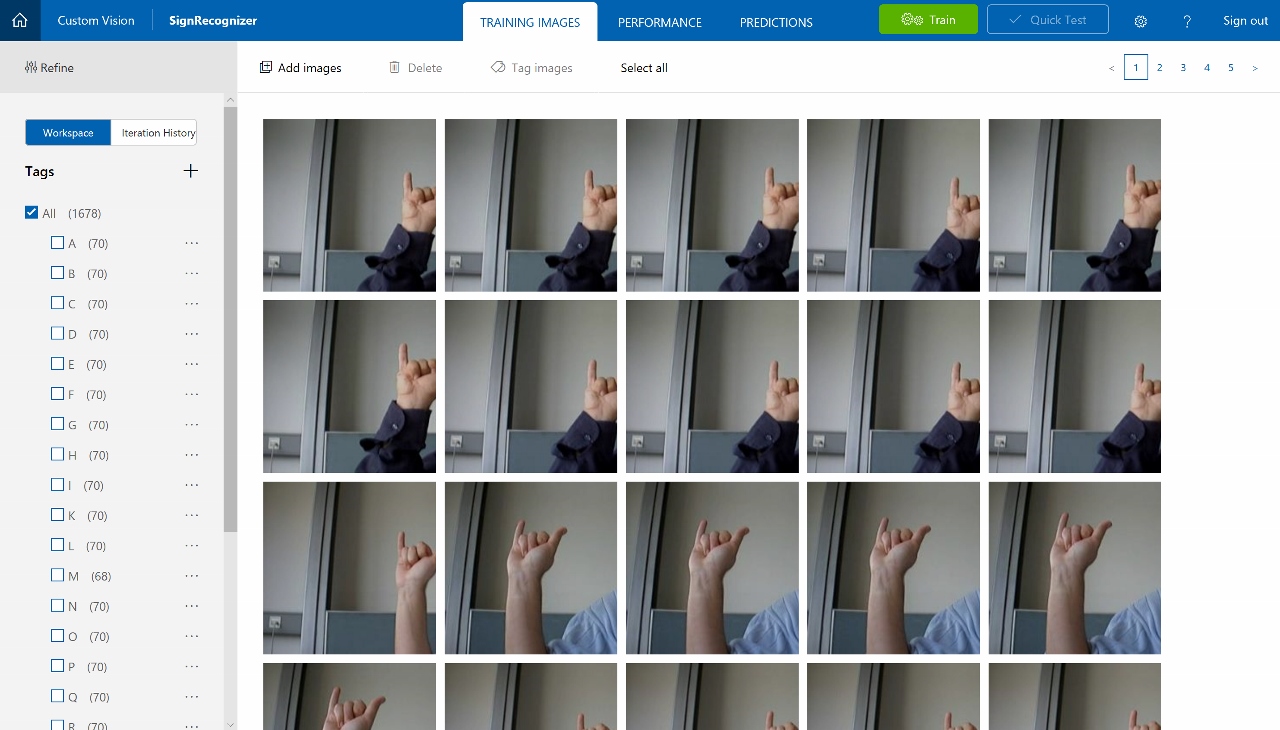

Sigin to Custom Vision Service using your Azure Account

-

Create New Project with Domains as General (compact)

-

Upload all images from dataset\A folder with Tag A

-

Repeat above step for all alphabets in the dataset...

-

Click the Train button at top to start training the model

-

Once the training is complete use the Quick Test button to upload a new image and test it.

-

Under Performance tab click Export

-

Select Android (Tensorflow) and download the zip file

-

Extract the zip file and verify that it contains model.pb and labels.txt file
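The check on the exported archive can also be scripted. This is a minimal sketch using only the Python standard library; it assumes `labels.txt` holds one tag per line, and the function name `check_export` is made up for illustration.

```python
import zipfile

REQUIRED_FILES = ("model.pb", "labels.txt")


def check_export(zip_path):
    """Return the labels from the export, or raise if a required file is missing."""
    with zipfile.ZipFile(zip_path) as archive:
        # Index entries by base name in case the files sit inside a subfolder.
        names = {name.rsplit("/", 1)[-1]: name for name in archive.namelist()}
        missing = [f for f in REQUIRED_FILES if f not in names]
        if missing:
            raise FileNotFoundError(f"export is missing: {', '.join(missing)}")
        # "utf-8-sig" also accepts a file that starts with a byte-order mark.
        text = archive.read(names["labels.txt"]).decode("utf-8-sig")
    # Assumption: one label per line; blank lines are ignored.
    return [line.strip() for line in text.splitlines() if line.strip()]
```

If the returned list does not contain every letter you tagged, the export probably came from an earlier training iteration and is worth downloading again.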

- Clone the cognitive-services-android-customvision-sample repo as a template, or use the android-mobileapp code from this repo
- Replace both the model.pb and labels.txt files in `app\src\main\assets\`
- Open the project in Android Studio
- Make any updates to the UI or labels as necessary
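If you retrain often, replacing the two files by hand gets repetitive. The following sketch copies them from the extracted export into the assets folder named above. The function name and both directory arguments are hypothetical; point them at wherever you extracted the zip and cloned the project.

```python
import shutil
from pathlib import Path

MODEL_FILES = ("model.pb", "labels.txt")


def install_model(export_dir, project_dir):
    """Copy the exported model files over the ones in the app's assets folder."""
    assets = Path(project_dir) / "app" / "src" / "main" / "assets"
    if not assets.is_dir():
        raise NotADirectoryError(f"assets folder not found: {assets}")

    # Check every source file first so a failure never leaves a half-updated app.
    sources = [Path(export_dir) / name for name in MODEL_FILES]
    for source in sources:
        if not source.is_file():
            raise FileNotFoundError(f"missing exported file: {source}")

    copied = []
    for source in sources:
        # shutil.copy2 overwrites the destination file if it already exists.
        copied.append(Path(shutil.copy2(source, assets / source.name)))
    return copied
```

For example, `install_model("export", "cognitive-services-android-customvision-sample")` would overwrite the template's model with yours, after which you can rebuild in Android Studio.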

- First, enable Developer Mode and USB debugging on the Android device
  - See instructions for the Samsung Galaxy S7 here
- Connect your device to your laptop via USB
- Click Run and select the app
- Select the connected device
  - The first time, you need to allow the camera and other permissions and then run the app again.
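If the device does not show up in Android Studio's device list, one optional way to check the USB connection is the `adb devices` command from the Android SDK platform-tools. The sketch below is not part of the original walkthrough: it assumes `adb` is on your `PATH`, and the helper names are illustrative.

```python
import subprocess


def parse_adb_devices(output):
    """Parse the text printed by `adb devices` into a {serial: state} dict."""
    devices = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blanks, the "List of devices attached" header, and daemon notices.
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split(None, 1)  # serial, then the rest of the line as state
        if len(parts) == 2:
            devices[parts[0]] = parts[1].strip()
    return devices


def connected_devices():
    """Run `adb devices` and return the parsed result (requires adb on PATH)."""
    result = subprocess.run(
        ["adb", "devices"], capture_output=True, text=True, check=True
    )
    return parse_adb_devices(result.stdout)
```

A state of `device` means the phone is ready to receive the app. A state of `unauthorized` usually means the USB debugging prompt on the phone has not been accepted yet.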