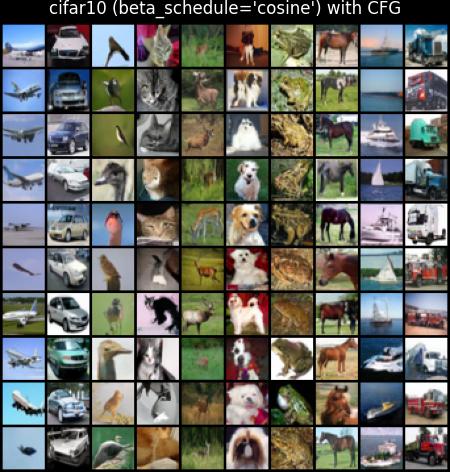

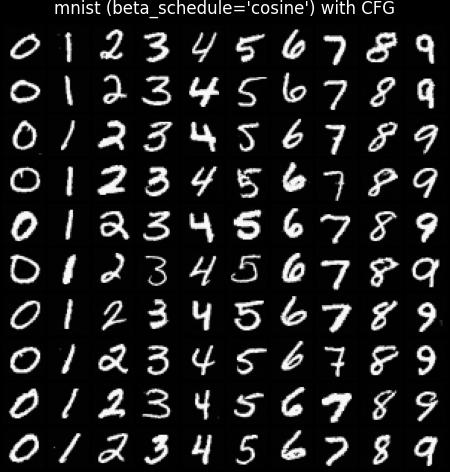

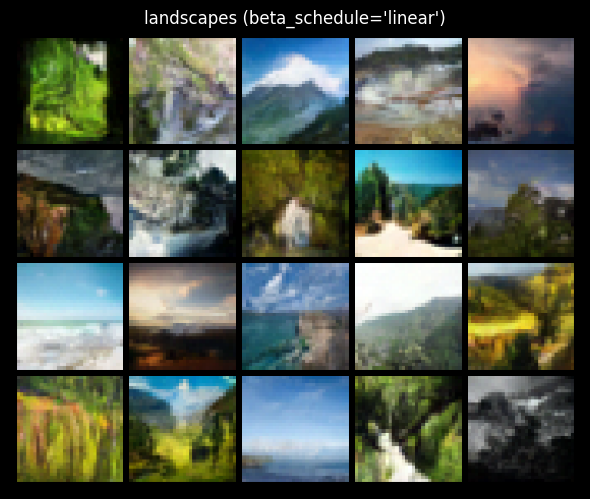

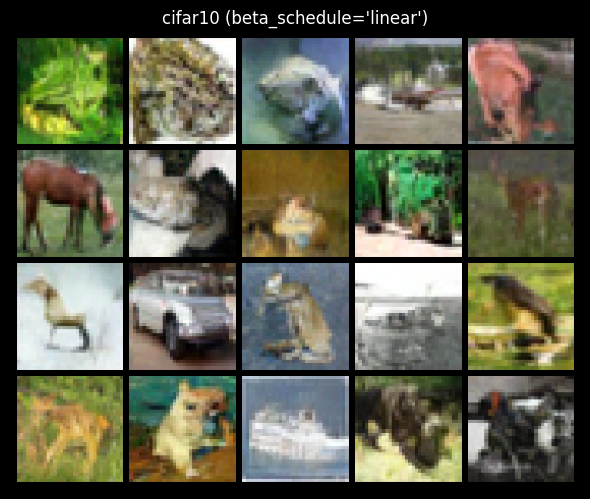

A minimal, hackable reproduction of Denoising Diffusion Probabilistic Models (DDPM), with parts of Improved DDPM (the cosine beta schedule) and Classifier-Free Guidance. The model is roughly the same UNet used in the paper, with self-attention. It also supports classifier-free guidance for conditional generation, cosine and linear beta schedules, an EMA of the model weights, and more. Datasets experimented with include CIFAR-10, MNIST and Landscapes. The training script uses Hydra for configuration and (optionally) WandB for logging. Still a work in progress, but good enough to mess around with generative diffusion models.
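The linear and cosine beta schedules mentioned above differ only in how the per-step noise variances `beta_t` are chosen. Below is a minimal pure-Python sketch, assuming the standard settings from the papers (betas from `1e-4` to `0.02` for the linear schedule; offset `s = 0.008` and a `0.999` clip for the cosine one). The function names are illustrative and not necessarily what this repo uses.

```python
import math
from typing import List


def linear_beta_schedule(timesteps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Evenly spaced betas from beta_start to beta_end (the DDPM paper's defaults)."""
    if timesteps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (timesteps - 1)
    return [beta_start + i * step for i in range(timesteps)]


def cosine_beta_schedule(timesteps: int, s: float = 0.008,
                         max_beta: float = 0.999) -> List[float]:
    """Cosine schedule from Improved DDPM.

    alpha_bar(t) = f(t) / f(0) with f(t) = cos(((t / T + s) / (1 + s)) * pi / 2) ** 2,
    and beta_t = 1 - alpha_bar(t) / alpha_bar(t - 1), clipped to max_beta so the
    last steps do not reach beta = 1.
    """
    def f(t: float) -> float:
        return math.cos(((t / timesteps + s) / (1 + s)) * math.pi / 2) ** 2

    # f(0) cancels in the ratio alpha_bar(t) / alpha_bar(t - 1).
    return [min(1 - f(t) / f(t - 1), max_beta) for t in range(1, timesteps + 1)]


def alphas_cumprod(betas: List[float]) -> List[float]:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s): how much signal variance is left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


if __name__ == "__main__":
    T = 1000
    for name, betas in [("linear", linear_beta_schedule(T)), ("cosine", cosine_beta_schedule(T))]:
        abar = alphas_cumprod(betas)
        # Fraction of the original signal variance that survives halfway through the forward process.
        print(f"{name}: alpha_bar at t={T // 2} is {abar[T // 2 - 1]:.3f}")
```

Running it shows the cosine schedule keeping far more of the signal at the midpoint than the linear one. Improved DDPM motivates the cosine schedule by observing that the linear schedule makes the end of the forward process nearly pure noise, which hurts most on low-resolution images.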

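- Install dependencies: `pip install -r requirements.txt`
- Train with the default config: `python train.py`
- Train on Landscapes: `python train.py +run=landscapes`
- Train on MNIST: `python train.py +run=mnist_cfg` (uses a lite version of the model, since MNIST images are small, 28x28x1)
- Train on CIFAR-10: `python train.py +run=cifar10_cfg`
- Sample from a trained checkpoint: `python sample.py <model checkpoint.pt> -n <number of samples> [--save]`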
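For conditional generation, classifier-free guidance runs the model twice per denoising step, once with the class label and once with a null label, and extrapolates between the two noise predictions. Below is a minimal pure-Python sketch of that combined with a standard DDPM ancestral sampling step. It assumes a hypothetical `model(x_t, t, label)` that returns predicted noise and treats `label=None` as unconditional, uses `sigma_t^2 = beta_t`, and uses the `eps_uncond + w * (eps_cond - eps_uncond)` form of guidance; this repo's `sample.py` may use a different guidance convention or scale.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

# Hypothetical model interface (an assumption, not this repo's API):
# model(x_t, t, label) returns the predicted noise for x_t at timestep t,
# and label=None means "unconditional" (the null label used in CFG training).
NoiseModel = Callable[[Sequence[float], int, Optional[int]], List[float]]


def guided_noise(model: NoiseModel, x_t: Sequence[float], t: int,
                 label: int, guidance_scale: float) -> List[float]:
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    eps_cond = model(x_t, t, label)
    eps_uncond = model(x_t, t, None)
    return [u + guidance_scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]


def ddpm_reverse_step(x_t, eps, t, betas, alphas_cumprod, rng):
    """One ancestral step x_t -> x_{t-1} from DDPM, with sigma_t^2 = beta_t (timesteps 0-indexed)."""
    beta_t = betas[t]
    coef = beta_t / math.sqrt(1.0 - alphas_cumprod[t])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta_t) for x, e in zip(x_t, eps)]
    if t == 0:
        return mean  # the last step returns the mean without adding noise
    sigma_t = math.sqrt(beta_t)
    return [m + sigma_t * rng.gauss(0.0, 1.0) for m in mean]


def sample(model, size, label, betas, guidance_scale=3.0, seed=0):
    """Start from pure Gaussian noise and denoise for len(betas) steps with CFG."""
    rng = random.Random(seed)
    alphas_cumprod, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alphas_cumprod.append(prod)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(len(betas))):
        eps = guided_noise(model, x, t, label, guidance_scale)
        x = ddpm_reverse_step(x, eps, t, betas, alphas_cumprod, rng)
    return x


if __name__ == "__main__":
    # Toy stand-in model: zero noise when unconditional, a small constant when conditioned.
    toy = lambda x, t, label: [0.0] * len(x) if label is None else [0.1] * len(x)
    print(sample(toy, size=4, label=3, betas=[0.02] * 50))
```

With this form, `guidance_scale=1.0` gives plain conditional sampling and `0.0` gives unconditional sampling; values above 1 trade sample diversity for closer adherence to the label. The default of `3.0` here is only illustrative. `betas` can be any schedule of per-step variances, such as the linear or cosine schedules mentioned above.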