Asynchronous background job processing for AWS Lambda with Ruby using Lambda Extensions. Inspired by the SuckerPunch gem but specifically tooled to work with Lambda's invoke model.

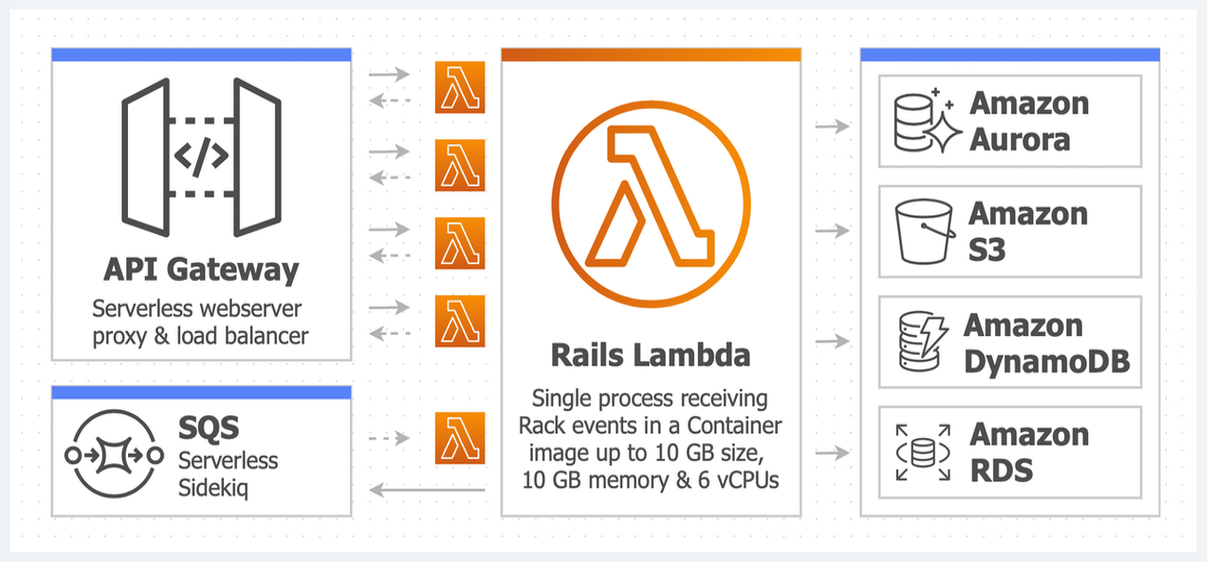

For a more robust background job solution, please consider using AWS SQS with the Lambdakiq gem, a drop-in replacement for Sidekiq when running Rails in AWS Lambda using the Lamby gem.

Because AWS Lambda freezes the execution environment after each invoke, there is no "process" that continues to run after the handler's response. However, thanks to Lambda Extensions along with its "early return", we can do two important things. First, we leverage the rb-inotify gem to send the extension process a simulated POST-INVOKE event. We then use Distributed Ruby (DRb) from the extension to signal your application to work jobs off a queue. Both of these are synchronous calls. Once complete, the LambdaPunch extension signals it is done and your function is ready for the next request.
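To make the DRb step more concrete, here is a minimal sketch of one object exposing a job queue over DRb and a second party asking it to work the queue off with a synchronous call. It is an illustration only and not LambdaPunch's actual implementation: the `DemoQueue` class, its `work_off` method, and the local URI are hypothetical names chosen for this example. In LambdaPunch the two sides are separate processes; here both run in one script so the example is self-contained.

```ruby
require 'drb/drb'

# Hypothetical stand-in for an application's job queue (not a LambdaPunch class).
class DemoQueue
  def initialize
    @jobs = Queue.new
  end

  # Store a block to be run later.
  def push(&block)
    @jobs << block
  end

  # Run every queued block in order and return how many were run.
  def work_off
    count = 0
    until @jobs.empty?
      @jobs.pop.call
      count += 1
    end
    count
  end
end

queue   = DemoQueue.new
results = []
queue.push { results << :flushed_metrics }
queue.push { results << :sent_email }

# "Application" side: expose the queue through a DRb server on an ephemeral local port.
server = DRb.start_service('druby://127.0.0.1:0', queue)

# "Extension" side: look the object up by URI and make a blocking call.
remote = DRbObject.new_with_uri(server.uri)
worked = remote.work_off

DRb.stop_service
```

Because `work_off` only returns once the queue is empty, the caller knows the work is finished when the call completes, which is the property the paragraph above relies on.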

The LambdaPunch extension process is very small and lean. It only requires a few Ruby libraries and needed gems from your application's bundle. Its only job is to send signals back to your application on the runtime. It does this within a few milliseconds and adds no noticeable overhead to your function.

Add this line to your project's Gemfile and then make sure to bundle install afterward. It is only needed in the production group.

    gem 'lambda_punch'

Within your project or Rails application's Dockerfile, add the following. Make sure you do this before you COPY your code. The idea is to implicitly use the default USER root since it needs permission to create an /opt/extensions directory.

    RUN gem install lambda_punch && lambda_punch install

LambdaPunch uses the LAMBDA_TASK_ROOT environment variable to find your project's Gemfile. If you are using a provided AWS Runtime container, this should be set for you to /var/task. However, if you are using your own base image, make sure to set this to your project directory.

    ENV LAMBDA_TASK_ROOT=/app

Installation with AWS Lambda via the Lamby v4 (or higher) gem can be done using Lamby's handled_proc config. For example, append these to your config/environments/production.rb file. Here we are ensuring that the LambdaPunch DRb server is running and that after each Lamby request we notify LambdaPunch.

    config.to_prepare { LambdaPunch.start_server! }

    config.lamby.handled_proc = Proc.new do |_event, context|
      LambdaPunch.handled!(context)
    end

If you are using an older version of Lamby or a simple Ruby project with your own handler method, the installation would look something like this:

    LambdaPunch.start_server!

    def handler(event:, context:)
      # ...
    ensure
      LambdaPunch.handled!(context)
    end

Anywhere in your application's code, use the LambdaPunch.push method to add blocks of code to your jobs queue.

    LambdaPunch.push do
      # ...
    end

A common use case would be to ensure the New Relic APM flushes its data after each request. Using Lamby in your config/environments/production.rb file would look like this:

    config.to_prepare { LambdaPunch.start_server! }

    config.lamby.handled_proc = Proc.new do |_event, context|
      LambdaPunch.push { NewRelic::Agent.agent.flush_pipe_data }
      LambdaPunch.handled!(context)
    end

You can use LambdaPunch with Rails' ActiveJob. For a more robust background job solution, please consider using AWS SQS with the Lambdakiq gem.

    config.active_job.queue_adapter = :lambda_punch

Your function's timeout is the maximum amount of time allowed to handle the request and process all of the extension's invoke events. If your function times out, it is possible that queued jobs will not be processed until the next invoke.

If your application integrates with API Gateway (which has a 30 second timeout) then it is possible your function can be set with a higher timeout to perform background work. Since work is done after each invoke, the LambdaPunch queue should be empty when your function receives the SHUTDOWN event. If jobs are in the queue when this happens they will have two seconds max to work them down before being lost.
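The two-second window at SHUTDOWN can be pictured as a time-bounded drain of the queue. The sketch below is a hypothetical illustration and not LambdaPunch's code: `drain_with_deadline` and the injected `clock` are names made up for this example, and a fake clock stands in for real time so the outcome is deterministic.

```ruby
# Hypothetical helper (not part of LambdaPunch): run queued jobs until the
# queue is empty or the time budget is spent. Returns how many jobs are left.
def drain_with_deadline(jobs, budget_seconds,
                        clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
  deadline = clock.call + budget_seconds
  # The deadline is only checked between jobs; a job that has already
  # started is not interrupted by this loop.
  jobs.shift.call while !jobs.empty? && clock.call < deadline
  jobs.size
end

# Fake clock: each job "takes" 0.8 seconds by advancing the counter.
now        = 0.0
fake_clock = -> { now }
done       = []
jobs       = Array.new(4) { |i| -> { now += 0.8; done << i } }

left = drain_with_deadline(jobs, 2.0, clock: fake_clock)
```

With four jobs of 0.8 seconds each and a two-second budget, three jobs start before the deadline and one is left in the queue. This is why the queue should normally be worked down after each invoke rather than left for SHUTDOWN.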

For a more robust background job solution, please consider using AWS SQS with the Lambdakiq gem.

The default log level is error, so you will not see any LambdaPunch lines in your logs. However, if you want some low level debugging information on how LambdaPunch is working, you can use this environment variable to change the log level.

    Environment:
      Variables:
        LAMBDA_PUNCH_LOG_LEVEL: debug

As jobs are worked off the queue, all job errors are simply logged. If you want to customize this, you can set your own error handler.

    LambdaPunch.error_handler = lambda { |e| ... }

When using Extensions, your function's CloudWatch Duration metrics will be the sum of your response time combined with your extension's execution time. For example, if your request takes 200ms to respond but your background task takes 1000ms your duration will be a combined 1200ms. For more details see the "Performance impact and extension overhead" section of the Lambda Extensions API.

Thankfully, when using Lambda Extensions, CloudWatch will create a PostRuntimeExtensionsDuration metric that you can use to isolate your true response time from the total Duration using some metric math. Here is an example where the metric math above is used in the first "Duration (response)" widget.
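As a rough illustration of that metric math, the sketch below assumes the expression is a simple subtraction of PostRuntimeExtensionsDuration from Duration. That formula is an assumption made here, since the widget definition is not reproduced in the text, and the datapoints are made-up sample values. The first pair matches the 200ms and 1000ms example given earlier.

```ruby
# Hypothetical per-invoke datapoints in milliseconds (made-up sample values).
duration                         = [1200, 950, 1430]
post_runtime_extensions_duration = [1000, 700, 1200]

# Assumed metric math: response time = Duration - PostRuntimeExtensionsDuration.
response = duration.zip(post_runtime_extensions_duration)
                   .map { |total, extension| total - extension }

response.each_with_index do |ms, i|
  puts "invoke #{i + 1}: response #{ms}ms, extension #{post_runtime_extensions_duration[i]}ms"
end
```

In CloudWatch the same subtraction would be written as a metric math expression over the two metrics rather than computed in Ruby.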

After checking out the repo, run the following commands to build a Docker image and install dependencies.

    $ ./bin/bootstrap
    $ ./bin/setup

Then, to run the tests, use the following command.

    $ ./bin/test

You can also run the ./bin/console command for an interactive prompt within the development Docker container. Likewise, you can use ./bin/run ... followed by any command, which will be executed within the same container.

Bug reports and pull requests are welcome on GitHub at https://github.com/rails-lambda/lambda_punch. This project is intended to be a safe, welcoming space for collaboration, and contributors are expected to adhere to the code of conduct.

The gem is available as open source under the terms of the MIT License.

Everyone interacting in the LambdaPunch project's codebases, issue trackers, chat rooms and mailing lists is expected to follow the code of conduct.