✔️ Enhanced Stability: Our method is more stable than LoRA-DreamBooth. Here, stability refers to how much the generated images vary across different learning rates and Kronecker factors/ranks; LoRA-DreamBooth's greater sensitivity to these settings makes it harder to fine-tune.

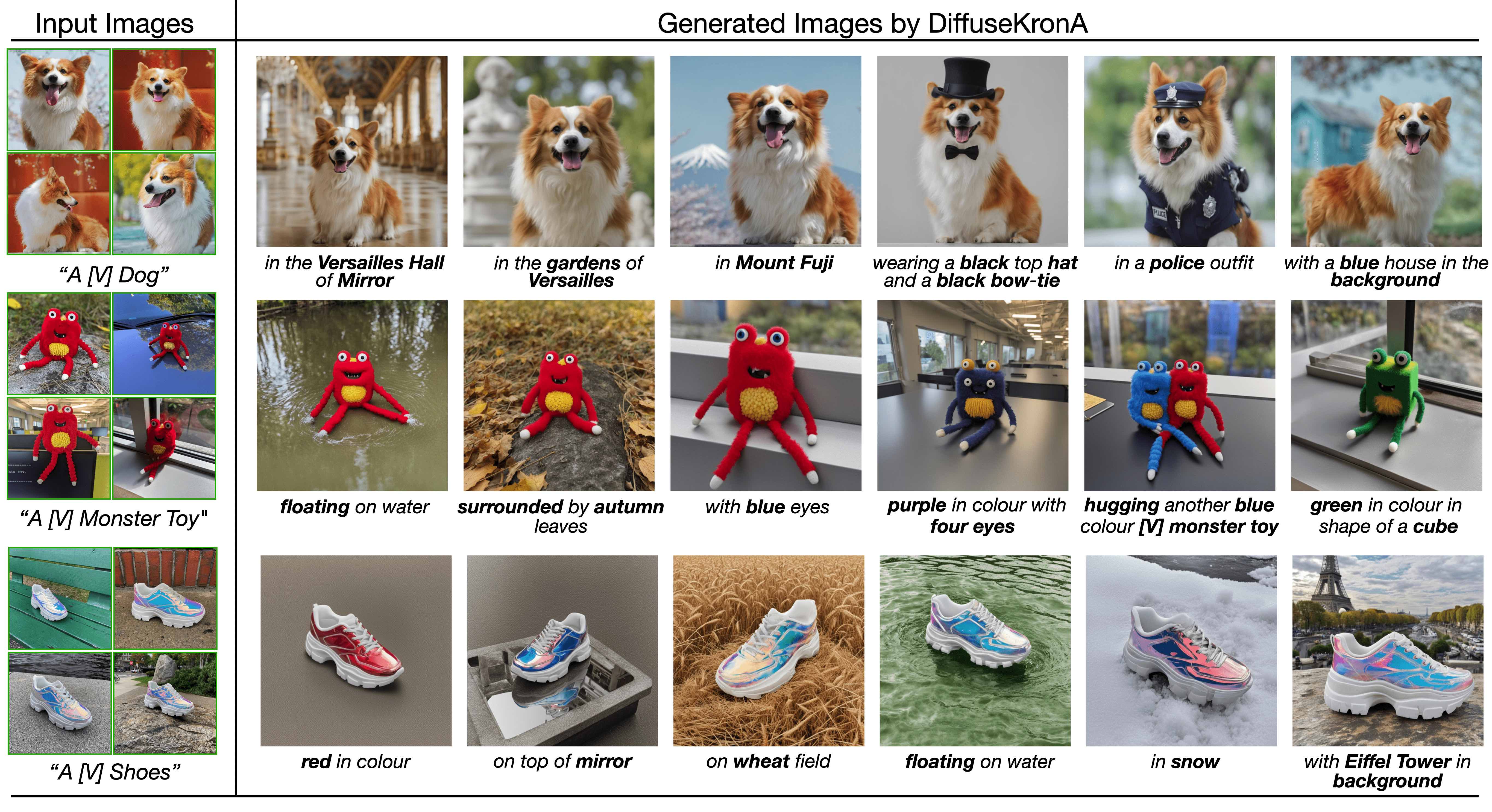

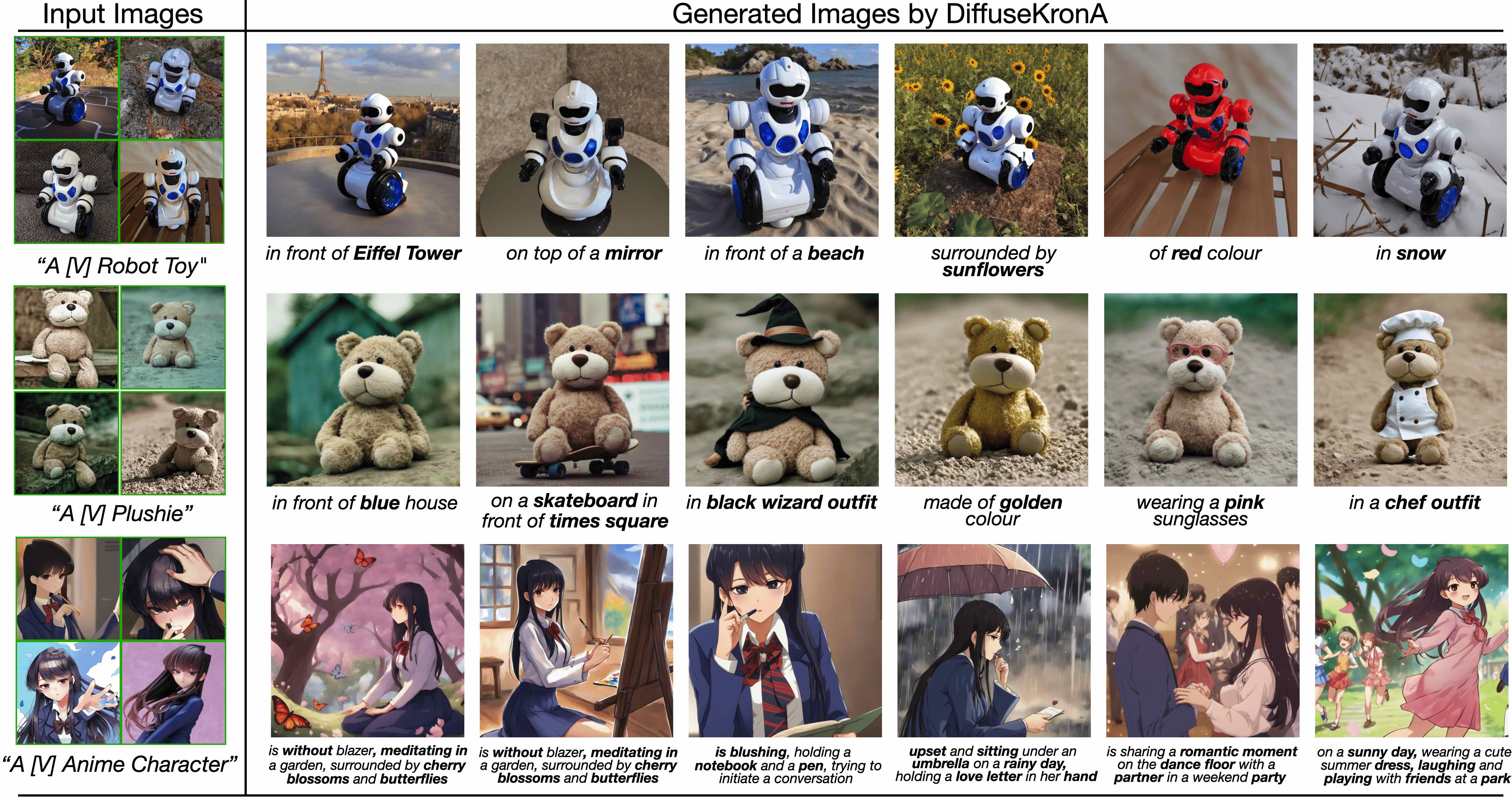

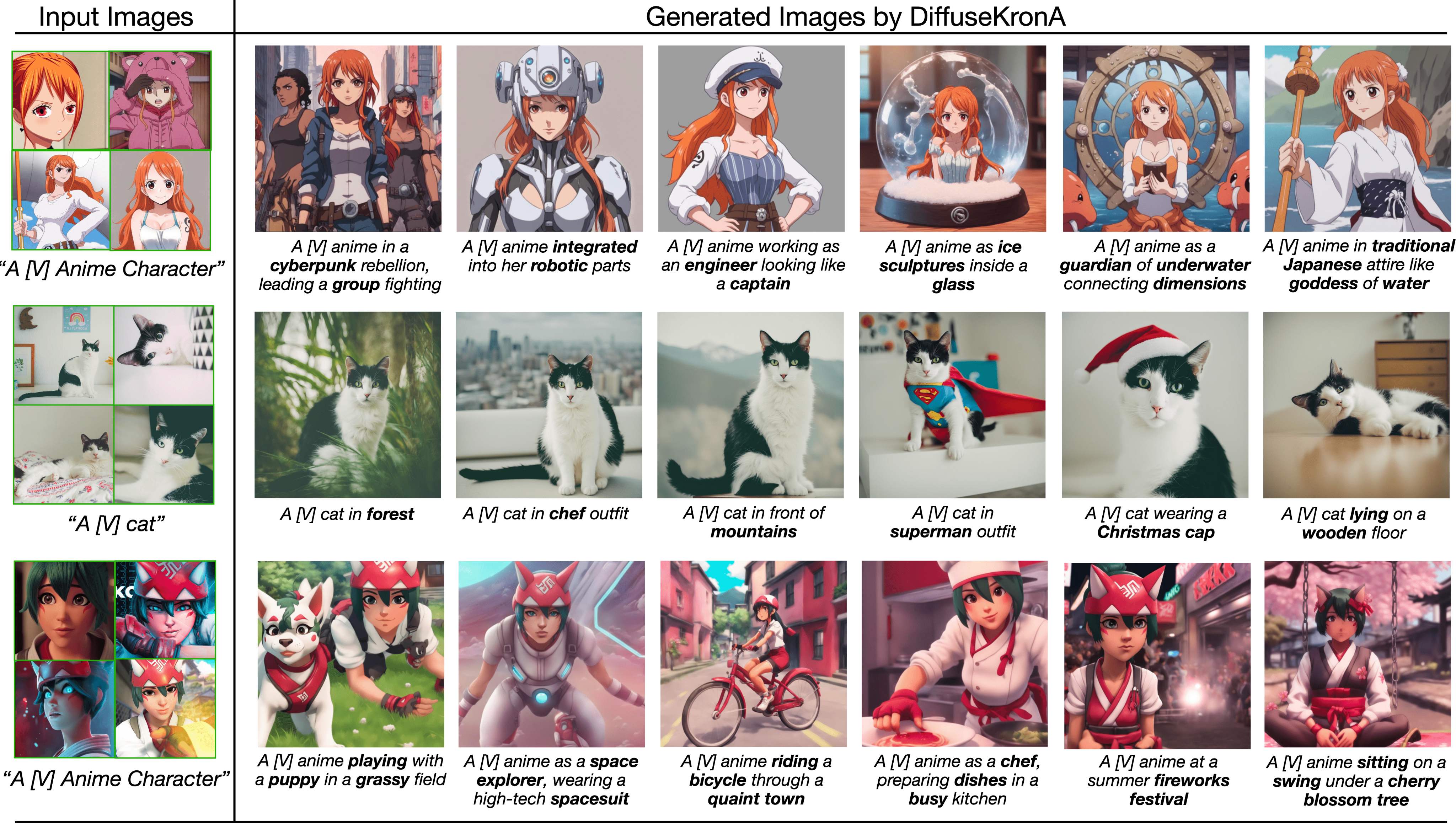

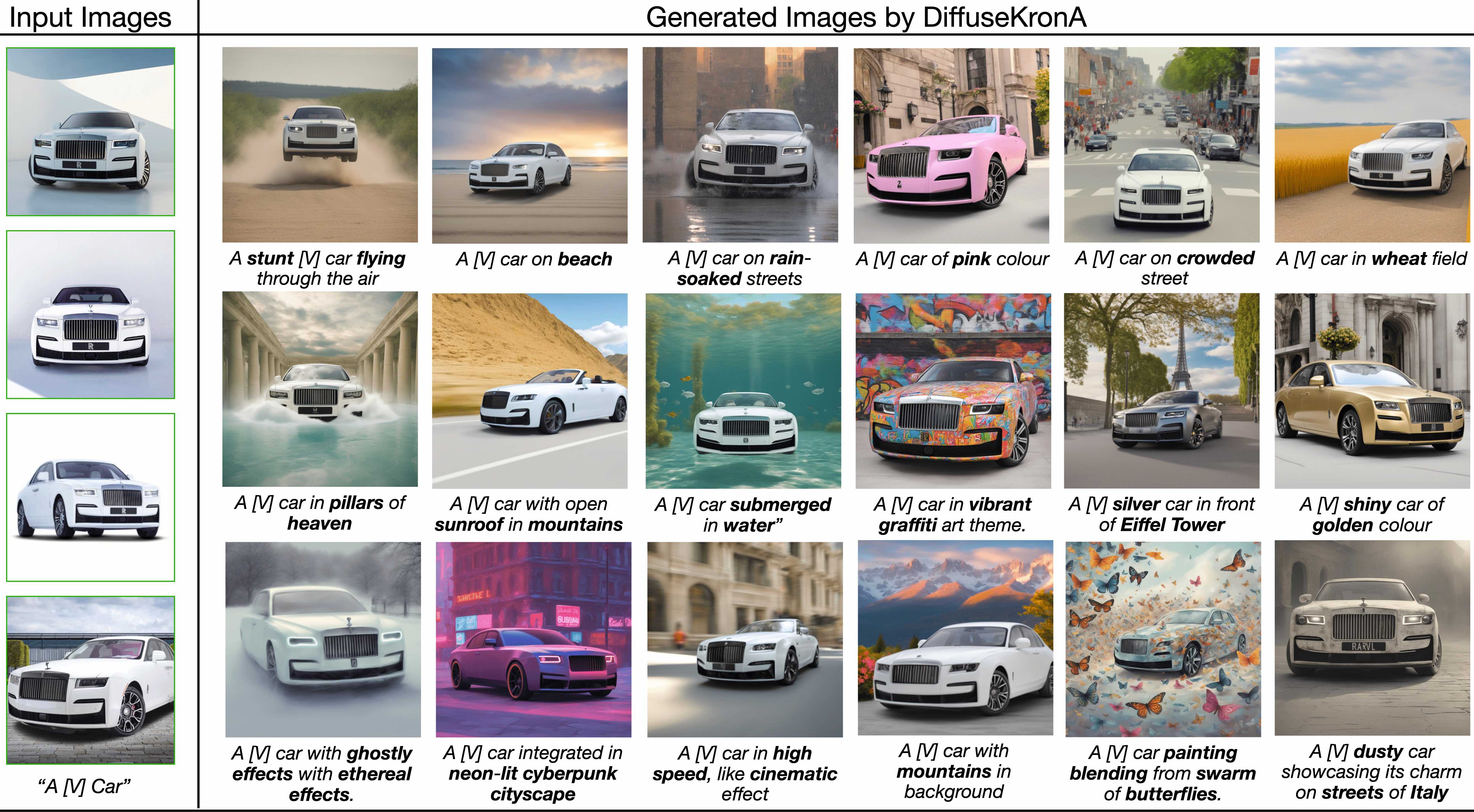

✔️ Text Alignment and Fidelity: On average, DiffuseKronA better captures subject semantics and follows long, context-rich prompts.

✔️ Interpretability: DiffuseKronA leverages the Kronecker product to capture structured relationships in attention-weight matrices, and its more controllable decomposition makes it more interpretable.
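To make the "structured relationships" point concrete, here is a minimal pure-Python sketch (not code from this repository) of the Kronecker product A ⊗ B. Every (i, j) block of the result is the small factor B scaled by the single entry A[i][j], so an update built this way is organized into repeated, scaled copies of one pattern. The helper names `kron` and `block` and the toy matrices are hypothetical, chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def kron(a: Matrix, b: Matrix) -> Matrix:
    """Kronecker product of a (m x n) and b (p x q), giving an (m*p) x (n*q) matrix."""
    b_rows, b_cols = len(b), len(b[0])
    out = [[0.0] * (len(a[0]) * b_cols) for _ in range(len(a) * b_rows)]
    for i, a_row in enumerate(a):
        for j, a_ij in enumerate(a_row):
            # Block (i, j) of the result is a_ij * b.
            for p in range(b_rows):
                for q in range(b_cols):
                    out[i * b_rows + p][j * b_cols + q] = a_ij * b[p][q]
    return out


def block(m: Matrix, i: int, j: int, rows: int, cols: int) -> Matrix:
    """Extract the (i, j) block of size rows x cols from m."""
    return [r[j * cols:(j + 1) * cols] for r in m[i * rows:(i + 1) * rows]]


A = [[1.0, 2.0], [3.0, 4.0]]  # 2 x 2 "coarse" factor
B = [[0.0, 1.0], [1.0, 0.0]]  # 2 x 2 "fine" pattern
delta_w = kron(A, B)          # 4 x 4 structured update

for row in delta_w:
    print(row)

# The lower-left block is exactly A[1][0] * B = 3 * B.
print(block(delta_w, 1, 0, 2, 2))  # → [[0.0, 3.0], [3.0, 0.0]]
```

Reading ΔW block by block is what makes the decomposition easy to inspect: A decides how strongly each region of the weight matrix is updated, while B decides the shape of the update inside each region.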

Overview of DiffuseKronA:

✨ The fine-tuning process optimizes the multi-head attention parameters (Q, K, V, and O) using a Kronecker adapter, as elaborated in the subsequent blocks.

✨ During inference, the newly trained parameters, denoted as θ, are integrated with the original weights Dϕ, and images are synthesized using the updated personalized model Dϕ+θ.

✨ We also present a schematic illustration of LoRA vs. DiffuseKronA: LoRA is limited to a single controllable parameter, the rank r, whereas the Kronecker product offers enhanced interpretability by introducing two controllable parameters, a1 and a2 (or equivalently b1 and b2). Furthermore, we showcase the advantages of the proposed method.
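The difference in controllable parameters can be illustrated by counting trainable weights for one projection matrix. This is a sketch under stated assumptions, not the repository's implementation: it assumes a LoRA update ΔW = B·A with B of size d × r and A of size r × k, and a Kronecker update ΔW = A ⊗ B with A of size a1 × a2 and B of size b1 × b2, where a1·b1 = d and a2·b2 = k. The 640 × 640 shape and the function names are hypothetical.

```python
from typing import Iterator, Tuple


def lora_params(d: int, k: int, r: int) -> int:
    """LoRA update dW = B @ A with B: d x r and A: r x k."""
    return r * (d + k)


def kronecker_params(d: int, k: int, a1: int, a2: int) -> int:
    """Kronecker update dW = A kron B with A: a1 x a2 and B: b1 x b2.

    The shape constraints a1 * b1 = d and a2 * b2 = k mean that choosing
    (a1, a2) fixes (b1, b2), so either pair can serve as the two knobs.
    """
    if d % a1 or k % a2:
        raise ValueError("a1 must divide d and a2 must divide k")
    b1, b2 = d // a1, k // a2
    return a1 * a2 + b1 * b2


def factor_choices(d: int, k: int) -> Iterator[Tuple[int, int, int]]:
    """Yield every valid (a1, a2, parameter count) for a d x k weight."""
    for a1 in (x for x in range(1, d + 1) if d % x == 0):
        for a2 in (x for x in range(1, k + 1) if k % x == 0):
            yield a1, a2, kronecker_params(d, k, a1, a2)


d = k = 640  # hypothetical square attention projection
for r in (4, 8, 16):
    print(f"LoRA r={r:<3} -> {lora_params(d, k, r):>6} trainable params")
for a1, a2 in ((16, 16), (32, 20), (64, 64)):
    print(f"Kron a1={a1}, a2={a2} -> {kronecker_params(d, k, a1, a2):>6} trainable params")

# Smallest possible Kronecker budget over all valid (a1, a2) pairs.
print(min(c for *_, c in factor_choices(d, k)))  # → 1280
```

LoRA exposes a single dial (r), and the cost grows linearly with it. The Kronecker form trades off the sizes of its two factors, so the same matrix can be adapted with very different budgets and block structures by moving a1 and a2.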

- Create a conda environment

conda create -y -n diffusekrona python=3.11

conda activate diffusekrona

- Package installation

pip install diffusers==0.21.0

pip install -r requirements.txt

pip install accelerate
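Before moving on, a quick sanity check can confirm that the pinned `diffusers` version and `accelerate` are actually installed in the active environment. The sketch below is a hypothetical helper (`check_packages` is not part of the repository) built on the standard-library `importlib.metadata`; it only checks the two packages named in these steps, not everything listed in `requirements.txt`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Optional

# Pins taken from the installation steps; None means "any version is fine".
EXPECTED: Dict[str, Optional[str]] = {"diffusers": "0.21.0", "accelerate": None}


def check_packages(expected: Dict[str, Optional[str]]) -> List[str]:
    """Return a list of human-readable problems (empty if everything matches)."""
    problems: List[str] = []
    for name, pinned in expected.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed")
            continue
        if pinned is not None and installed != pinned:
            problems.append(f"{name}: found {installed}, expected {pinned}")
    return problems


if __name__ == "__main__":
    issues = check_packages(EXPECTED)
    print("\n".join(issues) if issues else "All checked packages look fine.")
```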

- Install CLIP

pip install git+https://github.com/openai/CLIP.git

Note: With `diffusers==0.21.0`, you will get an `ImportError: cannot import name 'cached_download' from 'huggingface_hub'` error, because recent `huggingface_hub` releases no longer provide `cached_download`. To fix it, remove the line `from huggingface_hub import HfFolder, cached_download, hf_hub_download, model_info` from the `diffusers/utils/dynamic_modules_utils.py` script.
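If you prefer not to edit the installed file by hand, the same one-line removal can be scripted. This is a hypothetical helper, not part of the repository: it assumes the offending line appears exactly as quoted in the note and that the file lives at `diffusers/utils/dynamic_modules_utils.py` inside the installed package. It locates the package with `importlib.util.find_spec` (which does not import `diffusers`) and keeps a `.bak` copy of the original file.

```python
import importlib.util
import shutil
from pathlib import Path
from typing import List, Tuple

# The import line quoted in the note.
BAD_IMPORT = (
    "from huggingface_hub import HfFolder, cached_download, "
    "hf_hub_download, model_info"
)


def strip_line(source: str, target: str = BAD_IMPORT) -> Tuple[str, int]:
    """Drop every line whose stripped text equals `target`; return (new source, lines removed)."""
    kept: List[str] = []
    removed = 0
    for line in source.splitlines(keepends=True):
        if line.strip() == target:
            removed += 1
        else:
            kept.append(line)
    return "".join(kept), removed


def patch_diffusers() -> Path:
    """Remove BAD_IMPORT from the installed diffusers/utils/dynamic_modules_utils.py.

    A copy of the original file is kept next to it with a .bak suffix.
    """
    spec = importlib.util.find_spec("diffusers")
    if spec is None or not spec.submodule_search_locations:
        raise RuntimeError("diffusers is not installed in the active environment")
    pkg_dir = Path(list(spec.submodule_search_locations)[0])
    target = pkg_dir / "utils" / "dynamic_modules_utils.py"
    patched, removed = strip_line(target.read_text(encoding="utf-8"))
    if removed:
        shutil.copy2(target, target.with_name(target.name + ".bak"))
        target.write_text(patched, encoding="utf-8")
    return target
```

Call `patch_diffusers()` once from inside the activated `diffusekrona` environment. Running it again is harmless, because the line is only removed (and the backup only written) when it is still present.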

- Clone the dataset and remove the `*subject/generated` subfolders

git clone https://github.com/diffusekrona/data && rm -rf data/.git
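After cloning, the `generated` subfolders still need to be removed. A minimal sketch, assuming the dataset is laid out as `data/<subject>/generated` (the helper `remove_generated_dirs` is hypothetical), lists the matching folders first and only deletes them when `dry_run=False`:

```python
import shutil
from pathlib import Path
from typing import List


def remove_generated_dirs(data_root: str = "data", dry_run: bool = True) -> List[Path]:
    """Find (and optionally delete) every <data_root>/<subject>/generated folder."""
    targets = sorted(p for p in Path(data_root).glob("*/generated") if p.is_dir())
    for path in targets:
        if dry_run:
            print(f"would remove {path}")
        else:
            shutil.rmtree(path)
    return targets
```

Run `remove_generated_dirs()` first to review what would be deleted, then `remove_generated_dirs(dry_run=False)` to remove the folders.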

mkdir outputs

cd diffusekrona/

python format_datasets.py # To format the dataset (NOT mandatory)

- Finetune DiffuseKronA using the script files

cd diffusekrona/ # RUN inside diffusekrona folder

CUDA_VISIBLE_DEVICES=$GPU_ID bash scripts/finetune_sdxl.sh # Leveraging SDXL model

CUDA_VISIBLE_DEVICES=$GPU_ID bash scripts/finetune_sd.sh # Leveraging SD model

- Generate images from the finetuned weights (RUN inside diffusekrona folder)

CUDA_VISIBLE_DEVICES=$GPU_ID accelerate launch scripts/inference_sdxl.sh # Leveraging SDXL model

CUDA_VISIBLE_DEVICES=$GPU_ID accelerate launch scripts/inference_sd.sh # Leveraging SD model

Note: Specify a single GPU index only (e.g., `CUDA_VISIBLE_DEVICES=0`) and avoid listing multiple IDs.
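To catch a multi-GPU value before a long run starts, a small guard can parse `CUDA_VISIBLE_DEVICES` and insist on exactly one index. This is a hypothetical helper, not something the scripts already do; it deliberately accepts only integer indices, matching the example in the note (CUDA also accepts GPU UUIDs, which this sketch rejects).

```python
from typing import Optional


def single_gpu_index(value: Optional[str]) -> int:
    """Parse a CUDA_VISIBLE_DEVICES value and require exactly one integer index."""
    if value is None or not value.strip():
        raise ValueError("CUDA_VISIBLE_DEVICES is not set; use e.g. CUDA_VISIBLE_DEVICES=0")
    ids = [v.strip() for v in value.split(",") if v.strip()]
    if len(ids) != 1:
        raise ValueError(f"expected exactly one GPU index, got {ids}")
    if not ids[0].isdigit():
        raise ValueError(f"GPU index must be a non-negative integer, got {ids[0]!r}")
    return int(ids[0])
```

For example, `single_gpu_index(os.environ.get("CUDA_VISIBLE_DEVICES"))` returns `0` for `CUDA_VISIBLE_DEVICES=0` and raises `ValueError` for `0,1`.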

For more results, please visit here.

Our codebase is built on top of the HuggingFace Diffusers library, and we’re incredibly grateful for their amazing work!

If you think this project is helpful, please feel free to leave a star⭐️ and cite our paper:

@InProceedings{Marjit_2025_WACV,

author = {Marjit, Shyam and Singh, Harshit and Mathur, Nityanand and Paul, Sayak and Yu, Chia-Mu and Chen, Pin-Yu},

title = {DiffuseKronA: A Parameter Efficient Fine-Tuning Method for Personalized Diffusion Models},

booktitle = {Proceedings of the Winter Conference on Applications of Computer Vision (WACV)},

month = {February},

year = {2025},

pages = {3529-3538}

}

Shyam Marjit: marjitshyam@gmail.com or shyam.marjit@iiitg.ac.in