Graphic Author: Natalia Rusin

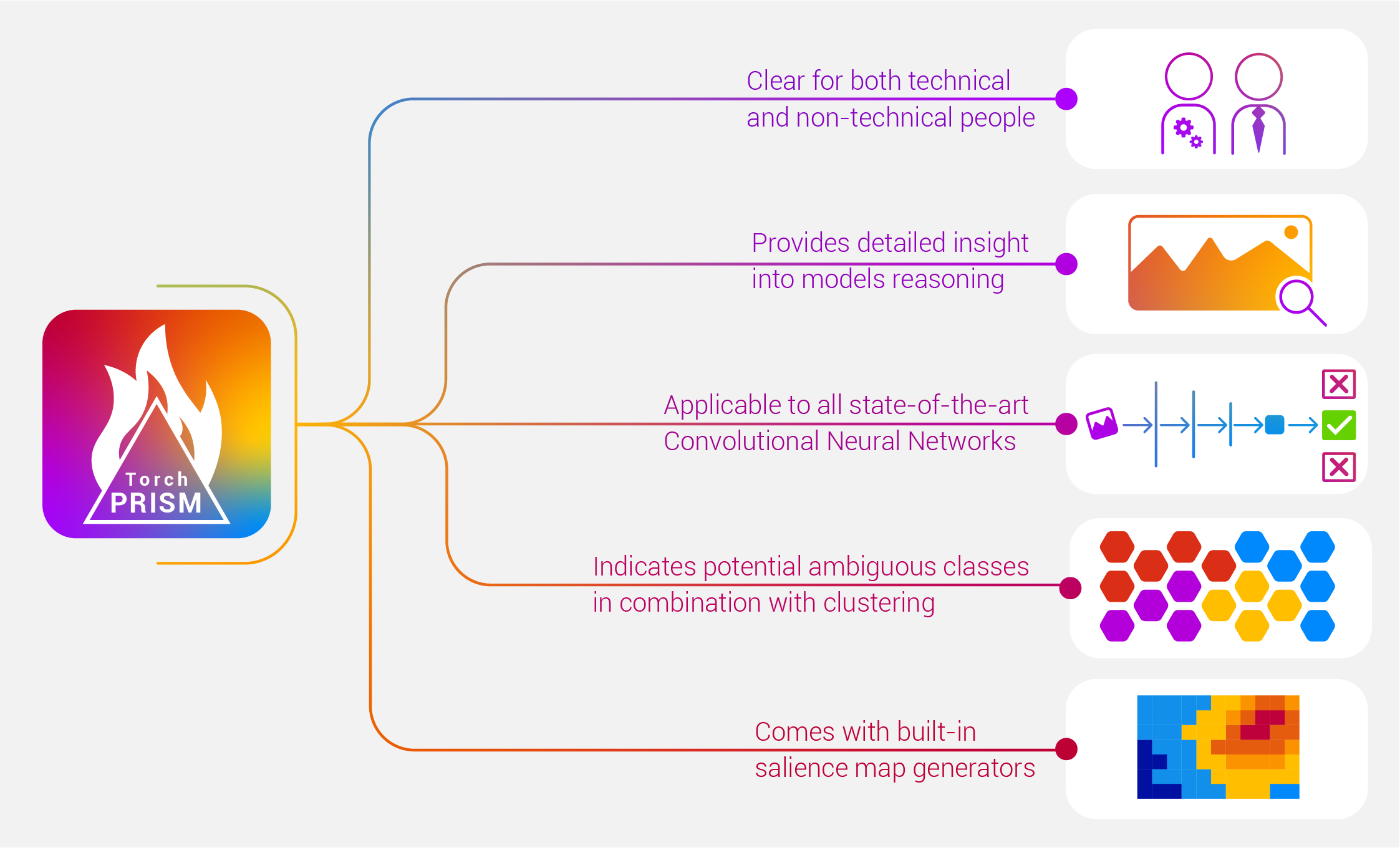

A novel tool that uses Principal Component Analysis to display the discriminative features detected by a given convolutional neural network. It is compatible with virtually all CNNs.

- Usage

  - `prism` arguments
  - Other arguments

- Demo

- Results

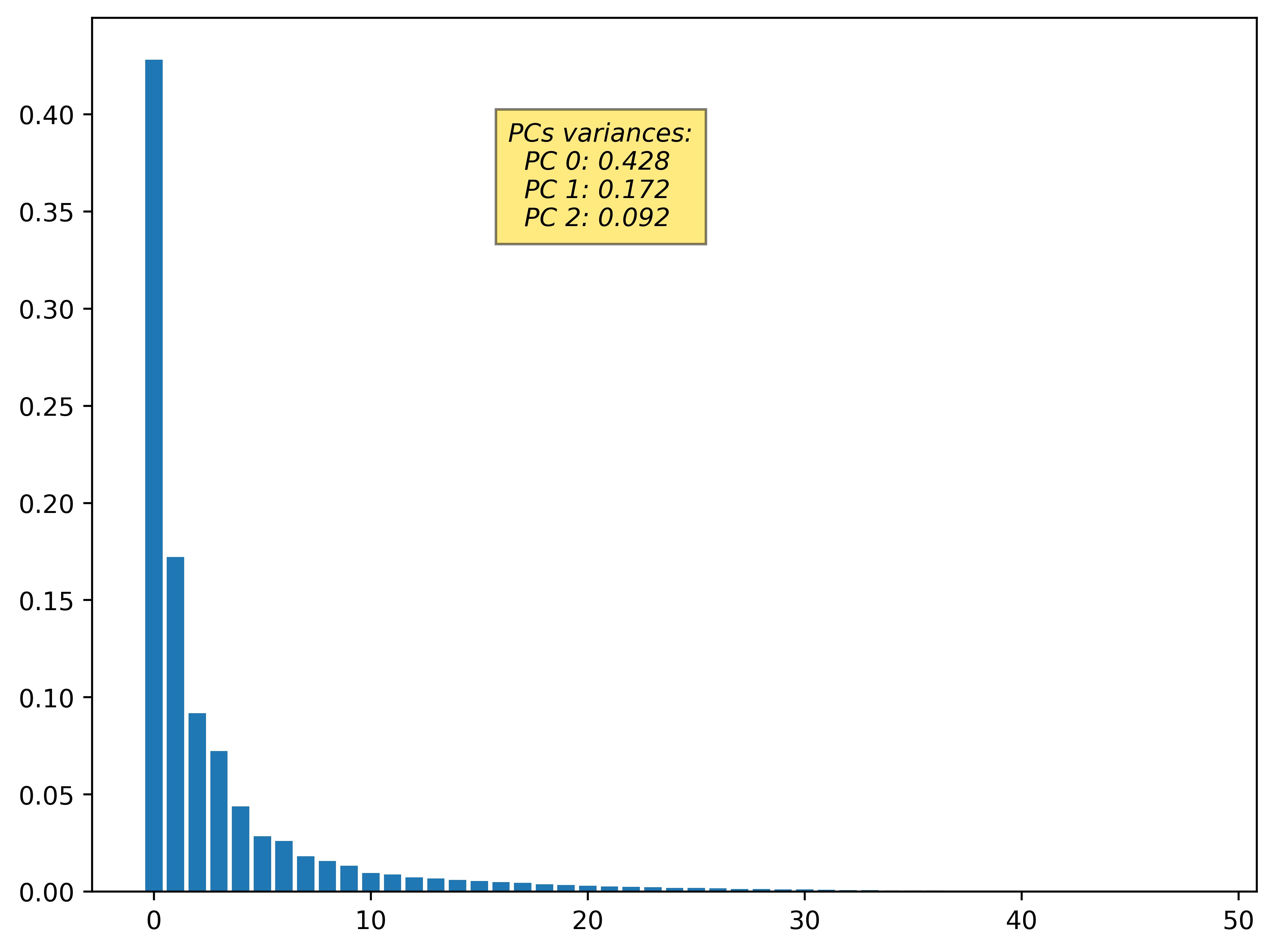

- Variance across all Principal Components

- Saliency maps integration

- Clustering

- Read more

For the user's convenience we have prepared an executable, `prism`, that is configured through command-line arguments.

To use it, first prepare a virtual environment:

```bash
python3 -m venv venv
source ./venv/bin/activate
pip install --upgrade pip
pip install -r requirements.txt
./prism
```

| Argument | Description | Default |
|---|---|---|
| `--input=/path/to/...` | Path from where to take images. Note it is a glob, so the value `./samples/**/*.jpg` will mean: jpg images from ALL subfolders of samples | `./samples/*.jpg` |
| `--model=model-name` | Model to be used with PRISM. Note that Gradual Extrapolation may not behave properly for some models outside the vgg family. | `vgg16` |
| `--saliency=model-name` | Makes TorchPRISM perform the chosen saliency map generating process and combines it with PRISM’s output. Currently supports: Contrastive Excitation Backpropagation (`exct-backp`), GradCAM (`gradcam`) | `none` |
| `--cluster` | Generates a binary file with a list of lists: which image contains which features according to PRISM. It can be further used for clustering in the script `som.py` | |
| `--help` | Print help details and exit | |
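The difference between the default `./samples/*.jpg` and a recursive pattern such as `./samples/**/*.jpg` can be checked with Python's standard `glob` module. This is only an illustration of glob semantics; the helper `find_images` and the temporary folder layout are hypothetical and are not part of TorchPRISM.

```python
import glob
import os
import tempfile


def find_images(pattern):
    """Return sorted paths matching a glob pattern; '**' spans nested folders."""
    return sorted(glob.glob(pattern, recursive=True))


# Build a throwaway tree: samples/a.jpg and samples/wolves/b.jpg
root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "samples", "wolves"))
for rel in ("samples/a.jpg", "samples/wolves/b.jpg"):
    open(os.path.join(root, rel), "w").close()

# '*.jpg' only sees the top-level folder, '**/*.jpg' also descends into subfolders
flat = find_images(os.path.join(root, "samples", "*.jpg"))
nested = find_images(os.path.join(root, "samples", "**", "*.jpg"))
print(len(flat), len(nested))  # 1 2
```

When passing such a pattern on the command line, quoting it keeps the shell from expanding it before `prism` sees it.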

The simplest snippet of working code:

```python
import sys
sys.path.insert(0, "../")
from torchprism import PRISM
from torchvision import models
from utils import load_images, draw_input_n_prism

# load images into batch
input_batch = load_images()

# load a pretrained model and switch it to inference mode
model = models.vgg11(pretrained=True)
model.eval()

# register the hooks PRISM needs in the model
PRISM.register_hooks(model)

# run the forward pass; the classification output itself is not needed
model(input_batch)

# calculate PRISM feature maps for the processed batch
prism_maps_batch = PRISM.get_maps()

# move channels last and convert to NumPy so the batches can be drawn
drawable_input_batch = input_batch.permute(0, 2, 3, 1).detach().cpu().numpy()
drawable_prism_maps_batch = prism_maps_batch.permute(0, 2, 3, 1).detach().cpu().numpy()

draw_input_n_prism(drawable_input_batch, drawable_prism_maps_batch)
```

First we import PRISM and the torchvision models, as well as helper functions that load the input images into a simple torch batch and draw batches. Next we load the model, in this case a pretrained vgg11, and call the first PRISM method to register the required hooks in the model. With the model prepared this way we can run the classification; since the actual output is not needed, we simply ignore it. After the forward pass we call the second PRISM method to calculate the feature maps for the processed batch. Finally we convert both the input and the PRISM output into a drawable form and, as the last step, call a function that displays them using e.g. matplotlib.
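To give an intuition for how feature maps can be turned into a drawable three-channel image with Principal Component Analysis, here is a minimal sketch. It is not TorchPRISM's implementation: the function `pca_project_features`, the use of NumPy instead of torch, the centering step, and the random input are all assumptions made for illustration.

```python
import numpy as np


def pca_project_features(features, n_components=3):
    """Project (N, C, H, W) features onto the top principal components of the channels."""
    n, c, h, w = features.shape
    # Treat every spatial location of every image as one C-dimensional sample.
    flat = features.transpose(0, 2, 3, 1).reshape(-1, c)
    centered = flat - flat.mean(axis=0, keepdims=True)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    projected = centered @ vt[:n_components].T
    maps = projected.reshape(n, h, w, n_components).transpose(0, 3, 1, 2)
    return maps, singular_values


# Hypothetical stand-in for a batch of convolutional features: 2 images, 16 channels, 7x7
features = np.random.default_rng(0).normal(size=(2, 16, 7, 7))
maps, singular_values = pca_project_features(features)
print(maps.shape)  # (2, 3, 7, 7)
```

Because the projection is fitted on the whole batch at once, the same component means the same thing in every image, which is what makes the colors comparable across samples.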

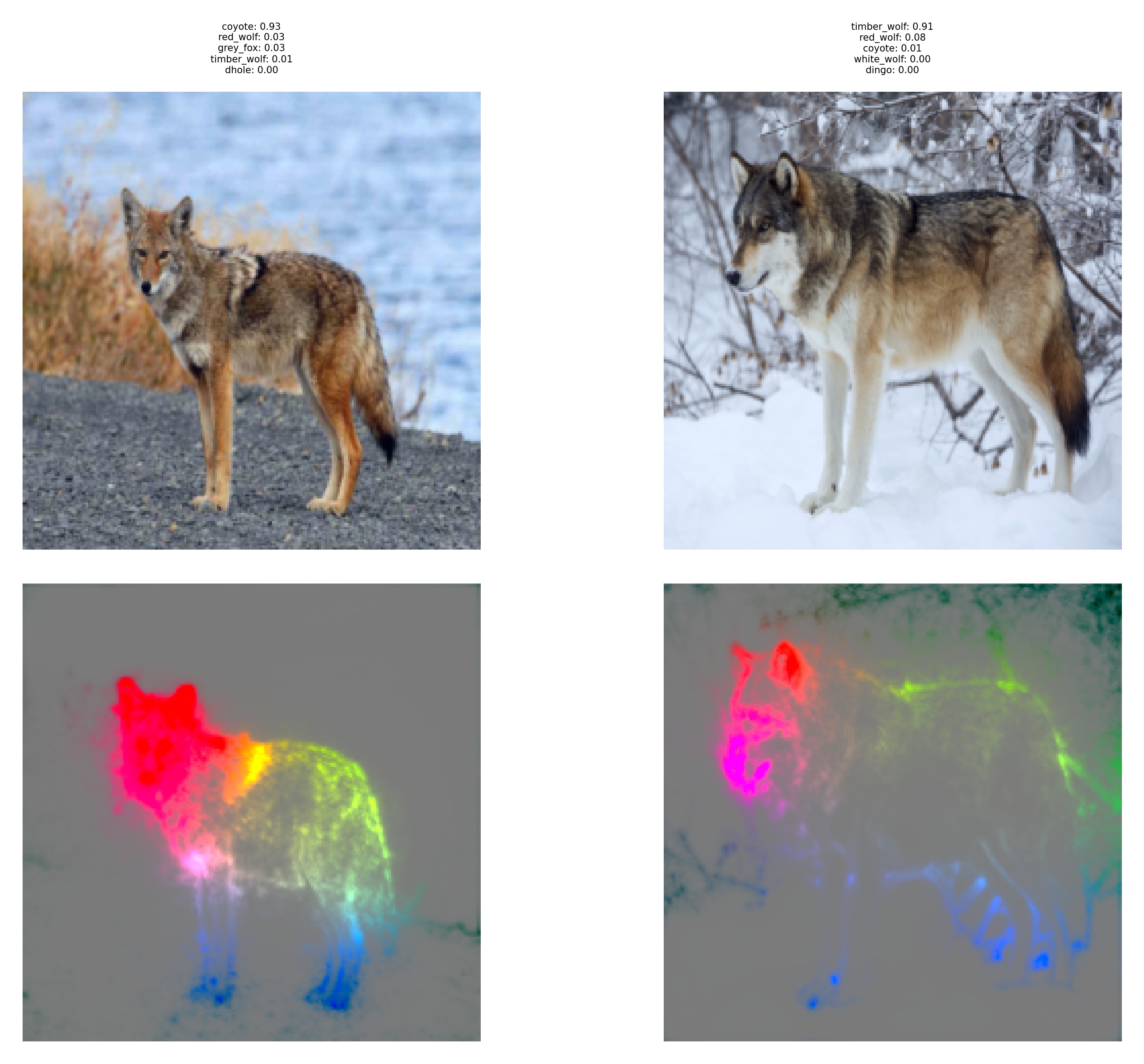

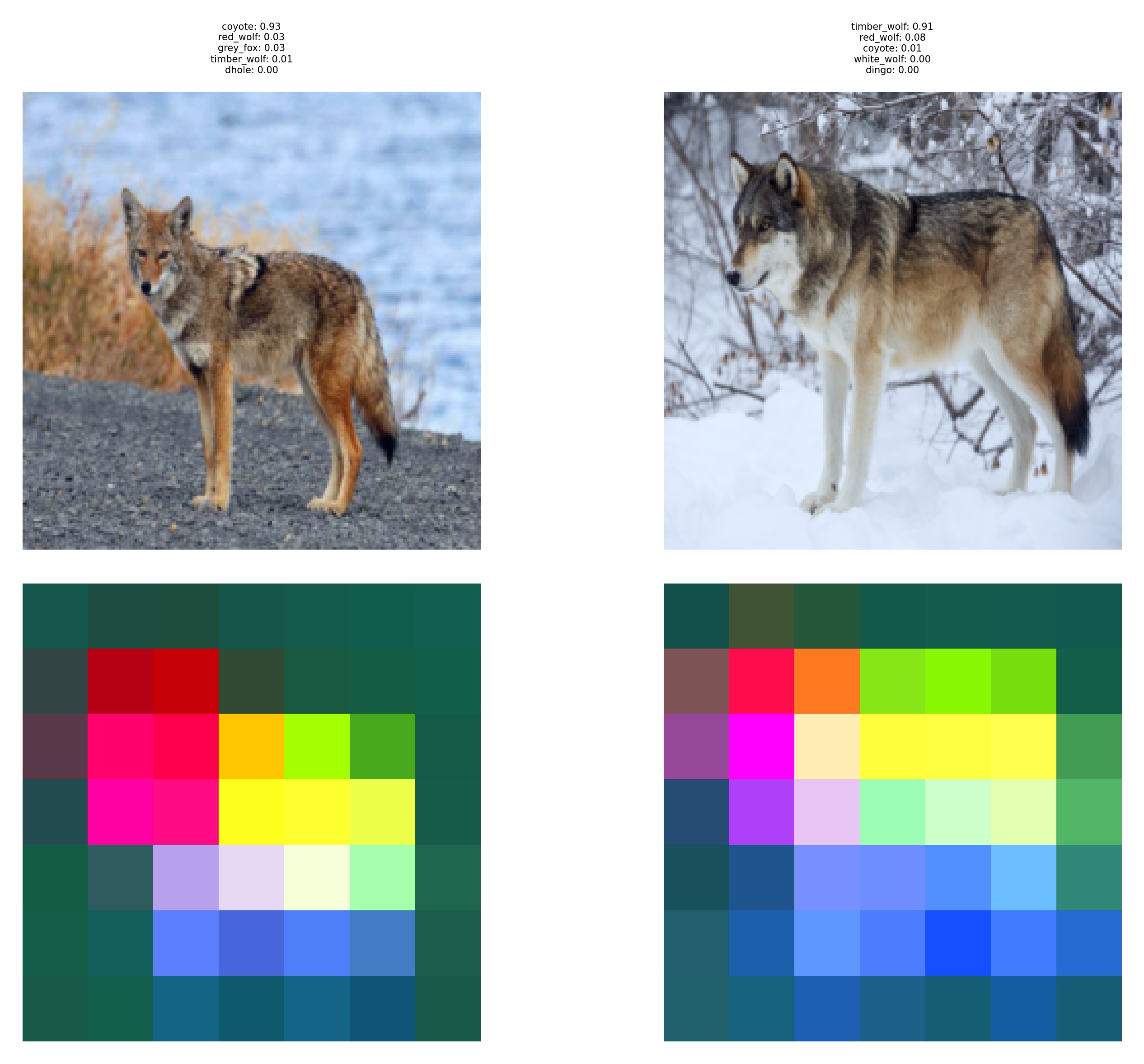

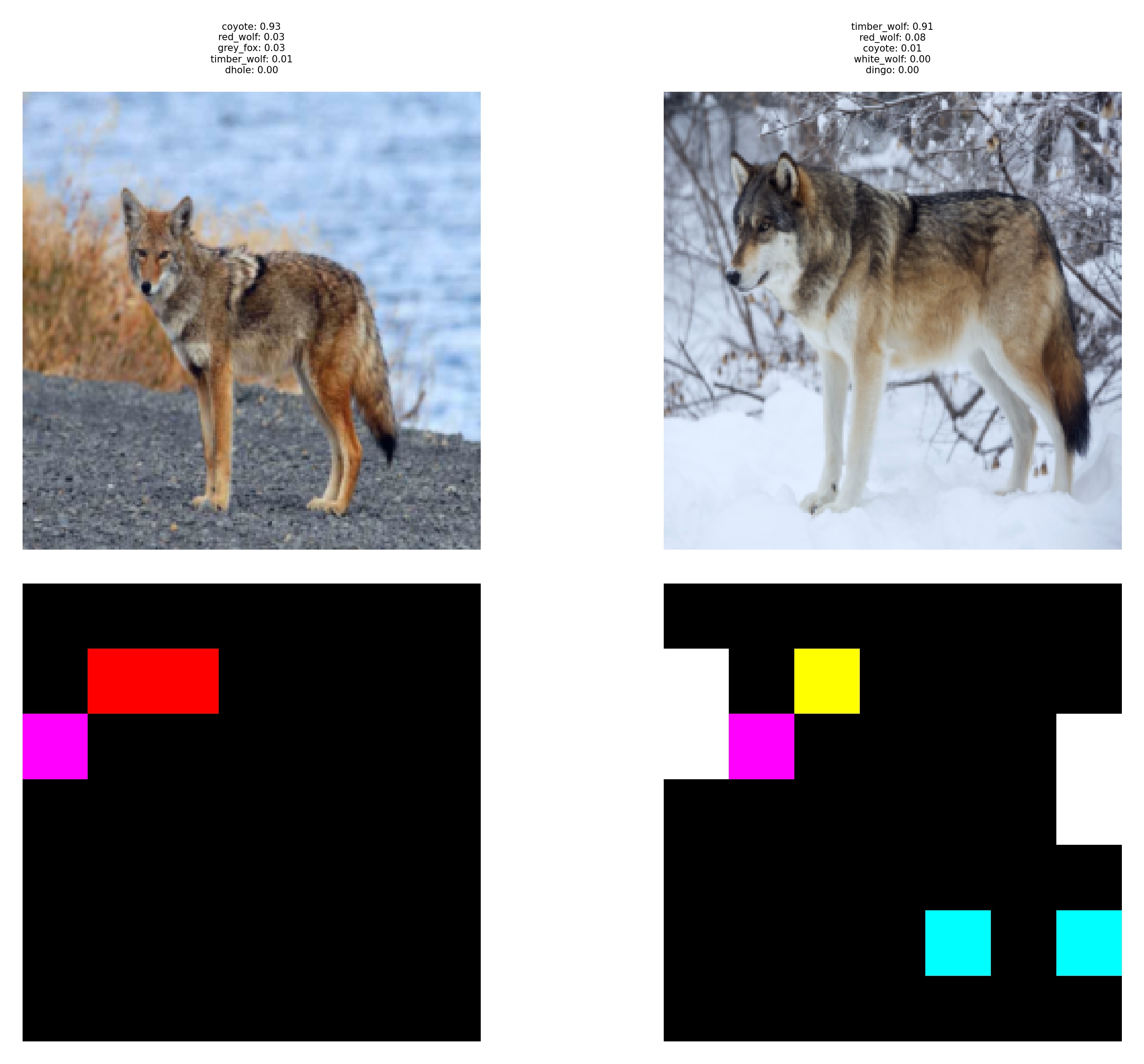

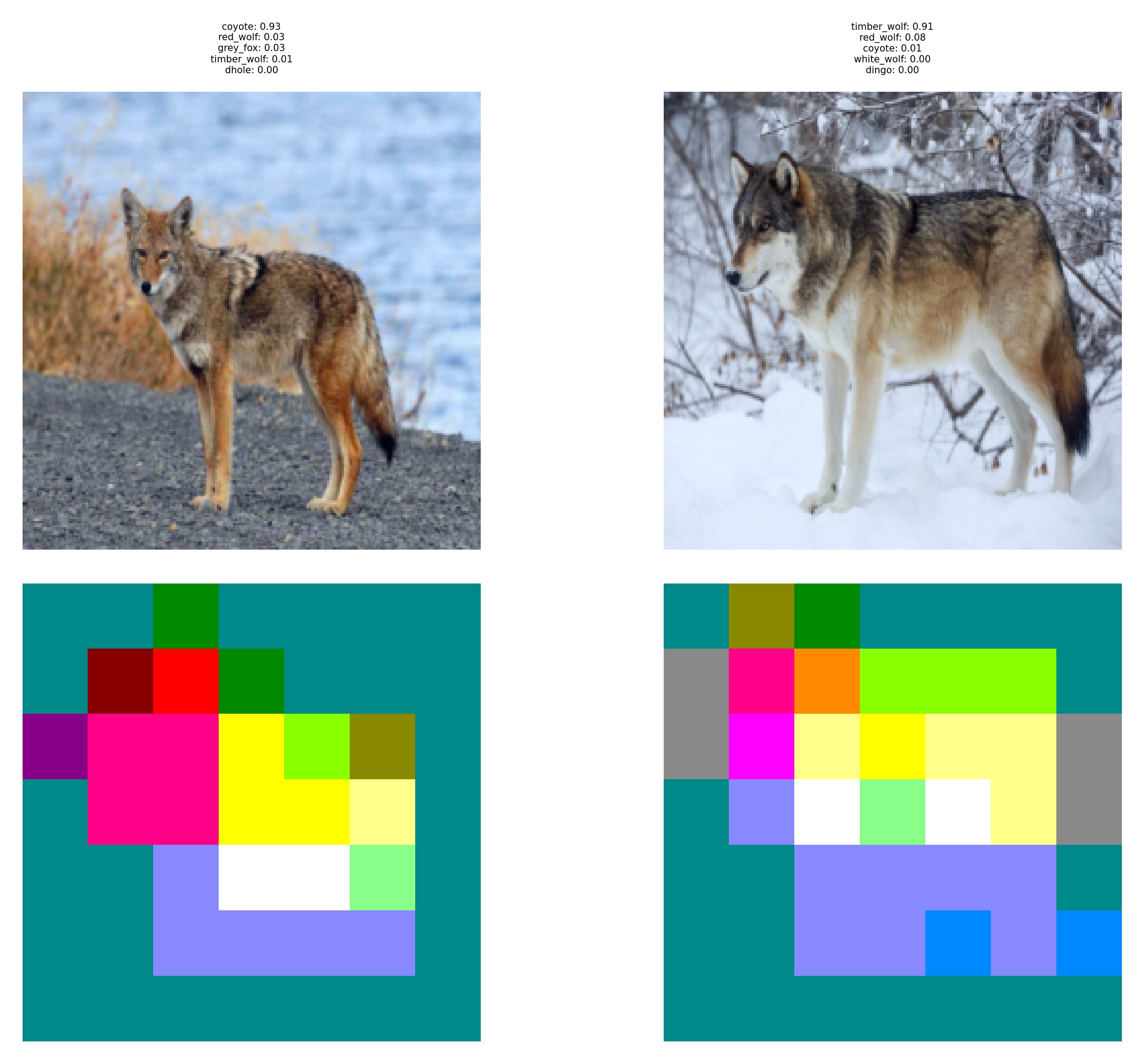

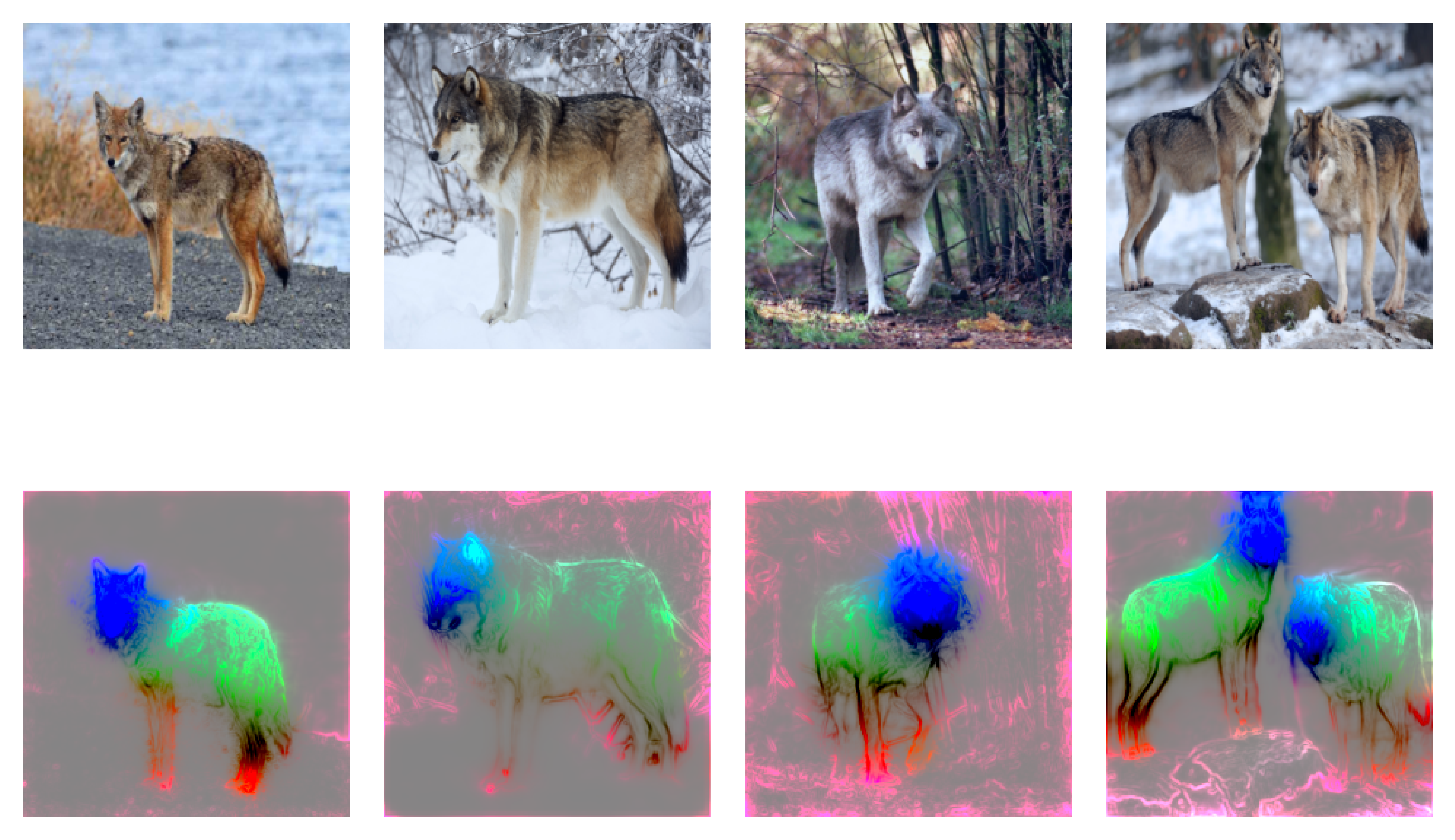

The results allow us to see the discriminative features found by the model. In the sample images we can see wolves.

We can see that all wolves share similar colors, i.e. features, found on their bodies. The coyote shows almost identical characteristics, except around the mouth: wolves have a black patch around their noses, while the coyote does not.

An image showing the variance across the principal components is also plotted.
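For reference, the share of variance captured by each principal component can be derived from the singular values of the centered data. The sketch below uses NumPy and synthetic data; the function name `explained_variance_ratio` and the sample matrix are hypothetical, and this is not necessarily how TorchPRISM produces its plot.

```python
import numpy as np


def explained_variance_ratio(samples):
    """Fraction of total variance captured by each principal component."""
    centered = samples - samples.mean(axis=0, keepdims=True)
    # Squared singular values are proportional to the variance along each component.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values ** 2
    return variances / variances.sum()


# Synthetic data: 200 samples with 8 features, the first two with a larger spread
rng = np.random.default_rng(0)
samples = rng.normal(size=(200, 8)) * np.array([5, 3, 1, 1, 1, 1, 1, 1])
ratio = explained_variance_ratio(samples)
print(ratio.round(3))
```

A steep drop after the first few values indicates that a small number of components, such as the three used for an RGB image, already describe most of the variation.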

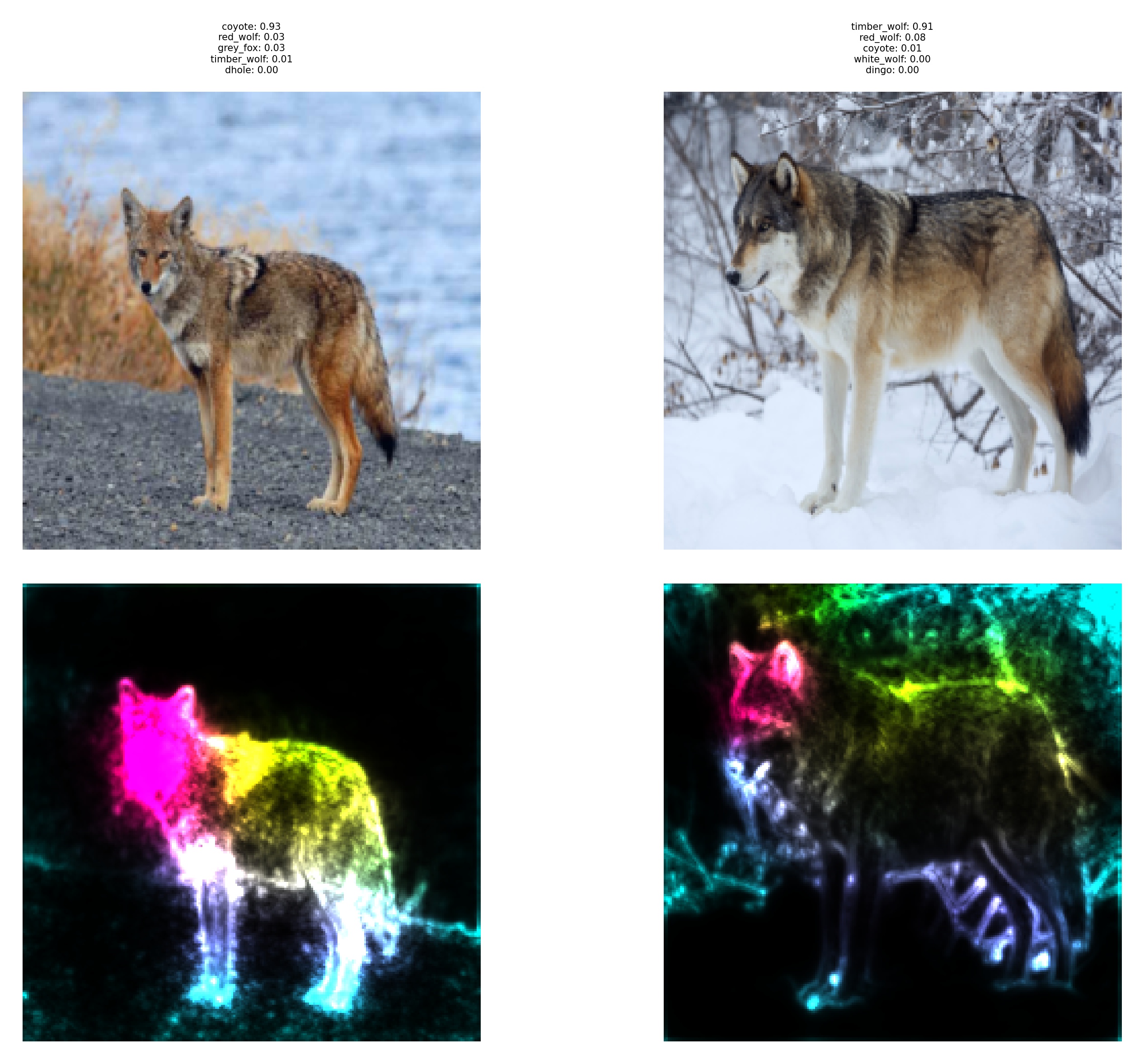

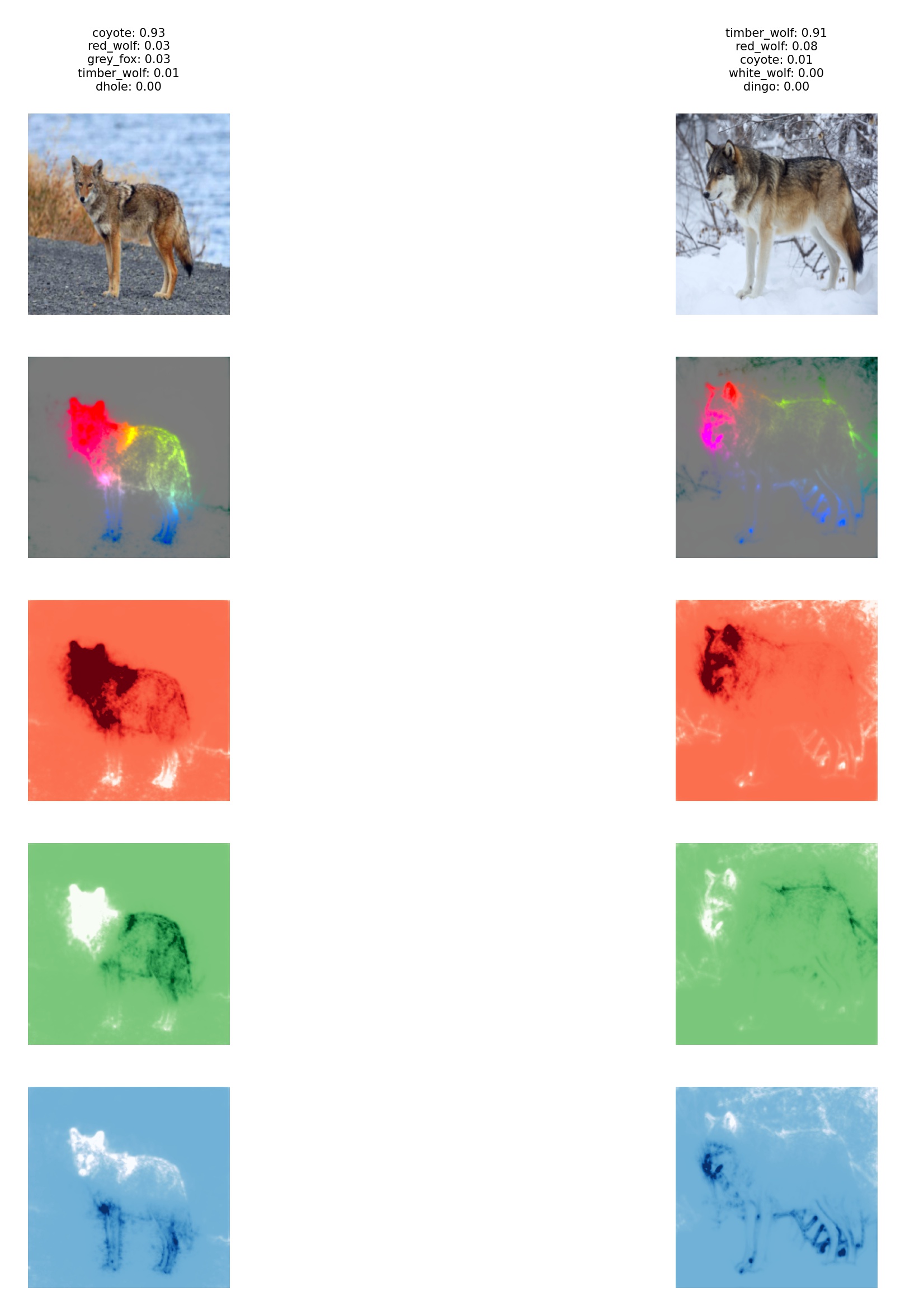

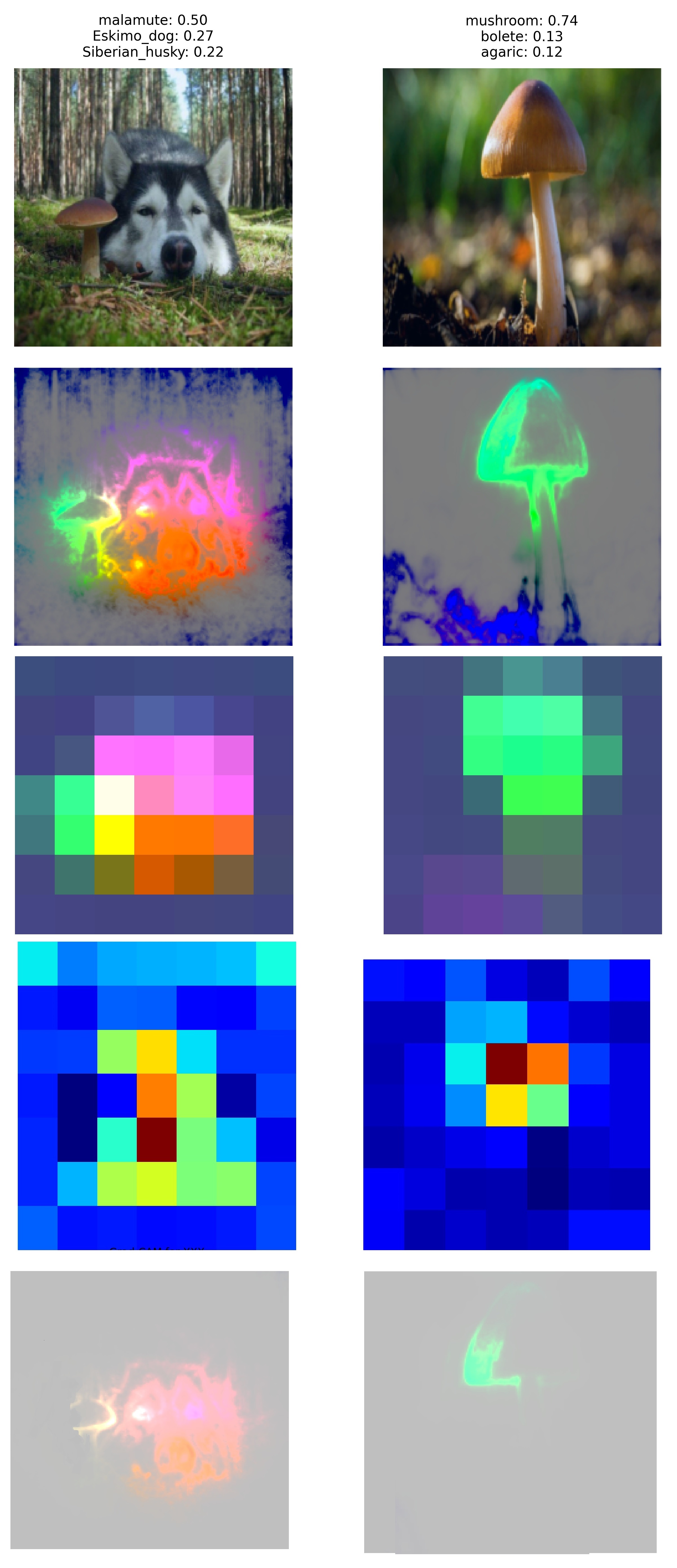

Since PRISM can be integrated with all saliency map types, it comes with built-in tools for generating them.

In this example a dog (a malamute) is correctly recognized by the VGG-16 model. It appears alongside a mushroom which, despite being correctly identified, has no impact on the model's classification decision.

Since the mushroom is not important for the classification, we can generate a saliency map for the given example and merge it with PRISM's output.
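How the two outputs are merged is not specified here. One simple option, shown purely as an assumption, is to scale each saliency map to the range [0, 1] and use it as a per-pixel weight on the PRISM map, so that regions irrelevant to the decision fade out. The function `merge_with_saliency` and the array layouts are hypothetical.

```python
import numpy as np


def merge_with_saliency(prism_maps, saliency):
    """Weight (N, H, W, 3) PRISM maps by (N, H, W) saliency maps scaled to [0, 1]."""
    lo = saliency.min(axis=(1, 2), keepdims=True)
    hi = saliency.max(axis=(1, 2), keepdims=True)
    # Guard against division by zero for constant saliency maps.
    weights = (saliency - lo) / np.maximum(hi - lo, 1e-12)
    return prism_maps * weights[..., None]


# Toy example: a uniform 2x2 PRISM map and a saliency map with increasing values
prism_maps = np.ones((1, 2, 2, 3))
saliency = np.array([[[0.0, 1.0], [2.0, 4.0]]])
merged = merge_with_saliency(prism_maps, saliency)
print(merged[0, :, :, 0])
```

With this weighting, pixels with the lowest saliency become black and pixels with the highest saliency keep their original PRISM color.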

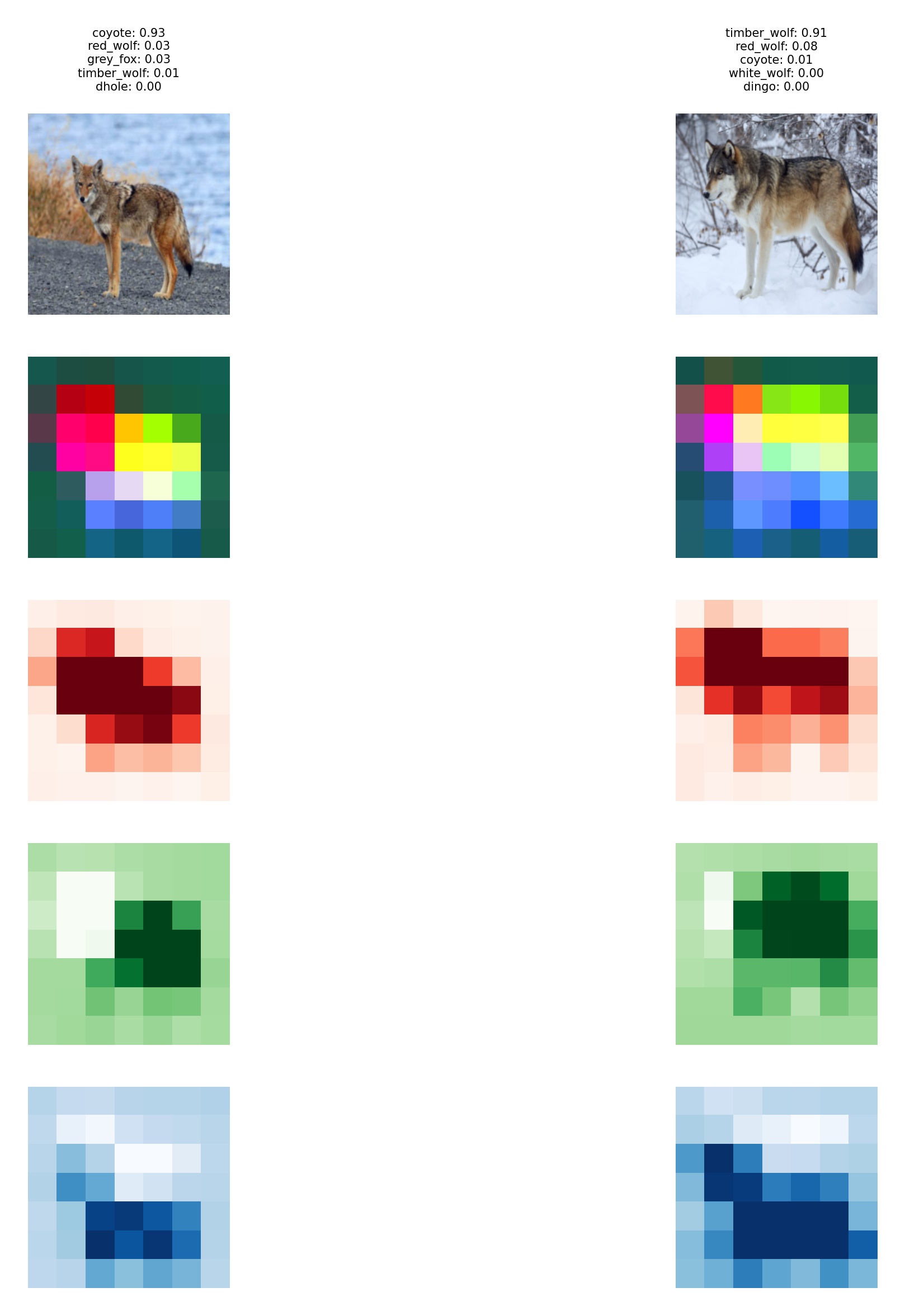

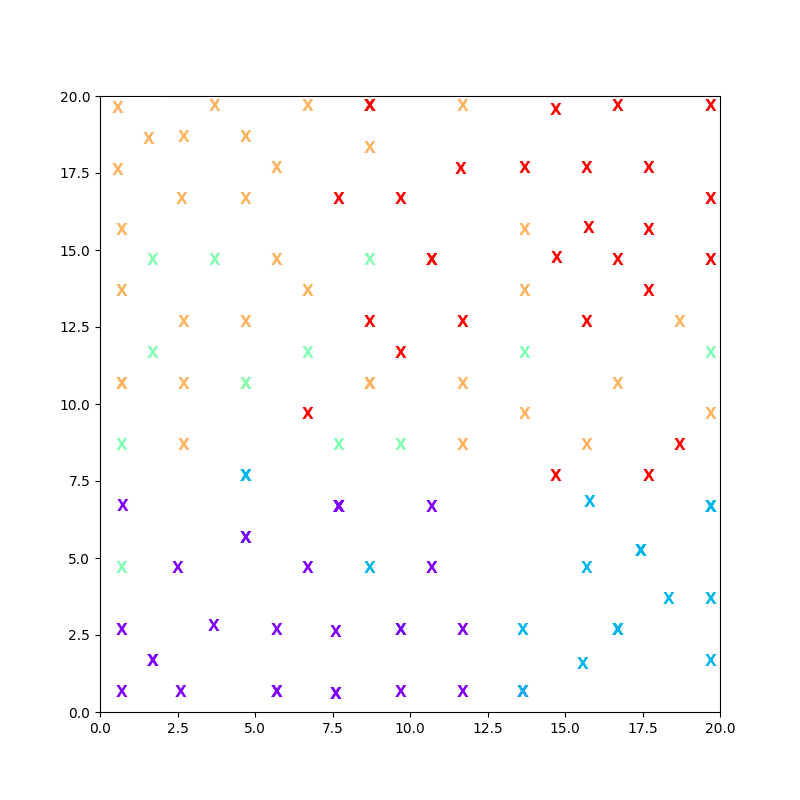

Last but not least, clustering can be performed using PRISM in order to detect potentially ambiguous classes. We took 5 canine classes (colour on the cluster map in brackets):

- coyote (orange)

- grey fox (red)

- timber wolf (green)

- samoyed (purple)

- border collie (blue)

From the figure we can conclude that coyotes (orange) could easily be confused with timber wolves (green) and grey foxes (red). On the other hand, the samoyed and border collie specimens (purple and blue respectively) are clearly distinguishable from the rest.