
Mismatch between image and corresponding LiDAR point cloud #27


Hi @nilimapaul, I checked on my side and the projection is OK. The numbers of images and LiDAR scans are not consistent; you can delete the extra images at the end. As for your calibration model, the reason might be a problem in the data or code that causes the wrong projection you showed above. Another possibility is that KITTI and our dataset have different setups and sensors, so your model can't be directly transferred to our dataset if you trained it only on KITTI. Hope this helps! Best wishes!
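A minimal sketch of pairing images with LiDAR scans by frame number, so that extra images at the end of a sequence can be spotted and dropped before projecting. It assumes (hypothetically) that the frame index is the first run of digits in each filename. `frame_index` and `pair_frames` are illustrative helpers, not part of the RELLIS-3D tools.

```python
import re
from pathlib import Path


def frame_index(path: str) -> int:
    """Return the first run of digits in the file stem as an integer.

    Assumption: this digit run is the frame number, e.g. both
    'frame000104-1581624663_149.jpg' and '000104.bin' map to 104.
    """
    match = re.search(r"\d+", Path(path).stem)
    if match is None:
        raise ValueError(f"no frame number in {path!r}")
    return int(match.group())


def pair_frames(images, clouds):
    """Match images and scans by frame index and report the leftovers."""
    img_by_idx = {frame_index(p): p for p in images}
    pcd_by_idx = {frame_index(p): p for p in clouds}
    common = sorted(img_by_idx.keys() & pcd_by_idx.keys())
    pairs = [(img_by_idx[i], pcd_by_idx[i]) for i in common]
    # Files with no partner are candidates for deletion.
    extra_images = sorted(img_by_idx[i] for i in img_by_idx.keys() - pcd_by_idx.keys())
    extra_clouds = sorted(pcd_by_idx[i] for i in pcd_by_idx.keys() - img_by_idx.keys())
    return pairs, extra_images, extra_clouds


pairs, extra_images, extra_clouds = pair_frames(
    ["frame000000.jpg", "frame000001.jpg", "frame000002.jpg"],
    ["000000.bin", "000001.bin"],
)
```

Here `frame000002.jpg` ends up in `extra_images` because no scan has that index.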


Hi @maskjp, thanks a lot for your time. For the projection I used the same code given in your GitHub repository, https://github.com/unmannedlab/RELLIS-3D/blob/main/utils/lidar2img.ipynb. I simply ran your code on my inputs to check that it works properly on my end. The projected depth image I showed above is the output of your depth-map code alone. I also understand that a model trained on the KITTI dataset will not give very good results on this dataset, but I have not got that far yet. Before using this data in my calibration model, I wanted to check the depth maps with your code, and I'm getting the issues mentioned above. Please tell me how to get rid of them. Thank you!
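For context, one way to turn projected points into a sparse depth image is sketched below. It is a hypothetical helper, not the notebook's implementation. `uv` holds pixel coordinates (N x 2) and `depth` the camera-frame depth of each point. Where several points fall into the same pixel, the nearest one is kept via `np.minimum.at`.

```python
import numpy as np


def depth_image(uv, depth, width, height):
    """Rasterize projected points into an H x W depth map (0 = no return)."""
    img = np.full((height, width), np.inf, dtype=np.float32)
    cols = np.floor(uv[:, 0]).astype(int)
    rows = np.floor(uv[:, 1]).astype(int)
    # Keep only points that land inside the image and lie in front of the camera.
    ok = (cols >= 0) & (cols < width) & (rows >= 0) & (rows < height) & (depth > 0)
    # Unbuffered minimum: when pixels collide, the closest depth wins.
    np.minimum.at(img, (rows[ok], cols[ok]), depth[ok].astype(np.float32))
    img[np.isinf(img)] = 0.0
    return img


uv = np.array([[1.2, 0.5], [1.7, 0.9], [3.0, 2.0]])
depth = np.array([5.0, 3.0, 7.0])
img = depth_image(uv, depth, width=4, height=3)
```

The first two points share pixel (row 0, column 1), so that pixel keeps the nearer depth, 3.0.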


Hi @maskjp,


Hi @nilimapaul, yes, the transformation is between the Basler camera and the Ouster LiDAR. I have added the transformation between the Ouster and Velodyne LiDARs; you can find the example here. I hope it helps!
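Given one transform from the Ouster to the Basler camera and another between the Ouster and the Velodyne, a Velodyne-to-camera transform can be obtained by chaining them. The sketch below makes two assumptions:

- Quaternions are in (w, x, y, z) order.
- `T_a_b` maps points from frame b into frame a.

The direction of each transform in the actual calibration files should be checked; if one is stored the other way round, apply `invert_transform` to it. All numeric values are placeholders, not RELLIS-3D calibration.

```python
import numpy as np


def quat_to_matrix(w, x, y, z):
    """Rotation matrix from a quaternion (w, x, y, z), normalized first."""
    n = np.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def make_transform(q, t):
    """4x4 homogeneous transform from q = (w, x, y, z) and t = (x, y, z)."""
    T = np.eye(4)
    T[:3, :3] = quat_to_matrix(*q)
    T[:3, 3] = t
    return T


def invert_transform(T):
    """Inverse of a rigid transform: [R t; 0 1]^-1 = [R^T  -R^T t; 0 1]."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


# Placeholder values only; read the real ones from the calibration files.
T_cam_ouster = make_transform((1.0, 0.0, 0.0, 0.0), (0.1, 0.0, -0.2))
T_ouster_velodyne = make_transform((0.7071068, 0.0, 0.0, 0.7071068), (0.0, 0.0, 0.3))
# Chain: Velodyne frame -> Ouster frame -> camera frame.
T_cam_velodyne = T_cam_ouster @ T_ouster_velodyne
```

With these placeholders, the Velodyne origin lands at (0.1, 0.0, 0.1) in the camera frame.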

I am working on deep-learning-based extrinsic calibration for LiDAR-camera fusion and am facing the following issues:

The problem appears when I use data from a random sequence (00000,...,00004) of the RELLIS-3D dataset (image, .bin file, transform.yaml, camera_info.txt), downloaded from https://drive.google.com/drive/folders/1aZ1tJ3YYcWuL3oWKnrTIC5gq46zx1bMc

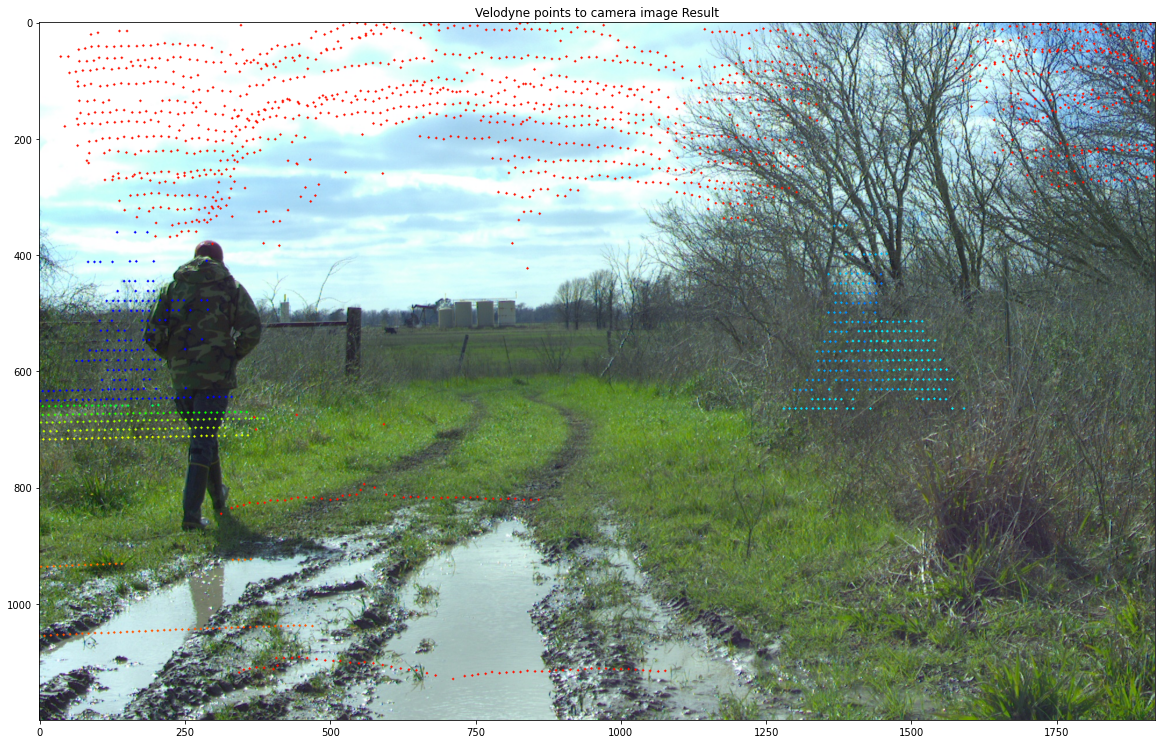

The output is not as expected: the LiDAR data and the image do not seem to come from the same pair. There is not even minimal alignment between the image and the LiDAR data; the LiDAR points are spread randomly over the image. This happens even though I used the image and .bin file with the same sequence number (e.g. xxxx104.jpg and xxx104.bin), and the camera_info.txt and transform.yaml from the same sequence. The output is shown below:

Another example:

Please let me know what could cause such unexpected output. I understand there will be some miscalibration, but these results are far worse than that.
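For reference, the basic LiDAR-to-image projection can be sketched as below. This is not the lidar2img.ipynb code. It makes four assumptions:

- Scans are KITTI-style float32 (x, y, z, intensity) .bin files.
- `T_cam_lidar` is a 4x4 LiDAR-to-camera transform built from transform.yaml.
- `K` is a 3x3 pinhole intrinsic matrix taken from camera_info.txt.
- Lens distortion can be ignored.

Comparing its output with the notebook's on the same frame pair is one way to narrow down whether the problem lies in the data or in the code.

```python
import numpy as np


def load_bin(path):
    """Read a .bin scan; assumes KITTI-style float32 (x, y, z, intensity)."""
    return np.fromfile(path, dtype=np.float32).reshape(-1, 4)


def project_points(points, T_cam_lidar, K, width, height):
    """Project N x 4 LiDAR points into the image; distortion is ignored."""
    xyz1 = np.hstack([points[:, :3], np.ones((len(points), 1))])  # N x 4 homogeneous
    cam = (T_cam_lidar @ xyz1.T)[:3]          # 3 x N points in the camera frame
    cam = cam[:, cam[2] > 0]                   # keep points in front of the camera
    uvw = K @ cam                              # pinhole projection
    u = uvw[0] / uvw[2]
    v = uvw[1] / uvw[2]
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return np.stack([u[inside], v[inside]], axis=1), cam[2, inside]
```

The function returns pixel coordinates and the matching camera-frame depths, which can then be rasterized into a depth image.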

I evaluated my deep-learning extrinsic calibration model (trained on the KITTI dataset) on a small subset of the RELLIS-3D dataset and got huge errors: more than 1 m of translation error and over 20° of rotation error. This was even with the 5th iterative-refinement model, which gives quite good results on KITTI.
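The error figures above depend on how they are measured. One common definition, assumed here, uses two quantities:

- Translation error: the Euclidean distance between the predicted and ground-truth translations.
- Rotation error: the geodesic angle of the relative rotation `R_gt^T R_pred`.

The model's own evaluation code may use a different convention, such as per-axis Euler angles. `calib_errors` is a hypothetical helper.

```python
import numpy as np


def calib_errors(T_pred, T_gt):
    """Translation error (same units as T) and rotation error (degrees)."""
    t_err = float(np.linalg.norm(T_pred[:3, 3] - T_gt[:3, 3]))
    R_delta = T_gt[:3, :3].T @ T_pred[:3, :3]
    # Angle of the relative rotation; clip guards against rounding outside [-1, 1].
    cos_angle = np.clip((np.trace(R_delta) - 1.0) / 2.0, -1.0, 1.0)
    r_err = float(np.degrees(np.arccos(cos_angle)))
    return t_err, r_err


th = np.radians(20.0)
T_pred = np.eye(4)
T_pred[:3, :3] = [[np.cos(th), -np.sin(th), 0.0], [np.sin(th), np.cos(th), 0.0], [0.0, 0.0, 1.0]]
T_pred[:3, 3] = [1.0, 0.0, 0.0]
t_err, r_err = calib_errors(T_pred, np.eye(4))
```

A prediction rotated 20° about z and offset 1 m along x gives errors of 1.0 and 20.0.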

I wonder whether the problem is related to the depth-image issues described above. If there is an issue with the dataset provided on the website, please share an updated version.

Thanks in advance.
