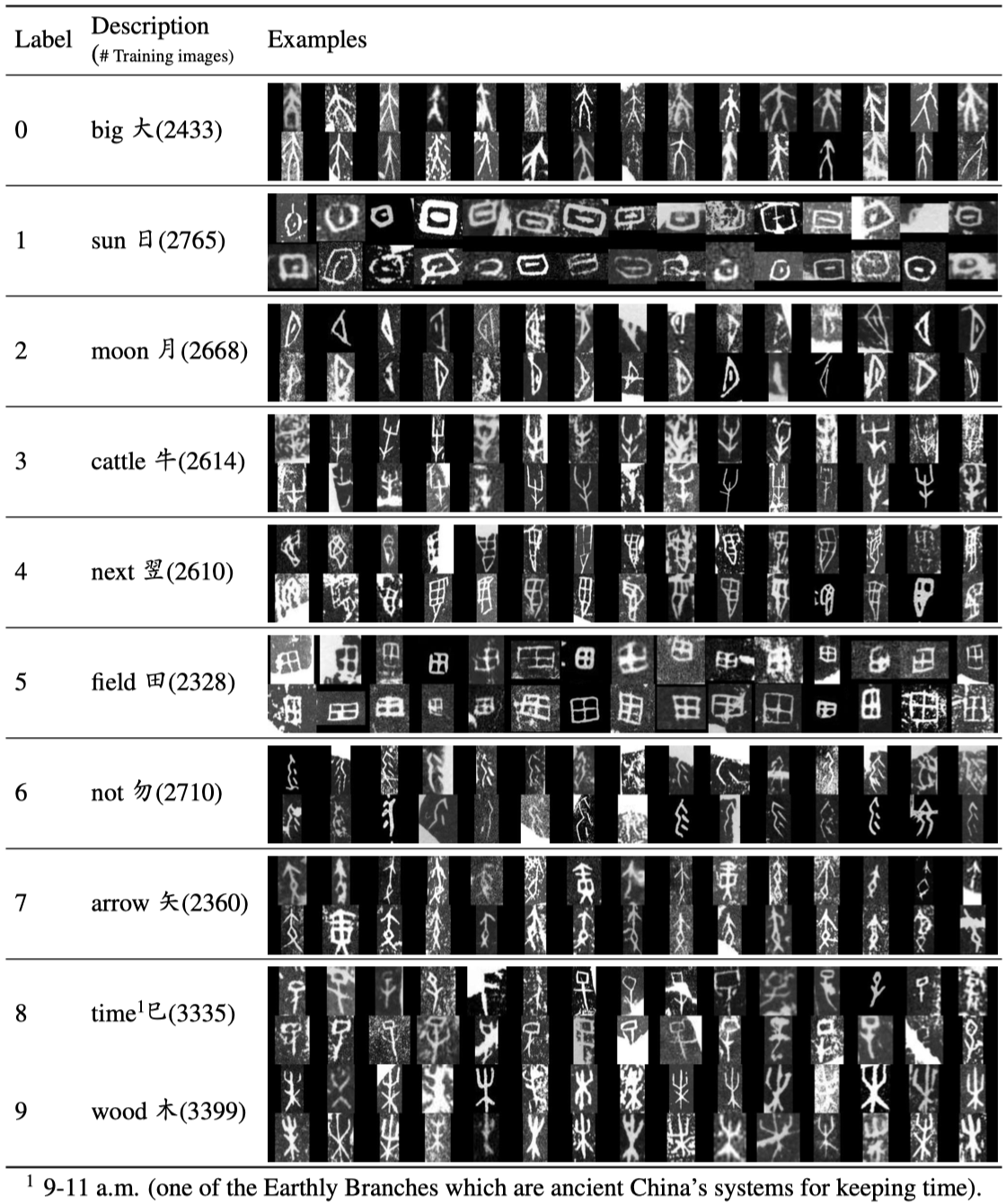

Oracle-MNIST dataset comprises of 28×28 grayscale images of 30,222 ancient characters from 10 categories, for benchmarking pattern classification, with particular challenges on image noise and distortion. The training set totally consists of 27,222 images, and the test set contains 300 images per class.

1. Easy-of-use. Oracle-MNIST shares the same data format with the original MNIST dataset, allowing for direct compatibility with all existing classifiers and systems.

2. Real-world challenge. Oracle-MNIST constitutes a more challenging classification task than MNIST. The images of oracle characters suffer from 1) extremely serious and unique noises caused by three- thousand years of burial and aging and 2) dramatically variant writing styles by ancient Chinese, which all make them realistic for machine learning research.

Oracle characters are the oldest hieroglyphs in China. Here's an example of how the data looks (each class takes two-rows):

You can directly download the dataset from Google drive or Baidu drive (code: 5pq5). The data is stored in the same format as the original MNIST data. The result files are listed in following table.

| Name | Content | Examples | Size |

|---|---|---|---|

train-images-idx3-ubyte.gz |

training set images | 27,222 | 12.4 MBytes |

train-labels-idx1-ubyte.gz |

training set labels | 27,222 | 13.7 KBytes |

t10k-images-idx3-ubyte.gz |

test set images | 3,000 | 1.4 MBytes |

t10k-labels-idx1-ubyte.gz |

test set labels | 3,000 | 1.6 KBytes |

Alternatively, you can clone this GitHub repository; the dataset appears under data/oracle. This repo also contains some scripts for benchmark.

Note: All of the scanned images in Oracle-MNIST are preprocessed by the following conversion pipeline. We also make the original images available and left the data processing job to the algorithm developers. You can download the original images from Google drive or Baidu drive (code: 7aem).

Loading data with Python (requires NumPy)

Use src/mnist_reader in this repo:

import mnist_reader

x_train, y_train = mnist_reader.load_data('data/oracle', kind='train')

x_test, y_test = mnist_reader.load_data('data/oracle', kind='t10k')Make sure you have downloaded the data and placed it in data/oracle. Otherwise, Tensorflow will download and use the original MNIST.

from tensorflow.examples.tutorials.mnist import input_data

data = input_data.read_data_sets('data/oracle')

data.train.next_batch(BATCH_SIZE)Note:This official packages tensorflow.examples.tutorials.mnist.input_data would split training data into two subset: 22,222 samples are used for training, and 5,000 samples are left for validation. You can instead use src/mnist_reader_tf in this repo to load data. The number of validation data can be arbitrarily changed by varying the value of valid_num:

import mnist_reader_tf as mnist_reader

data = mnist_reader.read_data_sets('data/oracle', one_hot=True, valid_num=0)

data.train.next_batch(BATCH_SIZE)You can reproduce the results of CNN by running src/train_pytorch.py or src/train_tensorflow_keras.py, and reproduce the results of other machine learning algorithms by running benchmark/runner.py provided by Fashion-MNIST.

CNN (pytorch):

python train_pytorch.py --lr 0.1 --epochs 15 --net Net1 --data-dir ../data/oracle/CNN (tensorflow+keras):

python train_tensorflow_keras.py --lr 0.1 --epochs 15 --data-dir ../data/oracle/If you use Oracle-MNIST in a scientific publication, we would appreciate references to the following paper:

A dataset of oracle characters for benchmarking machine learning algorithms. Mei Wang, Weihong Deng. Scientific Data

Biblatex entry:

@article{wang2024dataset,

title={A dataset of oracle characters for benchmarking machine learning algorithms},

author={Wang, Mei and Deng, Weihong},

journal={Scientific Data},

volume={11},

number={1},

pages={87},

year={2024},

publisher={Nature Publishing Group UK London}

}