The Sign-to-Text Translation Tool bridges the communication gap by translating hand signs into readable text. It assists mute individuals by converting hand gestures into letters of the alphabet (A-Z); the project reports a final accuracy of 99%.

This project is divided into four main components for modularity and clarity:

- Data Preparation (`data.pickle`): Handles structured storage of dataset information.
- Image Collection (`images_collection.py`): Facilitates systematic data collection for training models.
- Training Classifier (`train_classifier.py`): Processes data, trains a RandomForest model, and evaluates its performance.
- Inference Classifier (`inference_classifier.py`): Performs real-time hand gesture recognition.

- File Structure: A `data.pickle` file stores a dictionary with:
  - `data`: The primary dataset for training.
  - `labels`: Classification labels.
- Purpose: Acts as a compact representation of the dataset and its attributes for seamless processing.
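As a rough illustration of that layout, the sketch below writes and reads back a dictionary with `data` and `labels` keys. The sample values are made up, and the file goes to a temporary directory so that a real `data.pickle` is never overwritten; the project's actual feature vectors may look different.

```python
import os
import pickle
import tempfile

# Hypothetical toy dataset: each sample is a flat list of landmark features,
# each label is the letter that sample represents.
dataset = {
    "data": [[0.0, 0.1, 0.2, 0.3], [0.5, 0.4, 0.3, 0.2]],
    "labels": ["A", "B"],
}

# Use a temporary folder so this sketch never touches a real data.pickle.
path = os.path.join(tempfile.mkdtemp(), "data.pickle")
with open(path, "wb") as f:
    pickle.dump(dataset, f)

# Reading the file back returns the same dictionary.
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(sorted(loaded.keys()))
print(len(loaded["data"]), "samples,", len(loaded["labels"]), "labels")
```

Only unpickle files you created yourself, since `pickle.load` can execute arbitrary code from an untrusted file.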

- Purpose: Captures image datasets for recognizing alphabets (A-Z).
- Key Features:
  - Creates a `data` folder with subfolders for each alphabet (A-Z).
  - Captures 100 images per alphabet using a webcam (configurable).
  - Prompts user interaction to start/stop data collection.
- Dependencies: Requires the OpenCV and `os` libraries.
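The collection flow described above might look roughly like the following. This is a sketch, not the contents of `images_collection.py`: the function names, the "s" key used to start recording, the window title, and the `0.jpg`, `1.jpg`, ... file names are all assumptions made for illustration.

```python
import os
import string


def make_class_dirs(root="data"):
    """Create one subfolder per letter (A-Z) under root and return the paths."""
    paths = []
    for letter in string.ascii_uppercase:
        path = os.path.join(root, letter)
        os.makedirs(path, exist_ok=True)
        paths.append(path)
    return paths


def collect_images(root="data", images_per_class=100, camera_index=0):
    """Capture images_per_class webcam frames into each letter folder."""
    import cv2  # imported lazily so make_class_dirs works without OpenCV

    cap = cv2.VideoCapture(camera_index)
    try:
        for class_dir in make_class_dirs(root):
            # Show a live preview until the user presses "s" (assumed key).
            while True:
                ok, frame = cap.read()
                if not ok:
                    raise RuntimeError("Could not read a frame from the webcam")
                cv2.imshow("collect", frame)
                if cv2.waitKey(25) & 0xFF == ord("s"):
                    break
            # Record the requested number of frames for this letter.
            for i in range(images_per_class):
                ok, frame = cap.read()
                if not ok:
                    raise RuntimeError("Could not read a frame from the webcam")
                cv2.imshow("collect", frame)
                cv2.waitKey(25)
                cv2.imwrite(os.path.join(class_dir, f"{i}.jpg"), frame)
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Calling `collect_images(images_per_class=50)` would record 50 frames per letter instead of the default 100; nothing is captured until the function is called.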

- Workflow:
  - Preprocesses data by extracting and normalizing hand landmarks.
  - Trains a RandomForestClassifier using the processed data.
  - Saves the trained model as `model.pickle`.
- Key Requirements:
  - Python libraries: OpenCV, Mediapipe, Scikit-learn, Pickle.
  - Clear, labeled gesture images.
  - A functional webcam for testing.
- Steps to Run:
  - Place the dataset in the `./data` folder.
  - Execute the script and select:
    - Option 1: Preprocess Data
    - Option 2: Train Classifier
    - Option 3: Real-Time Detection
  - Press `Esc` to exit detection.
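The normalize-train-save workflow can be sketched as below. Several things here are assumptions rather than the project's confirmed method: normalization is done by subtracting the smallest x and y from every landmark, the landmarks are synthetic stand-ins generated with NumPy instead of Mediapipe output, and the 80/20 split is arbitrary. The model is written to a temporary folder so an existing `model.pickle` is left alone.

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def normalize_landmarks(points):
    """Shift (x, y) landmarks so the smallest x and y become 0, then flatten."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    features = []
    for x, y in points:
        features.extend([x - min_x, y - min_y])
    return features


# Synthetic data: "A" hands are spread along x, "B" hands along y,
# with 21 (x, y) landmarks per sample.
rng = np.random.default_rng(0)
samples, labels = [], []
for label, scale in (("A", (0.10, 0.01)), ("B", (0.01, 0.10))):
    for _ in range(50):
        points = rng.normal(loc=0.5, scale=scale, size=(21, 2))
        samples.append(normalize_landmarks(points))
        labels.append(label)

x_train, x_test, y_train, y_test = train_test_split(
    np.asarray(samples), np.asarray(labels),
    test_size=0.2, stratify=labels, random_state=0,
)

model = RandomForestClassifier(random_state=0)
model.fit(x_train, y_train)
accuracy = accuracy_score(y_test, model.predict(x_test))
print(f"held-out accuracy: {accuracy:.2f}")

# Save to a temporary folder rather than the project's real model.pickle.
model_path = os.path.join(tempfile.mkdtemp(), "model.pickle")
with open(model_path, "wb") as f:
    pickle.dump(model, f)
```

Subtracting the minimum makes the features independent of where the hand sits in the frame, which is why the two synthetic classes differ in shape rather than position.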

- Purpose:

Performs real-time hand gesture recognition using the trained model. - Key Features:

- Model Loading: Loads

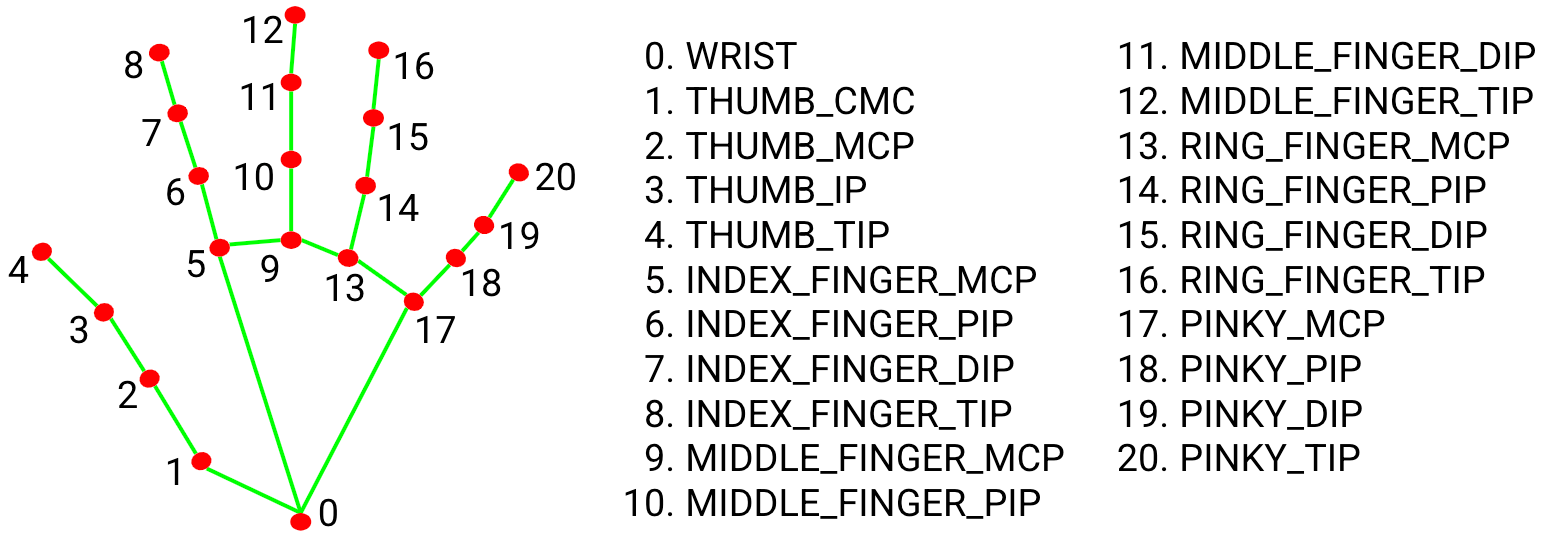

model.picklefor predictions. - Hand Tracking: Uses Mediapipe to detect 21 hand-knuckle landmarks.

- Prediction: Classifies gestures into alphabets (A-Z).

- Visualization: Displays results with bounding boxes and predictions.

- Model Loading: Loads

- Dependencies:

- Libraries: OpenCV, Mediapipe, Numpy, Pickle.

- Hardware: Webcam for real-time input.

- Usage:

Run the script and interact using the "q" key to quit.
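For the visualization step, Mediapipe reports each landmark's `x` and `y` as fractions of the frame width and height, so they need scaling to pixels before a box can be drawn. The helpers below are a hypothetical sketch of that conversion and of mapping an integer class index to a letter; the names, the 10-pixel padding, and the assumption that labels may be integers 0-25 are illustrative, not taken from `inference_classifier.py`.

```python
def bounding_box(xs, ys, width, height, pad=10):
    """Convert normalized landmark coordinates (0-1) to a padded pixel box."""
    x1 = max(int(min(xs) * width) - pad, 0)
    y1 = max(int(min(ys) * height) - pad, 0)
    x2 = min(int(max(xs) * width) + pad, width - 1)
    y2 = min(int(max(ys) * height) + pad, height - 1)
    return x1, y1, x2, y2


def index_to_letter(index):
    """Map a class index 0-25 to a letter A-Z (only needed for integer labels)."""
    if not 0 <= index <= 25:
        raise ValueError("index must be in the range 0..25")
    return chr(ord("A") + index)


# Three made-up landmarks on a 640x480 frame.
xs = [0.25, 0.5, 0.75]
ys = [0.5, 0.625, 0.75]
box = bounding_box(xs, ys, width=640, height=480)
print(box, index_to_letter(2))  # (150, 230, 490, 370) C
```

The resulting corners can be passed to `cv2.rectangle`, and the letter to `cv2.putText`, to overlay the prediction on each frame.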

- Managing and integrating multiple components of the project.

- Ensuring precise hand landmark detection and consistent datasets.

- Overcoming inaccuracies to achieve a final accuracy of 99%.

- Expand support to recognize complete words and phrases.

- Improve processing speed and accuracy.

- Extend functionality to integrate with robotic systems for automated communication.

- OpenCV: A library for computer vision and image processing.
- Mediapipe: Framework for building ML-based pipelines for hand tracking.
- Hand Landmark Model: Detects 21 key points for precise gesture recognition.

- Face Detection, Face Mesh, OpenPose, Holistic, Hand Detection Using Mediapipe

- Introduction to OpenCV

Sign-to-Text/

│

├── data/ # Dataset for training

├── models/ # Saved trained models (e.g., model.pickle)

├── images_collection.py # Script for collecting image datasets

├── train_classifier.py # Script for training the model

├── inference_classifier.py # Real-time recognition script

├── data.pickle # Preprocessed dataset

└── README.md # Project documentation